Decoding AI: Part 4, The age of multimodal AI

Welcome to Part 4 of our Decoding AI: A government perspective learning series! In our previous dialogue, we unraveled the complexities of semantic search and generative AI and how they are transforming public governance. In this module, let’s shift gears and focus on the dawn of a new AI era: multimodal sensing. This advancement merges multiple kinds of sensory data (think vision, sound, and text) into a single, unified data set. - Siddhartha Chaturvedi, Miri Rodriguez

Making sense of different senses

In our day-to-day lives, we seldom rely on just one sense to make decisions or understand our environment. Imagine walking into a café; you use your eyes to assess the ambience, ears to catch the vibe through music, and nose to appreciate the aroma of freshly brewed coffee. Similarly, multimodal sensing in AI attempts to capture and process a richer, more nuanced view of the world by combining different types of data.

Multimodal sensing: How different types of data can create a richer and more nuanced view of the world. This image shows how AI can integrate various types of data, such as images, text, and sound, to understand the world better. – Generated by Bing Chat

Use cases beyond text

From agriculture to environmental monitoring, let’s look at how this new paradigm, which brings human-like senses to machines, opens up interesting use cases for government.

Seamless impact: When technology meets human needs

When cutting-edge computing meets everyday life, we encounter technologies that are not just academic experiments; they influence our world in concrete ways. The following examples are meant to inspire you to find patterns and practices that you can borrow and apply to your mission, your agency, and the work that you do. And when you do, share it with us, so that we can learn with you.

- Global environmental stewardship & policy impact: The Microsoft Planetary Computer does more than marvel at data; it enables informed decisions on climate change, conservation, and community initiatives by connecting geospatial imagery with text-based research papers, while large language models (LLMs) interpret context much as they generate the descriptions for the images in this blog. This platform isn’t just for scientists but for every citizen concerned about the Earth’s future. And with tools like ArcGIS for Microsoft Planetary Computer, you can analyze data at planetary scale today, and perhaps build a solution that connects your industry-specific dataset to shed new light on land cover, ecosystem monitoring, conservation planning, and more.

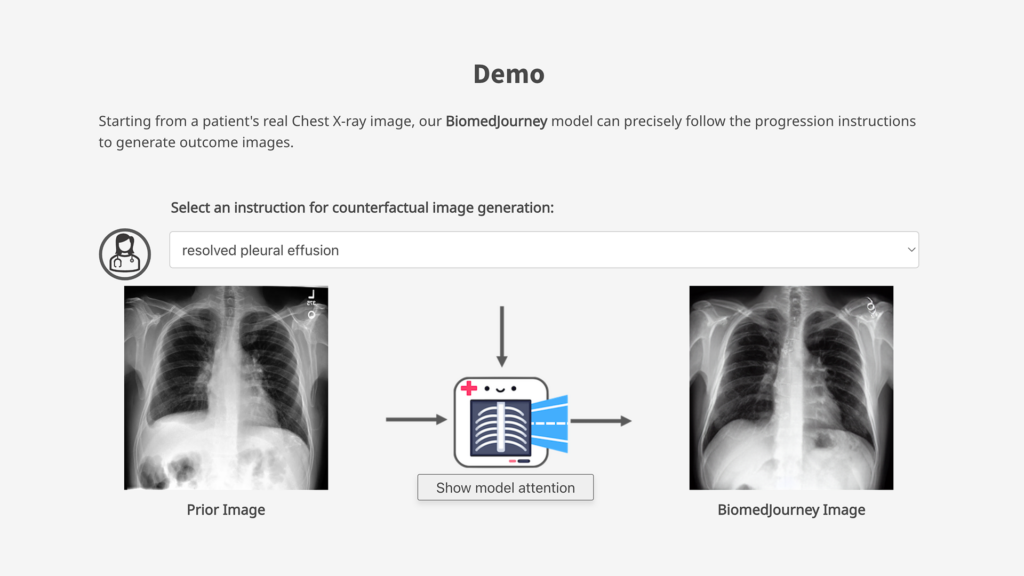

- Enhance patient and clinician outcomes: At HLTH 2023, Microsoft revealed a healthcare integration platform that combines different types of data into a common structure and interface, across electronic health records (EHRs), Picture Archiving and Communication Systems (PACS), lab systems, claims systems, and medical devices. This reflects a trend we can observe across industries: using multiple “senses” to increase insight and gain more context than ever before. Going further, working with collaborators at the University of California, San Francisco and Stanford University, Microsoft Research has introduced BiomedJourney, a method that uses generative AI to model what a future medical image might look like along a patient’s biomedical journey.

The unseen hero: Data engineering

Multimodal sensing can be likened to a symphony, where various instruments come together to produce harmonious music. But orchestrating this requires a capable conductor—enter data engineering. It creates a robust system that makes the complex task of gathering and interpreting multi-faceted data feasible. It’s not just about software or hardware; it’s about the systemic integration of these elements.
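To make the conductor analogy a little more concrete, here is a minimal Python sketch of one small job that data engineering does for multimodal systems: putting readings from different sources into a shared record shape and grouping them by time window so they can be analyzed together. The `Observation` class, the modality names, the sample file paths, and the 60-second window are all illustrative assumptions, not part of any Azure service or of the platforms described above.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Observation:
    """One reading from a single modality (hypothetical schema)."""
    modality: str      # e.g. "image", "audio", "text"
    timestamp: float   # seconds since a shared reference time
    payload: str       # reference to the raw data, e.g. a file path


def group_by_window(observations, window_seconds=60.0):
    """Bucket observations into fixed time windows so that readings
    from different modalities can be analyzed together."""
    windows = defaultdict(dict)
    for obs in sorted(observations, key=lambda o: o.timestamp):
        window_index = int(obs.timestamp // window_seconds)
        # Keep the latest reading per modality within each window.
        windows[window_index][obs.modality] = obs
    return dict(windows)


def complete_windows(windows, required):
    """Return the window indices that contain every required modality."""
    return [idx for idx, found in sorted(windows.items())
            if set(required) <= set(found)]


# Made-up sample readings, for illustration only.
readings = [
    Observation("image", 5.0, "field_cam/0001.png"),
    Observation("text", 12.0, "inspector note: soil looks dry"),
    Observation("audio", 30.0, "field_mic/0001.wav"),
    Observation("image", 70.0, "field_cam/0002.png"),
]
windows = group_by_window(readings)
print(complete_windows(windows, ["image", "audio", "text"]))
```

In this sample, only the first window holds an image, an audio clip, and a text note together, so it is the only one a downstream multimodal model could use without filling gaps. A production pipeline would also need to handle clock drift, late-arriving data, and missing modalities.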

Multimodal AI: How to leverage multiple types of data to create value for various industries. This image shows how multimodal AI can use different types of data, such as images, text, and sound, to create value for various industries, such as agriculture, environmental monitoring, and public governance. The image implies that multimodal AI can help solve complex problems, improve efficiency, and enhance decision making in these domains. – Generated by Bing Chat

Next steps: Try it yourself

The road to mastery is through doing. Here are some actionable steps for you:

- Build a data pipeline: Data is your raw material. You can begin by constructing your pipeline using Azure Data Factory. Read more: Azure Data Engineering

- Enable sensory capabilities: Tools like Azure Cognitive Services can help you add these “senses” to your AI models. Read more: Azure Cognitive Services

- Assessment phase: Don’t just implement and forget. Take time to evaluate what sensory combinations yield the best results for your specific use case. Make adjustments and iterate. Read more: Microsoft Learn on AI Monitoring
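As a rough illustration of the assessment step, the Python sketch below enumerates every combination of available “senses” and ranks them by a score. It uses only the standard library. The function names and the scores in `EXAMPLE_SCORES` are hypothetical placeholders invented for this example; in practice, the scoring function would run your own model against a held-out validation set for your use case.

```python
from itertools import combinations


def all_combinations(modalities):
    """Yield every non-empty subset of the available modalities."""
    for size in range(1, len(modalities) + 1):
        yield from combinations(modalities, size)


def rank_combinations(modalities, score_fn):
    """Score each combination and return (score, combination) pairs, best first."""
    scored = [(score_fn(combo), combo) for combo in all_combinations(modalities)]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)


# Placeholder scores, invented purely for illustration. Replace this
# lookup with a real evaluation of your model on validation data.
EXAMPLE_SCORES = {
    ("image",): 0.71,
    ("text",): 0.64,
    ("audio",): 0.58,
    ("image", "text"): 0.80,
    ("image", "audio"): 0.74,
    ("text", "audio"): 0.69,
    ("image", "text", "audio"): 0.79,
}


def example_score(combo):
    """Hypothetical scoring function backed by the placeholder table."""
    return EXAMPLE_SCORES[combo]


ranking = rank_combinations(["image", "text", "audio"], example_score)
for score, combo in ranking:
    print(f"{score:.2f}  {' + '.join(combo)}")
```

The point of the design is that adding a modality is treated as a hypothesis to test rather than an automatic improvement: with these placeholder numbers, the two-modality combination edges out all three, which is the kind of result that would prompt another iteration.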

The road ahead

We’re only scratching the surface of what’s possible with AI and multisensory data.

Up next, we’ll be talking about Trustworthy AI in the era of LLMs and multimodal generative AI. Your engagement propels us forward in this dynamic field. Don’t hesitate to share your thoughts as we continue this intellectual journey together. Stay tuned!
