Background

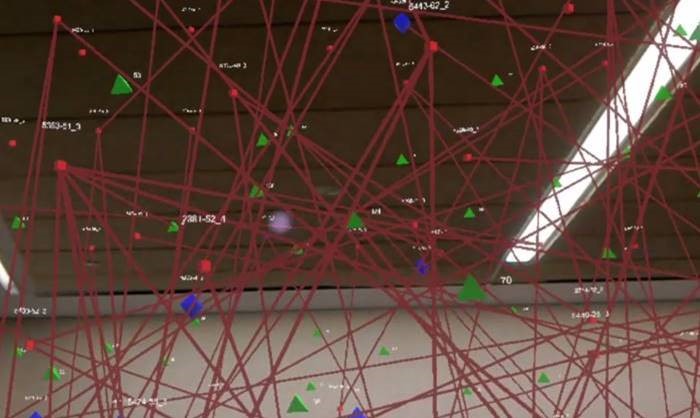

Researchers at the Englander Institute for Precision Medicine (EIPM) at Weill Cornell Medicine bring together basic science, medicine, DNA sequencing and big data to deliver the next generation of precision medicine, the most promising approach to tackling diseases like cancer, neurodegenerative diseases, and rare genetic conditions that have thus far eluded effective treatments. Their multidisciplinary team consists of physician-scientists, researchers, and bioinformaticians who work together to identify the genetic alterations that drive these diseases and to design tailored, targeted treatments for every patient. This process is very data-intensive: it involves integrating terabytes of information from genomic sequencing, heart rate measurements, past clinical records, and other sources. To this end, EIPM developed Holo Graph, a HoloLens application that enables clinicians and researchers to visualize cancer drug networks in 3D, manipulate the structure, and brainstorm with colleagues in real time. This approach enables a level of collaboration and data analysis that is not possible on a flat 2D screen. The data used in the application is real, and you can find more information on their website. Olivier Elemento, the Director of the Caryl and Israel Englander Institute for Precision Medicine at Weill Cornell, explains the importance of Holo Graph:

“Precision medicine is at the crossroad between big data and medicine where massive amounts of medical data are processed and analysed in pursuit of tailored treatment options. Optimizing data visualization tools like Holo Graph is of paramount importance to this pursuit as it brings data into the real world allowing our clinicians and researchers to fully immerse, collaborate and brainstorm in an unprecedented way towards a tailored treatment.”

We recently worked with the Englander Institute for Precision Medicine to push their application development to the next level, improve the user experience and performance, and publish the app to the Microsoft Store. We did a one-week hack with their development team and had a great outcome, which included:

- OneDrive integration to allow dynamic loading of data

- Adding sharing capabilities to allow physicians around the world to interact in the same scene

- Voronoi selection in 3D to greatly improve the ability to interact with graph nodes

- Physics and performance improvements

- App published on the Microsoft Store

In this post, we will focus on the Voronoi selection feature and how we developed it to allow Weill Cornell to improve the node selection experience and make it intuitive for any physician to use. The final code was built using Unity and C#, is open-sourced and can be used in any 3D application.

The Challenge

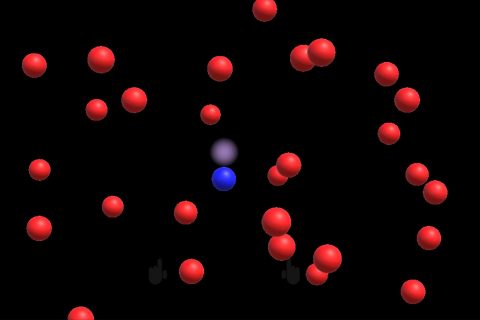

Cancer drug networks are very complex and can have up to 1000 nodes. Physicians wanted a way to visualize the graph in 3D and to manipulate individual nodes to see how small changes impact the overall structure. The ability to select and drag individual nodes is crucial in the brainstorming process, as it enables physicians to collaborate and explore how they could alter the graph structure in real time. Mixed reality headsets rely on your gaze to change focus, but gaze is often not precise enough in a structure that has many nodes. For example, looking at the image below, how can you tell which node the user intends to select, given the low precision of head movements?

In standard 3D applications, Unity uses raycasting to detect collisions between the gaze marker and 3D objects. Each object has a basic geometric solid representation (a collider) used to calculate collisions. On each update, a ray is cast along your gaze to see whether it “hits” any of the objects (see the Unity manual for more details).

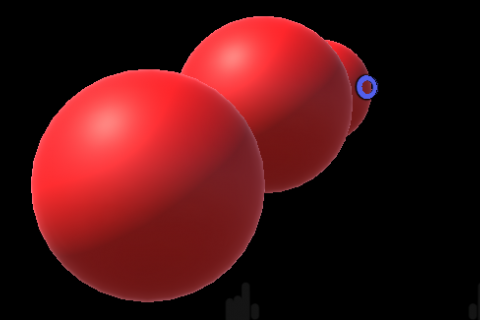

This method works well in applications with a small number (<100) of relatively large targets (>5 degrees of travel from boundary to boundary). Weill Cornell’s use case exceeds both scales with many small targets. In addition, we needed to calculate the hit target quickly and allow selection of objects that may be partially occluded or far off in the distance. Mixed reality allows users to navigate around the graph and observe from different angles. Just like in real life, objects that are closer to the user will be bigger in size, making it very hard to select the nodes in the back.
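To make the size threshold concrete, here is a minimal sketch of how the angular size of a spherical node shrinks with distance. The `AngularSize` class is a hypothetical helper, not part of the sample, and it assumes the viewer is outside the sphere.

```csharp
using System;

// Hypothetical helper, not part of the sample: angular size of a sphere as seen by the viewer.
public static class AngularSize
{
    // Returns the angle, in degrees, between opposite edges of a sphere
    // of the given radius whose center is `distance` meters away.
    public static double DegreesForSphere(double radius, double distance)
    {
        if (distance <= radius)
        {
            throw new ArgumentException("The viewer must be outside the sphere.");
        }
        return 2.0 * Math.Asin(radius / distance) * 180.0 / Math.PI;
    }
}
```

Under these assumptions, a node with a 0.05 m radius spans about 11.5 degrees at 0.5 m, but only a little over 1 degree at 5 m, well under the 5-degree guideline mentioned above.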

The object in the back has a small hit target – very difficult to select

The Solution – Voronoi Selection

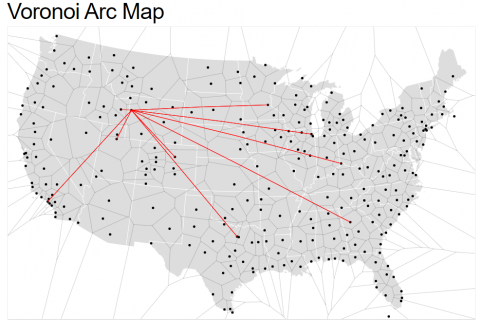

Voronoi selection takes an array of 2D coordinates as an input and creates a grid/layout around the points in such a way that the edges are drawn where the distance to the two nearest points is the same.

To better explain the process, look at the Voronoi selection example below:

Each point represents an airport in the US, and the cell around each point is generated by the Voronoi algorithm. Moving the cursor selects an airport as soon as the cursor enters its cell. This creates large hit targets and makes it very intuitive to select objects. As a test, try to select airports around the New York area. Because every location in a cell is closer to that cell's point than to any other point, selection stays easy even in crowded areas.

The Voronoi diagram is generated using Fortune’s Plane-Sweep Algorithm with a final complexity of O(n log n). For more information on the underlying algorithm and efficiency, please read the paper published by Kenny Wong and Hausi A. Müller, “An Efficient Implementation of Fortune’s Plane-Sweep Algorithm for Voronoi Diagrams.”
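The property that makes Voronoi cells useful for selection is that a point lies inside a site's cell exactly when that site is its nearest one. The sketch below illustrates only that property with a brute-force scan; it does not build the diagram and is not Fortune's algorithm. `NearestSite` and its method name are hypothetical.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper, not part of the sample.
public static class NearestSite
{
    // Returns the index of the site closest to (queryX, queryY), or -1 if there are no sites.
    // The query point lies inside the Voronoi cell of exactly this site
    // (a tie on a cell edge resolves to the lowest index).
    public static int IndexOfCellContaining(
        IReadOnlyList<(double X, double Y)> sites, double queryX, double queryY)
    {
        int best = -1;
        double bestDistanceSquared = double.PositiveInfinity;
        for (int i = 0; i < sites.Count; i++)
        {
            double dx = sites[i].X - queryX;
            double dy = sites[i].Y - queryY;
            double distanceSquared = dx * dx + dy * dy;
            if (distanceSquared < bestDistanceSquared)
            {
                bestDistanceSquared = distanceSquared;
                best = i;
            }
        }
        return best;
    }
}
```

Comparing squared distances avoids a square root per site without changing which site wins.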

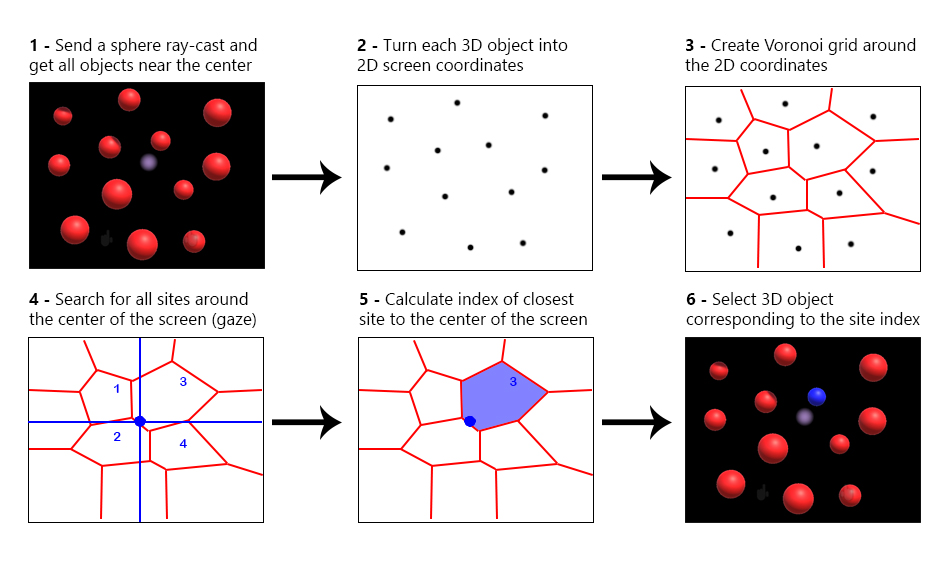

Taking Voronoi to the third dimension

As seen above, Voronoi works great on a 2D graph and we needed to have the same experience when interacting with a graph in 3D. In any 3D scene, you have the ability to find the 2D screen pixel coordinate of a 3D object since it’s the fundamental method by which the image is generated. This allows us to generate the array of 2D coordinates necessary to create a Voronoi grid.
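As a rough illustration of that projection, here is a minimal pinhole-camera sketch. It is not the engine's implementation: it assumes the point is already expressed in camera space (x right, y up, z forward), a symmetric vertical field of view, and a pixel origin at the bottom-left of the screen. `ScreenProjection.TryProject` is a hypothetical name.

```csharp
using System;

// Hypothetical pinhole-camera sketch, not the engine's implementation.
public static class ScreenProjection
{
    // Projects a camera-space point (meters) to pixel coordinates.
    // Returns false when the point is on or behind the camera plane.
    public static bool TryProject(
        double x, double y, double z,
        double verticalFovDegrees, int screenWidth, int screenHeight,
        out double pixelX, out double pixelY)
    {
        pixelX = 0;
        pixelY = 0;
        if (z <= 0)
        {
            return false;
        }

        // Half extents of the view at unit distance: tan(fov / 2), widened by the aspect ratio.
        double halfHeight = Math.Tan(verticalFovDegrees * Math.PI / 360.0);
        double halfWidth = halfHeight * screenWidth / screenHeight;

        // Normalized device coordinates, in [-1, 1] for points inside the view.
        double ndcX = x / (z * halfWidth);
        double ndcY = y / (z * halfHeight);

        pixelX = (ndcX + 1.0) * 0.5 * screenWidth;
        pixelY = (ndcY + 1.0) * 0.5 * screenHeight;
        return true;
    }
}
```

A point straight ahead of the camera lands in the center of the screen, which is why the gaze can later be represented by the screen's center pixel.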

The next step is to calculate the actual selection. When the cursor or the gaze moves around the grid, we check which site is closest to the central point and we return the 3D object contained in the site. Here is a diagram that explains the end-to-end selection process:

Now that we went through the process of using Voronoi in 3D, let’s look at the open-sourced Unity sample and how you can use this implementation in any 3D application.


Unity sample in mixed reality

Our sample code is open-sourced and available on GitHub. This code is based on the code by Weill Cornell Medicine in their Holo Graph app but extracted in a sample for easy reuse. It is written in C# using the MixedRealityToolkit for Unity and can be deployed to any mixed reality headset like HoloLens or the HP headset. If you are new to mixed reality development, check out the great tutorials on Microsoft Academy.

The main scene contains a Graph generator that randomly creates objects inside a sphere of a given radius. You can change the number of objects generated and the radius (all values are measured in meters) by changing the public properties in GraphGenerator.cs:

// Values are in meters

public int NumberOfNodes = 30;

public int SpawnRadius = 5;

public float SphereRadius = 0.1f;

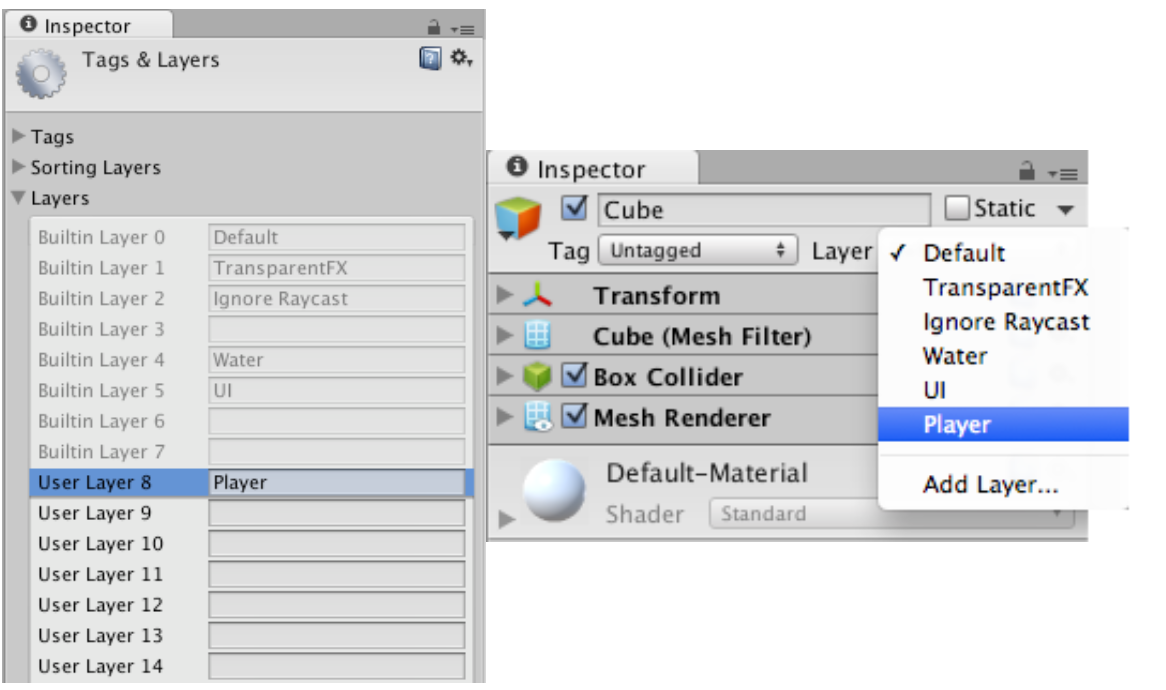

Each generated sphere gets assigned a specific layer and a HandDraggable.cs script (part of MixedRealityToolkit) to enable click and drag. In Unity, you have 32 slots for layers (32 bits) with layers 0-7 built in and 8-31 available for custom usage. We create a new layer at index 8 and we only look at objects in that layer for the Voronoi selection. You can modify this value to any layer that you wish (make sure you first create the layer in the editor).

for (var i=0; i < this.NumberOfNodes; i++)

{

var sphere = GameObject.CreatePrimitive(PrimitiveType.Sphere);

sphere.layer = 8;

sphere.transform.SetParent(transform);

// Scale the unit-diameter primitive by SphereRadius (with 0.1f this gives a sphere 0.1 meters across)

sphere.transform.localScale = Vector3.one * SphereRadius;

sphere.transform.position = Random.insideUnitSphere * this.SpawnRadius;

sphere.AddComponent<HandDraggable>();

sphere.AddComponent<OnFocusObject>();

}
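Because layers occupy 32 bit positions, the layer index assigned in the loop is turned into a bitmask before it is handed to a physics query. The sketch below shows that bit arithmetic in plain C#; `LayerMasks` is a hypothetical helper and is not part of Unity or the sample.

```csharp
using System;

// Hypothetical helper, not part of the sample or of Unity.
public static class LayerMasks
{
    // Builds a 32-bit mask with one bit set per layer index (each index must be 0..31).
    public static int FromLayers(params int[] layers)
    {
        int mask = 0;
        foreach (int layer in layers)
        {
            if (layer < 0 || layer > 31)
            {
                throw new ArgumentOutOfRangeException(nameof(layers), "Layer index must be between 0 and 31.");
            }
            mask |= 1 << layer;
        }
        return mask;
    }

    // True when the given layer's bit is set in the mask.
    public static bool Contains(int mask, int layer)
    {
        return (mask & (1 << layer)) != 0;
    }
}
```

For layer 8 the mask is `1 << 8`, which is 256, so a query restricted to that mask ignores every object that was left on another layer.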

OnFocusObject is a simple script that changes the color of the object when it enters focus.

public void OnFocusEnter()

{

gameObject.GetComponent<Renderer>().material.SetColor("_Color", focusedColor);

}

public void OnFocusExit()

{

gameObject.GetComponent<Renderer>().material.SetColor("_Color", originalColor);

}

MixedRealityToolkit uses GazeManager.cs to constantly send a raycast from the camera position along the gaze direction. If the raycast hits an object directly, that becomes the focused object and the OnFocusEnter event is sent.

IsGazingAtObject = Physics.Raycast(GazeOrigin, GazeNormal, out hitInfo, MaxGazeCollisionDistance, RaycastLayerMasks[0]);

if (IsGazingAtObject)

{

HitObject = HitInfo.collider.gameObject;

HitPosition = HitInfo.point;

lastHitDistance = HitInfo.distance;

}

else

{

HitObject = null;

HitPosition = GazeOrigin + (GazeNormal * lastHitDistance);

}

GazeManager has public properties that tell when the user is gazing at an object, the exact hit position, and a reference to the object:

/// <summary>

/// Indicates whether the user is currently gazing at an object.

/// </summary>

public bool IsGazingAtObject { get; private set; }

/// <summary>

/// The game object that is currently being gazed at, if any.

/// </summary>

public GameObject HitObject { get; private set; }

/// <summary>

/// Position at which the gaze manager hit an object.

/// If no object is currently being hit, this will use the last hit distance.

/// </summary>

public Vector3 HitPosition { get; private set; }

Voronoi selection is implemented in VoronoiSelection.cs. The script uses the public properties above and only runs when no HitObject is detected.

private void Update()

{

if (GazeManager.Instance.HitObject && InputManager.Instance.OverrideFocusedObject != null)

{

InputManager.Instance.OverrideFocusedObject.SendMessage("OnFocusExit");

InputManager.Instance.OverrideFocusedObject = null;

}

else if (!GazeManager.Instance.IsGazingAtObject)

{

// Apply Voronoi and override the focus object (see the steps below)

}

}

Turning 3D objects into 2D screen coordinates

Since our gaze is always in the center of the screen, we do not need to run Voronoi on all the 3D objects on the screen. Instead, we use a sphere raycast from the camera position and along the gaze direction to return a list of all 3D objects that are close to the center of the gaze.

// We are using a constant for the sphere cast radius. 0.3 meters is enough for most scenarios.

// Increase this value if your objects are very far away from each other.

public float SphereCastRadius = 0.3f;

// Only check objects in layer 8

var layerMask = 1 << 8;

// Cast a sphere to see what nodes are in radius of the head orientation

var hitObjects = Physics.SphereCastAll(GazeManager.Instance.GazeOrigin, SphereCastRadius, GazeManager.Instance.GazeTransform.forward, GazeManager.Instance.MaxGazeCollisionDistance, layerMask);

var voronoiNodes = new List<Vector2>();
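If you are porting this step to an engine without a built-in sphere cast, the candidate filter can be approximated with a point-to-ray distance check. The sketch below is a simplified stand-in, not Unity's implementation: it treats every node as a sphere of the same radius, ignores the rounded end caps of the swept volume, and uses `System.Numerics` instead of Unity's vector types. `GazeCandidates.Near` is a hypothetical name.

```csharp
using System;
using System.Collections.Generic;
using System.Numerics;

// Hypothetical stand-in for a physics sphere cast, not Unity's implementation.
public static class GazeCandidates
{
    // Returns the indices of nodes that a sphere of `castRadius`, swept from `origin`
    // along `direction` for `maxDistance` meters, would touch.
    public static List<int> Near(
        Vector3 origin, Vector3 direction, float maxDistance,
        float castRadius, float nodeRadius, IReadOnlyList<Vector3> nodeCenters)
    {
        var result = new List<int>();
        Vector3 unit = Vector3.Normalize(direction);
        float reach = castRadius + nodeRadius;

        for (int i = 0; i < nodeCenters.Count; i++)
        {
            Vector3 toNode = nodeCenters[i] - origin;
            float along = Vector3.Dot(toNode, unit); // distance along the gaze ray
            if (along < 0f || along > maxDistance)
            {
                continue; // behind the viewer or too far away
            }

            Vector3 closestPointOnRay = origin + unit * along;
            float offAxis = Vector3.Distance(nodeCenters[i], closestPointOnRay);
            if (offAxis <= reach)
            {
                result.Add(i);
            }
        }
        return result;
    }
}
```

Only the nodes returned by this filter need to be projected to screen coordinates, which keeps the per-frame Voronoi input small.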

We then transform the 3D position of each of these objects to a 2D screen position. In Unity, this is built into the game engine and can be done very easily:

public static Vector2 WorldToGUIPoint(Vector3 world)

{

Vector2 screenPoint = Camera.main.WorldToScreenPoint(world);

return screenPoint;

}

As discussed above, the array of coordinates is used as the input to generate a Voronoi grid. We then find the site closest to the center of the screen, which represents our gaze, and return the index of the corresponding 3D object.

foreach (var hit in hitObjects)

{

var screenCoordinates = WorldTo2DHelper.GUICoordinatesWithObject(hit.collider.gameObject);

voronoiNodes.Add(screenCoordinates);

}

// Only look at the center of the screen

objectindex = this.CreateNewVoronoiSelection(voronoiNodes, new Vector2(Screen.width / 2, Screen.height / 2));

// There are two ways you can create the Voronoi diagram: with or without Lloyd relaxation.

// We do not want Lloyd relaxation as it does not represent the real location of the 3D objects.

voronoi = new Voronoi(points, bounds, WeightDistributor);

edges = voronoi.Edges;

if(edges.Count < 1)

return -1;

// Returns the closest site based on a point.

// The algorithm calculates the closest edge and picks the closest adjacent site of that edge.

var closestSite = GetClosestSiteByPoint(new Vector2f(centerPoint.x, centerPoint.y));

if(closestSite == null)

return -1;

// The site index does not match the initial points index, we need to check for the correct index.

for(int i =0; i<points.Count; i++)

{

if(points[i] == closestSite.Coord)

return i;

}

The returned object is then used to override the focused object, and the OnFocusEnter event is sent to it. We also make sure we send the OnFocusExit event to the previously focused object.

var hitObject = hitObjects[objectindex].collider.gameObject;

if (hitObject != InputManager.Instance.OverrideFocusedObject)

{

hitObject.SendMessage("OnFocusEnter");

if (InputManager.Instance.OverrideFocusedObject != null)

{

InputManager.Instance.OverrideFocusedObject.SendMessage("OnFocusExit");

}

InputManager.Instance.OverrideFocusedObject = hitObject;

}

At this point, the object has been selected using Voronoi selection. In this sample, you can click and drag to move the selected object in 3D space.

Reuse Opportunities

Our open-sourced code is the same code used in the Holo Graph application. Our Voronoi selection feature greatly improved the user experience and enabled any user, including those trying the app for the first time, to intuitively select nodes and interact with them. Selection problems were a major issue before our engagement, as the hit target was only around the nodes and it was very difficult to select using your gaze.

Our work has enabled physicians to focus on brainstorming and diagnostics, instead of struggling to interact with the right node. Alexandros Sigaras, the Senior Research Associate in Computational Biomedicine at Weill Cornell Medicine and the project lead of Holo Graph, explains the impact of our collaboration:

“Working together with Microsoft enabled us to tailor Holo Graph’s performance and responsiveness to HoloLens and bring the overall experience to a whole new level. Feedback from clinicians and researchers shows great promise and we plan to leverage Voronoi selection in all our upcoming mixed reality projects.”

The code is specific to Unity and C# but it can be ported to any platform or language following the steps explained in the “Taking Voronoi to the third dimension” section. It will greatly improve the user experience in any data visualization scenario, in particular when there are multiple small objects that are hard to select.

Import Voronoi Selection to your Unity Application

If you wish to import Voronoi selection to your Unity application, follow these steps:

- Clone the sample:

git clone https://github.com/anderm/voronoi-selection-in-3d.git

- Copy the Voronoi folder at path voronoi-selection-in-3d/Assets/Scripts/Voronoi into your own project directory.

- Add MixedRealityToolkit-Unity (Getting started with MixedRealityToolkit).

- Add GazeManager.cs script to your scene.

- Add the VoronoiSelection.cs script in your scene.

- Update the layer id to 8 for any object that you wish to select.

- Move the camera around and see how the color changes to blue for those objects!

You can also remove the MixedRealityToolkit dependency and constantly use Voronoi selection by removing the reference to GazeManager.cs and keeping track of the focused object in your own implementation.
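One way to keep track of the focused object yourself is a small tracker that fires enter and exit callbacks only when the selection changes. This is a sketch under assumptions, not code from the sample or from MixedRealityToolkit: `FocusTracker` is a hypothetical class, and the callbacks stand in for whatever your objects do on focus.

```csharp
using System;

// Hypothetical focus tracker, not part of the sample or of MixedRealityToolkit.
public sealed class FocusTracker<T> where T : class
{
    private readonly Action<T> onFocusEnter;
    private readonly Action<T> onFocusExit;

    public FocusTracker(Action<T> onFocusEnter, Action<T> onFocusExit)
    {
        this.onFocusEnter = onFocusEnter;
        this.onFocusExit = onFocusExit;
    }

    // The object that currently has focus, or null when nothing is focused.
    public T Focused { get; private set; }

    // Call once per frame with the object chosen by Voronoi selection (or null).
    public void SetFocus(T candidate)
    {
        if (ReferenceEquals(candidate, Focused))
        {
            return; // focus did not change, so no callbacks fire
        }
        if (Focused != null)
        {
            onFocusExit(Focused);
        }
        Focused = candidate;
        if (candidate != null)
        {
            onFocusEnter(candidate);
        }
    }
}
```

Unlike the sample, which sends OnFocusEnter to the new object before OnFocusExit to the previous one, this sketch notifies the previous object first; either order works as long as each object receives a matched pair of events.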

Feel free to contribute to the repo and create any issues if you have trouble with the code. You can also reach out to us in the comments below.

Header Image: Dr. Olivier Elemento (left), alongside his Ph.D. students Neil Madhukar and Katie Gayvert, analyzes medical network data (photo courtesy of the Englander Institute for Precision Medicine)

I’m confused as to why the Voronoi grid is necessary. Unless you have a fixed head position you won’t be able to reuse it and so the problem boils down simply to “which node is closest to the centre”. This does not require O(n.lg(n)), but just O(n) as all you need to do is calculate the distance from each object to the gaze.

Hi Ian,

The voronoi calculation happens every frame, it is not static. It replaces raycasting with colliders. In the best case comparison using basic sphere colliders, this is still effectively O(n^2) and almost every trick to reduce this to O(n) requires objects to stay completely or mostly static. So while still not O(n), voronoi gives us a massive boost to calculating selection without any special requirements on objects or motion.

It also has the benefit of providing forgiving selection to objects that are tiny or partially obscured in z-order. It allows the user to use much less stability...