Embedding vector caching (redux)

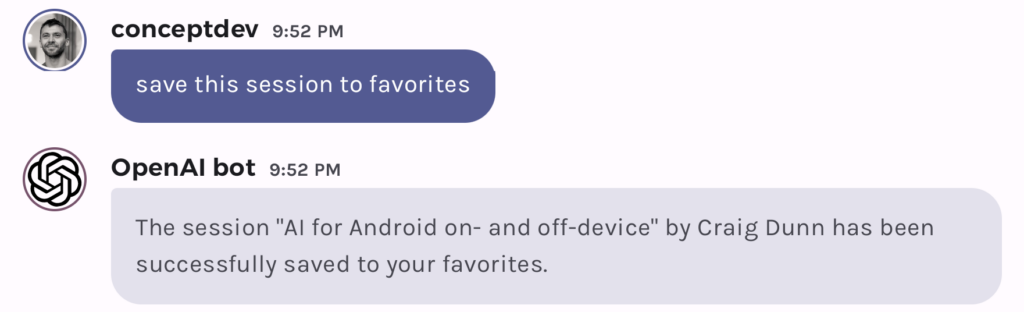

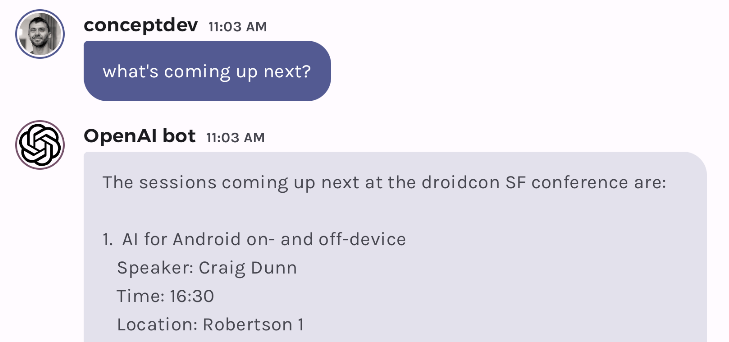

Chat memory with OpenAI functions

Combining OpenAI function calls with embeddings

OpenAI chat functions on Android

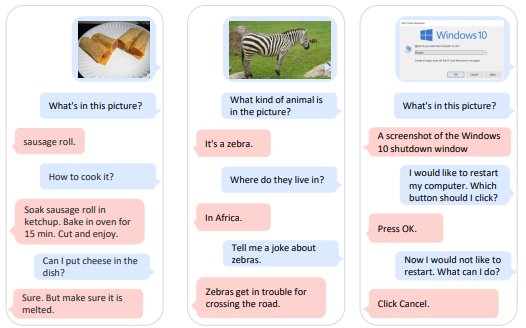

Multimodal Augmented Inputs in LLMs using Azure Cognitive Services

Embedding vector caching

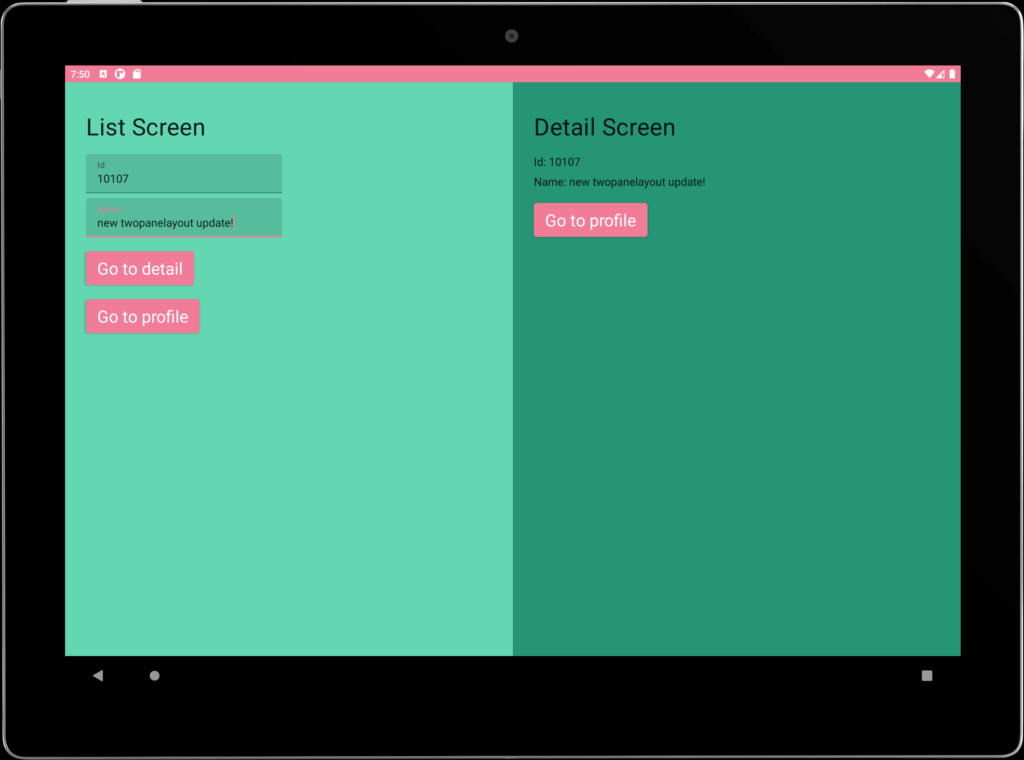

Announcing FoldAwareColumn in Accompanist Adaptive

JetchatAI gets smarter with embeddings
