Hello prompt engineers,

Earlier this year I tried to create a hardcoded cache of embedding vectors, only to be thwarted by the limitations of Kotlin (the combined size of the arrays of numbers exceeded Kotlin’s maximum function size). Now that we’ve added Sqlite to the solution to support memory and querying, we can use that infrastructure to also cache the embedding vectors.

Note that the version of Sqlite we’ll use on Android does not have any special “vector database” features – instead, the embedding vectors will just be serialized/deserialized and stored in a TEXT column. Embedding vector similarity comparisons will continue to be done with the dot function defined in DroidconEmbeddingsWrapper.kt.

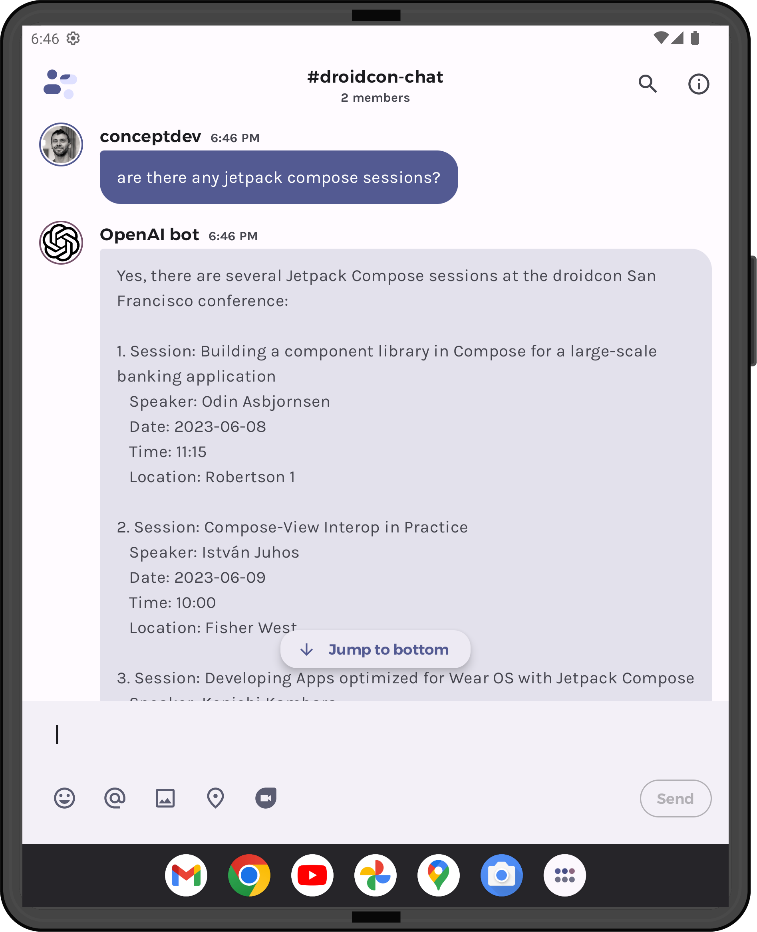

The demo app we’re working on is the JetchatAI sample that allows you to query the conference schedule for the droidcon SF 2023 event. The sample code is available at github.com/conceptdev/droidcon-sf-23. Don’t forget to choose the droidcon-chat from the top-left menu to test out these features.

Figure 1: Embeddings with similarity comparisons enable retrieval augmented generation (RAG) chat interactions

Configure the database

The database setup uses the same pattern that we used for the favorites feature. The schema and create/delete scripts for an embeddings table are defined in the data/DroidconDatabase.kt file:

object EmbeddingEntry : BaseColumns {

const val TABLE_NAME = "embedding"

const val COLUMN_NAME_SESSIONID = "session_id"

const val COLUMN_NAME_VECTOR = "vector"

}

private const val SQL_CREATE_EMBEDDING_ENTRIES =

"CREATE TABLE ${DroidconContract.EmbeddingEntry.TABLE_NAME} (" +

"${DroidconContract.EmbeddingEntry.COLUMN_NAME_SESSIONID} TEXT PRIMARY KEY," +

"${DroidconContract.EmbeddingEntry.COLUMN_NAME_VECTOR} TEXT)"

private const val SQL_DELETE_EMBEDDING_ENTRIES = "DROP TABLE IF EXISTS ${DroidconContract.EmbeddingEntry.TABLE_NAME}"

Figure 2: database schema declaration

The DroidconDbHelper then takes care of creating the table (don’t forget to update the DATABASE_VERSION whenever you make schema changes).

Store vectors with SQLite

There is already an initVectorCache function in the DroidconEmbeddingsWrapper.kt file which generates all the vectors in a loop over the droidconSessions collection and stores the vectors in-memory in the vectorCache variable. The updated code leverages this existing loop to also insert each vector into the local database.

As mentioned above, there are no special vector-handling features in the existing version of Sqlite, so the code will treat the vector as a string for data storage and retrieval.

In Figure 2, you can see the first half of the code snippet has not changed – it still loops through the conference schedule and generates an embedding for each session via the web API. The second half of the snippet adds the database customization – first the vector (which is an array of Double values) gets serialized, and then it is inserted into the database using the dbHelper class that has been added to the function:

// Gets the data repository in write mode

val db = dbHelper.writableDatabase

for (session in DroidconSessionData.droidconSessions) {

val embeddingRequest = EmbeddingRequest(

model = ModelId(Constants.OPENAI_EMBED_MODEL),

input = listOf(session.value)

)

val embedding = openAI.embeddings(embeddingRequest)

val vector = embedding.embeddings[0].embedding.toDoubleArray()

// add to in-memory cache

vectorCache[session.key] = vector

// serialize the vector to a string - removed for clarity

// Create a new map of values, where column names are the keys

val values = ContentValues().apply {

put(DroidconContract.EmbeddingEntry.COLUMN_NAME_SESSIONID, session.key)

put(DroidconContract.EmbeddingEntry.COLUMN_NAME_VECTOR, vectorString)

}

// Insert the new row, returning the primary key value of the row (would be -1 if error)

val newRowId =

db?.insert(DroidconContract.EmbeddingEntry.TABLE_NAME, null, values)

}

Figure 3: code that generates embedding vectors then inserts into a local database

The function also has a new check if (vectorCache.isEmpty()) so that once the data has been loaded once, it does not need to be re-loaded.

Retrieve vectors with SQLite

Once the database has been loaded with embeddings, the existing initVectorCache function should now be able to use the locally stored embeddings rather than regenerating from the web API. The code to load the embedding vectors is encapsulated in a new loadVectorCache function:

private fun loadVectorCache(dbHelper: DroidconDbHelper): Int {

var rowCount = 0

val db = dbHelper.readableDatabase

val projection = arrayOf(

DroidconContract.EmbeddingEntry.COLUMN_NAME_SESSIONID,

DroidconContract.EmbeddingEntry.COLUMN_NAME_VECTOR)

val cursor = db.query(

DroidconContract.EmbeddingEntry.TABLE_NAME, // The table to query

projection, // The array of columns to return

null, null, null, null, null // unused customizations

)

with(cursor) {

while (moveToNext()) {

val sessionId = getString(getColumnIndexOrThrow(DroidconContract.EmbeddingEntry.COLUMN_NAME_SESSIONID))

val vectorString = getString(getColumnIndexOrThrow(DroidconContract.EmbeddingEntry.COLUMN_NAME_VECTOR))

// deserialize vector - removed for clarity

// add to in-memory cache

vectorCache[sessionId] = vector.toDoubleArray()

rowCount++

}

}

cursor.close()

return rowCount

}

Figure 4: read the embedding vectors from the database and store in-memory cache

The initVectorCache is responsible for loading via the most efficient method – it will check in-memory first, if the embeddings aren’t cached it will attempt to load from the database. If the database is empty, it will generate new embeddings from the web API and update the database for next time.

Profit!

The old version of the code needed to get embeddings each time the app was started. There are around 70 sessions in the droidcon schedule, so that was 70 web API calls every time! Now the web API calls happen once and the results are stored in the database, available in each subsequent app restart. The only time they’ll be regenerated is if the database schema changes – but you can optimize that too by spending some time on database migration strategies (beyond the scope of this post).

Not only is this cheaper (no more unnecessary embeddings API calls), but it’ll also make the initial conversation startup time much faster.

Resources and feedback

We’d love your feedback on this post, including any tips or tricks you’ve learning from playing around with ChatGPT prompts.

If you have any thoughts or questions, use the feedback forum or message us on Twitter @surfaceduodev.

There will be no livestream this week, but you can check out the archives on YouTube.

The memory heap stack will increase, resulting in a memory allocation error and potentially corrupting the NT kernel and might initialize a kernel panic, rendering the CPU unusable until further repair.