With the first wave of new iPhone 8 devices landing in people’s hands, and the installed base of iOS 11 users growing rapidly, it’s an exciting time to build apps for iPhone and iPad, as well as macOS, the new 4K Apple TVs, and cellular Apple Watches. Of course, Visual Studio and Xamarin are ready to help you, as .NET developers, create native mobile apps for these new devices with C# and F#. We are excited to share that with our latest release of Xamarin.iOS on Mac and Windows, you now have access to all the latest features of Apple’s new hardware.

iOS apps with Visual Studio Tools for Xamarin

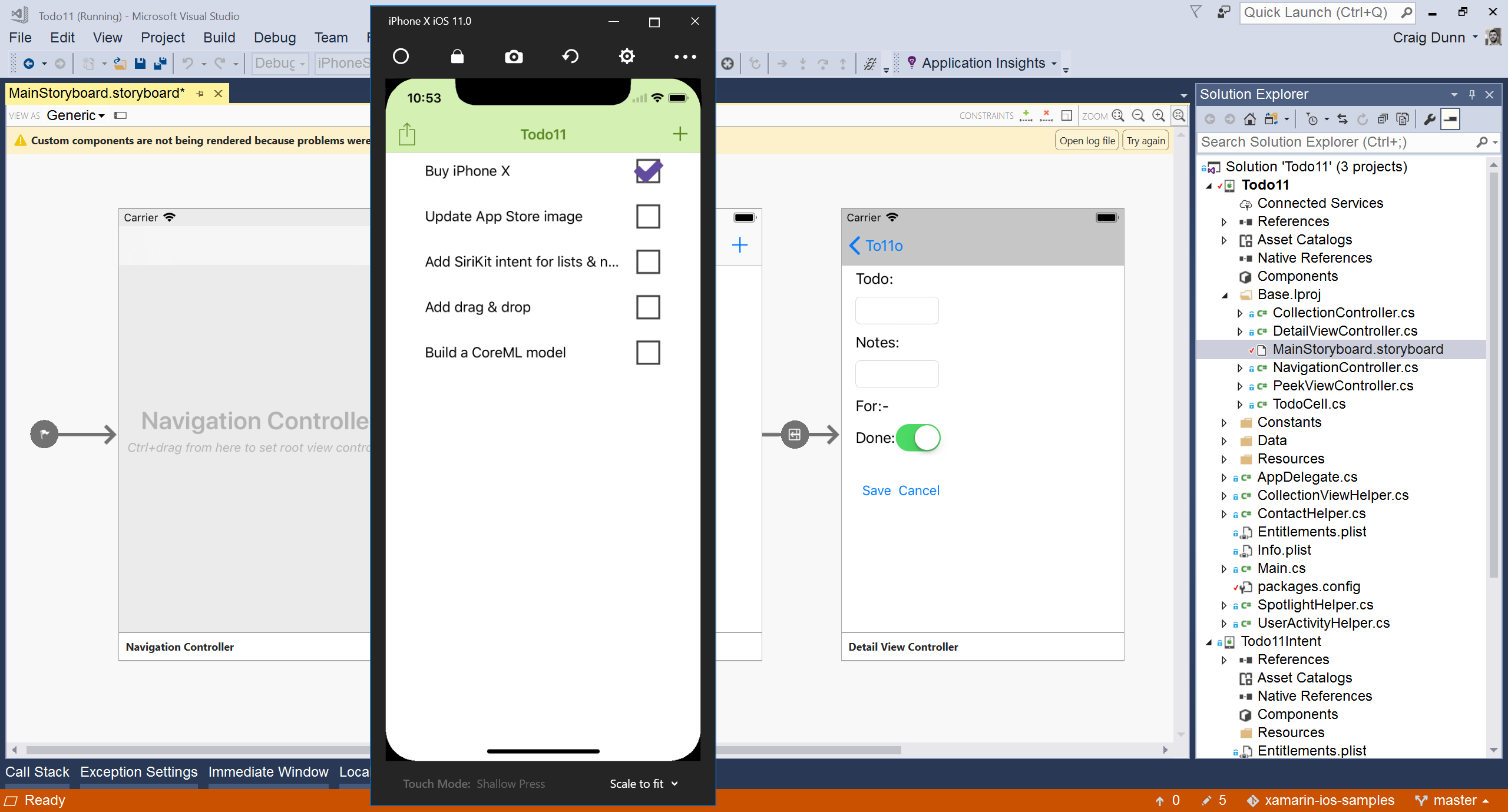

If you haven’t tried app development with Xamarin yet, it’s available as part of Visual Studio on PC and Mac (including the free Visual Studio Community Edition) and enables you to build apps for iPhone, iPad, Apple Watch, and Apple TV – using C# and F#.

This year, we’ve continued our tradition of shipping support for new Xcode versions within 24 hours of their release: a preview release on September 13 enabled Visual Studio users to build and submit their iOS 11 apps to the App Store. On September 19, we updated Visual Studio 2017 and Visual Studio for Mac to support Xcode 9.

Augmented Reality, Machine Learning, and more!

To help you implement the new features, we have made C# samples available for you to take a look at. Here are some of the things we can’t wait to see you add to your apps using Xamarin:

Augmented reality with ARKit

Apps can detect surfaces to host 3D content, place objects in 3D space, and let ARKit track the user’s position and interactions on the screen. Your AR experiences can be built with SceneKit, or the cross-platform UrhoSharp 3D platform, which allows for sharing code with other mixed reality systems including HoloLens.

Applied Machine Learning with CoreML

CoreML provides a simple API for running machine learning models on iOS devices, including models that tackle difficult problems like image feature recognition. Models must be pre-trained. Apple provides a number of examples to get started, but you can also train your own models with Azure Custom Vision Service and include those in your iOS apps using Xamarin.

Vision Framework

The new Vision framework provides image-processing and analysis features such as face and object detection and barcode recognition. These features become even more powerful when coupled with CoreML and Azure Cognitive Services: image and video data from Vision can be analyzed either on the device or in the cloud.

Drag and Drop

iPad users can now drag and drop text, images, and more between apps. New APIs let you implement the functionality in your own apps, with support for multi-touch dragging and spring-loading to assist with mid-drag navigation.

Other improvements

iOS 11 also contains improvements across the board, including clustering support for maps, the ability to work with PDFs, access to the NFC reader, depth maps from supporting device cameras, and lots more.

Get started with iOS 11 today

Put your C# skills to use building mobile apps with Visual Studio and Xamarin:

- If you haven’t already, get Visual Studio, which includes Xamarin

- Download and install our iOS 11 support by updating to Visual Studio 2017 version 15.3.5 and Visual Studio for Mac 7.1.5.2

- Check out our documentation and samples to learn more

- If you find a problem, report it via “Report a Problem” in the Help menu


Joseph Hill, Principal Director, Program Management

@JosephHill

As Principal Director PM for Mobile Developer Tools, Joseph Hill is responsible for Microsoft’s mobile development tools in Visual Studio. Prior to joining Microsoft, Joseph was VP of Developer Relations and Co-Founder at Xamarin. Joseph has been an active participant and contributor in the open source .NET developer community since 2003. In January 2008, Joseph began working with Miguel de Icaza as Product Manager for his Mono efforts, ultimately driving the product development and marketing efforts to launch Xamarin’s commercial products.
