OpenAI Assistant functions on Android

Hello prompt engineers,

This week, we are taking one last look at the new Assistants API. Previous blog posts have covered the Retrieval tool with uploaded files and the Code interpreter tool. In today’s post, we’ll take the askWikipedia function we built previously and add it to the fictitious Contoso employee handbook document chat.

Configure functions in the playground

We’ll start by configuring the assistant in the OpenAI playground. This isn’t required – assistants can be created and configured completely in code – but it’s convenient to be able to test interactively before doing the work to incorporate the function into the JetchatAI Kotlin sample app.

Adding a function declaration is relatively simple – click the Add button and then provide a JSON description of the function’s capabilities. Figure 1 shows the Tools pane where functions are configured:

Figure 1: Add a function definition in the playground (shows askWikipedia already defined)

The function configuration is just an empty text box, into which you enter the JSON that describes your function – its name, what it does, and the parameters it needs. This information is then used by the model to determine when the function could be used to resolve a user query.

The JSON that describes the askWikipedia function from our earlier post is shown in Figure 2. It has a single parameter – query – which the model will extract from the user’s input.

{
  "name": "askWikipedia",
  "description": "Answer user questions by querying the Wikipedia website. Don't call this function if you can answer from your training data.",
  "parameters": {
    "type": "object",
    "properties": {
      "query": {
        "type": "string",
        "description": "The search term to query on the wikipedia website. Extract the subject from the sentence or phrase to use as the search term."
      }
    },
    "required": [
      "query"
    ]
  }
}

Figure 2: JSON description of the askWikipedia function
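Because assistants can also be configured entirely in code, it may help to see the shape of Figure 2 assembled programmatically. The following is a minimal, library-independent sketch: `StringParam`, `jsonString`, and `functionDefinitionJson` are hypothetical helpers (not part of openai-kotlin or JetchatAI), and they only support string parameters. A real app would more likely use a JSON library such as kotlinx.serialization.

```kotlin
// Hypothetical description of a single string parameter (not an openai-kotlin type).
data class StringParam(val name: String, val description: String, val required: Boolean = true)

// Minimal JSON string escaping: quotes, backslashes, and common control characters.
fun jsonString(s: String): String = buildString {
    append('"')
    for (c in s) {
        when (c) {
            '"' -> append("\\\"")
            '\\' -> append("\\\\")
            '\n' -> append("\\n")
            '\r' -> append("\\r")
            '\t' -> append("\\t")
            else -> append(c)
        }
    }
    append('"')
}

// Assembles the same shape as Figure 2: name, description, and an object schema
// whose properties are all strings, plus the list of required property names.
fun functionDefinitionJson(name: String, description: String, params: List<StringParam>): String {
    val properties = params.joinToString(",") { p ->
        "${jsonString(p.name)}:{\"type\":\"string\",\"description\":${jsonString(p.description)}}"
    }
    val required = params.filter { it.required }.joinToString(",") { jsonString(it.name) }
    return "{\"name\":${jsonString(name)},\"description\":${jsonString(description)}," +
        "\"parameters\":{\"type\":\"object\",\"properties\":{$properties},\"required\":[$required]}}"
}
```

Calling `functionDefinitionJson("askWikipedia", description, listOf(StringParam("query", queryDescription)))` with the two description strings from Figure 2 yields the same structure in compact form.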

In theory, this should be enough for us to test whether the model will call the function; however, my test question “what is measles?” would always be answered by the model without calling askWikipedia. Notice that my function description included the instruction “Don’t call this function if you can answer from your training data.” – this was because in the earlier example, the function was being called too often!

Since this assistant’s purpose is to discuss health plans, I updated the system prompt (called Instructions in the Assistant playground) to the following, adding the final instruction about the askWikipedia function:

answer questions about employee benefits and health plans from uploaded files. ALWAYS include the file reference with any annotations. use the askWikipedia function to answer questions about medical conditions

After this change, the test question now triggers a function call!

Figure 3 shows what a function call looks like in the playground – because function calls are delegated to the implementing application, the playground needs you to interactively supply a simulated function return value. In this case, I pasted in the first paragraph of the Wikipedia page for “measles”, and the model summarized that into its chat response.

Figure 3: Testing a function call in the playground

With the function configured and confirmation that it will get called for the test question, we can now add the function call handler to our Android assistant in Kotlin.

Assistant function calling in Kotlin

In the original Assistant API implementation, after the user’s message is added to the thread and a run is started, there is a loop (shown in Figure 4) where the chat app waits for the model to respond with its answer:

do {
    delay(1_000)
    val runTest = openAI.getRun(thread!!.id, run.id)
} while (runTest.status != Status.Completed)

Figure 4: Checking message status until the run is completed and the model response is available

Now that we’ve added a function definition to the assistant, the runTest.status can change to RequiresAction (instead of Completed), which indicates that the model is waiting for one (or more) function return values to be supplied.
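The loop in Figure 4 only exits on Completed, so a run that fails, expires, or is cancelled would keep it polling forever. As a hedged sketch of a more defensive loop, the code below stops on any terminal status, hands RequiresAction back to the caller, and gives up after a fixed number of attempts. `RunStatus` is a hypothetical enum modeled on the run statuses the Assistants API reports (it is not openai-kotlin’s `Status` type), and `pause` stands in for `delay(1_000)` so the sketch runs without coroutines; in the app, `pollRun` would be a `suspend` function.

```kotlin
// Hypothetical stand-in modeled on Assistants API run statuses; not openai-kotlin's Status type.
enum class RunStatus { Queued, InProgress, RequiresAction, Cancelling, Cancelled, Failed, Completed, Expired }

// Statuses after which the run will not make further progress.
val terminalStatuses = setOf(RunStatus.Completed, RunStatus.Cancelled, RunStatus.Failed, RunStatus.Expired)

// Polls fetchStatus until the run finishes or needs the caller's action, giving up after maxAttempts.
// pause is injected (for example a one-second sleep) so the loop can be tested without waiting.
fun pollRun(maxAttempts: Int, pause: () -> Unit, fetchStatus: () -> RunStatus): RunStatus? {
    repeat(maxAttempts) {
        pause()
        val status = fetchStatus()
        // Return RequiresAction as well, so the caller can submit tool outputs and poll again.
        if (status in terminalStatuses || status == RunStatus.RequiresAction) return status
    }
    return null // timed out; the caller decides whether to cancel the run
}
```

When `pollRun` returns `RequiresAction`, the caller can submit the function output and call it again; a `null` result means polling timed out.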

The AskWikipediaFunction class already contains code to search Wikipedia, so we just need to add some code to detect when a function call has occurred and provide the return value. The code shown in Figure 5 does that in these steps:

- Detect when the status becomes RequiresAction
- Get the run’s steps
- Examine the latest stepDetails for information about what action is required
- If a tool call is indicated, check if it’s for the FunctionTool
- Get the function name and parameters (which are in JSON key:value format)
- Execute the function locally with the parameters provided
- Create a toolOutput with the function return value
- Send the output back to the assistant

Once the assistant receives the function return value, it can construct its answer back to the user. This will set the status to Completed (exiting the do loop) and the code will display the model’s response to the user.

do {
    delay(1_000)
    val runTest = openAI.getRun(thread!!.id, run.id)
    if (runTest.status == Status.RequiresAction) { // run is waiting for action from the caller
        val steps = openAI.runSteps(thread!!.id, run.id) // get the run steps
        val stepDetails = steps[0].stepDetails // latest step
        if (stepDetails is ToolCallStepDetails) { // contains a tool call
            val toolCallStep = stepDetails.toolCalls!![0] // get the latest tool call
            if (toolCallStep is ToolCallStep.FunctionTool) { // which is a function call
                val function = toolCallStep.function
                var functionResponse = "Error: function was not found or did not return any information."
                when (function.name) {
                    "askWikipedia" -> {
                        val functionArgs = argumentsAsJson(function.arguments) ?: error("arguments field is missing")
                        val query = functionArgs.getValue("query").jsonPrimitive.content
                        // CALL THE FUNCTION!!
                        functionResponse = AskWikipediaFunction.function(query)
                    }
                    // add a branch here for each additional function
                }
                // Package the function return value
                val to = toolOutput {
                    toolCallId = ToolId(toolCallStep.id.id)
                    output = functionResponse
                }
                // Send back to assistant
                openAI.submitToolOutput(thread!!.id, run.id, listOf(to))
                delay(1_000) // wait before polling again, to see if status is complete
            }
        }
    }
} while (runTest.status != Status.Completed)

Figure 5: Code to detect the RequiresAction state and call the function

Note that the code shown takes a number of shortcuts: when multiple functions are declared, the assistant might request multiple function calls in a run (the code only handles the first tool call of the latest step), and there are multiple places where better error/exception handling is required (for example, the loop never exits if the run ends with a status other than Completed, such as Failed or Expired). You can read more about how multiple function calls might behave in the OpenAI Assistant documentation.
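Figure 5 only resolves the first tool call of the latest step. One way to handle several calls is to resolve every pending call and turn failures into error strings the model can read. The sketch below is library-independent: `PendingCall`, `CallOutput`, and `resolveCalls` are hypothetical names, and you would still need to map openai-kotlin’s `ToolCallStep.FunctionTool` entries into `PendingCall` (parsing the JSON arguments) and each `CallOutput` into a `toolOutput { }`. Since `submitToolOutput` in Figure 5 already takes a list, all outputs could then be submitted in one call.

```kotlin
// Hypothetical, library-independent stand-ins for a requested function call and its result.
data class PendingCall(val id: String, val name: String, val arguments: Map<String, String>)
data class CallOutput(val toolCallId: String, val output: String)

// Resolves every pending call, not just the first, returning one output per call id.
// Each handler receives already-parsed string arguments and returns the text sent to the model.
// Unknown function names and handler exceptions become error strings rather than crashes.
fun resolveCalls(
    calls: List<PendingCall>,
    handlers: Map<String, (Map<String, String>) -> String>
): List<CallOutput> =
    calls.map { call ->
        val handler = handlers[call.name]
        val output = if (handler == null) {
            "Error: function ${call.name} was not found."
        } else {
            try {
                handler(call.arguments)
            } catch (e: Exception) {
                "Error: ${call.name} failed: ${e.message}"
            }
        }
        CallOutput(call.id, output)
    }
```

Returning an error string instead of throwing mirrors the default `functionResponse` in Figure 5: every requested call still gets an output, so the run is not left waiting on a missing result.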

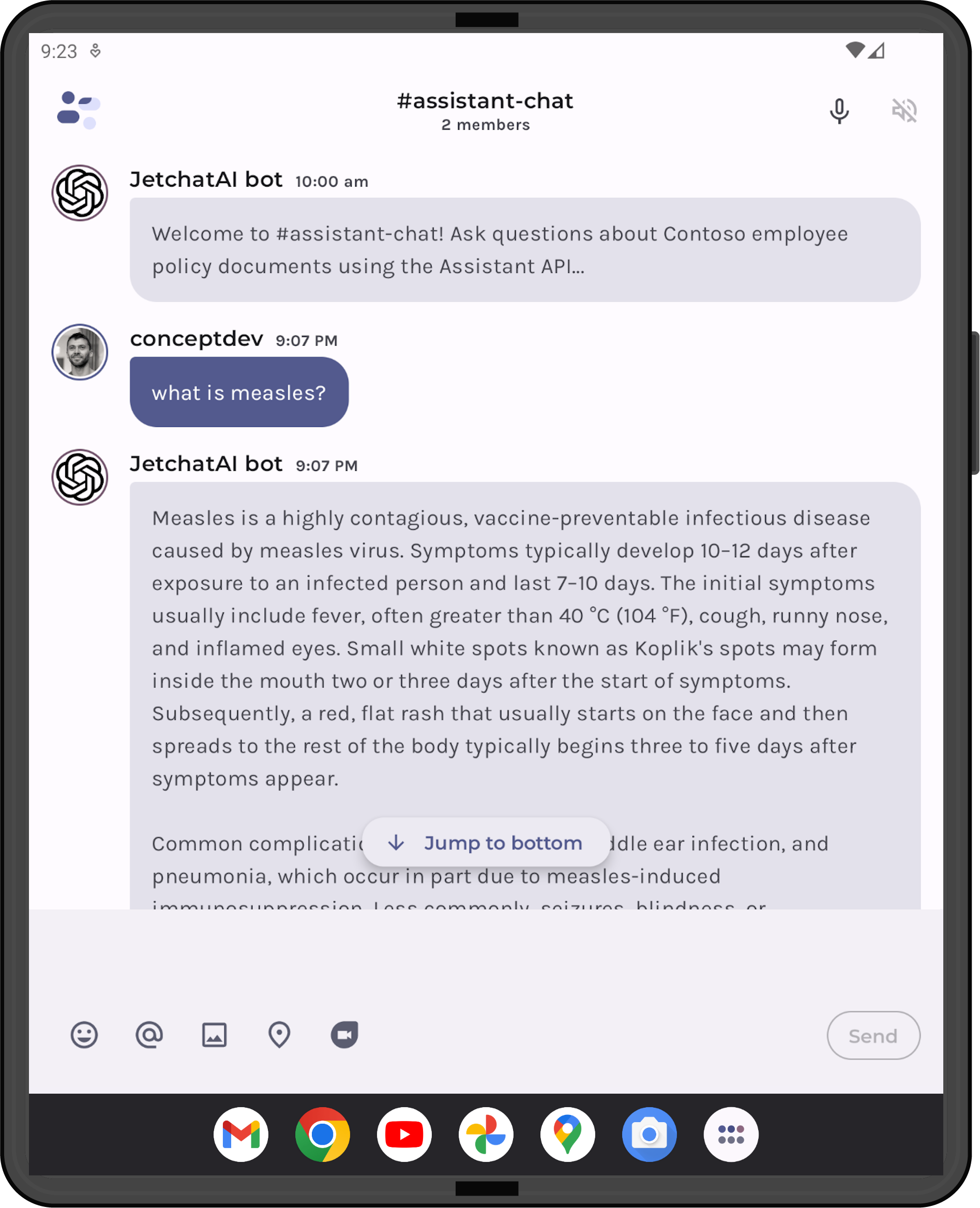

Here is a screenshot of JetchatAI for Android showing an assistant conversation using the askWikipedia function:

Figure 6: JetchatAI #assistant-chat showing a response generated by a function call

Feedback and resources

You can view the complete code for the assistant function call in this pull request for JetchatAI.

Refer to the OpenAI blog for more details on the Dev Day announcements, and the openai-kotlin repo for updates on support for the new features like the Assistant API.

We’d love your feedback on this post, including any tips or tricks you’ve learned from playing around with ChatGPT prompts.

If you have any thoughts or questions, use the feedback forum or message us on Twitter @surfaceduodev.
