OpenAI Assistant on Android

Hello prompt engineers,

This week we’re continuing to discuss the new Assistant API announced at OpenAI Dev Day. The official documentation explains how the API works and shows Python, JavaScript, and curl examples, but in this post we’ll implement it in Kotlin for Android with Jetpack Compose. You can review the code in this JetchatAI pull request.

OpenAI Assistants

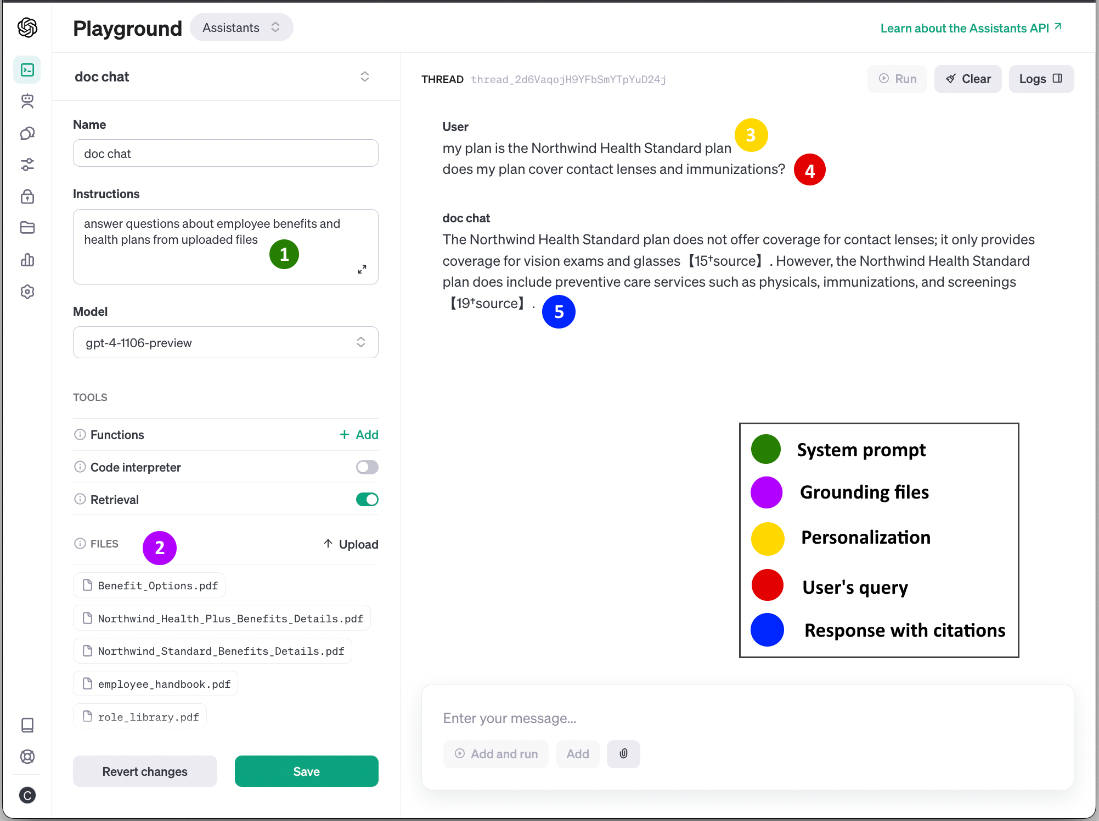

A few weeks ago, we demonstrated building a simple Assistant in the OpenAI Playground – uploading files, setting a system prompt, and performing RAG-assisted queries – mimicking this Azure demo. To refresh your memory, Figure 1 shows the Assistant configuration:

Figure 1: OpenAI Assistant Playground (key explained in this post)

We are going to reference this specific assistant, configured in the playground, from an Android app. After the assistant is created, a unique identifier is displayed under its name (see Figure 2); this identifier is how the API refers to the assistant.

Figure 2: Assistant unique identifier

Building in Kotlin

The openai-kotlin GitHub repo contains some basic instructions for accessing the Assistant API in Kotlin. This guidance has been adapted to work in JetchatAI, as shown in Figure 3. Comparing this file (AssistantWrapper.kt) with earlier examples (e.g., OpenAIWrapper.kt), you’ll notice it is significantly simpler! Using the Assistant API means:

- No need to track token usage and message history for a sliding window: the Assistant API automatically manages the model’s input size limit.

- No need to keep sending past messages with each request, since they are stored on the server. Each user query is added to a thread, which is then run against a configured assistant entirely on the server.

- No need to manually load or chunk document contents – we can upload documents and they will be chunked and stored on the server. Embeddings will automatically be generated.

- No need to generate an embedding for the user’s query or do the vector similarity comparisons in Kotlin. Retrieval-augmented generation (RAG) is performed by the Assistant API on the server.
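For contrast, the last point describes the kind of client-side retrieval code that the Assistant API now replaces. The following is a minimal sketch of that step: ranking pre-embedded document chunks by cosine similarity to a query embedding. The `Chunk` type, the `topK` helper, and the toy three-dimensional vectors are hypothetical stand-ins for real embeddings; this is an illustration of the technique, not the code from earlier JetchatAI samples.

```kotlin
import kotlin.math.sqrt

// Hypothetical chunk type: a piece of document text plus its (precomputed) embedding vector.
data class Chunk(val text: String, val embedding: List<Double>)

// Cosine similarity between two vectors of equal dimension (0.0 if either is all zeros).
fun cosineSimilarity(a: List<Double>, b: List<Double>): Double {
    require(a.size == b.size) { "vectors must have the same dimension" }
    val dot = a.zip(b).sumOf { (x, y) -> x * y }
    val normA = sqrt(a.sumOf { it * it })
    val normB = sqrt(b.sumOf { it * it })
    return if (normA == 0.0 || normB == 0.0) 0.0 else dot / (normA * normB)
}

// Return the k chunks most similar to the query embedding, most similar first.
fun topK(query: List<Double>, chunks: List<Chunk>, k: Int): List<Chunk> =
    chunks.sortedByDescending { cosineSimilarity(query, it.embedding) }.take(k)

fun main() {
    // Toy embeddings for illustration only; real embeddings have many more dimensions.
    val chunks = listOf(
        Chunk("vacation policy", listOf(1.0, 0.0, 0.0)),
        Chunk("health plan", listOf(0.0, 1.0, 0.0)),
        Chunk("dental plan", listOf(0.0, 0.9, 0.1)),
    )
    val query = listOf(0.0, 1.0, 0.05)
    println(topK(query, chunks, 2).map { it.text }) // prints [health plan, dental plan]
}
```

With the Assistant API, both the embeddings and this ranking happen on the server, so none of this code needs to ship in the app.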

Many lines of code over multiple files in older examples can be reduced to the chat function shown in Figure 3:

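```kotlin
// Imports assume the openai-kotlin beta Assistants API; package names may differ between library versions.
import com.aallam.openai.api.assistant.AssistantId
import com.aallam.openai.api.core.Role
import com.aallam.openai.api.core.Status
import com.aallam.openai.api.message.MessageContent
import com.aallam.openai.api.message.MessageRequest
import com.aallam.openai.api.run.RunRequest
import kotlinx.coroutines.delay

// `openAI`, `assistant`, and `thread` are properties of AssistantWrapper
suspend fun chat(message: String): String {
    if (assistant == null) { // open assistant and create a new thread every app-start
        assistant = openAI.assistant(id = AssistantId(Constants.OPENAI_ASSISTANT_ID)) // from the Playground
        thread = openAI.thread()
    }
    val threadId = thread!!.id

    // add the user's query to the server-side thread
    openAI.message(
        threadId = threadId,
        request = MessageRequest(
            role = Role.User,
            content = message
        )
    )

    // run the thread against the configured assistant
    val run = openAI.createRun(
        threadId = threadId,
        request = RunRequest(assistantId = assistant!!.id)
    )

    // poll once a second until the run is no longer queued or in progress,
    // so a failed, cancelled, or expired run can't loop forever
    var status: Status
    do {
        delay(1_000)
        status = openAI.getRun(threadId, run.id).status
    } while (status == Status.Queued || status == Status.InProgress)

    if (status != Status.Completed) return "<Assistant Error>" // TODO: error handling

    val messages = openAI.messages(threadId)
    val reply = messages.first() // bit of a hack, get the last one generated
    val messageContent = reply.content[0]
    if (messageContent is MessageContent.Text) {
        return messageContent.text.value
    }
    return "<Assistant Error>" // TODO: error handling
}
```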

Figure 3: new chat function that uses a configured Assistant (by ID) via the Kotlin API
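The polling loop in Figure 3 can be generalized into a reusable helper. This is a minimal sketch that assumes a hypothetical `RunState` enum mirroring the run status strings the Assistants API reports, and a hypothetical `pollRun` helper that stops on any non-pending state or after a fixed number of attempts. It is plain, blocking Kotlin so it runs anywhere; an Android version would more likely be a `suspend fun` that calls `delay` instead of taking a `sleep` parameter.

```kotlin
// Hypothetical mirror of the run states reported by the Assistants API.
enum class RunState(val apiValue: String) {
    QUEUED("queued"),
    IN_PROGRESS("in_progress"),
    REQUIRES_ACTION("requires_action"),
    CANCELLING("cancelling"),
    CANCELLED("cancelled"),
    FAILED("failed"),
    COMPLETED("completed"),
    EXPIRED("expired");

    // States where the app should simply keep waiting; anything else needs attention.
    val isPending: Boolean
        get() = this == QUEUED || this == IN_PROGRESS || this == CANCELLING
}

// Poll `fetchState` until the run leaves the pending states or maxAttempts is reached.
// Returns the first non-pending state, or null if the run was still pending at the limit.
fun pollRun(
    maxAttempts: Int,
    sleep: () -> Unit,
    fetchState: () -> RunState,
): RunState? {
    repeat(maxAttempts) {
        sleep()
        val state = fetchState()
        if (!state.isPending) return state
    }
    return null
}

fun main() {
    // Scripted states stand in for repeated getRun calls.
    val scripted = ArrayDeque(listOf(RunState.QUEUED, RunState.IN_PROGRESS, RunState.COMPLETED))
    val result = pollRun(maxAttempts = 10, sleep = { Thread.sleep(10) }, fetchState = { scripted.removeFirst() })
    println(result) // prints COMPLETED
}
```

Returning `null` on timeout keeps "still running" distinct from the terminal failure states, so the caller can decide whether to keep waiting or report an error.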

Assistants also support function calling, but this sample doesn’t demonstrate it.
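When an assistant has functions configured, a run can pause in the `requires_action` state until the app executes the requested functions and submits their outputs back to the run. The sketch below covers only the local dispatch step and does not call the openai-kotlin library. `ToolCall`, `ToolOutput`, and `FunctionRegistry` are hypothetical types, and it assumes the call arguments (which the API delivers as a JSON string) have already been parsed into a map.

```kotlin
// Hypothetical, already-parsed tool call requested by the assistant.
data class ToolCall(val id: String, val name: String, val arguments: Map<String, String>)

// Hypothetical output record, paired with the id of the tool call it answers.
data class ToolOutput(val toolCallId: String, val output: String)

// Maps function names (as declared on the assistant) to local Kotlin handlers.
class FunctionRegistry {
    private val handlers = mutableMapOf<String, (Map<String, String>) -> String>()

    fun register(name: String, handler: (Map<String, String>) -> String) {
        handlers[name] = handler
    }

    // Run every requested call; an unknown function yields an error string instead of throwing,
    // so the assistant still receives an output for each call id.
    fun dispatch(calls: List<ToolCall>): List<ToolOutput> = calls.map { call ->
        val output = handlers[call.name]?.invoke(call.arguments)
            ?: "error: unknown function ${call.name}"
        ToolOutput(call.id, output)
    }
}

fun main() {
    val registry = FunctionRegistry()
    // Hypothetical function; a real app would look the value up in its own data.
    registry.register("lookupEmployee") { args -> "placeholder result for ${args["employee"]}" }
    val outputs = registry.dispatch(
        listOf(
            ToolCall("call_1", "lookupEmployee", mapOf("employee" to "Alice")),
            ToolCall("call_2", "unknownFn", emptyMap()),
        )
    )
    outputs.forEach(::println)
}
```

The resulting outputs would then be submitted to the paused run so the assistant can finish its response.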

Try it out

To access the new Assistant channel in JetchatAI, first choose #assistant-chat from the chat list:

Figure 4: Access the #assistant-chat channel in the Chats menu of JetchatAI

You can now ask questions about the content of the five source PDF documents that were uploaded in the playground. The documents relate to employee policies and health plans of the fictitious Contoso/Northwind organizations. Figure 5 shows two example queries and their responses:

Figure 5: user queries against the Assistant loaded with fictitious ‘employee policy’ PDFs as the data source

Next steps

The Assistant API is still in preview, so it could change before the official release. The current JetchatAI implementation needs more work on error handling, resolving citations, checking for multiple assistant responses, implementing functions, and more. The takeaway from this week is how much simpler the Assistant API is to use compared with implementing the Chat API directly.
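Checking for multiple assistant responses could replace the `messages.first()` shortcut in the chat function. Assuming messages are returned newest-first (the API’s default ordering, which is what makes `first()` return the latest reply), this minimal sketch collects every assistant message added since the most recent user message and returns them oldest-first. `ThreadMessage` and `latestAssistantReplies` are hypothetical names, with role and text simplified to plain strings.

```kotlin
// Hypothetical simplified message; the real API returns richer message objects.
data class ThreadMessage(val role: String, val text: String)

// Given messages ordered newest-first, return the text of every assistant message
// added after the most recent user message, in chronological (oldest-first) order.
fun latestAssistantReplies(newestFirst: List<ThreadMessage>): List<String> =
    newestFirst
        .takeWhile { it.role == "assistant" } // stop at the user's query
        .reversed()                           // restore chronological order
        .map { it.text }

fun main() {
    val messages = listOf(
        ThreadMessage("assistant", "second part of the answer"),
        ThreadMessage("assistant", "first part of the answer"),
        ThreadMessage("user", "What does the health plan cover?"),
        ThreadMessage("assistant", "an older reply"),
    )
    latestAssistantReplies(messages).forEach(::println)
}
```

The app could then join or display these replies in order rather than silently dropping any after the first.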

Resources and feedback

Refer to the OpenAI blog for more details on the Dev Day announcements, and the openai-kotlin repo for updates on support for the new features.

We’d love your feedback on this post, including any tips or tricks you’ve learned from playing around with ChatGPT prompts.

If you have any thoughts or questions, use the feedback forum or message us on Twitter @surfaceduodev.
