Following the //Build conference last year, I posted “Addressing Visual Studio Performance” to gather your feedback on Visual Studio performance and to discuss the areas you felt were most important for us to improve. I then posted “An update on Visual Studio Performance” about some of the work we were doing, and promised you a series of posts about performance as we prepared to ship the Visual Studio 11 Beta. We have now reached the point where I can start describing the significant investments we have made in Visual Studio performance, which I believe will make you very happy. In this post and the two that follow, I will cover three separate topics, detailing some of our performance work along with what our benchmarks indicate you can expect.

Here is a quick rundown of what I will be discussing over the next few weeks:

Virtual Memory Usage: Virtual memory (VM) usage is not the memory an application is actively using at a given moment (that is its working set) but the total amount of address space it has reserved. If VM usage grows too large, the process can run out of address space, which leads to instability. Later in this post I will walk you through where Visual Studio’s VM usage comes from and what we have done to reduce it.

Solution Load: Many of you told us that solutions, especially large ones, take too long to load. We have done a great deal of work to reduce solution load time so you can start working on your code more quickly, and I am very excited to share some of the details of how we improved it. Look for this post on 3/12/2012.

Debugging: We heard from you that the compile, edit and debug cycle was sluggish and had hiccups. We addressed many items in this area, but in that post I will focus on the changes we made to debugging. I think you will see some noticeable improvements, and I will detail some of the more interesting work we did to improve the debugging experience, especially for the new Windows 8 Metro style applications. Look for this post on 3/19/2012.

With the preamble out of the way, let’s start with a discussion of virtual memory use in Visual Studio.

Virtual Memory Usage

Visual Studio 2010 added many great new features, but all those features take up a lot of memory. Most developers don’t have to worry about virtual memory (VM) for their applications. With 2GB of address space, most 32 bit processes have more memory than they’ll ever need (32 bit processes marked as large address aware get 4GB on 64 bit Windows). However, Visual Studio was running out of address space, and some people were seeing crashes because of it. The scenario that consumed the most virtual memory was an end-to-end scenario that used 1.7GB, just shy of crashing, and that was before any 3rd party tools were loaded.

When we started the Visual Studio 11 project, we recognized that we needed to provide additional functionality and support Windows 8 development while at the same time using less memory! We analyzed a few options including a move to 64 bit. Moving to 64 bit would have given us more VM space, but we didn’t want to just provide more room and not clean house. You don’t buy a new larger house just because you have too much stuff cluttering it up – instead you get rid of what you don’t need. So, we got to work cleaning house.

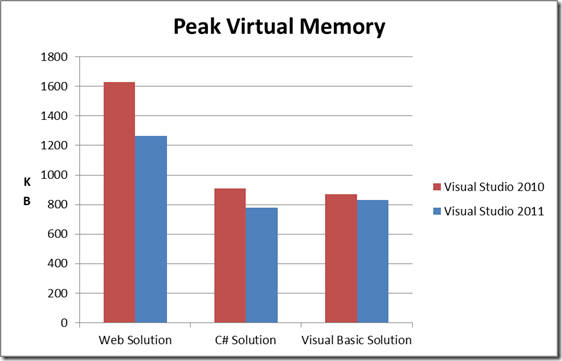

We focused primarily on the web development experience when looking at reducing the memory Visual Studio uses, for two reasons. First and foremost, our web solution consumed the largest amount of virtual memory. Second, a large number of VS users build web solutions, and because many of the operations involved in building a web solution are common to all solutions, we expected to ‘lower the tide’ for the other project systems as a side effect. We defined ‘End to End’ test cases (E2Es) for several project types (web and non-web) and used them to measure performance automatically every day. The following charts compare Visual Studio 2010 SP1 with Visual Studio 11 Beta. As you can see, peak VM usage was reduced by 22% for the Web solution, 15% for a C# solution and 4% for a Visual Basic solution. Please continue reading for a detailed description of how we accomplished these savings.

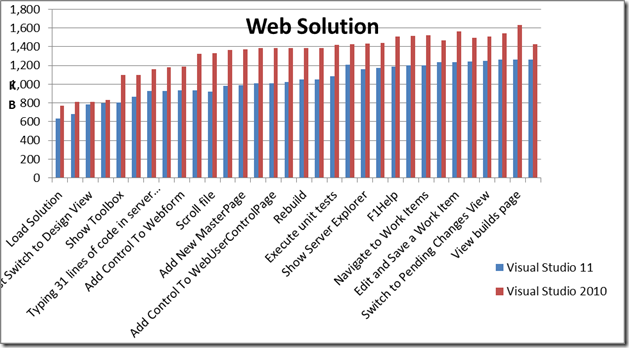

Now, let’s look at the web solution step by step to see where we allocate more memory. This pointed us at specific areas to investigate. Although this is a web project, many of the steps are common to any development scenario and to the projects you work on every day, and as our analysis in the first chart above shows, the improvements we drove with the web solution translated into wins across different scenarios.

We started by looking at how Visual Studio used memory as we ran through the E2E scenario. For our largest test case, 46% of the virtual memory was code loaded from disk (DLLs), 40% was heap and private memory, 5% was mapped files, 2% was stacks, and 7% came from other sources. Analysis of additional scenarios showed a similar breakdown.
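To make a breakdown like this concrete, here is a minimal sketch of how a snapshot of address-space regions might be grouped into those buckets. The `Region` record, the region type labels (loosely modeled on the categories the Sysinternals VMMap tool displays) and the `vm_breakdown` function are hypothetical; the sample sizes are invented so that the output mirrors the percentages above, and are not measurements from Visual Studio.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical record for one reserved address-space region in a snapshot.
@dataclass(frozen=True)
class Region:
    kind: str      # e.g. "Image", "Heap", "Private Data", "Mapped File", "Stack"
    size_kb: int   # reserved (virtual) size of the region

# Map raw region types onto the buckets used in the text; anything else is "Other".
BUCKETS = {
    "Image": "DLLs",
    "Heap": "Heap and private",
    "Private Data": "Heap and private",
    "Mapped File": "Mapped files",
    "Stack": "Stacks",
}

def vm_breakdown(regions):
    """Return {bucket: percent of total reserved VM}, rounded to one decimal place."""
    totals = defaultdict(int)
    for r in regions:
        totals[BUCKETS.get(r.kind, "Other")] += r.size_kb
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {}
    return {bucket: round(100 * kb / grand_total, 1) for bucket, kb in totals.items()}

if __name__ == "__main__":
    sample = [Region("Image", 460), Region("Heap", 250), Region("Private Data", 150),
              Region("Mapped File", 50), Region("Stack", 20), Region("Shareable", 70)]
    print(vm_breakdown(sample))
```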

The Biggest VM Consumer – DLLs

With nearly half the memory going to DLLs, we decided to target DLLs first. This was beneficial for a number of reasons. Not only was it the biggest memory bucket, but we knew there would be a number of collateral wins. By delaying loading code until it was really needed (if ever), we would eliminate the disk I/O (and time) needed to load the code and the time to run its initialization code, and we would recover the memory used by any data structures that initialization created. Perhaps most importantly, we knew that we could file very actionable bugs for developers to address.
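In Python, the closest analogue to not loading a DLL until a feature is invoked is deferring an expensive import or initialization until first use. The following is a generic lazy-initialization sketch under that assumption; `Lazy` and its loader callback are hypothetical names, and this is not how Visual Studio itself implements deferred loading.

```python
import threading
from typing import Callable, Generic, Optional, TypeVar

T = TypeVar("T")

class Lazy(Generic[T]):
    """Defers an expensive load/initialization until the first call to get()."""

    def __init__(self, loader: Callable[[], T]):
        self._loader = loader          # the expensive work (load code, build data structures)
        self._value: Optional[T] = None
        self._loaded = False
        self._lock = threading.Lock()

    @property
    def loaded(self) -> bool:
        return self._loaded

    def get(self) -> T:
        # Double-checked locking so concurrent callers run the loader at most once.
        if not self._loaded:
            with self._lock:
                if not self._loaded:
                    self._value = self._loader()
                    self._loaded = True
        return self._value  # type: ignore[return-value]
```

If the feature is never used, `get()` is never called and the loader's I/O, initialization time and memory are never spent.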

To do this, we created tools that take VMMap snapshots of Visual Studio’s memory usage while running our large E2E scenarios, and then consolidate them to show which DLLs were loaded at each step and how much VM and working set (WS) each DLL took up at that step.
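The consolidation step can be sketched as follows, assuming each snapshot has already been reduced to a map from DLL name to its VM and WS at one scenario step. The snapshot format and the `consolidate` function are hypothetical; this only illustrates the "which DLLs appeared at which step, and what did they cost" view described above.

```python
from typing import Dict, List, Tuple

# Hypothetical snapshot format: DLL name -> (VM in KB, working set in KB) at one step.
Snapshot = Dict[str, Tuple[int, int]]

def consolidate(steps: List[Tuple[str, Snapshot]]) -> List[Tuple[str, List[str], int, int]]:
    """For each scenario step, report the DLLs first seen at that step and the VM/WS they add.

    DLL names are compared case-insensitively, as Windows file names are. This simple
    version ignores unloads: a DLL that is unloaded and loaded again is not reported twice.
    """
    report = []
    seen = set()
    for step_name, snapshot in steps:
        new = {name: usage for name, usage in snapshot.items() if name.lower() not in seen}
        seen.update(name.lower() for name in snapshot)
        added_vm = sum(vm for vm, _ in new.values())
        added_ws = sum(ws for _, ws in new.values())
        report.append((step_name, sorted(new), added_vm, added_ws))
    return report
```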

We then started hunting through the nearly 800 DLLs that VS loads, looking for signs of excess memory usage. With this data in hand, feature teams considered a number of options:

- Move work that only needs to be done once into the phase where VS creates your user profile settings. When you run VS for the first time and choose your profile, VS actually launches a separate process to do a bunch of one-time work, so that the VM consumed by these one-time operations is released when that process exits. See below for an interesting aside on what we learned about Visual Studio’s behavior as a result.

- Eliminate cases where a DLL was being loaded but never used. For example, loading F# project system support for a C# project.

- Don’t load code and/or initialize a feature until the user actually invokes the feature. For example, don’t fully load and initialize an add-in until the user wants to use it.

- Redesign the feature to run in its own process.

- Redesign the feature to run in a separate App Domain so the VM is reclaimed when the work is complete.

- Refactor DLLs so commonly used code lives in one DLL and less commonly used code lives in another. We identified candidate DLLs by comparing the WS used by each DLL with the VM it used. A low ratio of WS to VM indicated that a lot of code was being loaded but relatively little of it was being used. When doing this analysis we accounted for how the OS allocates WS and VM: WS is allocated in 4K pages, while VM is reserved in 64K blocks.

- Ensure that only one copy of a DLL (or mapped file) was loaded. The operating system handles this kind of double load well and keeps only one copy in physical memory, so WS stays low, but each copy is mapped into its own range of the address space, so every copy consumes VM.

- For managed code, eliminate cases where both the IL and the NGEN code for the same assembly were loaded.
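Two of the checks in this list, the WS/VM ratio used to find refactoring candidates and the detection of duplicate loads, can be sketched as below. The `LoadedImage` record, the function names and the 0.25 threshold are hypothetical choices for illustration; rounding VM up to 64K and WS up to 4K is one plausible way to account for the allocation units mentioned above.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Iterable, List

PAGE_SIZE = 4 * 1024                  # working set is tracked in 4K pages
ALLOCATION_GRANULARITY = 64 * 1024    # address space is reserved in 64K blocks

def round_up(n: int, unit: int) -> int:
    """Round n up to the next multiple of unit."""
    return -(-n // unit) * unit

@dataclass(frozen=True)
class LoadedImage:
    name: str       # DLL file name
    path: str       # full path the DLL was loaded from
    vm_bytes: int   # address space the image occupies
    ws_bytes: int   # bytes of the image resident in the working set

def ws_vm_ratio(img: LoadedImage) -> float:
    """WS/VM ratio after rounding each figure to the unit the OS allocates in."""
    vm = round_up(img.vm_bytes, ALLOCATION_GRANULARITY)
    ws = round_up(img.ws_bytes, PAGE_SIZE)
    return ws / vm if vm else 0.0

def refactor_candidates(images: Iterable[LoadedImage], threshold: float = 0.25) -> List[str]:
    """DLLs with a low WS/VM ratio: lots of code mapped, little of it touched.
    The default threshold is an arbitrary illustrative value."""
    return sorted(img.name for img in images if ws_vm_ratio(img) < threshold)

def duplicate_loads(images: Iterable[LoadedImage]) -> List[str]:
    """DLL names mapped more than once (for example from two paths); every copy costs VM."""
    counts = Counter(img.name.lower() for img in images)
    return sorted(name for name, n in counts.items() if n > 1)
```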

In cases where we wanted to eliminate the load of a DLL or refactor a DLL, we needed to know why these DLLs were getting loaded. For this, we used Event Tracing for Windows (ETW) to collect DLL load events with call stacks. By filtering on the DLL name we were able to see every place the DLL was loaded (and unloaded) and quickly determine what was causing it to load. (You can do this kind of analysis too: just download the free PerfView tool, collect an ETW trace, look at the Image Load Stacks, and set an include filter for the DLL you’re interested in.)
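The "filter on the DLL name, then look at the stacks" idea can be sketched in a few lines, assuming the image load events have already been exported with their call stacks. The `ImageLoadEvent` record, the `load_causes` function and the loader frame names are hypothetical and illustrative; PerfView does this interactively, and this is not its API.

```python
import ntpath
from collections import Counter
from dataclasses import dataclass
from typing import Iterable, List, Tuple

# Illustrative names of OS loader frames to skip when looking for the real caller.
LOADER_FRAMES = {"ntdll!LdrLoadDll", "KernelBase!LoadLibraryExW"}

@dataclass(frozen=True)
class ImageLoadEvent:
    image_path: str           # full path of the DLL that was loaded
    stack: Tuple[str, ...]    # call stack as "module!function", innermost frame first

def triggering_frame(stack: Tuple[str, ...]) -> str:
    """First frame outside the OS loader, i.e. the code that asked for the DLL."""
    for frame in stack:
        if frame not in LOADER_FRAMES:
            return frame
    return "<unknown>"

def load_causes(events: Iterable[ImageLoadEvent], dll_name: str) -> List[Tuple[str, int]]:
    """Include filter on one DLL file name, then count loads per triggering frame."""
    target = dll_name.lower()
    causes = Counter(
        triggering_frame(ev.stack)
        for ev in events
        if ntpath.basename(ev.image_path).lower() == target
    )
    return causes.most_common()
```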

We repeated this process about three times during the product cycle in order to confirm improvements, identify additional opportunities and make sure new features didn’t cause regressions.

The Second Biggest Consumer – Heap and Private Memory

One of the next things we did was to consolidate the native heaps that were used by various components in VS.

To understand how Heap and Private Memory were being used, we developed a component that was injected into a process when it started and intercepted all memory APIs, including VirtualAlloc calls, heap use and file loads. For managed code, the tool monitored CLR object creation, class loading, JITting and GCs. Each call/event was tagged with information including:

- A sequence number for chronology (1,2,3…)

- Size

- Address

- Thread Id

- Full mixed managed/native call stack to show what code ran to cause the allocation

- Additional information like the CLR Class and VirtualAlloc parameters.

The API that frees each allocation was also monitored, and the tag was discarded when the allocation was freed. Since managed objects are not explicitly freed, the tool tracked them through garbage collections, recording how many GCs a particular object survived. A monitoring system like this can slow down the application it monitors, so the component had to be fast.
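Python cannot intercept `VirtualAlloc` or native heap calls, but the bookkeeping side of such a monitor can be sketched. In this sketch `on_alloc` and `on_free` are hypothetical callbacks that a real hook would invoke; the tracker keeps one tag per live allocation (sequence number, size, address, thread id, stack) and drops the tag when the allocation is freed. Managed object tracking through GCs is not modeled.

```python
import itertools
import threading
import traceback
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

@dataclass
class AllocationTag:
    seq: int                  # sequence number for chronology (1, 2, 3, ...)
    size: int
    address: int
    thread_id: int
    stack: Tuple[str, ...]    # call stack that led to the allocation

class AllocationTracker:
    """Keeps a tag for every live allocation; freeing an allocation discards its tag."""

    def __init__(self) -> None:
        self._seq = itertools.count(1)
        self._live: Dict[int, AllocationTag] = {}
        self._lock = threading.Lock()

    def on_alloc(self, address: int, size: int,
                 stack: Optional[Tuple[str, ...]] = None) -> AllocationTag:
        if stack is None:
            # Capture the caller's stack, dropping this function's own frame.
            stack = tuple(traceback.format_stack(limit=8)[:-1])
        with self._lock:
            tag = AllocationTag(next(self._seq), size, address, threading.get_ident(), stack)
            self._live[address] = tag
        return tag

    def on_free(self, address: int) -> None:
        with self._lock:
            self._live.pop(address, None)

    def snapshot(self) -> List[AllocationTag]:
        """Live allocations in chronological order."""
        with self._lock:
            return sorted(self._live.values(), key=lambda t: t.seq)
```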

We also implemented a UI that communicates with the monitoring component (running inside the Visual Studio process) via named pipes, shared memory and events. The UI freezes the VS process and then takes memory snapshots. It automates common ways of viewing the data so that common problems can be identified quickly. For example:

- Allocating many copies of exactly the same data

- Wasteful allocations (4K of zeros, perhaps at the end of a 20K allocation, is suspicious)

- Chronology based allocations for a particular scenario (IntelliSense consumed what?)

- Managed object reference graph (how much memory does this object really cost including its references?)

- Unused members (of these 1000 instances of a (managed or native) class of size 30 bytes, those bytes at offset range 10-18 are always zero)

- 0 byte allocations (yes, these are allowed, but add overhead and cause heap fragmentation)

- Empty or inefficiently used heaps or containers (1000 empty Dictionary<string,string>)

- Heap fragmentation, efficiency (this heap is consuming 100K of virtual memory, but has only 10K of allocations in it)

- Analyze the loaded DLLs to find:

  - Multiple DLL loads

  - DLL resource duplication (identical icons?)

  - Empty DLL sections (consecutive zeros)
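A few of these automated views can be sketched over a snapshot of live allocations, assuming the snapshot is a hypothetical map from address to the allocation's contents. The function names and the 4K trailing-zero threshold are illustrative assumptions, not the actual tool.

```python
from collections import defaultdict
from typing import Dict, List

def duplicate_blocks(allocs: Dict[int, bytes], min_copies: int = 2) -> List[List[int]]:
    """Groups of addresses whose allocations hold exactly the same bytes."""
    groups: Dict[bytes, List[int]] = defaultdict(list)
    for addr, data in allocs.items():
        if data:  # zero-byte allocations are reported separately
            groups[data].append(addr)
    return [sorted(addrs) for addrs in groups.values() if len(addrs) >= min_copies]

def trailing_zero_waste(allocs: Dict[int, bytes], min_waste: int = 4096) -> Dict[int, int]:
    """Allocations ending in at least min_waste zero bytes, mapped to the zero count."""
    result = {}
    for addr, data in allocs.items():
        waste = len(data) - len(data.rstrip(b"\x00"))
        if waste >= min_waste:
            result[addr] = waste
    return result

def zero_byte_allocations(allocs: Dict[int, bytes]) -> List[int]:
    """Allowed, but each one still costs heap overhead and adds fragmentation."""
    return sorted(addr for addr, data in allocs.items() if len(data) == 0)

def heap_efficiency(reserved_bytes: int, allocs: Dict[int, bytes]) -> float:
    """Fraction of a heap's reserved VM that actually holds live allocations."""
    used = sum(len(d) for d in allocs.values())
    return used / reserved_bytes if reserved_bytes else 0.0
```

For a heap reserving 100K of VM that holds only 10K of allocations, `heap_efficiency` reports 0.1.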

The tool enabled us to understand how memory was being used and which components were causing the allocations. We were able to identify the line of code causing each allocation as well as the call stack that led to it. We identified hundreds of opportunities and were able to open “shovel ready” bugs for feature teams to fix.

The Result

The net effect of all this work was roughly 300 fewer DLLs loaded and nearly 400MB of VM savings when loading a Web solution in Visual Studio. This improved reliability, performance and scalability, not just for Web solutions but across many different scenarios and usage patterns.

The interesting aside…

When we started analyzing the VM usage data, we noticed that if we ran the test case twice in a row, the first run used approximately 400MB more VM than the second. We had been unaware of this behavior because our automated performance test system runs tests multiple times and drops outliers before calculating the results. The extra 400MB showed up only in the first run, so it was a clear outlier and was consistently removed from our analysis by our automation system. Fortunately, we had upgraded our system to make it easier to look at the raw data points, and this caught our attention. We did the same type of analysis outlined above and discovered that first-time initialization of the Managed Extensibility Framework (MEF) cache was loading every DLL in the system into memory and not releasing them. We were already using a separate app domain for loading the DLLs; however, it was set up to load assemblies as shared (domain-neutral), which caused any NGEN DLLs it loaded to stay in memory. By setting LoaderOptimization to SingleDomain we eliminated the sharing, and all the DLLs were unloaded when we shut down the application domain, giving back all 400MB of memory. We also found a few other places where this type of global initialization was occurring and were able to apply similar fixes.
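The way outlier trimming can hide a cost that only the first run pays is easy to reproduce. The post does not say which outlier rule the automation used, so this sketch assumes a median-absolute-deviation filter as a stand-in; `drop_outliers`, `first_run_penalty` and the peak VM samples (in MB) are hypothetical.

```python
from statistics import median
from typing import List, Sequence

def drop_outliers(samples: Sequence[float], k: float = 3.0) -> List[float]:
    """Keep samples within k * MAD of the median (an assumed, commonly used robust rule)."""
    med = median(samples)
    mad = median(abs(s - med) for s in samples)
    return [s for s in samples if abs(s - med) <= k * mad]

def first_run_penalty(samples: Sequence[float]) -> float:
    """How much the first run exceeds the median of the remaining runs."""
    return samples[0] - median(samples[1:])

if __name__ == "__main__":
    peak_vm_mb = [1900, 1502, 1498, 1505, 1500]   # invented: run 1 pays a one-time cost
    print(drop_outliers(peak_vm_mb))              # the first run is silently discarded
    print(first_run_penalty(peak_vm_mb))          # looking at raw data exposes it
```

Reporting the first-run penalty alongside the trimmed result is one way to keep a one-time cost like the MEF cache initialization visible.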

Coming Up Next

In the next two posts I will cover Solution Load and Debugging. I believe you will see performance improvements across the product, and I am excited for you to experience the next version of Visual Studio. As always, please let me know where you feel we still need to improve, and also where you see noticeable performance improvements. I appreciate your continued support of Visual Studio.

Thanks,

Larry Sullivan, Director of Engineering

Larry Sullivan – Director of Engineering, Microsoft Developer Tools Division

Short Bio: Larry has been at Microsoft for almost 20 years and has worked on a wide variety of technologies and products. Larry has spent the last several years focused on great engineering and fundamentals like performance and reliability. He and his team provide the engineering system for the Developer Tools division and provide direction for a number of engineering fundamentals for Visual Studio including performance.
