Hello prompt engineers,

OpenAI held its first Dev Day on November 6th, with a number of new product announcements including GPT-4 Turbo with 128K context, function calling updates, JSON mode, improvements to GPT-3.5 Turbo, the Assistants API, DALL·E 3, text-to-speech, and more. This post focuses on the Assistants API because it greatly simplifies many of the challenges we’ve been addressing in the JetchatAI Android sample app.

Assistants

The Assistants overview explains the key features of the new API and how to implement an example in Python. In today’s blog post we’ll compare the new API to the Chat API we’ve been using in our Android JetchatAI sample, and specifically to the #document-chat demo that we built over the past few weeks.

Big differences between the Assistants API and the Chat API:

- Stateful – the history is stored on the server, and the client app just needs to track the thread identifier and send new messages.

- Sliding window – automatic management of the model’s context window.

- Doc upload and embedding – can be easily seeded with a knowledge base, which is automatically chunked and embedded.

- Code interpreter – Python code can be generated and run to answer questions.

- Functions – supported just like the Chat API.
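
To make the first two differences concrete, here is a minimal Kotlin sketch of what the client has to hold in memory under each approach. It makes no network calls, and every type in it (`ChatMessage`, `ChatApiConversation`, `AssistantConversation`) is a hypothetical stand-in for illustration rather than part of any OpenAI SDK. The message-count limit is also a simplification, since a real sliding window would count tokens.

```kotlin
// Hypothetical types for illustration only; not part of any OpenAI SDK.
data class ChatMessage(val role: String, val content: String)

/** Chat API style: the client owns the history and must trim it to fit the context window. */
class ChatApiConversation(private val maxMessages: Int) {
    private val history = mutableListOf<ChatMessage>()

    /** Returns every message that would be sent with this request. */
    fun buildRequest(userText: String): List<ChatMessage> {
        history += ChatMessage("user", userText)
        // Naive sliding window: drop the oldest messages once over the limit.
        while (history.size > maxMessages) history.removeAt(0)
        return history.toList()
    }

    /** The client also has to remember each reply for the next request. */
    fun recordReply(text: String) {
        history += ChatMessage("assistant", text)
    }
}

/** Assistants API style: the server stores the thread, so the client keeps only its id. */
class AssistantConversation(val threadId: String) {
    /** Returns only the new message; earlier turns live on the server. */
    fun buildRequest(userText: String): List<ChatMessage> =
        listOf(ChatMessage("user", userText))
}

fun main() {
    val chat = ChatApiConversation(maxMessages = 4)
    chat.buildRequest("What plans are available?")
    chat.recordReply("Here is a summary of the available plans.")
    val chatPayload = chat.buildRequest("Does my plan cover contact lenses?")
    println("Chat API request carries ${chatPayload.size} messages")

    val assistant = AssistantConversation(threadId = "thread_abc123") // placeholder id
    val assistantPayload = assistant.buildRequest("Does my plan cover contact lenses?")
    println("Assistants request carries ${assistantPayload.size} message for ${assistant.threadId}")
}
```

The Chat API payload grows with every turn until the client trims it, while the Assistants payload stays at one new message per turn.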

Revisiting the #document-chat…

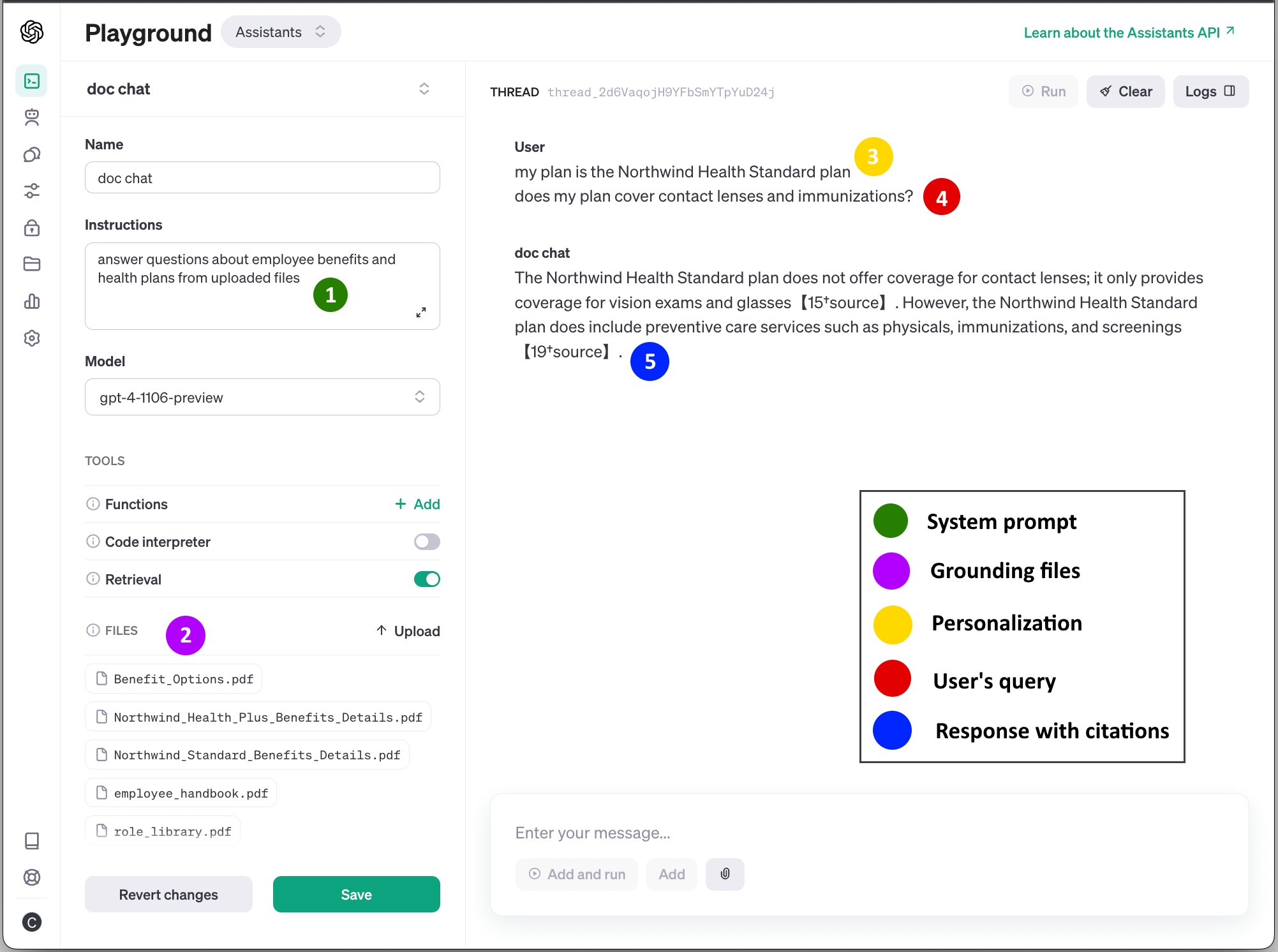

The last two posts have been about building a basic document chat similar to this Azure OpenAI sample, using a lot of custom Kotlin code. OpenAI Assistants now make this trivial to build, as shown in Figure 1 below. This example shows how to prototype an Assistant in the Playground that can answer questions based on documentation sources.

You can see the result is very similar to the responses from the previous blog post:

Figure 1: OpenAI Assistant playground, with the sample PDFs uploaded and supporting typical user queries

Each element in the playground in Figure 1 is explained below:

- The Assistant Instructions are like the system prompt.

- Enable Retrieval on the assistant and upload the source documents (in our case, the fictitious Contoso PDFs).

- Add personalization information to augment the query, e.g. “my plan is the Northwind Health Standard plan”. An app implementation would add this automatically rather than requiring the user to enter it every time.

- The User query is “does my plan cover contact lenses and immunizations”.

- The result from the model is grounded in the uploaded documents, and includes citations to the relevant information source.
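
The playground steps above map roughly onto a handful of REST calls. The sketch below only builds the request descriptions and does not send anything. The endpoint paths and field names (`instructions`, `tools` with type `retrieval`, `file_ids`, `assistant_id`) reflect our reading of the beta documentation at the time of writing and may change. `ApiCall`, `toJson`, the instruction text, and all ids are hypothetical placeholders.

```kotlin
// Hypothetical helper type; only the paths and JSON field names are taken from
// the beta REST docs as understood at the time of writing (assumption: may change).
data class ApiCall(val method: String, val path: String, val body: String)

/** Tiny JSON encoder for this sketch: handles strings, numbers, booleans, lists and maps. */
fun toJson(value: Any?): String = when (value) {
    null -> "null"
    is String -> "\"" + value.replace("\\", "\\\\").replace("\"", "\\\"").replace("\n", "\\n") + "\""
    is Number, is Boolean -> value.toString()
    is Map<*, *> -> value.entries.joinToString(",", "{", "}") { e ->
        toJson(e.key.toString()) + ":" + toJson(e.value)
    }
    is List<*> -> value.joinToString(",", "[", "]") { toJson(it) }
    else -> toJson(value.toString())
}

/** Instructions act like the system prompt; Retrieval is enabled as a tool with uploaded files. */
fun createAssistant(instructions: String, model: String, fileIds: List<String>) = ApiCall(
    "POST", "/v1/assistants",
    toJson(mapOf(
        "instructions" to instructions,
        "model" to model,
        "tools" to listOf(mapOf("type" to "retrieval")),
        "file_ids" to fileIds
    ))
)

/** The app prepends the personalization text so the user does not have to type it. */
fun addUserMessage(threadId: String, personalization: String, query: String) = ApiCall(
    "POST", "/v1/threads/$threadId/messages",
    toJson(mapOf("role" to "user", "content" to "$personalization\n$query"))
)

/** Starting a run asks the assistant to respond to the thread. */
fun startRun(threadId: String, assistantId: String) = ApiCall(
    "POST", "/v1/threads/$threadId/runs",
    toJson(mapOf("assistant_id" to assistantId))
)

fun main() {
    val calls = listOf(
        createAssistant("Answer using the uploaded plan documents.", "gpt-4-1106-preview", listOf("file-abc123")),
        addUserMessage("thread_abc123", "my plan is the Northwind Health Standard plan",
            "does my plan cover contact lenses and immunizations"),
        startRun("thread_abc123", "asst_abc123")
    )
    calls.forEach { println("${it.method} ${it.path}\n  ${it.body}") }
}
```

A real client would also create the thread, send an authorization header plus the beta opt-in header, and poll the run until it completes before reading the reply.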

Comparing this example to the Chat Playground prototype from last week, you can see that the Assistants API is much simpler.

Coming to Kotlin

The Assistants API is still in beta, so expect changes before it’s ready for production use. Even so, the Kotlin code to implement an Assistant should be much simpler than the equivalent Chat API code. Rather than keeping track of the entire conversation, managing the context window, chunking the source data, and prompt-tweaking for RAG, in the future the code will only need to keep track of the thread identifier and exchange new queries and responses with the server. The citations can also be resolved programmatically when you implement the API (even though they appear as illegible model-generated strings in the playground).
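
As a rough idea of what resolving citations could look like, the sketch below replaces each citation marker in a reply with a numbered footnote. It assumes each annotation carries the marker text found in the message, a file id, and a quoted passage, which is our understanding of the beta's file citation annotations. The `FileCitation` and `ResolvedMessage` types, the file name lookup map, and the sample data are all hypothetical.

```kotlin
// Hypothetical model of one annotation; fields mirror our reading of the beta
// docs' file citation shape (marker text, file id, quoted passage).
data class FileCitation(val text: String, val fileId: String, val quote: String)

data class ResolvedMessage(val text: String, val footnotes: List<String>)

/**
 * Replaces each citation marker in the reply with a numbered footnote marker
 * and collects one footnote per annotation.
 */
fun resolveCitations(
    rawText: String,
    annotations: List<FileCitation>,
    fileNames: Map<String, String> // file id -> display name, looked up separately
): ResolvedMessage {
    var text = rawText
    val footnotes = mutableListOf<String>()
    annotations.forEachIndexed { index, annotation ->
        val marker = "[${index + 1}]"
        text = text.replace(annotation.text, " $marker")
        // Fall back to the raw file id when no display name is known.
        val source = fileNames[annotation.fileId] ?: annotation.fileId
        footnotes += "$marker \"${annotation.quote}\" ($source)"
    }
    return ResolvedMessage(text, footnotes)
}

fun main() {
    // Made-up reply and annotation, shaped like the playground's raw output.
    val reply = "Yes, contact lenses are covered【13†source】."
    val annotations = listOf(
        FileCitation(text = "【13†source】", fileId = "file-abc123", quote = "sample quoted passage")
    )
    val resolved = resolveCitations(reply, annotations, mapOf("file-abc123" to "plan-details.pdf"))
    println(resolved.text)
    resolved.footnotes.forEach(::println)
}
```

In a real app the file name map would be filled in by looking up each file id with the API, and the footnotes could be rendered as links in the chat UI.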

The OpenAI Kotlin open-source client library is currently implementing the Assistants API – see the progress in this pull request.

Resources and feedback

Refer to the OpenAI blog for more details on the Dev Day announcements, and the Assistants docs for more technical detail.

We’d love your feedback on this post, including any tips or tricks you’ve learned from playing around with ChatGPT prompts.

If you have any thoughts or questions, use the feedback forum or message us on Twitter @surfaceduodev.
