In this post, Premier Developer Consultant Julien Oudot teaches you how to ensure network connectivity when working with Windows Containers and Virtual Machine Scale Set in Azure.

When working with Windows Containers and a Virtual Machine Scale Set (VMSS) in Azure, a few steps are needed to make sure network connectivity works as expected.

If you come from an on-premises, VM-oriented background, these platform-specific operations are not always as obvious as a ping. This two-part article summarizes a few things to know when testing connectivity in different configurations:

- From outside Azure, test the connectivity to the Windows nodes that are part of a VMSS.

- When remotely connected to a node of a Virtual Machine Scale Set, test the connectivity with other nodes in the same subnet.

- When running a Windows Container on one of your nodes, test the connectivity to this container from the node hosting it.

- When running Windows Containers on the nodes of your VMSS, test the connectivity to a container running on another node.

Part 1 of the article focuses on the first two configurations, while part 2 focuses on the last two, which involve containers.

As a common prerequisite, we have deployed a VM Scale Set in Azure composed of two Windows Server 2016 with Containers nodes. The DNS name created is vmss-blog-dn.eastus.cloudapp.azure.com, and here is the list of resources that Azure created automatically along with the VMSS.

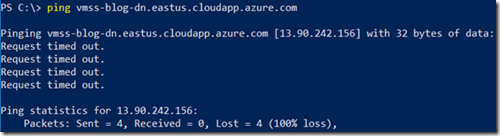

At this point, we might want to ping the nodes of the VMSS to make sure they are up and running. We might try to do this by pinging the DNS name we just created, as shown below.

However, as you can see in the list of deployed resources above, we have to go through the load balancer to reach the nodes of the VMSS. It turns out that the load balancer blocks the ICMP traffic that ping relies on.

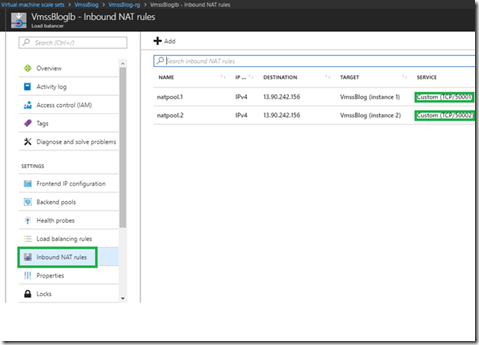

To target a particular node, we drill down into the load balancer's inbound NAT rules, as shown below, and check the port used to communicate with each node. In our example, these are ports 50001 and 50002.

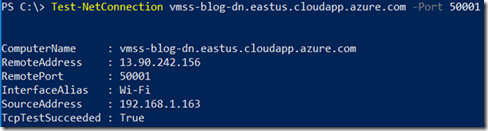
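The same port mapping can probably be read from the command line as well. The following is a minimal sketch, assuming the Az PowerShell module is installed and you are signed in with `Connect-AzAccount`. The resource group name `vmss-blog-rg` and the load balancer name `vmss-blog-lb` are hypothetical placeholders, not names from this deployment.

```powershell
# Assumption: Az PowerShell module installed and Connect-AzAccount already run.
# 'vmss-blog-rg' and 'vmss-blog-lb' are hypothetical names; substitute your own.
$lb = Get-AzLoadBalancer -ResourceGroupName 'vmss-blog-rg' -Name 'vmss-blog-lb'

# List each inbound NAT rule with its public (frontend) port and node (backend) port.
Get-AzLoadBalancerInboundNatRuleConfig -LoadBalancer $lb |
    Select-Object Name, FrontendPort, BackendPort
```

The `FrontendPort` values are the ones to use from outside Azure. Depending on how the scale set was created, the rules may instead be defined through an inbound NAT pool, so the output may differ from what the portal shows.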

Then you can use the Test-NetConnection PowerShell cmdlet to test the network connectivity with the nodes as follows.

For the second scenario, testing connectivity between nodes, we first need to RDP into the machines. We can do this from a regular Remote Desktop Connection client by using the same vmss-blog-dn.eastus.cloudapp.azure.com address along with ports 50001 and 50002.
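As a sketch of that check, the loop below tests both NAT ports from this example against the DNS name of the scale set. It assumes the ports map to a TCP service that is listening on the nodes (RDP here) and that no network security group blocks your client IP.

```powershell
# DNS name and NAT ports taken from the example in this article.
$fqdn = 'vmss-blog-dn.eastus.cloudapp.azure.com'

foreach ($port in 50001, 50002) {
    # Test-NetConnection attempts a TCP connection instead of relying on ICMP.
    $result = Test-NetConnection -ComputerName $fqdn -Port $port

    # TcpTestSucceeded is $true when the TCP connection could be established.
    '{0}:{1} reachable: {2}' -f $fqdn, $port, $result.TcpTestSucceeded
}
```

Because this check uses TCP rather than ICMP, it is not affected by the load balancer dropping ping traffic.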

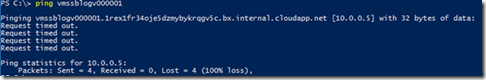

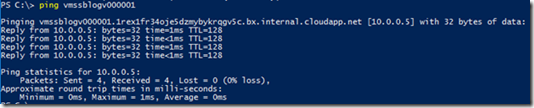
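If you prefer to start the Remote Desktop session from a prompt rather than from the client UI, a minimal sketch looks like this. It uses the `/v` switch of `mstsc` with the DNS name and first NAT port from this example.

```powershell
# Open a Remote Desktop session to the first node through NAT port 50001.
# Use 50002 instead to reach the second node.
mstsc /v:vmss-blog-dn.eastus.cloudapp.azure.com:50001
```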

Again, to test the connectivity between one node and another node of the VMSS in the same VNET and subnet, the first idea would be to use ping as shown below.

We can see that the private IP address 10.0.0.5 is targeted directly, which means that we don’t go through the load balancer. However, the filtering happens at another level. This time, it is the Windows Firewall on the server that blocks the ICMP traffic.

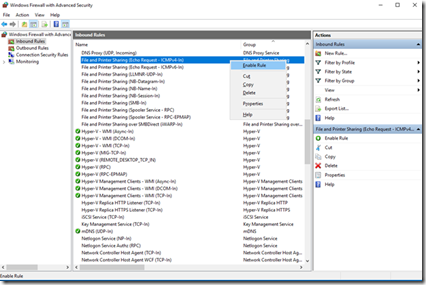

On each machine we want to ping, we need to open the Windows Firewall application.

Then enable the File and Printer Sharing (Echo Request - ICMPv4-In) inbound rule.

Once this is done, we should be able to use ping between machines of our internal network.
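The firewall change can also be scripted. The sketch below is run in an elevated PowerShell session and assumes the rule's display name matches the English-language default shown here. On a localized or customized image the name may differ.

```powershell
# Run in an elevated PowerShell session on the node that should answer pings.
# Assumption: the rule display name matches the English-language default.
Enable-NetFirewallRule -DisplayName 'File and Printer Sharing (Echo Request - ICMPv4-In)'

# Then, from another node in the same subnet, ping the private IP from this example.
ping 10.0.0.5
```

Scripting the rule is convenient when a scale set has many nodes, since the same command can be run on each one.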

This step concludes the first part of the article, which focused on reaching the nodes from outside Azure and on inter-node communication. In part 2, we will look at how to communicate with containers running on these nodes.
