The DirectStorage team is pleased to share that GPU decompression with DirectStorage 1.1 is available now. This new version of DirectStorage contains everything a developer needs to get started with GPU decompression. For more information on this feature and how it benefits gamers, check out our previous blog post: DirectStorage 1.1 Coming Soon.

Getting Started with DirectStorage 1.1

For all the necessary resources to get started, please check out aka.ms/directstorage. This includes the NuGet repository with the redistributable package, the GitHub repo with samples and documentation, as well as links to PIX on Windows.

A few tips:

- See below for our deep dive on how to use the new features.

- Check out README.md in the DirectStorage Samples repo (https://github.com/microsoft/DirectStorage) for additional detailed information on API usage patterns.

- Check out our two new samples: GpuDecompressionBenchmark and BulkLoadDemo.

- Update to the latest drivers before testing performance.

- Encourage players to install games on NVMe SSDs for the best experience.

- Start as early in the development process as possible to avoid needing to drastically reconfigure existing workloads.

What’s New:

- GPU decompression and GDeflate now available.

- Added EnqueueSetEvent to use Win32 event objects for completion notification.

- Performance improvements and bug fixes.

Optimized Drivers Available Now

GPU decompression is supported on all DirectX 12 + Shader Model 6.0 GPUs. However, one of the benefits of DirectStorage 1.1 is that GPU hardware vendors can provide additional optimizations for their hardware, called metacommands. For more information about support for these metacommands from our partners, see the links below. As always, we recommend updating to the latest drivers for your gaming hardware for the best performance.

AMD: https://gpuopen.com/amd-support-for-microsoft-directstorage-1-1

Intel: https://www.intel.com/content/www/us/en/developer/articles/news/directstorage-on-intel-gpus.html

Developer Deep Dive

Compressing Assets

The first step in making use of GDeflate decompression is to compress the data!

In DirectStorage all the data read by a single request is compressed in isolation. Most games will arrange their data such that a single file on disk contains multiple streams. Even if the game wants to use a single file for a texture, that is likely to contain multiple streams. For example, a single texture file may have:

- 1 stream containing metadata that will be read into system memory

- 1 stream containing MIP 0

- 1 stream containing the remaining MIPs

Rather than thinking in terms of compressing files, it is better to think about compressing streams. For this reason, the DirectStorage SDK does not ship with a standalone tool for compressing files. Instead, instances of the IDStorageCompressionCodec interface can be used to compress individual streams. Use DStorageCreateCompressionCodec with DSTORAGE_COMPRESSION_FORMAT_GDEFLATE to obtain an instance of the GDeflate codec, which can both compress and decompress streams. See the MiniArchive project in the BulkLoadDemo sample for an example of this in action.
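As a rough illustration of thinking in streams rather than files, the sketch below packs several streams into one archive, compressing each in isolation and recording where it landed. All names here (`NamedStream`, `StreamEntry`, `Archive`, `PackStreams`, `CompressFn`) are hypothetical and are not part of DirectStorage or the MiniArchive sample. `CompressFn` only stands in for whatever codec is used, such as a wrapper around the GDeflate codec.

```cpp
#include <cassert>
#include <cstdint>
#include <functional>
#include <string>
#include <vector>

using Bytes = std::vector<std::uint8_t>;

// Hypothetical stand-in for a real codec call (for example, a wrapper around
// the GDeflate codec). Takes one uncompressed stream, returns it compressed.
using CompressFn = std::function<Bytes(const Bytes&)>;

// One logical stream, e.g. "metadata", "MIP 0" or "remaining MIPs".
struct NamedStream {
    std::string name;
    Bytes data;
};

// Table-of-contents entry: where one compressed stream lives in the archive.
struct StreamEntry {
    std::string name;
    std::uint64_t offset;            // byte offset of the compressed stream
    std::uint64_t compressedSize;    // bytes to read from disk
    std::uint64_t uncompressedSize;  // bytes produced after decompression
};

struct Archive {
    std::vector<StreamEntry> toc;
    Bytes payload;  // all compressed streams, back to back
};

// Compresses every stream separately so that each one can later be read
// and decompressed by its own request.
inline Archive PackStreams(const std::vector<NamedStream>& streams,
                           const CompressFn& compress)
{
    assert(compress && "a codec callable is required");
    Archive archive;
    for (const NamedStream& stream : streams) {
        const Bytes compressed = compress(stream.data);
        archive.toc.push_back({stream.name, archive.payload.size(),
                               compressed.size(), stream.data.size()});
        archive.payload.insert(archive.payload.end(),
                               compressed.begin(), compressed.end());
    }
    return archive;
}
```

Each table-of-contents entry carries the offset, compressed size and uncompressed size of one stream, which is the kind of information a loader would need to issue one read request per stream.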

Compressing Assets on non-Windows platforms

Some developers may need to run their compression tools on non-Windows platforms. In order to enable this, we will be releasing the C++ source code to the GDeflate compressor and decompressor, and the HLSL reference implementation of the decompression. This code will be released under the Apache 2.0 license. We’re targeting the end of 2022; more details will be posted on this blog as they become available.

GDeflate Format

Knowledge of how GDeflate works can inform decisions about appropriate stream sizes. First, GDeflate splits the uncompressed stream into 64 KiB-sized tiles. Each tile is compressed separately. This provides the first level of parallelism – for CPU decompression, each tile can be decompressed by a different thread, and for GPU decompression, each tile can be decompressed by a different thread group. For the second level of parallelism, GDeflate arranges the data within a tile so that multiple lanes within a thread group can work in parallel on decompressing that tile.

A key takeaway from this is the 64 KiB tile size. If a choice has to be made about whether or not to split some data into multiple streams – for example, whether to use a DSTORAGE_REQUEST_DESTINATION_TEXTURE_REGION or a DSTORAGE_REQUEST_DESTINATION_MULTIPLE_SUBRESOURCES request – then understanding the tile size can help guide this decision.
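The tile arithmetic behind that decision can be sketched in a few lines. This is only a back-of-the-envelope model based on the 64 KiB tile size described above; the helper names `TileCount` and `AverageTileFill` are made up for illustration and do not exist in the DirectStorage API.

```cpp
#include <cassert>
#include <cstdint>

// Tile size from the text: each tile holds up to 64 KiB of uncompressed data.
constexpr std::uint64_t kTileSizeBytes = 64 * 1024;

// Number of tiles a stream of the given uncompressed size is split into.
constexpr std::uint64_t TileCount(std::uint64_t uncompressedBytes)
{
    return (uncompressedBytes + kTileSizeBytes - 1) / kTileSizeBytes;
}

// Average fraction of each tile that actually holds data. Values well below
// 1.0 mean the stream is too small to fill the tiles it occupies.
inline double AverageTileFill(std::uint64_t uncompressedBytes)
{
    assert(uncompressedBytes > 0);
    return static_cast<double>(uncompressedBytes) /
           static_cast<double>(TileCount(uncompressedBytes) * kTileSizeBytes);
}
```

Under this model, 256 KiB of data loaded as one stream occupies 4 full tiles, while the same data split into sixteen 16 KiB streams occupies 16 tiles that are each only a quarter full, because every stream is compressed and tiled in isolation.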

Decompression

When the CompressionFormat field on DSTORAGE_REQUEST_OPTIONS is set to DSTORAGE_COMPRESSION_FORMAT_GDEFLATE, DirectStorage will decompress the stream using GDeflate.

If the request is for system memory – REQUEST_DESTINATION_MEMORY – then the CPU will be used to decompress the stream. This is to avoid the extra overhead of transferring the data to the GPU and then reading it back to system memory.

The request is eligible for GPU decompression if the request’s destination is a D3D12 resource – i.e., it is one of:

- REQUEST_DESTINATION_BUFFER

- REQUEST_DESTINATION_TEXTURE_REGION

- REQUEST_DESTINATION_MULTIPLE_SUBRESOURCES

- REQUEST_DESTINATION_TILES

GPU Decompression

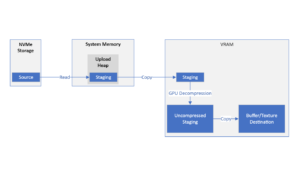

DirectStorage creates a compute queue and two copy queues. The decompression takes place on the compute queue – either by executing the decompression metacommand if available, or by dispatching the DirectCompute fallback implementation. If the GPU/driver is unable to use the DirectCompute fallback, then DirectStorage will decompress on the CPU. The first copy queue is used to copy the compressed stream from system memory to the GPU. The second copy queue is used to copy the decompressed stream to the final resource.

Decompression is performed in batches, where each Dispatch / metacommand execution operates on some number of streams. Recall that GDeflate splits each stream into tiles, where each tile decompresses to 64 KiB of data. The DirectCompute implementation has each wave work on one tile and uses a work-stealing scheme to allow the waves to work through all the tiles across all the streams. A heuristic is used to launch enough waves such that the GPU can decompress as quickly as possible. Note that the metacommand implementations are vendor-specific, but are also generally optimizing for high decompression bandwidth.

The amount of GPU decompression work given to the GPU can be controlled by the number of requests being made. This is like the way that games currently scale level of detail for rendering on different hardware configurations.

Due to the nature of this scheme, there’s a tail effect: as the batch runs out of tiles to decompress, the GPU starts to have less work to do. For this reason, the larger the batch, the smaller the tail effect. The size of each batch is affected by the speed of the IO device and the size of the staging buffer. Generally, the larger the staging buffer, the larger each batch can be.
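To get a feel for the tail effect, here is a deliberately simplified model. It assumes a fixed number of waves and that every tile takes the same time to decompress, which is not how a real GPU or the actual work-stealing scheme behaves. The function name `BatchUtilization` is hypothetical.

```cpp
#include <cassert>
#include <cstdint>

// Simplified model of the tail effect. Assumptions (not from DirectStorage):
//  - a fixed number of waves runs concurrently,
//  - every tile takes the same amount of time to decompress.
// The batch then needs ceil(tiles / waves) rounds, and only the final round
// can leave waves idle.
// Returns the fraction of wave time spent doing useful work, in (0, 1].
inline double BatchUtilization(std::uint64_t tiles, std::uint64_t waves)
{
    assert(waves > 0);
    if (tiles == 0) {
        return 0.0;  // nothing to do, so no useful work
    }
    const std::uint64_t rounds = (tiles + waves - 1) / waves;
    return static_cast<double>(tiles) / static_cast<double>(rounds * waves);
}
```

With 32 waves, a batch of 33 tiles needs two rounds and keeps the waves busy only about half the time, whereas a batch of 1025 tiles needs 33 rounds and wastes just part of the last one. That is the sense in which larger batches shrink the tail.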

The GpuDecompressionBenchmark sample can be used to demonstrate the results of different staging buffer sizes. We recommend that you experiment and measure the performance of different staging buffer sizes in the context of your game – staging buffer sizes of around 128 MiB are required to fully saturate the IO stack.

Controlling CPU Decompression

When DirectStorage performs GDeflate decompression on the CPU it uses its built-in decompression system. This uses a Windows threadpool to support multi-threaded CPU decompression. The NumBuiltInCpuDecompressionThreads field in DSTORAGE_CONFIGURATION can be used to control how many threads are assigned to this.

If a game wishes to have more control over exactly how CPU decompression is performed – perhaps integrating with its own job system – then DSTORAGE_DISABLE_BUILTIN_CPU_DECOMPRESSION can be specified for NumBuiltInCpuDecompressionThreads. When configured in this way, DirectStorage will no longer perform CPU decompression itself. Instead, the title can use the custom decompression queue with the DSTORAGE_GET_REQUEST_FLAG_SELECT_BUILTIN flag to collect decompression work. The game can use the same codec mentioned above to decompress the data, or it can plug in its own GDeflate decompression implementation if it wishes.

Forcing CPU Decompression

There may be some cases where GPU decompression isn’t desirable. To globally disable GPU decompression, set the DisableGpuDecompression field in DSTORAGE_CONFIGURATION to TRUE. Games may wish to provide this option to users as a performance setting, in case they are running on a system that has a significantly faster CPU than GPU.

DirectStorage 1.1 doesn’t have a way to force CPU decompression on a per-request or per-queue basis. Instead, custom decompression can be used. For example, a title might designate DSTORAGE_CUSTOM_COMPRESSION_0 to mean “CPU decompressed GDeflate” and then use the custom decompression queue to decompress on the CPU.
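Pulling the rules from this post together, the sketch below models where a request ends up being decompressed. It is one reading of the behavior described here, not DirectStorage's actual implementation. The enums, the `Settings` struct and `ChoosePath` are hypothetical names that only mirror the concepts above.

```cpp
#include <cassert>

// Hypothetical mirrors of the concepts in this post, not DirectStorage types.
enum class Format { None, GDeflate, Custom };
enum class Destination { Memory, Buffer, TextureRegion, MultipleSubresources, Tiles };
enum class Path { NoDecompression, Gpu, BuiltInCpu, CustomQueue };

struct Settings {
    // Models DisableGpuDecompression in DSTORAGE_CONFIGURATION.
    bool disableGpuDecompression = false;
    // false models DSTORAGE_DISABLE_BUILTIN_CPU_DECOMPRESSION.
    bool builtInCpuDecompression = true;
    // false models a GPU/driver that cannot run even the DirectCompute fallback.
    bool gpuCanDecompress = true;
};

// Every destination other than system memory is a D3D12 resource.
inline bool IsD3D12Resource(Destination destination)
{
    return destination != Destination::Memory;
}

inline Path ChoosePath(Format format, Destination destination, const Settings& settings)
{
    if (format == Format::None) {
        return Path::NoDecompression;
    }
    if (format == Format::Custom) {
        // Title-defined formats are always handed to the custom decompression queue.
        return Path::CustomQueue;
    }
    // GDeflate: the GPU is used only for D3D12 resource destinations.
    const bool useGpu = IsD3D12Resource(destination) &&
                        !settings.disableGpuDecompression &&
                        settings.gpuCanDecompress;
    if (useGpu) {
        return Path::Gpu;
    }
    // CPU path: built-in thread pool, unless the title asked to do the work itself.
    return settings.builtInCpuDecompression ? Path::BuiltInCpu : Path::CustomQueue;
}
```

Note how the only per-request lever for forcing CPU decompression in this model is the format: marking a stream with a custom format routes it to the custom queue regardless of its destination.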

Need help?

Questions on development? Have an implementation you want feedback on? Reach out to askwindstorage@microsoft.com.

Looking for community engagement? Check out the DX12 Discord Server.

Thanks for the informative and well-written blog. Noob question: does performance differ between NVMe SSDs connected through the chipset and those connected directly to the CPU?