Neural Networks Will Revolutionize Gaming

Earlier this month, Microsoft announced the availability of Windows Machine Learning. We mentioned the wide-ranging applications of WinML on areas as diverse as security, productivity, and the internet of things. We even showed how WinML can be used to help cameras detect faulty chips during hardware production.

But what does WinML mean for gamers? Gaming has always utilized and pushed adoption of bleeding edge technologies to create more beautiful and magical worlds. With innovations like WinML, which extensively use the GPU, it only makes sense to leverage that technology for gaming. We are ready to use this new technology to empower game developers to use machine learning to build the next generation of games.

Games Reflect Gamers

Every gamer that takes time to play has a different goal – some want to spend time with friends or to be the top competitor, and others are just looking to relax and enjoy a delightful story. Regardless of the reason, machine learning can provide customizability to help gamers have an experience more tailored to their desires than ever before. If a DNN model can be trained on a gamer’s style, it can improve games or the gaming environment by altering everything from difficulty level to avatar appearance to suit personal preferences. DNN models that are trained to adjust difficulty or add custom content can make games more fun as you play along. If your NPC companion is more work than they are worth, DNNs can help solve this issue by making them smarter and more adaptable as they understand your in-game habits in real time. If you’re someone who likes to find treasures in game but doesn’t care to engage in combat, DNNs could prioritize and amplify those activities while reducing the amount or difficulty of battles. When games can learn and transform along with the players, there is an opportunity to maximize fun and make games better reflect their players.

A great example of this is in EA SEED’s Imitation Learning with Concurrent Actions in 3D Games. Check out their blog and the video below for a deeper dive on how reinforcement and imitation learning models can contribute to gaming experiences.

Better Game Development Processes

There are so many vital components to making a game (art, animation, graphics, storytelling, QA, etc.) that can be improved or optimized by the introduction of neural networks. The tools that artists and engineers have at their disposal can make a massive difference to the quality and development cycle of a game, and neural networks are improving those tools. Artists should be able to focus on doing their best work: imagine if some of the more arduous parts of terrain design in an open world could be generated by a neural network with the same quality as a person doing it by hand. The artist would then be able to focus on making that world a more beautiful and interactive place to play, while in the end generating higher quality and quantity of content for gamers.

A real-world example of a game leveraging neural networks for tooling is Remedy’s Quantum Break. They began the facial animation process by training on a series of audio and facial inputs and developed a model that can move the face based just on new audio input. They reported that this tooling generated facial movement that was 80% of the way done, giving artists time to focus on perfecting the last 20% of facial animation. The time and money that studios could save with more tools like these could get passed down to gamers in the form of earlier release dates, more beautiful games, or more content to play.

Unity has introduced the Unity ML-Agents framework which allows game developers to start experimenting with neural networks in their game right away. By providing an ML-ready game engine, Unity has ensured that developers can start making their games more intelligent with minimal overhead.

Improved Visual Quality

We couldn’t write a graphics blog without calling out how DNNs can help improve the visual quality and performance of games. Take a close look at what happens when NVIDIA uses ML to up-sample this photo of a car by 4x. At first the images will look quite similar, but when you zoom in close, you’ll notice that the car on the right has some jagged edges, or aliasing, and the one using ML on the left is crisper. Models can learn to determine the best color for each pixel to benefit small images that are upscaled, or images that are zoomed in on. You may have had the experience when playing a game where objects look great from afar, but when you move close to a wall or hide behind a crate, things start to look a bit blocky or fuzzy – with ML we may see the end of those types of experiences. If you want to learn more about how up-sampling works, attend NVIDIA’s GDC talk.
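For contrast with a learned up-sampler, here is a minimal sketch of two conventional, fixed-rule up-sampling methods applied to a single row of grayscale pixels. The function names `upsample_nearest` and `upsample_linear` are illustrative and do not come from NVIDIA's work or any particular library. An ML-based up-sampler would, roughly speaking, replace these fixed rules with a function learned from example images.

```python
def upsample_nearest(row, factor):
    """Repeat each pixel `factor` times. Cheap, but hard edges turn blocky."""
    return [pixel for pixel in row for _ in range(factor)]


def upsample_linear(row, factor):
    """Blend linearly between neighbouring pixels. Smoother, but edges blur."""
    out = []
    for i in range(len(row) - 1):
        for step in range(factor):
            t = step / factor
            out.append((1 - t) * row[i] + t * row[i + 1])
    out.append(row[-1])  # keep the final source pixel as-is
    return out


# A hard edge between a dark pixel (0) and a bright pixel (100), scaled 4x.
edge = [0, 100]
blocky = upsample_nearest(edge, 4)  # [0, 0, 0, 0, 100, 100, 100, 100]
smooth = upsample_linear(edge, 4)   # [0.0, 25.0, 50.0, 75.0, 100]
```

Neither rule knows anything about what the image depicts, which is why a model trained on many images has room to choose better pixel values.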

What is Microsoft providing to Game Developers? How does it work?

Now that we’ve established the benefits of neural networks for games, let’s talk about what we’ve developed here at Microsoft to enable games to provide the best experiences with the latest technology.

Quick Recap of WinML

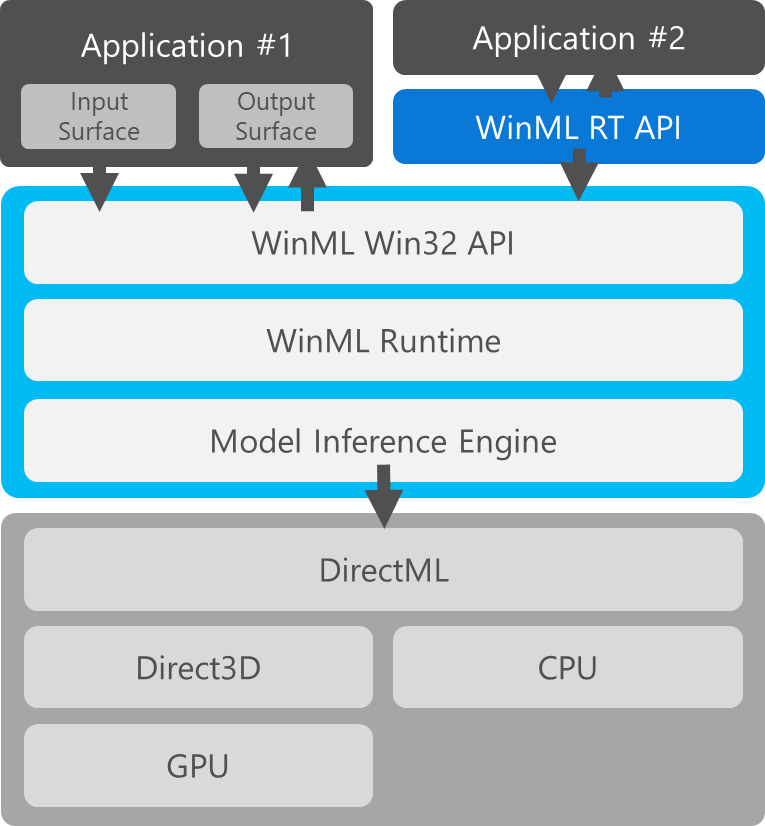

As we disclosed earlier this month, the WinML API allows game developers to take their trained models and perform inference on the wide variety of hardware (CPU, GPU, VPU) found in gaming machines across all vendors. A developer would choose a framework, such as CNTK, Caffe2, or TensorFlow, to build and train a model that does anything from visually improving the game to controlling NPCs. That model would then be converted to the Open Neural Network Exchange (ONNX) format, co-developed between Microsoft, Facebook, and Amazon to ensure neural networks can be used broadly. Once they’ve done this, they can hook it up to their game and expect it to run on a gamer’s Windows 10 machine with no additional work on the gamer’s part. This works not just for gaming scenarios, but in any situation where you would want to use machine learning on your local machine.

DirectML Technology Overview

We know that performance is a gamer’s top priority. So, we built DirectML to provide GPU hardware acceleration for games that use Windows Machine Learning. DirectML was built with the same principles as DirectX technology: speed, standardized access to the latest in hardware features, and most importantly, a hassle-free experience for gamers and game developers – no additional downloads, no compatibility issues – everything just works. To understand how DirectML fits within our portfolio of graphics technology, it helps to understand what the Machine Learning stack looks like and how it overlaps with graphics.

DirectML is built on top of Direct3D because D3D (and graphics processors) are very good for matrix math, which is used as the basis of all DNN models and evaluations. In the same way that High Level Shader Language (HLSL) is used to execute graphics rendering algorithms, HLSL can also be used to describe parallel algorithms of matrix math that represent the operators used during inference on a DNN. When executed, this HLSL code receives all the benefits of running in parallel on the GPU, making inference run extremely efficiently, just like a graphics application.
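To illustrate why matrix math sits at the core of inference, here is a minimal pure-Python sketch of one fully connected layer. This is not HLSL and not DirectML code: the helper names `matvec`, `relu`, and `dense`, and the weight values, are made up for illustration.

```python
def matvec(weights, x):
    """Matrix-vector product: one dot product per output neuron."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def relu(values):
    """ReLU activation: negative values are clamped to zero."""
    return [max(0.0, v) for v in values]


def dense(weights, bias, x):
    """One fully connected layer: relu(W @ x + b)."""
    return relu([a + b for a, b in zip(matvec(weights, x), bias)])


# Illustrative numbers only: 2 inputs feeding 2 neurons.
weights = [[1.0, -1.0],
           [0.5, 0.5]]
bias = [0.0, 1.0]
layer_output = dense(weights, bias, [2.0, 3.0])  # [0.0, 3.5]
```

Each output element depends only on its own row of the weight matrix, so the rows can be computed independently. That independence is what lets a GPU evaluate many of them in parallel.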

In DirectX, games use graphics and compute queues to schedule each frame rendered. Because ML work is considered compute work, it is run on the compute queue alongside all the scheduled game work on the graphics queue. When a model performs inference, the work is done in D3D12 on compute queues. DirectML efficiently records command lists that can be processed asynchronously with your game. Command lists contain machine learning code with instructions to process neurons and are submitted to the GPU through the command queue. This helps to integrate machine learning workloads with graphics work, which makes bringing ML models to games more efficient and gives game developers more control over synchronization on the hardware.
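The record-then-submit flow described above can be pictured with a toy model. The sketch below is a conceptual simulation in plain Python, not the D3D12 or DirectML API: the `CommandList` and `CommandQueue` classes, the `run_frame` helper, and the command names are all hypothetical, and a real GPU executes the queues concurrently rather than taking turns.

```python
from collections import deque


class CommandList:
    """Hypothetical stand-in for a recorded batch of GPU commands."""

    def __init__(self, name):
        self.name = name
        self.commands = []

    def record(self, command):
        self.commands.append(command)


class CommandQueue:
    """Hypothetical queue that executes submitted command lists in order."""

    def __init__(self, kind):
        self.kind = kind
        self.pending = deque()

    def submit(self, command_list):
        self.pending.append(command_list)

    def execute_next(self):
        if not self.pending:
            return []
        batch = self.pending.popleft()
        return [f"{self.kind}:{batch.name}:{cmd}" for cmd in batch.commands]


def run_frame(graphics, compute):
    """Alternate between the queues until both are drained (toy scheduling)."""
    log = []
    while graphics.pending or compute.pending:
        log.extend(graphics.execute_next())
        log.extend(compute.execute_next())
    return log


graphics = CommandQueue("graphics")
compute = CommandQueue("compute")

draw = CommandList("draw_scene")
draw.record("draw_terrain")
draw.record("draw_characters")

infer = CommandList("npc_inference")
infer.record("matmul")
infer.record("relu")

graphics.submit(draw)   # rendering work goes to the graphics queue
compute.submit(infer)   # ML inference goes to the compute queue
frame_log = run_frame(graphics, compute)
```

The point of the model is only the separation of concerns: ML work is recorded ahead of time and lives on its own queue, so the game decides when and how it synchronizes with rendering.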

Inspired by and Designed for Game Developers

D3D12 Metacommands

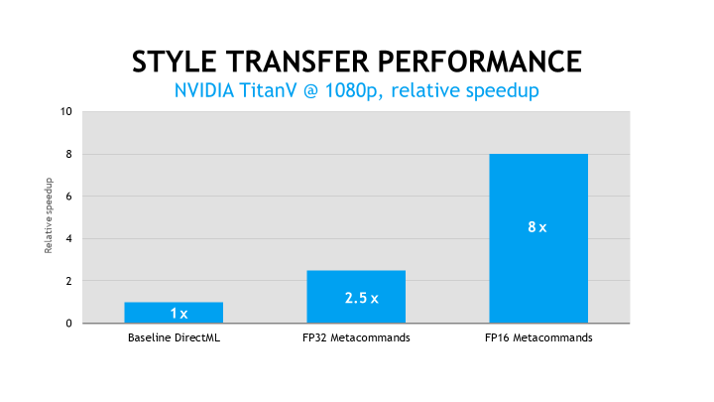

As mentioned previously, the principles of DirectX drive us to provide gamers and developers with the fastest technology possible. This means we are not stopping at our HLSL implementation of DirectML operators – that’s pretty fast, but we know that gamers require the utmost in performance. That’s why we’ve been working with graphics hardware vendors to give them the ability to implement even faster versions of those operators directly in the driver for upcoming releases of Windows. We are confident that when vendors implement the operators themselves (vs using our HLSL shaders), they will get better performance for two reasons: their direct knowledge of how their hardware works and their ability to leverage dedicated ML compute cores on their chips. Knowledge of cache sizes and SIMD lanes, plus more control over scheduling, are a few examples of the types of advantages vendors have when writing metacommands. Unleashing hardware that is typically not utilized by D3D12 to benefit machine learning helps deliver incredible performance boosts.

Microsoft has partnered with NVIDIA, an industry leader in both graphics and AI, in our design and implementation of metacommands. One result of this collaboration is a demo to showcase the power of metacommands. The details of the demo and how we got that performance will be revealed at our GDC talk (see below for details), but for now, here’s a sneak peek of the type of power we can get with metacommands in DirectML. In the preview release of WinML, the data is formatted as floating point 32 (FP32). Some networks do not depend on the level of precision that FP32 offers, so by doing math in FP16, we can process around twice the amount of data in the same amount of time. Since models benefit from this data format, the official release of WinML will support floating point 16 (FP16), which improves performance drastically. We see an 8x speed up using FP16 metacommands in a highly demanding DNN model on the GPU. This model went from static to real-time due to our collaboration with NVIDIA and the power of D3D12 metacommands used in DirectML.
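The FP32 versus FP16 trade-off can be seen with nothing more than Python's standard `struct` module, which supports half precision through the `e` format character. The sketch below is illustrative only: the helper names and the sample weights are made up, and it shows storage size and rounding error, not GPU throughput.

```python
import struct


def to_fp32_bytes(values):
    """Pack values as little-endian 32-bit floats (4 bytes each)."""
    return struct.pack(f"<{len(values)}f", *values)


def to_fp16_bytes(values):
    """Pack values as little-endian 16-bit floats (2 bytes each)."""
    return struct.pack(f"<{len(values)}e", *values)


def roundtrip_fp16(value):
    """Return `value` after being stored as FP16 and read back."""
    return struct.unpack("<e", struct.pack("<e", value))[0]


weights = [0.1, 0.25, 1.5, 3.14159]  # made-up sample weights
fp32_size = len(to_fp32_bytes(weights))  # 16 bytes
fp16_size = len(to_fp16_bytes(weights))  # 8 bytes

exact = roundtrip_fp16(0.25)   # 0.25 is exactly representable in FP16
approx = roundtrip_fp16(0.1)   # 0.1 is not, so a small rounding error appears
error = abs(approx - 0.1)
```

Half the bytes per value means twice as many values fit in the same memory and bandwidth, which is consistent with the "around twice the amount of data" figure above. The cost is the rounding error, which is why FP16 suits networks that do not depend on FP32 precision.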

PIX for Windows support available on Day 1

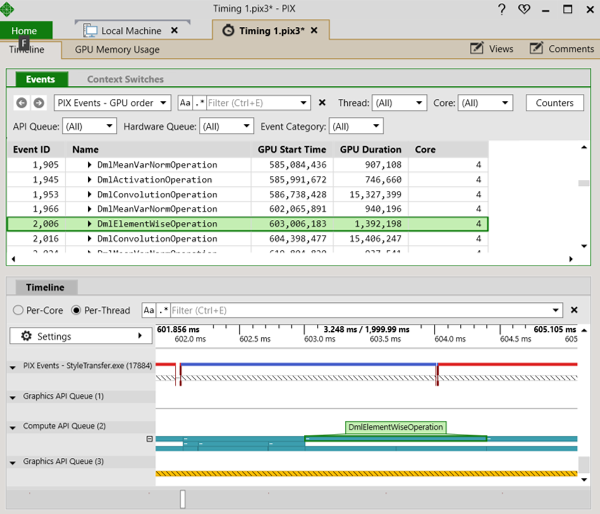

With any new technology, tooling is always vital to success, which is why we’ve ensured that our industry-leading PIX for Windows graphics tool is capable of helping developers profile the performance of models running on the GPU. As the PIX capture shows, operators show up where you’d expect them on the compute queue in the PIX timeline. This way, you can see how long each operator takes and where it is scheduled. In addition, you can add up all the GPU time in the roll up window in order to understand how long the network is taking overall.

Support for Windows Machine Learning in Unity ML-Agents

Microsoft and Unity share a goal of democratizing AI for gaming and game development. To advance that goal, we’d like to announce that we will be working together to provide support for Windows Machine Learning in Unity’s ML-Agents framework. Once this ships, Unity games running on Windows 10 platforms will have access to inference across all hardware and the hardware acceleration that comes with DirectML. This, combined with the convenience of using an ML-ready engine, will make getting started with Machine Learning in gaming easier than ever before.

Getting Started with Windows Machine Learning

Game developers can start testing out WinML and DirectML with their models today. They will get all the benefit of hardware breadth and hardware acceleration with HLSL implementations of operators. The benefits of metacommands will be coming soon as we release more features of DirectML. If you’re attending GDC, check out the talks we are giving below. If not, stay tuned to the DirectX blog for more updates and resources on how to get started after our sessions. Gamers can simply keep up to date with the latest version of Windows and they will start to see new features in games and applications on Windows as they are released.

UPDATE: For more instructions on how to get started, please check out the forums on DirectXTech.com. Here, you can read about how to get started with WinML, stay tuned in to updates when they happen, and post your questions/issues so we can help resolve them for you quickly.

GDC talks

If you’re a game developer and attending GDC on Thursday, March 22nd, please attend our talks to get a practical technical deep dive into what we’re offering to developers. We will be co-presenting with NVIDIA on our work to bring Machine Learning to games.

Using Artificial Intelligence to Enhance your Game (1 of 2): This talk will focus on how to get started with WinML and the breadth of hardware it covers.

UPDATE: Click here for the slides from this talk.

Using Artificial Intelligence to Enhance Your Game, Part 2 (Presented by NVIDIA): After a short recap of the first talk, we’ll dive into how we’re helping to provide developers the performance necessary to use ML in their games.

UPDATE: Click here for the slides from this talk.

Recommended Resources:

• NVIDIA’s AI Podcast is a great way to learn more about the applications of AI – no tech background needed.
• If you want to get coding fast with CNTK, check out this EdX class – great for a developer who wants a hands-on approach.
• To get a deep understanding of the math and theory behind deep learning, check out Andrew Ng’s Coursera Course.

Appendix: Brief introduction to Machine Learning

“Shall we play a game?” – Joshua, WarGames

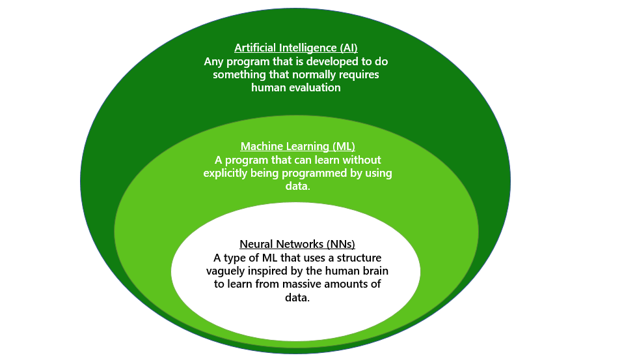

The concept of Artificial Intelligence in gaming is nothing new to the tech-savvy gamer or sci-fi film fan, but the Microsoft Machine Learning team is working to enable game developers to take advantage of the latest advances in Machine Learning and start developing Deep Neural Networks for their games. We recently announced our AI platform for Windows AI developers and showed some examples of how Windows Machine Learning is changing the way we do business, but we also care about changing the way that we develop and play games. AI, ML, DNN – are these all buzzwords that mean the same thing? Not exactly; we’ll dive into what Neural Networks are, how they can make games better, and how Microsoft is enabling game developers to bring that technology to wherever you game best.

What are Neural Networks and where did they come from?

People have been speculating on how to make computers think more like humans for a long time, and emulating the brain seems like an obvious first step. The research behind Neural Networks (NNs) started in the early 1940s and fizzled out in the late ’60s, due to the limitations in computational power. In the last decade, Graphics Processing Units (GPUs) have exponentially increased the amount of math that can be performed in a short amount of time (thanks to demand from the gaming industry). The ability to quickly do a massive amount of matrix math revitalized interest in neural networks. A neural network is created by processing large amounts of data through layers of nodes (neurons) that can learn about properties of that data; together, those layers of nodes make up a model. That learning process is called training. If the model is correctly trained, when it is fed a new piece of data, it performs inference on that data and should correctly be able to predict the properties of data it has never seen before. That network becomes a deep neural network (DNN) if it has two or more hidden layers of neurons.
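The split between training and inference can be shown at the smallest possible scale. The sketch below trains a single linear neuron with batch gradient descent and then runs inference on an input it has never seen. It is a deliberately tiny stand-in, not a deep network: the `train` and `infer` names, the learning rate, the epoch count, and the data (points on the line y = 2x + 1) are all illustrative choices.

```python
def train(samples, learning_rate=0.05, epochs=2000):
    """Fit y = w * x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(samples)
    for _ in range(epochs):
        # Average gradient of the squared error over the whole data set.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in samples) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in samples) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


def infer(model, x):
    """Inference: apply the learned parameters to a new input."""
    w, b = model
    return w * x + b


# Training data drawn from y = 2x + 1 (the model is never told this rule).
samples = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]
model = train(samples)
prediction = infer(model, 4.0)  # close to 9.0, though x = 4 was never seen
```

Training is the expensive loop that runs ahead of time, while inference is a single cheap evaluation. That is why it is practical to run inference on a gamer's own machine.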

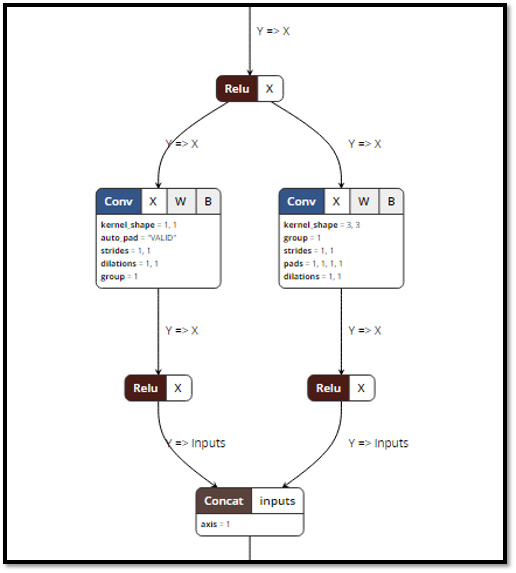

There are many types of Neural Networks and they all have different properties and uses. An example is a Convolutional Neural Network (CNN) that uses a matrix filtering system that identifies and breaks images down into their most basic characteristics, called features, and then uses that breakdown in the model to determine if new images share those characteristics. What makes a cat different from a dog? Humans know the difference just by looking, but how could a computer, when cats and dogs share a lot of characteristics – 4 legs, tails, whiskers, and fur? With CNNs, the model will learn the subtle differences in the shape of a cat’s nose versus a dog’s snout and use that knowledge to correctly classify images.
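The "matrix filtering" step can be sketched in a few lines. The example below slides a small 3x3 filter over a tiny grayscale image and records how strongly each patch matches it, which is the sliding-window operation CNN layers are built on. The `convolve2d` name, the image, and the hand-picked vertical-edge filter are illustrative assumptions; in a real CNN the filter values are learned during training rather than chosen by hand.

```python
def convolve2d(image, kernel):
    """Slide `kernel` over `image` (no padding) and sum the products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(kh)
                for j in range(kw)
            )
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


# 4x6 image: dark (0) on the left half, bright (1) on the right half.
image = [[0, 0, 0, 1, 1, 1] for _ in range(4)]

# Hand-picked filter that responds to dark-to-bright vertical edges.
vertical_edge = [[-1, 0, 1],
                 [-1, 0, 1],
                 [-1, 0, 1]]

feature_map = convolve2d(image, vertical_edge)
# [[0, 3, 3, 0], [0, 3, 3, 0]]: non-zero only where the window spans the edge
```

The output is a feature map: it is zero over the flat regions and non-zero only around the edge, so later layers can reason about where features occur instead of about raw pixels.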
