Summary

Recently I was converting some decompression code to C# so that we could use it cross platform and to aid in our team’s effort to migrate our new analysis process to .NET 6. After I got the initial implementation done with the simplest, cleanest code that I could, I proceeded to profile it to make sure I wasn’t doing anything silly. I want to go over my normal process for this and what I found. Spoiler, I got a 2x performance improvement with under 30 minutes of work.

What is the code

The code in question has 2 main components, BlockCompressorStream and XPressStream.

BlockCompressorStream– Reads a block header containing the compressed and decompressed size and holds the block, max 65K, in memory to return to the next stream.XPressStream– Reads a compressed byte stream and decompresses it on the fly using the XPress decompression algorithm. The general algorithm is to read a byte or two, determine the “codeword”, then use that to inflate by either adding the next few bytes or copying them from the already decompressed stream.

The general structure of the code looks like so:

Digging for performance

To get a general idea of the performance I first started with the CPU Usage tool, I was curious how long it would take and what part of the algorithm is the hot spot. While I could launch a Visual Studio patch with my code, I opted for an easier route that scoped what I was profiling to just my decompression algorithm. During development I created a bunch of unit tests to verify the compression. One such test was to take a large, compressed file and decompress it to a known file that I can then check the hashes against. I wrote a simple console application in my solution that instantiates the unit test class and then just invokes the unit test, allowing me to reuse assets and quickly profile things as the “startup project”. After running the CPU Usage tool against this exe, I had the following:

This is what I expected to see since XPressStream.ReadBlock() is doing the actual decompression which is good, my mental model of what my code does matches reality.

What is a bit odd is that less than a third of the time is spent in the function (self), the rest of the time is in child functions (total – self). Looking at the CPU tool’s new flame chart I see most of the time seems to be spent reading in UInt16.

I assumed reading in the data would take some time, but I didn’t expect it to take 2/3 of my algorithms time. Peeking at the BlockCompressor.Read I can see it spending most of the time reading the stream which got me thinking maybe I’m not buffering enough. Looking at where I construct the BufferedStream, sure enough I’m using the default buffer size which normally is fine, but I know that my blocks can never be more than 65,536.

public BlockCompressorStream(Stream stream)

{

// We add a buffer stream around the stream to handle the buffering for us

this.stream = new BufferedStream(stream);

if (!this.ReadHeader())

{

throw new InvalidDataException();

}

}

I could buffer that much by default though it seems it bit much, instead I chose to buffer half as much 32,768. If tuning this was super important, I would profile the code with various sizes to determine the correct tradeoff of memory size to performance, but I’m not overly concerned and instead will just leave a comment for the next dev to hit this code. Rerunning the CPU Usage, I find that I didn’t quite double performance, but I got pretty close.

Poking around a little more I don’t immediately notice anything that is jumping out. Since we are using a buffered stream and I don’t allocate anything in the algorithm I figured I should verify my allocations are at a steady state. To do that I ran the .NET Object Allocation profiler on the test case and got the following:

Immediately I noticed the two GCs which I really didn’t expect. Maybe the BufferedStream allocation put us over budget for 1 GC but 2 seems a bit suspect. Looking at the collections tab I actually have 3 GCs, and they are due to “AllocSmall” indicating we are allocating a bunch of small objects and trying to collect them:

What is interesting is the top type we are allocating is a byte array of length 1, which is almost never correct. Jumping over to allocations I can confirm I’m actually allocating over 200K byte[1] arrays which is terrible. Looking at the backtrace it is coming from Stream.ReadByte, which is the default implementation my BlockCompressorStream is using.

Navigating to source through the context menu I see the code and I’m horrified to realize that yep, it is allocating a byte[1] so it can call into Read(byte[], int, int). Since BufferedStream is holding the buffered data in memory it should be able to return the byte from its current location. Checking on ReferenceSource I can confirm it is doing just that: bufferedstream.cs (microsoft.com)

Overriding Stream.ReadByte in my BlockCompressorStream and using the BufferedStream implementation I’m now in a much better allocation situation:

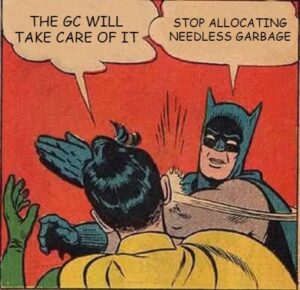

The .NET GC is really fast but giving it a bunch of garbage work to do is going to slow things down. Rerunning the CPU Usage tool to see if removing all these allocations I can confirm that it had a significant impact.

I reduced the time by almost 1/3 again. Compared with my original baseline trace I went from 2610 -> 1116 (2.3x), more than doubling performance with a little bit of investigation. At this point while I could continue to dig for performance, I got most of the low hanging fruit and I really shouldn’t spend time on this unless it is a hot path in the overall application.

Conclusion

Taking a little time to confirm my mental model of my code with real world data is enlightening. I spent less than 30 minutes and more than doubled the performance on my code and learned more about how Stream is implemented in .NET. Let me know what you found while profiling your code and what factor of improvement you were able to achieve with the tools!

![Top collected types showing System.Byte[1]](https://devblogs.microsoft.com/visualstudio/wp-content/uploads/sites/4/2022/04/Picture6.png)

![Backtrace of System.Byte[1] allocations](https://devblogs.microsoft.com/visualstudio/wp-content/uploads/sites/4/2022/04/Picture7.png)

![System.Byte[] allocations after fix, no more System.Byte[1]](https://devblogs.microsoft.com/visualstudio/wp-content/uploads/sites/4/2022/04/Picture8.png)

Hi Nik,

I did a lot of investigations about fast reading streams and i have found one special case (investigated with .Net 6)

scenario:

Filestream (!), reading only(!)

FileStream allows to define a buffer size during construction/opening. Defaultsize is 4K.

My first idea was to increase the buffer step by step 4K, 64K, 1MB....=> no improvements

last try: BufferSize=0; (!!) => lot faster.

Basically that make sense for my scenario:

buffer is overhead, that may help in random access scenarios,

but in a read only scenario the buffers implemented by OS/STORAGE should be enough/better.

Not sure if that helps in your scenario, but it is...

Thanks for the suggestion Werner! Sure enough, setting the read buffer to 0 removed half the Kernel32::ReadFile time. For the traces above 50 samples were spent in Kernel32::ReadFile before your suggestion and 20 after.

referencesource.microsoft.com contains the sources for the old .NET Framework, to look at the modern .NET implementation you can use source.dot.net instead.

Cool! I hadn’t seen source.dot.net yet. I always just kinda grepped through GitHub for modern .NET, this will save me a ton of time. Unfortunately, our code is still running .NET Framework, though we are trying to move our analysis process to .NET 6 during Visual Studio 2022 Update 3.

Hi Nik,

Thanks for sharing. Do you mind sharing the code where it overrides Stream.ReadByte in your BlockCompressorStream and using the BufferedStream implementation?

Thanks,

Michael

Sure! In the code below <code> is the buffered stream.

<code>