The societal case for carbon accounting of AI systems

Digital services consume a lot of energy, and in a world of accelerating climate change we must be conscious of our carbon footprint in all parts of life. For the software we write, and specifically the AI systems we build, these considerations matter even more because training some large AI models consumes substantial upfront computational resources and produces the associated carbon emissions. Effective carbon accounting for artificial intelligence systems is therefore critical.

An equally important consideration is the social inequality perpetuated by the emphasis on large-scale models. This emphasis encourages a winner-take-all dynamic in the AI research ecosystem, where only those with access to large-scale compute and data infrastructure, typically well-funded industry and academic labs, are able to embark on such research. Because they can do so, they publish results and secure more funding, which feeds the cycle further.

Ultimately, for any system that we build, there is an investment of resources: human, computational, and financial. We need to be able to assess more accurately the costs and benefits of utilizing different approaches. For example, neural architecture search (NAS) can help find more efficient architectures that we would not find through human-guided and targeted search and tuning. While NAS can be computationally expensive, if it yields a solution that is computationally- and carbon-efficient, and it is a system that is run many times over, the potential long-run savings can outweigh the short-run costs.
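The trade-off above can be made concrete with a small break-even calculation. This is only a sketch: the function name `break_even_runs` and all the numbers are made up for illustration, and it assumes the per-run footprint of each architecture is constant.

```python
import math


def break_even_runs(search_cost_g, baseline_per_run_g, optimized_per_run_g):
    """Number of runs after which a one-off search cost is repaid.

    All arguments are grams of CO2eq (hypothetical, illustrative units).
    Returns None when the optimized system saves nothing per run.
    """
    saving_per_run_g = baseline_per_run_g - optimized_per_run_g
    if saving_per_run_g <= 0:
        return None  # the search never pays for itself
    return math.ceil(search_cost_g / saving_per_run_g)


# Made-up numbers: a search that emits 500 kg CO2eq and finds an
# architecture that saves 0.5 g CO2eq on every run.
runs_needed = break_even_runs(
    search_cost_g=500_000, baseline_per_run_g=2.0, optimized_per_run_g=1.5
)
print(f"Search is repaid after {runs_needed:,} runs")
```

With these assumed figures the search only pays off for a system that is run about a million times, which is why the number of expected runs belongs in the analysis.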

To assess all of this, we need more accurate cost estimates. We can already do this quite well on the financial side; getting a better handle on the carbon side will further clarify our trade-off analyses.

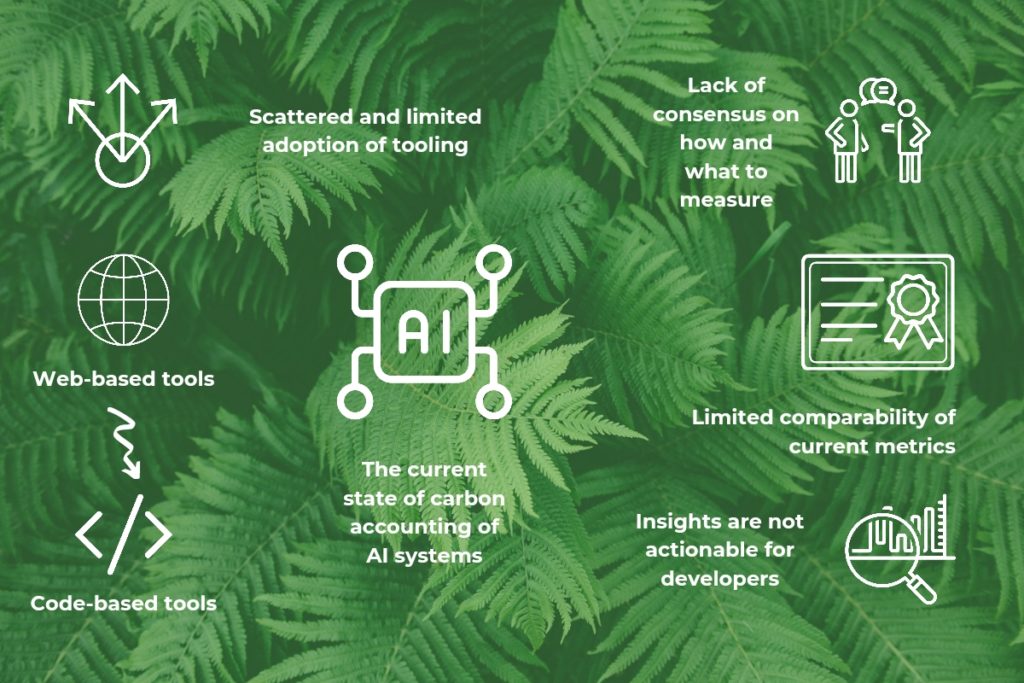

The current state of affairs of carbon-accounting tooling

Most of the tooling currently falls into two camps: web-based and code-based tools.

Web-based tools like the MLCO2 calculator let practitioners, once the training phase is complete, enter information about the cloud provider, the region where resources are hosted, the training time, and the type of hardware to estimate the CO2eq that was potentially emitted while training their AI system.
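To give a feel for how inputs like these can turn into a number, here is a rough sketch of the kind of arithmetic involved: energy is power multiplied by time, and emissions are energy multiplied by the carbon intensity of the region's grid. This is an assumption about the general approach, not the exact method of any particular calculator, and the function name, parameters, and values are all hypothetical.

```python
def estimate_training_co2eq_kg(hardware_power_watts, training_hours,
                               grid_intensity_kg_per_kwh, pue=1.0):
    """Rough CO2eq estimate for a training run, in kilograms.

    hardware_power_watts: assumed average draw of the hardware used
    training_hours: wall-clock training time
    grid_intensity_kg_per_kwh: assumed carbon intensity of the region's grid
    pue: assumed data-center overhead multiplier (1.0 means no overhead)
    """
    energy_kwh = hardware_power_watts * training_hours / 1000.0 * pue
    return energy_kwh * grid_intensity_kg_per_kwh


# Made-up inputs: a 300 W accelerator running for 100 hours in a region
# whose grid emits 0.4 kg CO2eq per kWh.
estimate = estimate_training_co2eq_kg(300, 100, 0.4)
print(f"Estimated emissions: {estimate:.1f} kg CO2eq")
```

The same run in a region with a cleaner grid would yield a smaller estimate, which is why the hosting region is one of the inputs.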

Code-based tools like energyusage and CodeCarbon let developers integrate snippets of code, akin to how you would track artifacts in your machine learning workflow with tools like MLflow, to capture the energy consumption of different functions within your code. The tools then convert these measurements to CO2eq to provide a comparable metric.
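As a toy illustration of this pattern, the sketch below wraps a function in a decorator that times it and converts the elapsed time to energy and CO2eq. It is a stand-in written with the standard library only, not the API of energyusage or CodeCarbon: the name `track_co2eq` and both constants are hypothetical, and real tools typically read or estimate power from the hardware rather than assume a fixed draw.

```python
import functools
import time

# Hypothetical constants for illustration only.
ASSUMED_POWER_WATTS = 200.0
ASSUMED_INTENSITY_KG_PER_KWH = 0.4

emissions_log = []  # one record per tracked call


def track_co2eq(func):
    """Record elapsed time, estimated energy, and CO2eq for each call."""
    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        try:
            return func(*args, **kwargs)
        finally:
            elapsed_s = time.perf_counter() - start
            # watts * seconds = joules; 3.6e6 joules = 1 kWh
            energy_kwh = ASSUMED_POWER_WATTS * elapsed_s / 3_600_000.0
            emissions_log.append({
                "function": func.__name__,
                "seconds": elapsed_s,
                "energy_kwh": energy_kwh,
                "co2eq_kg": energy_kwh * ASSUMED_INTENSITY_KG_PER_KWH,
            })
    return wrapper


@track_co2eq
def train_step(n):
    # Placeholder workload standing in for a real training function.
    return sum(i * i for i in range(n))


result = train_step(100_000)
print(emissions_log[-1])
```

The appeal of this style is that tracking lives next to the code being measured, so no separate data-entry step is needed.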

Unfortunately, because these tools are recent and general awareness is low, their uptake has been quite limited. This is evidenced both by the number of downloads and stars for the code repositories on GitHub and by anecdotal evidence: you’d be hard-pressed to find fellow practitioners engaging with these tools in their day-to-day work. Carbon accounting remains an abstract discussion and a distant concern, though the tide is shifting.

Where we’re headed and what’s happening

Introducing friction into workflows is a proven way to kill the adoption of a new process. The initial crop of tooling in this space, which was primarily web-based, was plagued by this problem. Just as creating and maintaining documentation is a chore, having to enter information in a separate portal to calculate the carbon footprint breaks a developer’s natural workflow. The trend towards code-based tools is a positive step because they integrate more naturally into existing workflows. The latest integration that CodeCarbon offers with CometML is an example of this.

In addition, there is rising awareness that our work in AI can have significant environmental impacts, which is making this a topic of discussion and research at top-tier machine learning conferences such as NeurIPS, ICML, ICLR, and ACL.

But not all is great!

We are currently at an inflection point: we need consensus on metrics, and tooling that supports comparability between systems. This is going to be critical if this movement is to succeed in the near future.

To start with, there is currently a lot of debate about the most effective way to capture the environmental impact of AI systems. Some approaches ask us to look at the entire lifecycle of all the products (hardware and software) that go into making an AI system. While comprehensive, these are hard to carry out accurately in practice. There is also debate on whether floating point operations, power consumption values from GPUs and CPUs, or other measures should be used to arrive at the CO2eq value that can be used to compare different systems. Most approaches today focus only on the training phase of AI systems. But if a model is going to be used for inference millions of times, that impact can quickly add up and rival the financial and environmental cost of training the system.

Finally, at the moment, the tools, both web- and code-based, offer little actionable insight that can help trigger behavior change in developers. Getting there is essential; without it, we would end up in a fruitless cycle of creating additional documentation that doesn’t lead to any action.

Let’s try and cross the chasm

For those familiar with Geoffrey Moore’s Crossing the Chasm: in the product adoption and diffusion lifecycle, there is a chasm between the “early adopters” and the “early majority”. With carbon accounting for AI systems, we are currently just past the “innovators” mark, even though the carbon impact of computing has been discussed for many years. To achieve meaningful impact, we need to reach the late majority, at which point the practice of carbon accounting for AI systems will become normalized.

We will most certainly have to cross the chasm on that journey, and making that leap will require us to borrow lessons from good product and tool design. In a saturated tooling world, especially with the onslaught of MLOps, adding more to developers’ plates will only aggravate the problem of low traction.

To get there, I think we will need to focus on the following items:

1. Workflow-native tooling

2. Comparability of metrics

3. Verifiability of claims made about the carbon figures

4. Standardization of metrics and reporting

5. Certification

We are making progress towards standardization through the recently launched Green Software Foundation. In addition, I’ve done some work on certification as a part of the Montreal AI Ethics Institute, titled SECure: A Social and Environmental Certificate for AI Systems. But there is a lot more to be done. The best way forward is to start implementing some of these ideas in practice, borrowing liberally from the broader carbon accounting community, and testing and iterating on those ideas to tease out the pieces that work well and those that don’t.

I’d encourage you to reach out to me or the SSE team at Microsoft if you’re working on something that can address any of the above points or if you have ideas on what can be done to improve the state of carbon accounting for AI systems.
