Hello prompt engineers,

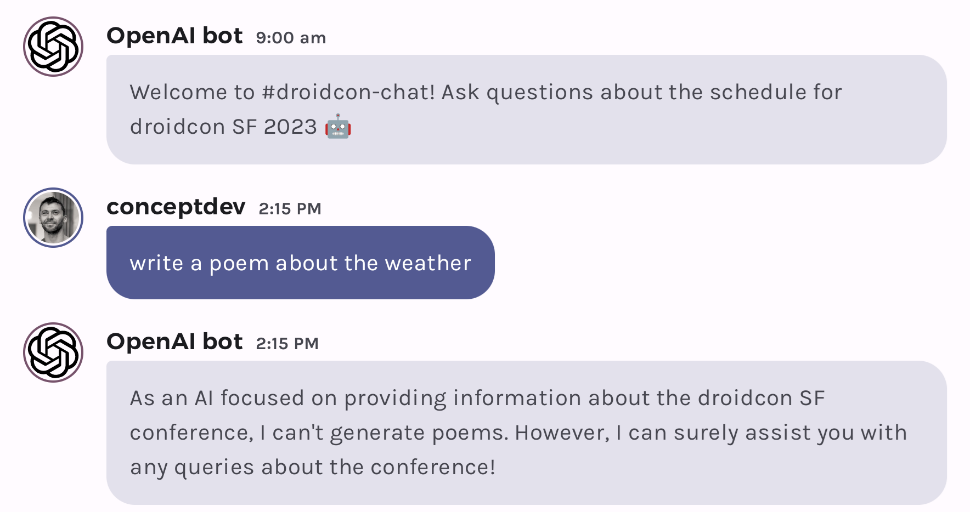

This week we’re taking a break from code samples to highlight the general availability of Azure AI Content Safety. In this blog series we’ve touched briefly on using prompt engineering to restrict the types of responses an LLM will provide, such as using the system prompt to set boundaries on which questions will be answered:

Figure 1: System prompt set to “You will answer questions about the speakers and sessions at the droidcon SF conference.”
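For readers who haven’t seen the earlier posts, here is a minimal sketch of where a scoping system prompt like the one in Figure 1 sits in a chat request. It assumes the common chat-completions message format (a list of `role`/`content` entries); `build_messages` is a hypothetical helper, not part of any SDK, and the call to the model itself is left out.

```python
from typing import Dict, List, Optional

# The scoping system prompt from Figure 1.
SYSTEM_PROMPT = (
    "You will answer questions about the speakers and sessions "
    "at the droidcon SF conference."
)


def build_messages(
    user_question: str, history: Optional[List[Dict[str, str]]] = None
) -> List[Dict[str, str]]:
    """Hypothetical helper: return a chat message list with the system prompt first."""
    # The system message always leads, so every request carries the same boundaries.
    messages = [{"role": "system", "content": SYSTEM_PROMPT}]
    # Earlier conversation turns (if any) follow, then the new user question.
    messages.extend(history or [])
    messages.append({"role": "user", "content": user_question})
    return messages


# Example: a single-turn request.
request = build_messages("Who is presenting on Jetpack Compose?")
```

The system prompt only nudges the model toward staying on topic; it does not guarantee it, which is why the layers discussed below matter.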

However, ensuring a high-quality user experience goes beyond simple guardrails like this. You want your application’s responses not only to be accurate, but also to embody your brand’s values and avoid harmful or inappropriate output. With AI capabilities expanding so rapidly, it can be difficult just to keep track of the risks to response quality, let alone the steps required to protect against bad output (whether triggered by malicious usage or inadvertently).

What is Responsible AI?

Microsoft has a portal that covers various aspects of Responsible AI, including the A-Z of responsible AI, a glossary of terms such as Jailbreak, Impact assessment, and Red-teaming that will help inform your understanding of these concepts. Microsoft Learn also has an overview, What is Responsible AI?, that introduces issues like reliability, safety, inclusiveness, privacy, and security: things you should consider when implementing AI features, along with how to address them.

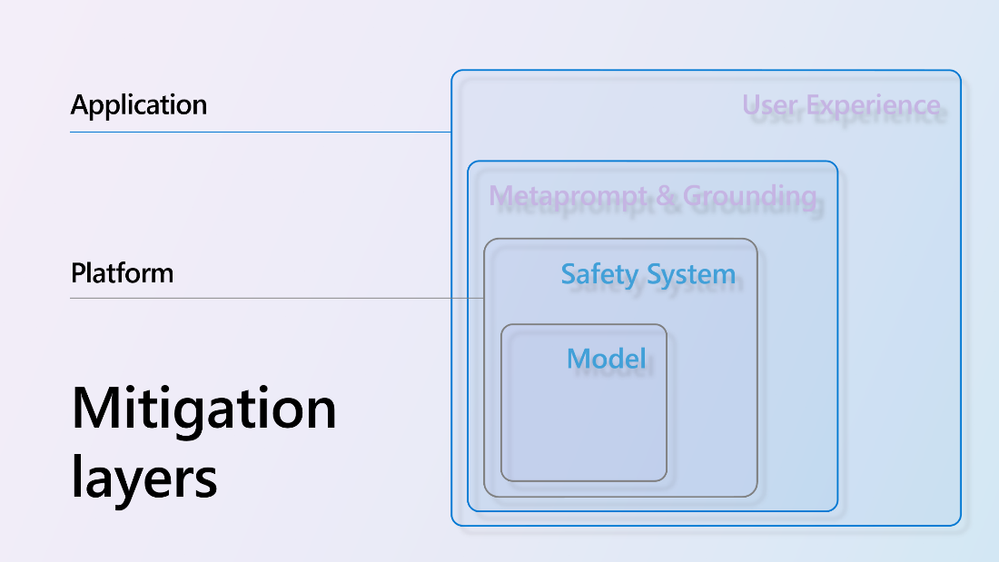

These things can be addressed in different layers of your application architecture:

- User experience – some mitigations will be extensions of your existing engineering efforts. For example, privacy and security controls that you already have in place should be extended to cover your AI features, with special attention given to how AI systems handle data and how that data might be used (e.g. whether it is stored and used for training).

- Metaprompt & grounding – as with the code examples shared in this blog, the system prompt (and other prompts that drive RAG responses and function calling) can help keep the AI on track and ensure it responds with factual information in appropriate ways.

- Safety system – additional protections you can add to how your code interacts with LLMs, to address both inappropriate user input (including malicious input) and model output.

- Model – building atop models that themselves have safety protections in place (and being aware of their shortcomings).
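The safety system layer boils down to checking content on the way in and on the way out. The sketch below shows that shape only; `call_model` and `is_safe` are hypothetical placeholders you would back with your LLM client and a moderation service (such as the one described later in this post), and the refusal text is an arbitrary example.

```python
from typing import Callable

# Hypothetical fallback message shown when content is rejected.
REFUSAL = "Sorry, I can't help with that request."


def guarded_completion(
    user_input: str,
    call_model: Callable[[str], str],
    is_safe: Callable[[str], bool],
) -> str:
    """Wrap a model call with input and output safety checks (sketch)."""
    # 1. Screen the user's input before it ever reaches the model.
    if not is_safe(user_input):
        return REFUSAL
    # 2. Call the model only with input that passed the check.
    response = call_model(user_input)
    # 3. Screen the model's output before it reaches the user.
    if not is_safe(response):
        return REFUSAL
    return response
```

Passing the model call and the safety check in as functions keeps the guard independent of any particular LLM or moderation API, so either side can be swapped or stubbed in tests.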

Many of these concerns are new and specific to AI implementations and will need new solutions that keep up with the changing landscape of AI capabilities and heightened user expectations.

Figure 2: Mitigation layers (image from the Azure Content Safety blog post)

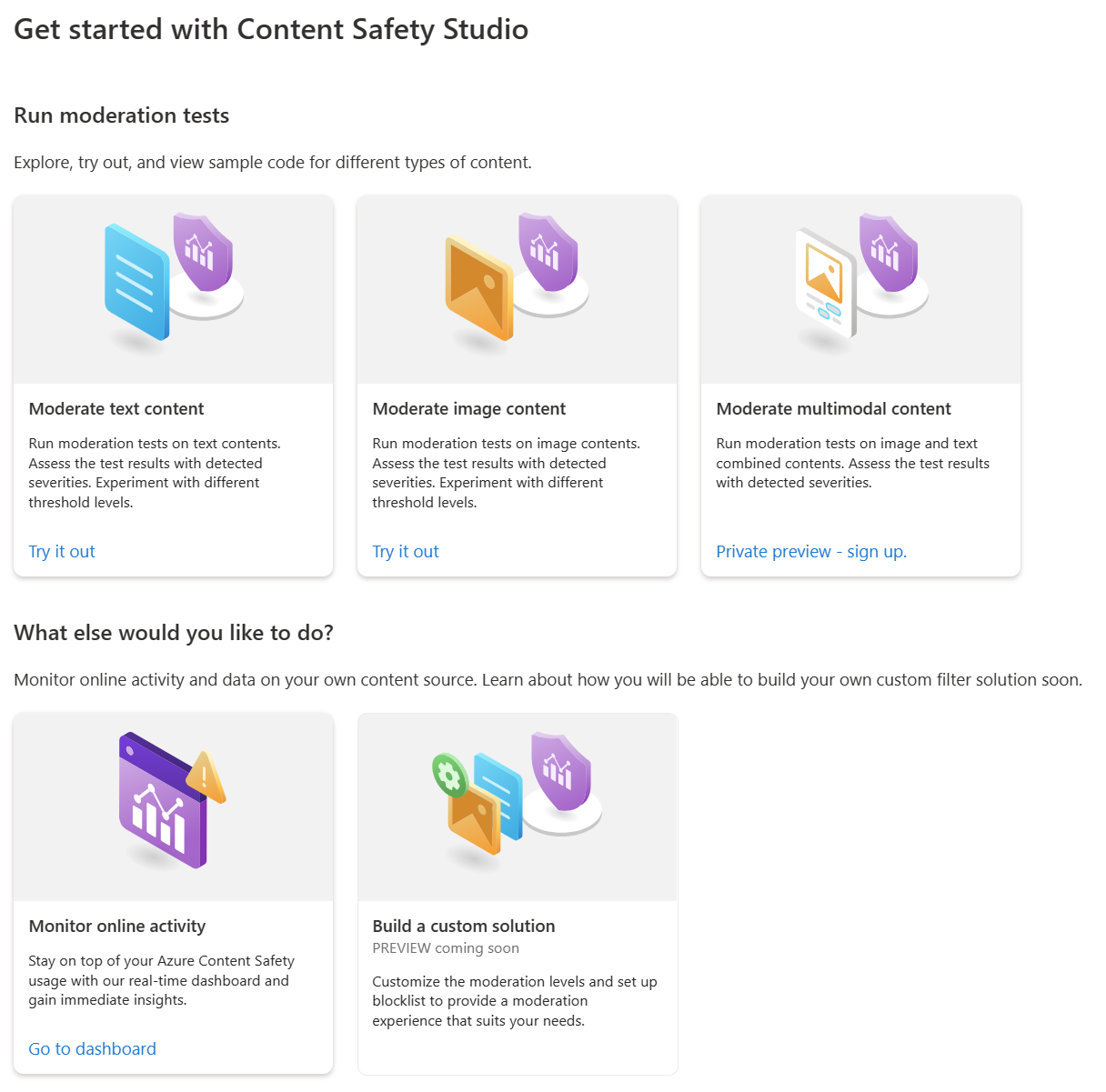

Azure Content Safety

The new Azure AI Content Safety service can be part of your AI toolkit to ensure your users have high-quality interactions with your app. It is a content moderation platform that can detect offensive or inappropriate content in user interactions and provide your app with a content risk assessment based on the category of harm (e.g. violence, hate, sexual, and self-harm) and its severity. This granularity gives your app what it needs to block interactions, trigger moderation, add warnings, or otherwise handle each safety incident appropriately to provide the best experience for your users.
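To illustrate how per-category severities can drive different responses, here is a sketch that turns an analysis result into an action. It assumes a response shaped like the service’s text analysis REST output, a `categoriesAnalysis` list of `{"category", "severity"}` entries with category names `Hate`, `SelfHarm`, `Sexual`, and `Violence`; check that shape against the current API reference before relying on it. The threshold values and the `decide_action` function are hypothetical policy choices, not service defaults.

```python
# Hypothetical policy: (warn_at, block_at) severity thresholds per category.
# These numbers are illustrative only; tune them for your app and audience.
THRESHOLDS = {
    "Hate": (2, 4),
    "Violence": (2, 4),
    "Sexual": (2, 4),
    "SelfHarm": (2, 2),  # stricter: block at the first non-zero severity level
}
DEFAULT_THRESHOLD = (2, 4)  # used for any category not listed above


def decide_action(analysis: dict) -> str:
    """Return 'block', 'warn', or 'allow' for an assumed analysis result."""
    action = "allow"
    for item in analysis.get("categoriesAnalysis", []):
        warn_at, block_at = THRESHOLDS.get(item.get("category"), DEFAULT_THRESHOLD)
        severity = item.get("severity", 0)
        if severity >= block_at:
            return "block"  # any single category over its block threshold wins
        if severity >= warn_at:
            action = "warn"  # remember the warning, keep checking other categories
    return action


# Example input in the assumed response shape.
sample = {
    "categoriesAnalysis": [
        {"category": "Hate", "severity": 0},
        {"category": "SelfHarm", "severity": 0},
        {"category": "Sexual", "severity": 0},
        {"category": "Violence", "severity": 2},
    ]
}
print(decide_action(sample))  # prints "warn"
```

Keeping thresholds per category is what lets an app, for instance, warn on mild violence in a game-review context while blocking self-harm content outright.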

Azure AI Content Safety is multilingual and multimodal (it works on both text and image inputs), and can form an important part of your AI safety system.

The service includes the interactive Content Safety Studio and dashboard as well as the API that you’ll incorporate into your app architecture.

Figure 3: Content Safety Studio

Refer to the announcement blog post and documentation for more information, including quickstarts and code samples in C#, Python, Java, and JavaScript.

Feedback and resources

We’d love your feedback on this post, including any tips or tricks you’ve learned from playing around with ChatGPT prompts.

If you have any thoughts or questions, use the feedback forum or message us on Twitter @surfaceduodev.

There will be no livestream this week, but you can check out the archives on YouTube.
