Summary: Microsoft PFE, Georges Maheu, opens his security assessment toolbox to discuss a Windows PowerShell script he uses to look at Windows services.

Microsoft Scripting Guy, Ed Wilson, is here. Our guest blogger today is Georges Maheu. Georges is one of my best friends, and I had a chance to work with him again during my recent Canadian tour. While I was there, I asked him about writing a guest series, and we are fortunate that he did (you will love his four blogs). Here is a little byte about Georges.

Georges Maheu, CISSP, is a premier field engineer (PFE) and security technology lead for Microsoft Canada. As a senior PFE, he focuses on delegation of authority (how many domain admins do you really have?), on server hardening, and on using his scripts to perform security assessments. Georges has a passion for Windows PowerShell and WMI, and he uses them on a regular basis to gather information from Active Directory and computers. Georges also delivers popular scripting workshops to Microsoft Services Premier Support customers. Georges is a regular contributor to the Microsoft PFE blog, OpsVault.

Note: All the scripts and associated files for this blog series can be found in the Script Repository. Remember to unblock and open the zip file.

Take it away, Georges…

When Premier customers call me, it is either because they have been compromised or because they are being audited. In either case, the first step is always the same: make an inventory. To assess risk, you need to understand what you are managing. Many customers have fancy gizmos to detect intrusion, but they often fail to look at the simplest things.

Hey, if the barn doors are wide open, why look for mouse holes!

So, what does all of this have to do with auditors? Well, auditors (read: accountants) have investigated hundreds of attacks and concluded that several of them used generic accounts as penetration vectors. Therefore, being accountants (read: very organized), some auditors now assess generic accounts as part of their audits.

Here is a common scenario: An IT Pro created a generic account in 1999 to perform backups. Back then, the backup software required this service account to be a domain admin account. The password policy required only six characters, with no complexity. Today, the account is still active, and its password has never been changed.

Auditors come along and provide you with a list of accounts with “no password required” and a list of accounts with obsolete passwords. Your manager tells you to investigate where these accounts are being used and wants you to create a remediation plan to change the passwords on these accounts.

Generic accounts can be used by people (Guest and Administrator are two examples), by applications, or to run services. My security toolbox contains several small scripts, an approach that I prefer to a Swiss Army knife that tries to do everything.

Today, we will look at one of my toolbox scripts. This script will make an inventory of all services running on your computers and will identify which ones are using non-standard service accounts. The inventory is presented in an Excel spreadsheet (accountants and managers love spreadsheets, so this is a bonus).

When you write scripts, always consider two factors: complexity and capacity (or performance). This first version of the script is linear and simple, but slow; it will do the work for a small to mid-size environment. Depending on your environment, it may take up to 15 minutes per server.

The first step is to read the list of targeted computers. You could get this list directly from Active Directory, but most people prefer to have control over the computers that are being queried. In any case, a simple Windows PowerShell command can be used to create such a list:

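```powershell
$searcher = [adsisearcher]"objectCategory=computer"
$searcher.PageSize = 1000  # Enable paging; unpaged searches stop at the domain's MaxPageSize (1,000 by default).
$searcher.FindAll() |
    ForEach-Object { ([adsi]$_.Path).cn } |
    Out-File -Encoding ascii -FilePath Computers.txt
```

Make sure Computers.txt ends up in the same folder as the script, because that is the file the script reads. Check the results with:

```powershell
Get-Content Computers.txt
```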

Now that a list of computers has been created, let's open the toolbox and look at portions of this first version of the script. Because the script is not always run from the same folder, its path is determined at run time and stored in the $scriptPath variable instead of being hard-coded. Performance matters, so the start time is also recorded.

```powershell
Clear-Host
$startTime = Get-Date
$scriptPath = Split-Path -Parent $MyInvocation.MyCommand.Definition
```
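The following minimal sketch shows two alternatives that are worth knowing. It is not part of Georges' script and assumes PowerShell 3.0 or later. The automatic variable $PSScriptRoot holds the folder of the running script. The .NET Stopwatch class measures elapsed time directly. Falling back to the current folder when $PSScriptRoot is empty, for example when the code is pasted at a prompt, is an assumption of this sketch.

```powershell
# Sketch, assuming PowerShell 3.0 or later ($PSScriptRoot).
# $PSScriptRoot is empty when the code is not run from a .ps1 file,
# so fall back to the current folder in that case (an assumption).
$scriptPath = if ($PSScriptRoot) { $PSScriptRoot } else { (Get-Location).Path }

# Stopwatch measures elapsed time without subtracting two Get-Date values.
$stopwatch = [System.Diagnostics.Stopwatch]::StartNew()
Start-Sleep -Milliseconds 200   # Stand-in for the real work.
$stopwatch.Stop()

"Script folder: $scriptPath"
"Elapsed: {0:hh\:mm\:ss\.fff}" -f $stopwatch.Elapsed
```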

The Excel spreadsheet is created with Office automation (COM). Setting the DisplayAlerts property to $false suppresses the file-overwrite prompt when the workbook is saved at the end of the script. If the Visible property is set to $true, do not click inside Excel while the script is running. Clicking changes the focus and causes a Windows PowerShell error.

```powershell
$excel = New-Object -ComObject Excel.Application
$excel.Visible = $true         # or $false to keep Excel hidden
$excel.DisplayAlerts = $false  # no overwrite prompt on SaveAs
$workBook = $excel.Workbooks.Add()
```

Next, the environment is initialized. The Get-Content cmdlet reads the computer list and returns each line as an element of an array, as shown here:

```powershell
$computers = Get-Content "$scriptPath\Computers.txt"
$respondingComputers = 0  # Keep count of responding computers.
```
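One caveat applies when the list ends up with a single name. In that case, PowerShell returns a lone string instead of an array. In Windows PowerShell 2.0, a string has no Count property, so a computer count can come out empty.

The following minimal sketch uses a throwaway file in the temp folder. The file name Computers-demo.txt is made up for illustration. The sketch trims entries, drops blank lines, and removes duplicates. It then wraps the result in @() so that it is always an array. Note that Sort-Object -Unique also sorts the names, so use it only if the order of the list does not matter to you.

```powershell
# Sketch: a throwaway list with stray spaces, a blank line, and a duplicate.
$listPath = Join-Path ([System.IO.Path]::GetTempPath()) 'Computers-demo.txt'
Set-Content -Path $listPath -Value ' SERVER01 ', '', 'SERVER01' -Encoding ascii

# Trim each line, drop blank lines, and remove duplicates (this also sorts the names).
$cleaned = Get-Content $listPath |
    ForEach-Object { $_.Trim() } |
    Where-Object { $_ } |
    Sort-Object -Unique
$cleaned.GetType().Name   # String: a single result is not wrapped in an array

# @() always produces an array, so .Count and foreach behave the same for one or many names.
$computers = @($cleaned)
$computers.Count          # 1

Remove-Item -Path $listPath
```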

The properties returned by a WMI query are not necessarily in the desired order. To simplify the layout, variables that will be used to define the columns for the data in Excel are initialized here. Notice the service Name and StartName are in the first two columns. The presentation order can be rearranged based on specific requirements.

```powershell
$mainHeaderRow = 3
$firstDataRow = 4
$columnName = 01
$columnStartName = 02
$columnDisplayName = 03
# Some properties removed for brevity.
$columnSystemName = 24
$columnTagId = 25
$columnTotalSessions = 26
$columnWaitHint = 27
```
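With 27 columns, it is easy to misnumber one variable. As an alternative, the following minimal sketch derives the column numbers from a single ordered list of Win32_Service property names, so reordering the report means reordering one list. Only a subset of the properties is listed. The variable names are hypothetical, and [ordered] requires PowerShell 3.0 or later.

```powershell
# Sketch: one ordered list of Win32_Service property names drives the column layout.
# Only a subset of the properties is listed here.
$propertyOrder = 'Name', 'StartName', 'DisplayName', 'State', 'StartMode', 'PathName', 'SystemName'

# Map each property name to its 1-based Excel column number.
$column = [ordered]@{}
for ($i = 0; $i -lt $propertyOrder.Count; $i++) {
    $column[$propertyOrder[$i]] = $i + 1
}

$column['StartName']   # 2
$column['SystemName']  # 7
```

Inside the service loop, each cell could then be written with a loop such as `foreach ($p in $propertyOrder) { $computerSheet.Cells.Item($row, $column[$p]) = $service.$p }`.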

Next, the worksheets are named and headers are added. The first sheet holds statistics. The second is the core of this report, where all the exceptions are recorded: these are the accounts that the auditors will want you to manage.

```powershell
# Older Excel versions create three sheets per new workbook, newer ones only one.
# Make sure exactly two sheets exist before naming them.
while ($workBook.Worksheets.Count -lt 2) { $workBook.Worksheets.Add() | Out-Null }
while ($workBook.Worksheets.Count -gt 2) { $workBook.Worksheets.Item($workBook.Worksheets.Count).Delete() | Out-Null }

$workBook.Worksheets.Item(1).Name = "Info"  # Sheet indexes start at 1.
$workBook.Worksheets.Item(2).Name = "Exceptions"

$infoSheet = $workBook.Worksheets.Item("Info")
$infoSheet.Cells.Item(1,1).Value2 = "Nb of computers:"
$infoSheet.Cells.Item(1,2).Value2 = @($computers).Count  # @() also counts a one-line list correctly
$infoSheet.Cells.Item(1,2).HorizontalAlignment = -4131   # xlLeft

$exceptionsSheet = $workBook.Worksheets.Item("Exceptions")
$exceptionsSheet.Cells.Item($mainHeaderRow,1) = "SystemName"
$exceptionsSheet.Cells.Item($mainHeaderRow,2) = "DisplayName"
$exceptionsSheet.Cells.Item($mainHeaderRow,3) = "StartName"
$exceptionsSheet.Cells.Item($mainHeaderRow,1).EntireRow.Font.Bold = $true
```

A nice trick of the trade is to write a placeholder message that may later be overwritten. Here, the message sits in the first data row of the Exceptions sheet. If an exception is found, it is written to that same row and replaces the message.

```powershell
# The next line will be overwritten if exceptions are found.
$exceptionsSheet.Cells.Item($firstDataRow,1) = "No exceptions found"
$exceptionRow = $firstDataRow  # Next free row on the Exceptions sheet.
```

The script can now process each computer. For each one, a new worksheet is added and renamed with the computer name, a header row is written, WMI retrieves the service data, and the data is written to the worksheet. The current worksheet is activated with $computerSheet.select(). This is not required, but it shows some activity on the screen. Again, do not click in the spreadsheet, because that changes the selected cell and generates an error.

```powershell
forEach ($computerName in $computers)
{
    $computerName = $computerName.Trim()
    $workBook.Worksheets.Add() | Out-Null
    "Creating sheet for $computerName"
    $workBook.Worksheets.Item(1).Name = $computerName
    $computerSheet = $workBook.Worksheets.Item($computerName)
    $computerSheet.Select()  # Show some activity on screen.
    $error.Clear()
    $services = Get-WmiObject Win32_Service -ComputerName $computerName
```

The script performs very basic error handling. If no errors are found by the WMI query, data is written to the spreadsheet; otherwise, the error is logged in a text file. The order in which the data is written is irrelevant because variables are used to indicate in which column each field is being written.

```powershell
    if ($error.Count -eq 0)
    {
        Write-Host "Computer $computerName is responding." -ForegroundColor Green
        $row = $firstDataRow

        # Write headers
        $computerSheet.Cells.Item($mainHeaderRow,$columnCaption) = "Caption"
        # Some properties removed for brevity.
        $computerSheet.Cells.Item($mainHeaderRow,$columnWaitHint) = "WaitHint"
        $computerSheet.Cells.Item($mainHeaderRow,1).EntireRow.Font.Bold = $true

        forEach ($service in $services)
        {
            $service.DisplayName  # Echo progress to the console.
            $computerSheet.Cells.Item($row,$columnAcceptStop) = $service.AcceptStop
            $computerSheet.Cells.Item($row,$columnCaption) = $service.Caption
            $computerSheet.Cells.Item($row,$columnCheckPoint) = $service.CheckPoint
            # Some properties removed for brevity.
            $computerSheet.Cells.Item($row,$columnWaitHint) = $service.WaitHint
            $row++
```

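The following section is where the crucial action occurs. Here, an if statement identifies services that do not run under one of the built-in accounts (LocalSystem, LocalService, or NetworkService), and each of them is written to the Exceptions sheet. Because -notmatch is a regular-expression test, a name such as NT AUTHORITY\LocalService also counts as built-in.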

```powershell
            ################################################
            # EXCEPTION SECTION
            # To be customized based on your criteria
            ################################################
            if ( $service.StartName -notmatch "LocalService" `
                -and $service.StartName -notmatch "Local Service" `
                -and $service.StartName -notmatch "NetworkService" `
                -and $service.StartName -notmatch "Network Service" `
                -and $service.StartName -notmatch "LocalSystem" `
                -and $service.StartName -notmatch "Local System")
            {
                $exceptionsSheet.Cells.Item($exceptionRow,1) = $service.SystemName
                $exceptionsSheet.Cells.Item($exceptionRow,2) = $service.DisplayName
                $exceptionsSheet.Cells.Item($exceptionRow,3) = $service.StartName
                $exceptionRow++
            } # if ($service.StartName ...
        } # forEach ($service in $services)
```
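The -notmatch chain does its job, but it is a substring test. An account such as CONTOSO\LocalSystemBackup would slip through as "built-in", and a service with an empty StartName would be reported, because $null -notmatch "x" is true. The following minimal sketch is an alternative that normalizes the name and requires an exact match against the built-in accounts. The function name Test-NonStandardServiceAccount is hypothetical. Treating an empty StartName as standard is an assumption you may want to reverse.

```powershell
# Hypothetical helper: returns $true when a service's StartName is NOT one of
# the built-in service accounts.
function Test-NonStandardServiceAccount {
    param([string]$StartName)

    # Assumption: an empty StartName is not reported (the -notmatch chain would report it).
    if ([string]::IsNullOrWhiteSpace($StartName)) { return $false }

    # Drop a leading "NT AUTHORITY\" or ".\" and all spaces, so that
    # "NT AUTHORITY\Local Service" and "LocalService" compare equal.
    $normalized = ($StartName -replace '^(NT AUTHORITY\\|\.\\)', '') -replace '\s', ''

    # -contains is case-insensitive and needs a whole-name match, not a substring.
    $builtIn = 'LocalSystem', 'LocalService', 'NetworkService'
    return -not ($builtIn -contains $normalized)
}

Test-NonStandardServiceAccount 'NT AUTHORITY\Network Service'  # False
Test-NonStandardServiceAccount 'CONTOSO\LocalSystemBackup'     # True
```

In the script, the exception test would then become `if (Test-NonStandardServiceAccount $service.StartName)`.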

Then, the current spreadsheet is formatted and the responding computer counter is incremented.

```powershell
        $computerSheet.UsedRange.EntireColumn.AutoFit() | Out-Null
        $respondingComputers++
    }
```

If the remote computer does not respond, a note is written on its worksheet, the computer is pinged with Test-Connection, and the details are appended to a log file named Unresponsive computers in the script folder.

```powershell
    else # if ($error.Count -eq 0)
    {
        $computerSheet.Cells.Item($firstDataRow,1) = "Computer $computerName did not respond to WMI query."
        $computerSheet.Cells.Item($firstDataRow+1,1) = "See $($scriptPath)\Unresponsive computers for additional information"
        $error.Clear()
        Test-Connection -ComputerName $computerName -Verbose
        Add-Content -Path "$($scriptPath)\Unresponsive computers" -Encoding Ascii `
            -Value "$computerName did not respond to win32_pingStatus"
        Add-Content -Path "$($scriptPath)\Unresponsive computers" -Encoding Ascii `
            -Value $error[0]
        Add-Content -Path "$($scriptPath)\Unresponsive computers" -Encoding Ascii `
            -Value "----------------------------------------------------"
        Write-Host "Computer $computerName is not responding. Moving to next computer in the list." `
            -ForegroundColor Red
    } # if ($error.Count -eq 0)
} # forEach computer
```
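The $error check treats any error raised during the query as a non-responding computer. A common alternative is to make the query fail hard with -ErrorAction Stop and handle it in try/catch, collecting one result object per computer.

The following minimal sketch makes several assumptions. Invoke-ServiceInventory and the fake query are hypothetical stand-ins, so the pattern runs without remote computers. [pscustomobject] needs PowerShell 3.0 or later. On PowerShell 6 and later, Get-WmiObject is not available, and Get-CimInstance -ClassName Win32_Service -ComputerName would take its place.

```powershell
# Hypothetical helper: runs $Query against each computer and records success or failure.
function Invoke-ServiceInventory {
    param(
        [string[]]$ComputerName,
        [scriptblock]$Query
    )
    foreach ($name in $ComputerName) {
        try {
            # A terminating error thrown inside $Query (for example by -ErrorAction Stop)
            # jumps straight to the catch block.
            $data = & $Query $name
            [pscustomobject]@{ Computer = $name; Responding = $true; Data = $data; Error = $null }
        }
        catch {
            [pscustomobject]@{ Computer = $name; Responding = $false; Data = $null; Error = $_.Exception.Message }
        }
    }
}

# Stand-in for: { param($n) Get-WmiObject Win32_Service -ComputerName $n -ErrorAction Stop }
$fakeQuery = {
    param($n)
    if ($n -eq 'OFFLINE01') { throw "RPC server unavailable on $n" }
    'Spooler', 'W32Time'
}

$results = Invoke-ServiceInventory -ComputerName 'SERVER01', 'OFFLINE01' -Query $fakeQuery
$results | Format-Table Computer, Responding, Error
```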

At this point, the script is almost done. The information sheet needs to be updated with our stats, and a few things need to be formatted and cleaned up before exiting.

```powershell
$exceptionsSheet.UsedRange.EntireColumn.AutoFit() | Out-Null
$infoSheet.Cells.Item(2,1).Value2 = "Nb of responding computers:"
$infoSheet.Cells.Item(2,2).Value2 = $respondingComputers
$infoSheet.Cells.Item(2,2).HorizontalAlignment = -4131  # xlLeft
$infoSheet.UsedRange.EntireColumn.AutoFit() | Out-Null
$workBook.SaveAs("$($scriptPath)\services.xlsx")
$workBook.Close() | Out-Null
$excel.Quit()
```

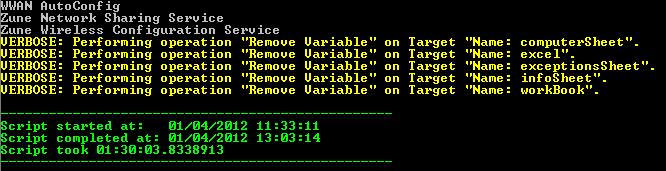

COM objects require special attention; otherwise, they stay in memory and the Excel process does not exit after the script ends. Some coders keep track of every object that they create and release them one by one. I prefer a generic approach such as:

```powershell
# Remove all COM-related variables
Get-Variable -Scope Script |
    Where-Object { $_.Value.pstypenames -contains 'System.__ComObject' } |
    Remove-Variable -Verbose
[GC]::Collect()                   # .NET garbage collection
[GC]::WaitForPendingFinalizers()  # Wait for finalizers so the COM references are released
```

Now that the script is done, let us see how long it takes to run. I always do benchmarks with a limited number of computers, and then estimate how long it will take to gather data from all the targeted computers.

```powershell
$endTime = Get-Date
""  # blank line
Write-Host "-------------------------------------------------" -ForegroundColor Green
Write-Host "Script started at: $startTime" -ForegroundColor Green
Write-Host "Script completed at: $endTime" -ForegroundColor Green
Write-Host "Script took $($endTime - $startTime)" -ForegroundColor Green
Write-Host "-------------------------------------------------" -ForegroundColor Green
""  # blank line
```

A test run in my home lab gathered data from 50 computers in 90 minutes. That is less than two minutes per computer. Not bad, considering we have a nice Excel spreadsheet as a final report.
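The benchmark-then-extrapolate step can also be scripted. The following minimal sketch uses a hypothetical Get-RunTimeEstimate helper. The 1,000-computer target is only an example. The 50 computers in 90 minutes comes from the test run above.

```powershell
# Hypothetical helper: extrapolate a full run from a benchmark on a sample of computers.
function Get-RunTimeEstimate {
    param(
        [TimeSpan]$SampleDuration,
        [int]$SampleCount,
        [int]$TotalCount
    )
    # Average time per computer in the sample, in ticks (100-ns units).
    $perComputer = [TimeSpan]::FromTicks([long]($SampleDuration.Ticks / $SampleCount))
    [pscustomobject]@{
        PerComputer = $perComputer
        Estimated   = [TimeSpan]::FromTicks($perComputer.Ticks * $TotalCount)
    }
}

# 50 computers in 90 minutes, extrapolated to an example target of 1,000 computers.
$estimate = Get-RunTimeEstimate -SampleDuration ([TimeSpan]::FromMinutes(90)) -SampleCount 50 -TotalCount 1000
$estimate.PerComputer.ToString()   # 00:01:48
$estimate.Estimated.ToString()     # 1.06:00:00 (30 hours)
```

At 1 minute 48 seconds per computer, a linear script needs more than a day for 1,000 computers. That is why capacity matters as much as simplicity.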

Having an inventory is the first step in closing one of the barn doors.

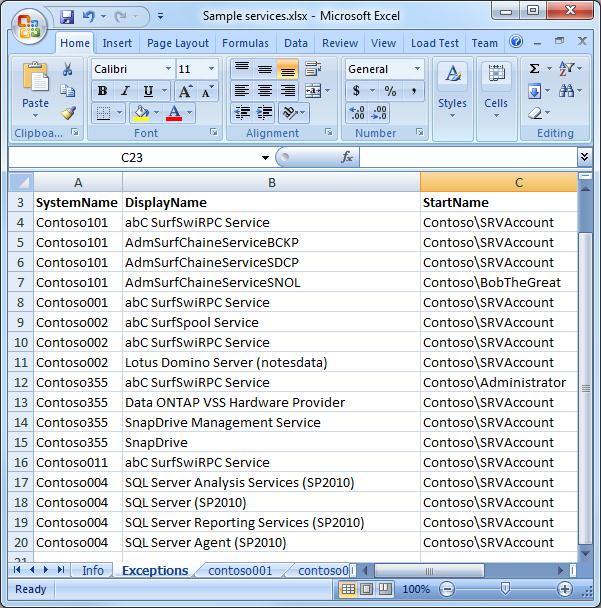

The next screen capture shows all the services that are using nonstandard service accounts.

This information helps you evaluate the impact of changing the passwords of the SRVAccount and BobTheGreat accounts before the auditors start asking. It is also a good opportunity to determine whether that xyz service really needs to run as Administrator.

I have run this script in some customer environments where it took more than 15 minutes per computer, which can be a serious limiting factor. Tomorrow, we will explore how to cut the run time of this script dramatically, from 90 minutes to less than three minutes.

Remember, all the scripts and files for this and for the next three blogs in the series can be found in the Script Repository. Simply open the zip file.

~Georges

Thank you, Georges, for the first installment of an awesome week of Windows PowerShell goodness.

I invite you to follow me on Twitter and Facebook. If you have any questions, send email to me at scripter@microsoft.com, or post your questions on the Official Scripting Guys Forum. See you tomorrow. Until then, peace.

Ed Wilson, Microsoft Scripting Guy
