Enabling DevOps in a Hybrid Cloud Environment at DoD

In this post, Premier Field Engineer JJ Jacob explains how the Department of Defense (DoD) can meet its code deployment policies with Azure DevOps.

As a Premier Field Engineer working in the DoD space, I have been part of a team charged with the application modernization of a legacy scheduling application. The goal is to rewrite a pilot legacy application, modernize it for the cloud, and take full advantage of Azure DevOps to enable agility in planning, development, build, and deployment. Through this effort, we aim to showcase how a hybrid cloud environment can deliver the agile, cost-effective, and efficient application lifecycle management that many of the DoD’s legacy applications require.

The planning, coding, build/test, and deployment of the pilot application have been staged on the Commercial Cloud using Azure DevOps, so that our planning and development efforts could proceed unrestrained by the resource constraints typically experienced on DoD premises. Features are planned, prioritized for sprints, and delivered using Agile Planning, TFVC (Team Foundation Version Control) repos, and Continuous Integration and Continuous Delivery with DevTest capabilities. This enabled us to develop features at a rapid pace and get early and frequent feedback from our customers.

One major requirement has been that the application would eventually be hosted on-prem at the DoD facility, on both the unclassified and classified networks. This presented a challenge: how could systems developed in the Commercial Cloud be brought into the highly secure unclassified on-prem environment, and thereafter be promoted to the even more secure classified network?

We examined several options that Azure afforded us. For one thing, we could not develop the pilot application on a Microsoft Azure Government (MAG) subscription. This was due to budget constraints, as well as the unavailability in MAG of capabilities offered in Commercial, such as DevOps, which is key to our application modernization pilot. Other options, such as ExpressRoute, were considered but could not be accommodated, again because of budget constraints.

As we drilled down into the requirements for transferring code from the Commercial Cloud to the unclassified network at the DoD facility, we settled on the following key secure data transfer requirements:

- Code can be pulled into a higher impact level (IL) environment from a lower IL environment. It cannot be pushed from a lower IL environment into a higher one.

- Such a pull must happen over a secure connection.
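The two requirements above can be expressed as a simple check. The sketch below is a hypothetical illustration, not part of Azure DevOps or any DoD tooling: the `Transfer` type, the `is_transfer_allowed` function, and the numeric IL values in the usage note are all assumptions made for the example.

```python
from dataclasses import dataclass
from urllib.parse import urlparse


@dataclass(frozen=True)
class Transfer:
    """Hypothetical description of one code transfer between two environments."""
    source_il: int  # impact level of the environment that holds the code
    dest_il: int    # impact level of the environment that receives the code
    initiator: str  # "dest" when the receiving side pulls, "source" for a push
    url: str        # endpoint the initiating side connects to


def is_transfer_allowed(t: Transfer) -> bool:
    """Apply the two rules: pull-only into a higher IL, over a secure connection."""
    # Rule 1a: this sketch only permits the direction the requirements describe,
    # i.e. moving code up to an equal or higher impact level.
    if t.dest_il < t.source_il:
        return False
    # Rule 1b: the higher-IL side must initiate the connection (a pull, never a push).
    if t.initiator != "dest":
        return False
    # Rule 2: the pull must run over a secure (TLS) connection.
    return urlparse(t.url).scheme == "https"
```

For example, `is_transfer_allowed(Transfer(2, 4, "dest", "https://dev.azure.com/example-org"))` returns `True` for a pull from the IL2 Commercial Cloud into a hypothetical IL4 environment, while the same transfer initiated by the source (a push) or made over plain `http` is rejected.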

We have fully implemented DevOps orchestration on the Commercial Cloud, using Azure DevOps for Continuous Integration (CI) and Continuous Deployment (CD) with only Microsoft-hosted capabilities, without needing to set up any infrastructure for it. The challenge was how to transfer feature-ready, tested code from the Commercial Cloud (IL2) into the unclassified network, which has a higher IL requirement, without resorting to sneakernet.

Thankfully, the Azure Pipelines feature in Azure DevOps supports both of the following options:

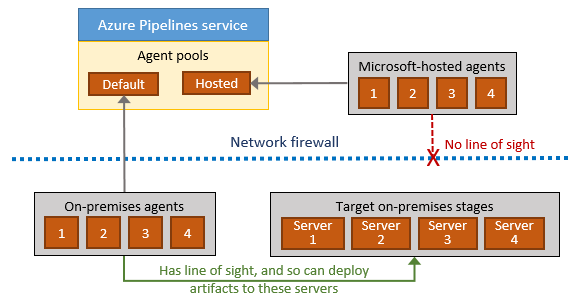

- Building and deploying using a Microsoft-hosted agent. With Microsoft-hosted agents, maintenance and upgrades are taken care of for you. Each time you run a pipeline, you get a fresh virtual machine, which is discarded after one use. Like self-hosted agents, Microsoft-hosted agents can run jobs directly on the VM or in a container.

- Running build and deployment jobs using a self-hosted agent. You can use self-hosted agents in Azure Pipelines or Team Foundation Server (now Azure DevOps Server). Self-hosted agents give you more control to install the dependent software needed for your builds and deployments, and to ensure that security compliance standards are met. Also, machine-level caches and configuration persist from run to run, which can boost speed.

Together, these options meet our needs: Microsoft-hosted agents support rapid, continuous development and delivery of features on the Commercial Cloud, while self-hosted agents on-prem let us pull feature-ready, tested code into the secure unclassified network securely and without any manual steps.

Self-hosted on-prem agents meet the following requirements:

- They are compliant with the Security Technical Implementation Guides (STIGs) required for the given DoD environment. This is enforced through the DoD agency’s Group Policy Objects (GPOs), so it is the customer’s responsibility to maintain the required security posture.

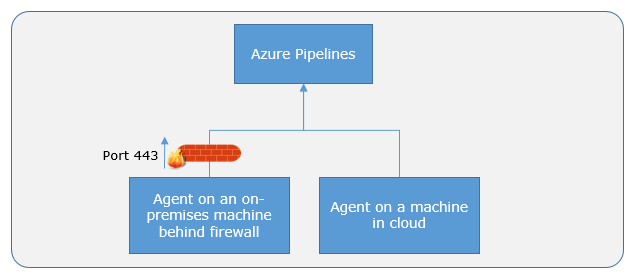

- All messages from the agent to Azure Pipelines or TFS travel over HTTP or HTTPS, depending on how the agent is configured, and the agent pulls its work rather than having it pushed to it. When configured against an HTTPS URL, the on-prem self-hosted agent communicates over port 443, which gives us the secure connection our requirements call for.

- The pull model is further enhanced by setting up continuous integration, so that no manual step is needed to pull the code onto the on-prem agent. A continuous integration build definition that uses the self-hosted agent is triggered by check-ins and kicks off the build process, which at the outset includes pulling down the code from the repo. This meets our basic requirement of pulling code into a higher IL environment from a lower one, such as the Azure Commercial Cloud (IL2).
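The pull model described above can be sketched as a polling loop in which the agent always asks for work and nothing is ever pushed to it. This is a simplified, hypothetical model: the real Azure Pipelines agent uses its own internal protocol, which is not reproduced here, and `fetch_job` and `run_job` are placeholders you would supply (for instance, an outbound HTTPS request, and a step that pulls sources from the repo before building).

```python
import time
from typing import Callable, Optional


def run_agent(
    fetch_job: Callable[[], Optional[dict]],
    run_job: Callable[[dict], None],
    max_polls: int,
    idle_wait_s: float = 0.0,
) -> int:
    """Hypothetical pull loop: the agent requests work; it never accepts inbound pushes.

    Returns the number of jobs that were run.
    """
    completed = 0
    for _ in range(max_polls):
        # Outbound request initiated by the agent (in practice, HTTPS on port 443).
        job = fetch_job()
        if job is None:
            # Nothing queued yet, e.g. no new check-in; wait and ask again.
            time.sleep(idle_wait_s)
            continue
        # A queued CI job: pull the code from the repo, then build and test it.
        run_job(job)
        completed += 1
    return completed
```

In this sketch the higher-IL side only ever makes outbound requests, so the firewall needs to allow outbound traffic on port 443 but no inbound connections to the agent.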

These on-prem agents need internet connectivity through the firewall to the Azure Pipelines service in the Azure Commercial Cloud, as depicted in the following:

There are some special considerations for setting up on-premises agents, especially in DoD environments.

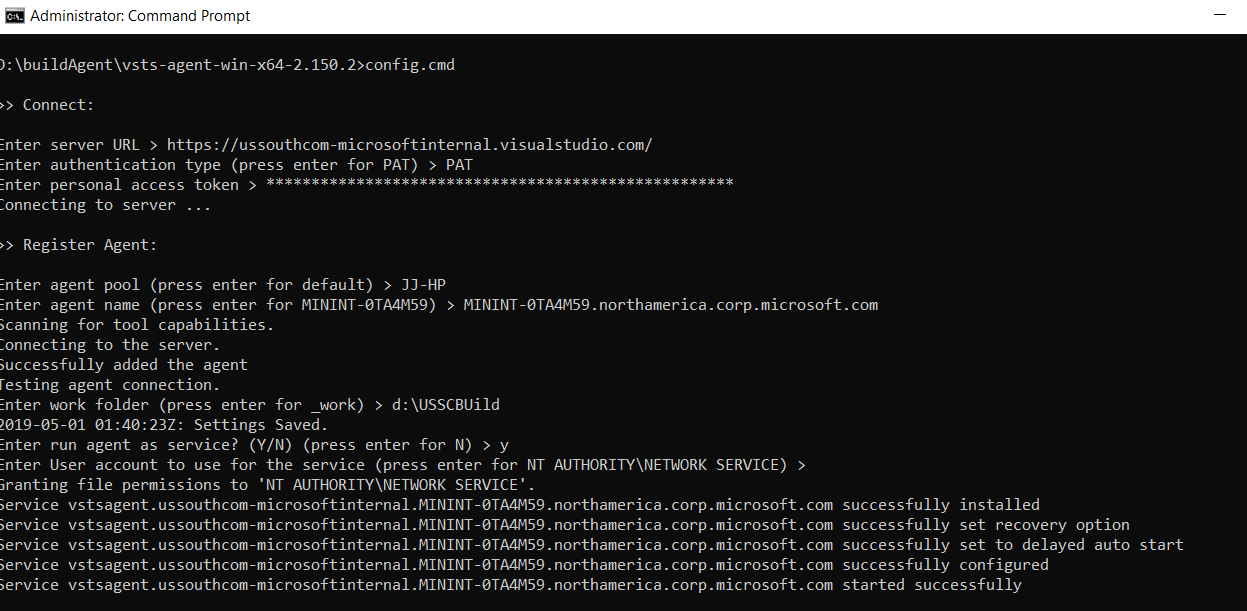

- The build agent needs to be assigned a public IP address that is whitelisted, so that traffic coming through is not blocked.

- The build agent has an A record, a value required when setting up the build agent on the on-prem machine(s).

- A Personal Access Token (PAT) must be established to connect an agent with Azure Pipelines or TFS 2017 and newer. PAT is the only scheme that works with Azure Pipelines, and it is used only when registering the agent, not for subsequent communication.
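Before registering an agent, it can help to confirm that the on-prem machine can reach the service through the firewall on port 443, and then to register it unattended with the PAT. The sketch below assumes Python 3 on the agent machine; `tls_port_reachable` and `agent_config_args` are hypothetical helpers, while the `--unattended`, `--url`, `--auth pat`, `--token`, `--pool` and `--agent` options are those of the Azure Pipelines agent's configuration script (`config.cmd` on Windows, `config.sh` on Linux and macOS). The organization URL, pool, and agent names are placeholders.

```python
import socket
import ssl
from typing import List
from urllib.parse import urlparse


def tls_port_reachable(host: str, port: int = 443, timeout: float = 5.0) -> bool:
    """Return True if an outbound TLS handshake to host:port succeeds."""
    context = ssl.create_default_context()
    try:
        with socket.create_connection((host, port), timeout=timeout) as sock:
            with context.wrap_socket(sock, server_hostname=host):
                return True
    except OSError:
        # Covers refused or firewalled connections, timeouts, DNS and TLS failures.
        return False


def agent_config_args(
    org_url: str,
    pat: str,
    pool: str,
    agent_name: str,
    script: str = "config.cmd",  # use "./config.sh" on Linux or macOS
) -> List[str]:
    """Build the argument list for an unattended, PAT-based agent registration."""
    # Require HTTPS so that agent traffic goes over port 443.
    if urlparse(org_url).scheme != "https":
        raise ValueError("org_url must use https so traffic goes over port 443")
    return [
        script,
        "--unattended",
        "--url", org_url,
        "--auth", "pat",
        "--token", pat,  # needed only for this registration step
        "--pool", pool,
        "--agent", agent_name,
    ]
```

A typical run would call `tls_port_reachable(urlparse(org_url).hostname)` and, if it returns `True`, pass the argument list to `subprocess.run(..., check=True)` from the agent's extracted directory. Since the PAT is used only at registration time, nothing in this sketch needs to keep it afterwards.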

The following illustrates where the above are needed for configuring an on-prem self-hosted agent:

Creating on-prem self-hosted agents in DoD environments that enforce higher IL requirements lets us solve the problem of transferring code developed on Commercial Azure without manual intervention, over secure port 443, and at no additional cost to a given subscription.

Additionally, it lets us develop and release code continually in a hybrid environment: feature-ready, tested code can be developed without resource constraints on the Commercial Azure cloud and then tested further under the more secure constraints of on-prem build/release environments such as the unclassified network, all without manual steps.

This model can be further enhanced by orchestrating a full DevOps lifecycle on an on-prem Azure DevOps Server instance that focuses specifically on user acceptance testing (UAT) before deployment into the higher-security unclassified and classified networks. By this point, the application has largely completed feature-readiness testing, and the focus is on ensuring that it works within the constraints of the DoD requirements. This lets us leverage the best of both worlds in a hybrid environment consisting of the Commercial Azure cloud using Azure DevOps and the customer-specific on-prem DevOps environment using Azure DevOps Server.
