What’s New For Parallelism in .NET 4.5

.NET 4 and Visual Studio 2010 saw the introduction of a wide range of new support for parallelism: the Task Parallel Library (TPL), Parallel LINQ (PLINQ), new synchronization and coordination primitives and collections (e.g. ConcurrentDictionary), an improved ThreadPool for handling parallel workloads, new debugger windows, new concurrency visualizations, and more. Since then, we’ve been hard at work on the .NET Framework 4.5 and Visual Studio 11. Here’s a glimpse into the Developer Previews released this week.

Better Performance

Task Parallel Library

More and more, TPL is becoming the foundation for all parallelism, concurrency, and asynchrony in the .NET Framework. That means it needs to be fast… really fast. Performance in .NET 4 is already good, but a lot of effort was spent in this release improving the performance of TPL, such that just by upgrading to .NET 4.5, important workloads will get faster, with no code changes or even recompilation required. As just one example, consider a long chain of tasks, with one task continuing off another. We want to time how long it takes to set up this chain:

    using System;
    using System.Diagnostics;
    using System.Threading.Tasks;

    class Program
    {
        static void Main()
        {
            var sw = new Stopwatch();
            while (true)
            {
                // Start each iteration from a clean heap so GC noise doesn't skew the timing.
                GC.Collect();
                GC.WaitForPendingFinalizers();
                GC.Collect();

                var tcs = new TaskCompletionSource<object>();
                var t = tcs.Task;

                // Time only the setup of the continuation chain; tcs is never completed.
                sw.Restart();
                for (int i = 0; i < 1000000; i++)
                    t = t.ContinueWith(_ => (object)null);
                var elapsed = sw.Elapsed;

                GC.KeepAlive(tcs);
                Console.WriteLine(elapsed);
            }
        }
    }

Just by upgrading from .NET 4 to .NET 4.5, on the machine on which I’m writing this blog post, this code runs 400% faster! This is of course a microbenchmark that’s purely measuring a particular kind of overhead, but nevertheless it should give you a glimpse into the kind of improvements that exist in the runtime.

Parallel LINQ (PLINQ)

We’ve also invested effort in improving the performance of PLINQ. In particular, in .NET 4 there were a fair number of queries that would fall back to running sequentially, due to a variety of internal implementation details that caused the system to determine that running in parallel would actually run more slowly than running sequentially. We’ve been able to overcome many of those issues, such that more queries in .NET 4.5 will now automatically run in parallel. A prime example of this is a query that involves an OrderBy followed by a Take: in .NET 4, by default that query would run sequentially, and now in .NET 4.5 we’re able to obtain quality speedups for that same construction.
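As a rough illustration of the OrderBy-then-Take shape described above, here is a minimal sketch. The `TopN` class and its `Smallest` method are hypothetical names made up for this example; only `AsParallel`, `OrderBy`, `Take`, and `ToArray` are PLINQ operators. Whether a given query actually runs in parallel, and how much faster it gets, depends on the runtime version and the data.

```csharp
using System;
using System.Linq;

// Hypothetical helper, for illustration only.
static class TopN
{
    // Returns the 'count' smallest values in ascending order.
    // OrderBy followed by Take is the query shape discussed in the text.
    public static int[] Smallest(int[] source, int count)
    {
        return source
            .AsParallel()
            .OrderBy(x => x)
            .Take(count)
            .ToArray();
    }
}
```

Calling `TopN.Smallest(data, 10)` on a large array is a simple way to compare timings between the two framework versions on your own machine.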

Coordination Data Structures

Not to be outshone, our concurrent collections and synchronization primitives have also been improved. This again follows the principle that you don’t need to make any code changes: you just upgrade and your code becomes more efficient. A good example of this is updating the contents of a ConcurrentDictionary<TKey,TValue>. We’ve optimized some common cases to involve less allocation and synchronization. Consider the following code, which continually updates the same entry in the dictionary to have a new value:

    using System;
    using System.Collections.Concurrent;
    using System.Diagnostics;

    class Program
    {
        static void Main(string[] args)
        {
            while (true)
            {
                var cd = new ConcurrentDictionary<int, int>();
                var sw = Stopwatch.StartNew();

                cd.TryAdd(42, 0);
                for (int i = 1; i < 10000000; i++)
                {
                    // Replace the previous value (i - 1) with the new value (i).
                    cd.TryUpdate(42, i, i - 1);
                }

                Console.WriteLine(sw.Elapsed);
            }
        }
    }

After upgrading my machine to .NET 4.5, this runs 15% faster than it did with .NET 4.

More Control

Partitioning

Most of the feedback we received on .NET 4 was extremely positive. Even so, we continually receive many requests for more control over this or that particular piece of functionality. One request we heard repeatedly was for better built-in control over partitioning. When you give PLINQ or Parallel.ForEach an enumerable to process, they use a default partitioning scheme that employs chunking, so that each time a thread goes back to the enumerable to get more data, it may grab more than one item at a time. While that can often be good for performance, such buffering is not always desirable. For example, if the enumerable represents data that’s being received over a network, it might be more desirable to process a piece of data as soon as it arrives rather than waiting for multiple pieces of data to arrive so that they can be processed in bulk. To address that, we’ve added a new enum to the System.Collections.Concurrent namespace: EnumerablePartitionerOptions. With this enum, you can specify that a partitioner should avoid the default chunking scheme and instead do no buffering at all, e.g.

    Parallel.ForEach(Partitioner.Create(source, EnumerablePartitionerOptions.NoBuffering), item =>
    {
        // … process item
    });

Reductions

Another common request was for more control over performing reductions. The ThreadLocal<T> class enables applications to maintain per-instance, per-thread state, and it exposes a Value instance property that allows a thread to get and set its local value. Often, however, there are situations where you’d like to be able to access the values from all threads, for example if you wanted to perform a reduction across the local values created by all threads involved in a computation. For that, we’ve added the new Values (plural) property, which returns an IEnumerable<T> of all of the local values. This new property can also be used to clean up the thread local data on each involved thread, e.g.

    var resources = new ThreadLocal<BigResource>(
        () => new BigResource(), trackAllValues: true);

    var tasks = inputData
        .Select(i => Task.Run(() => Process(i, resources.Value)))
        .ToArray();
    Task.WaitAll(tasks);

    foreach (var resource in resources.Values) resource.Dispose();
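The snippet above uses Values for cleanup. For the reduction case mentioned in the text, a minimal sketch might look like the following. `ThreadLocalReduction` and `Sum` are hypothetical names for this example; the sketch assumes each thread accumulates into its own local value and that the per-thread partial results are combined only after the loop has finished.

```csharp
using System;
using System.Linq;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical helper, for illustration only.
static class ThreadLocalReduction
{
    public static long Sum(int[] data)
    {
        // trackAllValues: true is required in order to read Values later.
        using (var partial = new ThreadLocal<long>(() => 0L, trackAllValues: true))
        {
            // Each thread adds to its own local total, so no locking is needed here.
            Parallel.ForEach(data, item => { partial.Value += item; });

            // Combine the per-thread partial sums into the final result.
            return partial.Values.Sum();
        }
    }
}
```

Parallel.ForEach also has overloads with built-in thread-local state; the ThreadLocal<T> approach is shown here only because it is the one the text describes.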

Timeouts and Cancellation

Another common request was to be able to cancel operations after a particular time period elapsed. With .NET 4, we introduced CancellationTokenSource and CancellationToken, and with .NET 4.5, we’ve directly integrated timer support into these types. So, if you want to create a CancellationToken that will automatically be canceled after 30 seconds, you can do:

var ct = new CancellationTokenSource(TimeSpan.FromSeconds(30)).Token;
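The same timer support is also available on an existing source through the CancelAfter method. Here is a minimal sketch of using it to bound how long an operation may take; `TimeoutDemo` and `TimesOut` are hypothetical names, and Task.Delay stands in for real cancelable work.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical helper, for illustration only.
static class TimeoutDemo
{
    // Returns true if the simulated work was canceled by the timeout,
    // false if it finished first.
    public static bool TimesOut(TimeSpan work, TimeSpan timeout)
    {
        using (var cts = new CancellationTokenSource())
        {
            // Schedule cancellation on the existing source.
            cts.CancelAfter(timeout);
            try
            {
                // Task.Delay stands in for a real operation that observes the token.
                Task.Delay(work, cts.Token).Wait();
                return false;
            }
            catch (AggregateException ae)
            {
                // Swallow only cancellation; anything else is rethrown by Handle.
                ae.Handle(e => e is OperationCanceledException);
                return true;
            }
        }
    }
}
```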

Task Creation

We’ve also provided more control over task creation. Two new options exist on TaskCreationOptions and TaskContinuationOptions: DenyChildAttach and HideScheduler. These allow you to better author code that interacts with third-party libraries. New ContinueWith overloads also allow you to pass object state into continuations, which is important for languages that don’t support closures, useful as a performance enhancement to avoid closures, and quite helpful as a way to associate additional state with a Task (the state is available from the task’s AsyncState property, and is thus visible in the Parallel Tasks window’s Async State column).
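To make the state-passing overloads concrete, here is a minimal sketch. `ContinuationState` and `Describe` are hypothetical names for this example; the lambda deliberately captures nothing and receives its data through the state argument instead.

```csharp
using System;
using System.Threading.Tasks;

// Hypothetical helper, for illustration only.
static class ContinuationState
{
    public static Task<string> Describe(Task<int> antecedent, string label)
    {
        // 'label' is passed as the state object rather than captured in a closure.
        // It is also exposed afterwards through the continuation's AsyncState property.
        return antecedent.ContinueWith(
            (t, state) => (string)state + "=" + t.Result,
            label);
    }
}
```

With an antecedent that produces 42 and the label "answer", the continuation’s result is the string "answer=42" and its AsyncState is "answer".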

Task Scheduling

Another common need has been more control over task scheduling. An important aspect of TPL is its scheduling abstraction, with all of TPL’s scheduling going through a TaskScheduler. The default TaskScheduler targets the .NET ThreadPool, but custom schedulers can be written to redirect work to arbitrary locations or to process work with various semantics. In .NET 4.5, in addition to the built-in default scheduler and the built-in scheduler for targeting a SynchronizationContext (available through TaskScheduler.FromCurrentSynchronizationContext), we’ve added a new set of schedulers that help to coordinate work in various ways.

The new ConcurrentExclusiveSchedulerPair type provides two TaskScheduler instances exposed from its ConcurrentScheduler and ExclusiveScheduler properties. You can think of these schedulers as together providing the asynchronous equivalent of a reader/writer lock. Tasks scheduled to the concurrent scheduler are able to run as long as there are no executing exclusive tasks. As soon as an exclusive task gets scheduled, no more concurrent tasks will be allowed to run, and once all currently running concurrent tasks have completed, the exclusive tasks will be allowed to run one at a time. The ConcurrentExclusiveSchedulerPair also supports restricting the number of tasks that may run concurrently. With that, you can use just the ConcurrentScheduler to schedule tasks such that only a maximum number will run at any given time. Or you could use just the ExclusiveScheduler to run queued tasks sequentially.
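Here is a minimal sketch of the reader/writer pattern described above. `ReaderWriterScheduling` and `Run` are hypothetical names for this example, and the "work" is just a counter; the point is only that tasks sent to the exclusive scheduler never overlap with each other or with concurrent tasks.

```csharp
using System;
using System.Collections.Generic;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical helper, for illustration only.
static class ReaderWriterScheduling
{
    // Queues 100 "writer" and 100 "reader" tasks and returns the final counter value.
    public static int Run()
    {
        var pair = new ConcurrentExclusiveSchedulerPair();
        var readers = new TaskFactory(pair.ConcurrentScheduler);
        var writers = new TaskFactory(pair.ExclusiveScheduler);

        int counter = 0;
        int reads = 0;
        var tasks = new List<Task>();
        for (int i = 0; i < 100; i++)
        {
            // Exclusive tasks run one at a time, so this plain increment is not racy.
            tasks.Add(writers.StartNew(() => { counter++; }));

            // Concurrent tasks may overlap with each other, so they use Interlocked.
            tasks.Add(readers.StartNew(() => { Interlocked.Increment(ref reads); }));
        }

        Task.WaitAll(tasks.ToArray());
        return counter;
    }
}
```

To cap concurrency instead, the pair can be constructed with an underlying scheduler and a maximum concurrency level, and work can then be scheduled to the ConcurrentScheduler alone.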

Async

Asynchrony has long been known as the way to achieve scalable and responsive systems. And yet asynchronous code has historically been quite difficult to write. No more. With .NET 4.5, C# and Visual Basic have first-class language support for writing asynchronous code, and this support directly integrates with the Task Parallel Library (F# in Visual Studio 2010 already supports integration of TPL with its asynchronous workflows feature). As there have been several CTPs of this functionality already, I’ll avoid discussing this important feature set at length and instead point you to https://msdn.com/async, which provides a plethora of documents and videos on the subject.

In addition to that Async CTP material, however, note that the solution in .NET 4.5 is much more advanced and robust than that which was included in the CTPs. For example, in .NET 4.5, this async support is fully integrated at the runtime level, whereas the CTP was built on top of public surface area. This means that whereas in the CTP all of the library goo necessary to support the compiler-generated code was in a separate AsyncCtpLibrary.dll layered on top of the public .NET 4 surface area, in .NET 4.5 this support is deeply integrated into the .NET Framework, providing for a more efficient and more robust experience.

Similarly, in the CTPs, a large number of extension methods were included in the AsyncCtpLibrary.dll, wrapping existing .NET 4 APIs to provide Task-returning methods that composed well with the language support. In .NET 4.5, such functionality is instead baked right in. For example, in the CTP we exposed extension methods for Stream that added ReadAsync and WriteAsync methods based on wrapping the BeginRead/EndRead and BeginWrite/EndWrite methods on Stream. In .NET 4.5, Stream now has virtual ReadAsync and WriteAsync methods directly on the type, with multiple Stream-derived types overriding this functionality to provide better behavior than just delegating to the BeginRead/EndRead methods (e.g. FileStream.ReadAsync and WriteAsync support cancellation).

Async support is also much more widespread in .NET 4.5 than it was in the CTP. For example, the System.Xml.dll library (which had no async support in the Async CTP) now has dozens of asynchronous methods. ASP.NET has built-in support for writing asynchronous Task-based handlers. WCF has built-in support for writing asynchronous Task-based services. And so on.
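As a small illustration of the built-in Stream methods combined with the language support, here is a minimal sketch of an asynchronous copy loop. `StreamCopy` and `CopyAsync` are hypothetical names for this example (Stream also has its own CopyToAsync); the buffer size is an arbitrary choice.

```csharp
using System;
using System.IO;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical helper, for illustration only.
static class StreamCopy
{
    // Copies 'source' to 'destination' without blocking a thread while I/O is pending.
    // Returns the number of bytes copied.
    public static async Task<long> CopyAsync(
        Stream source, Stream destination, CancellationToken token)
    {
        var buffer = new byte[4096];
        long total = 0;
        int read;

        // ReadAsync completes with 0 once the end of the stream has been reached.
        while ((read = await source.ReadAsync(buffer, 0, buffer.Length, token)) > 0)
        {
            await destination.WriteAsync(buffer, 0, read, token);
            total += read;
        }
        return total;
    }
}
```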

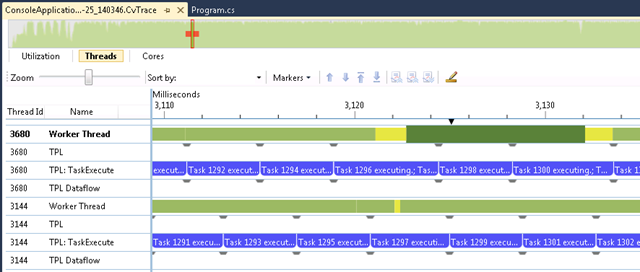

On the tooling front, Visual Studio now has debugging support for asynchronous methods! You can step through your asynchronous methods, stepping over “awaits” just as you would any other expression. You can even step out of asynchronous methods into continuations of that asynchronous method, into other asynchronous methods that were calling it, and so on. TPL is also now capable of outputting ETW events when asynchronous awaits occur, and the Concurrency Visualizer in Visual Studio allows you to visualize such events. This means you can now see events related to tasks and asynchrony along with all of the other useful information displayed in the Concurrency Visualizer Threads view.

Dataflow

You may have heard about a new library System.Threading.Tasks.Dataflow.dll, lovingly referred to as TPL Dataflow. We’ve had several CTPs of the library on .NET 4 available for download from the MSDN DevLabs site, but the library is now built-in with .NET 4.5. It contains powerful functionality for composing applications that involve producer/consumer relationships, that are based on message passing, or that utilize an actor/agent oriented architecture.

As an example of using the dataflow library, consider building a component that wants to accept and process incoming requests asynchronously. Previously, you might have used a queue with a lock, spinning up tasks to process the messages when they arrive, or using a dedicated thread blocked until the next message arrives. With the dataflow library, this kind of functionality is encapsulated in the ActionBlock<TInput>:

    var ab = new ActionBlock<int>(i => ProcessMessage(i));

    // … then later, messages arrive and will be processed by the block
    ab.Post(data1);
    ab.Post(data2);
    ab.Post(data3);

ActionBlock can easily process millions of messages per second, has support for a wide variety of options (e.g. how and where tasks are scheduled, bounding, cancellation, sequential and parallel processing, etc.), and is just one of many “blocks” present in the dataflow library (other blocks include TransformBlock, BufferBlock, BatchBlock, BroadcastBlock, JoinBlock, and more). These blocks can be wired together to create dataflow networks such that data flows through the network, being processed by each block concurrently with the work happening in all of the other blocks. This makes it easy to build components that interact with other components through message passing, with components that do processing asynchronously when new messages are delivered.
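To show what wiring blocks together can look like, here is a minimal sketch of a two-stage network. `SquarePipeline` and `Run` are hypothetical names for this example, and it assumes the System.Threading.Tasks.Dataflow library is referenced by the project. Both blocks use their default options, which process one message at a time.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks.Dataflow;

// Hypothetical helper, for illustration only.
static class SquarePipeline
{
    public static List<int> Run(IEnumerable<int> inputs)
    {
        var results = new List<int>();

        // Stage 1: transform each incoming value.
        var square = new TransformBlock<int, int>(x => x * x);

        // Stage 2: consume the transformed values. With default options an
        // ActionBlock handles one message at a time, so the list needs no lock.
        var collect = new ActionBlock<int>(x => results.Add(x));

        // Link the stages and let completion flow from the first to the second.
        square.LinkTo(collect, new DataflowLinkOptions { PropagateCompletion = true });

        foreach (var input in inputs) square.Post(input);

        // Signal that no more input is coming, then wait for the network to drain.
        square.Complete();
        collect.Completion.Wait();
        return results;
    }
}
```

Blocking on Completion keeps the sketch short; in asynchronous code you would typically await it instead.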

The TPL Dataflow library also integrates well with the aforementioned async support. For example, you can easily set up asynchronous producers and asynchronous consumers with code like the following:

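    var items = new BufferBlock<T>(
        new DataflowBlockOptions { BoundedCapacity = 100 });

    // Producer: SendAsync waits asynchronously while the buffer is full.
    Task.Run(async delegate
    {
        while (true)
        {
            T item = Produce();
            await items.SendAsync(item);
        }
    });

    // Consumer: ReceiveAsync waits asynchronously until an item is available.
    Task.Run(async delegate
    {
        while (true)
        {
            T item = await items.ReceiveAsync();
            Process(item);
        }
    });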

Better Tools

Parallel Watch

While the advancements made in .NET 4.5 for parallel programming are significant, the story goes beyond just the runtime level: Visual Studio has also been significantly enhanced with tools for building better parallelized .NET applications. Visual Studio 2010 saw the introduction of the Parallel Tasks and Parallel Stacks windows. Visual Studio 11 adds the Parallel Watch window, which allows you to easily inspect the results of arbitrary expressions across all relevant threads in your application. This is particularly relevant for constructs like Parallel.For and PLINQ, as you can now see in one window the state of all participating threads.

When dealing with many threads, you can export this tabular data to a CSV file or to an Excel spreadsheet with the click of a button. You can also take advantage of an extensibility point in Parallel Watch to add custom visualizers for perusing this data.

Multi-process Support

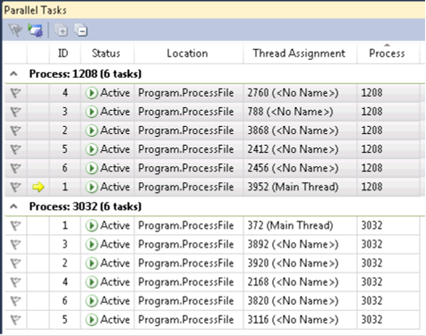

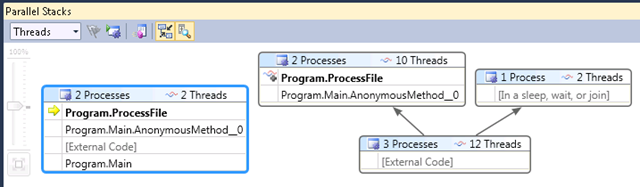

Across the parallelism-related windows in Visual Studio, you can now interact with state across multiple processes. For example, here in the Parallel Tasks window I’m able to see all of the tasks pulled from both processes to which I’ve attached the Visual Studio debugger:

and in Parallel Stacks:

Concurrency Visualizer Markers

Historically, it’s been very challenging to understand what concurrent applications do and how they run. To assist with this, Visual Studio 2010 saw the introduction of the Concurrency Visualizer, an ETW-based profiling tool that helps developers to visualize their CPU utilization, their thread activity, and their logical core activity throughout the lifetime of their application.

For Visual Studio 11, a lot of work has gone into making the Concurrency Visualizer even better. For one thing, it’s now fast, really fast: traces that used to take minutes or longer to process can now be processed in seconds. This makes it more feasible to use the Concurrency Visualizer as part of your normal development cycle, making a change and quickly visualizing its impact on your application’s behavior and performance. In fact, to further help towards this end, you can now quickly launch the Concurrency Visualizer with a simple key combination: Shift+Alt+F5. Give it a try!

Functionally, Concurrency Visualizer has been augmented with a bunch of new and helpful features. For example, it has built-in support for the new EventSource mechanism in the .NET Framework 4.5 for outputting ETW events from your code. The parallel libraries in .NET utilize this mechanism (e.g. events that trace the beginning and ending of parallel loops, events that trace the execution of tasks, events that monitor dataflow block creation, and so on), and as such the Concurrency Visualizer can automatically display such events.
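For a sense of what emitting your own ETW events through EventSource can look like, here is a minimal sketch. The `DemoEventSource` class, its provider name, and its event names are all made up for this example, and whether the Concurrency Visualizer picks up a custom provider like this depends on how the tool is configured.

```csharp
using System;
using System.Diagnostics.Tracing;

// Hypothetical event source, for illustration only.
[EventSource(Name = "Samples-ParallelDemo")]
sealed class DemoEventSource : EventSource
{
    // A single shared instance is the usual way to expose an event source.
    public static readonly DemoEventSource Log = new DemoEventSource();

    // The ID passed to WriteEvent must match the ID declared in the Event attribute.
    [Event(1, Level = EventLevel.Informational)]
    public void WorkStarted(int itemId) { WriteEvent(1, itemId); }

    [Event(2, Level = EventLevel.Informational)]
    public void WorkCompleted(int itemId) { WriteEvent(2, itemId); }
}
```

Code that wants to mark a unit of work would call `DemoEventSource.Log.WorkStarted(id)` before it and `DemoEventSource.Log.WorkCompleted(id)` after it.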

Additionally, a lot of work has gone into the user experience for the Concurrency Visualizer. For instance, the “defender view” at the top of the threads page enables you to see how your current view maps back into the overall timeline of your application’s processing.

What’s Next

There’s still more to come. We’re looking forward to hearing your thoughts and feedback on this Developer Preview release, and in the meantime we’ll continue to be hard at work listening to your feedback and pushing this all forward.
