Background

Recent advances in Machine Learning coupled with the growing ubiquity of instant messaging apps have revived interest in the decades-old concept of chatbots, now more commonly known as ‘bots’.

Microsoft has taken a leading position in this field with the release of the Bot Framework SDK and LUIS language understanding service.

However, developing for natural language can still be daunting when approaching the field for the first time. Below we present an example of integrating an existing natural-language-powered service in order to serve structured, FAQ-style content through a conversational interface.

The Problem

During a recent customer engagement we were asked to explore the possibility of publishing existing FAQ-style content via a conversational interface.

In common with almost every consumer-facing business, our customer’s call centre staff were spending significant amounts of their time answering simple queries whose answers could have been found on the company’s web site. Perhaps serving this content via a conversational interface could give customers a lower-friction way to find the answers to their questions directly, rather than searching a potentially long list of questions looking for a match.

In addition, once made available through the Bot Framework, the same content can be simultaneously published to a wide variety of channels in a manner that makes detailed usage feedback almost trivial to collect.

With only two days to complete the engagement we were concerned that a traditional approach involving building domain-specific language understanding models would prove too labour intensive. We needed to pull a rabbit out of a hat somehow. Step forward QnA Maker.

Outline Solution

The QnA Maker service lets you easily create a knowledge base from existing online and editorial sources. You can tweak and train the responses and when satisfied with their quality, publish to an endpoint that can be accessed from your Bot. The service behind that endpoint takes care of all the complex natural language processing tasks needed to understand the question and returns the best answer it can find in the knowledge base.

Calling the service is done through a super simple HTTP call. You can even call it directly from your browser:

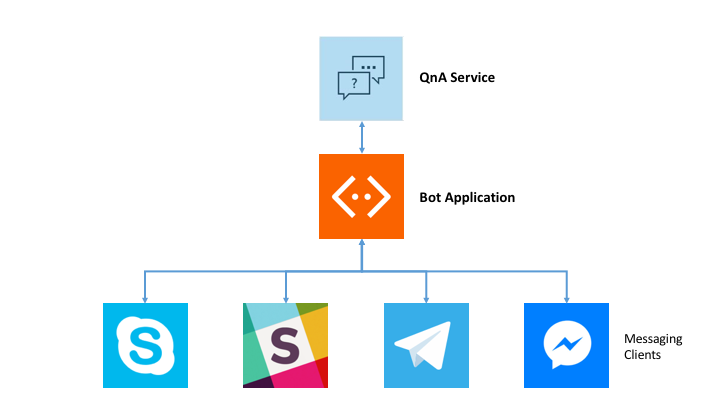

This makes publishing our FAQ from within a Bot application almost trivial, and the top-level architecture is similarly simple.

At its most basic level all we have to do is pass any message the user asks straight through to the QnA Maker service and return the response, after a little processing, directly back to the user.

In Node, our target language for this project, this is very simple:

    var q = querystring.escape('What is cortana?');

    // Using the request library here to simplify calling the QnA service
    request(config.get('qnaUri') + q, function (error, response, body) {
        // The answer arrives as JSON in the response body
        session.send(JSON.parse(body).answer);
    });

where ‘qnaUri’ is our QnA service endpoint and ‘q’ is the verbatim, URI-escaped question.
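
To make the URI-escaping step concrete, here is a minimal sketch of how the request URL is assembled. The helper name `buildQnaUrl` and the example endpoint are assumptions for illustration only; the sketch also assumes the configured endpoint ends with `question=`, as in the sample configuration shown later.

```javascript
const querystring = require('querystring');

// Hypothetical helper (not part of the original app): append the
// URI-escaped question to the endpoint prefix.
function buildQnaUrl(qnaUri, question) {
    return qnaUri + querystring.escape(question);
}

// Illustrative endpoint only; substitute your own published endpoint.
const exampleUri = 'http://example.invalid/GetAnswer?kbId=demo&question=';

// Prints the endpoint followed by "What%20is%20cortana%3F"
console.log(buildQnaUrl(exampleUri, 'What is cortana?'));
```

Escaping matters because characters such as `?`, `&` and `=` in the user's question would otherwise be interpreted as part of the query string.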

Of course, we can add as much extra complexity to the system as we wish (additional commands and logging of user behaviour, perhaps), but at its core the solution really isn’t much more complex than what we’ve already shown. In fact, the near-minimal implementation runs to just around 100 lines. Wow! That’s some heavy lifting the Bot Framework and the QnA Maker are already doing for us.

Implementation

The minimal implementation of the complete QnA bot runs to just around a hundred lines of code:

    'use strict';

    /*
    qnamakerbot - Minimal demo of integrating a bot with the QnA Maker
    (https://qnamaker.botframework.com/).

    Uses the following libraries:
    request (https://www.npmjs.com/package/request) - Simplifies calling HTTP services.
    querystring (https://nodejs.org/api/querystring.html) - Node.js built-in module. Escapes text strings suitable for using in HTTP queries.
    restify (https://www.npmjs.com/package/restify) - Framework to simplify building RESTful APIs
    botbuilder (https://www.npmjs.com/package/botbuilder) - The Microsoft Bot Framework SDK.
    nconf (https://www.npmjs.com/package/nconf) - Reads configuration from the environment, argv and files.
    */

    var request = require('request');
    var querystring = require('querystring');
    var restify = require('restify');
    var botbuilder = require('botbuilder');

    // Config can appear in the environment, argv or in the local config
    // file (don't check this in!!)
    var config = require('nconf').env().argv().file({ file: './localConfig.json' });

    function createChatConnector() {
        // Create the chat connector which will hook us up to the Bot framework
        // servers. This is all very standard stuff.
        // Full details here: https://docs.botframework.com/en-us/node/builder/overview/#navtitle
        let opts = {
            // You generate these two values when you create your bot at
            // https://dev.botframework.com/
            appId: config.get('BOT_APP_ID'),
            appPassword: config.get('BOT_APP_PASSWORD')
        };

        let chatConnector = new botbuilder.ChatConnector(opts);
        let server = restify.createServer();
        server.post('/qna', chatConnector.listen());

        // Serve the static file containing the embedded web control
        // directly from the local filesystem
        server.get(/\/?.*/, restify.serveStatic({
            directory: __dirname + '/html',
            default: 'index.html',
        }));

        server.listen(3978, function () {
            console.log('%s listening to %s', server.name, server.url);
        });

        return chatConnector;
    }

    function qna(q, cb) {
        // Here's where we pass anything the user typed along to the
        // QnA service. Super simple stuff!!
        q = querystring.escape(q);
        request(config.get('qnaUri') + q, function (error, response, body) {
            if (error) {
                cb(error, null);
            }
            else if (response.statusCode !== 200) {
                // Valid response from QnA but it's an error
                // return the response for further processing
                cb(response, null);
            }
            else {
                // All looks OK, the answer is in the body
                cb(null, body);
            }
        });
    }

    function initialiseBot(bot) {
        // Someone set up us the bot :-)
        // Use IntentDialog to watch for messages that match regexes
        let intents = new botbuilder.IntentDialog();

        // Any message not matching the previous intents ends up here
        intents.onDefault((session, args, next) => {
            // Just throw everything into the qna service
            qna(session.message.text, (err, result) => {
                if (err) {
                    console.error(err);
                    session.send('Unfortunately an error occurred. Try again.');
                }
                else {
                    // The QnA returns a JSON: { answer: XXXX, score: XXXX }
                    // where score is a confidence the answer matches the question.
                    // Advanced implementations might log lower scored questions and
                    // answers since they tend to indicate either gaps in the FAQ content
                    // or a model that needs training
                    session.send(JSON.parse(result).answer);
                }
            });
        });

        bot.dialog('/', intents);
    }

    function main() {
        initialiseBot(new botbuilder.UniversalBot(createChatConnector()));
    }

    if (require.main === module) {
        main();
    }
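
The handler above calls `JSON.parse` directly on whatever the service returns. If you want something a little more defensive, a sketch along the following lines might help. The helper name `extractAnswer` is hypothetical and not part of the original app; it only assumes the `{ answer, score }` shape described in the code comments.

```javascript
// Hypothetical helper: turn the raw HTTP body into an answer string,
// or null when the body is not usable.
function extractAnswer(body) {
    let parsed;
    try {
        parsed = JSON.parse(body);
    } catch (e) {
        return null; // body was not valid JSON
    }
    if (parsed === null || typeof parsed !== 'object') {
        return null;
    }
    if (typeof parsed.answer !== 'string' || parsed.answer.trim() === '') {
        return null; // no usable answer text
    }
    return parsed.answer;
}

console.log(extractAnswer('{"answer":"Hello","score":90}')); // Hello
console.log(extractAnswer('<html>Service unavailable</html>')); // null
```

In the default handler you could then send a fallback message whenever the helper returns `null`, rather than letting a parse error escape the callback.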

Setting up the Services

The complete solution requires a couple of moving parts to be up and running in order to successfully process questions from the user.

The Bot App – This is the code we actually write and is responsible for receiving messages from the Bot Framework, hosted in the cloud, and passing them along to the QnA service. When the QnA Service sends us a reply our app simply checks the validity of the response, extracts the answer from the reply body and sends it, via the Bot Framework, back to the user. During development this will run on our dev machine. When we want to go to production we’ll need to find a place to run this permanently in the cloud.

The Bot Framework – Hosted by Microsoft and running in the cloud this is the glue that links together the client applications, such as Skype, Slack, Telegram etc running on the user’s machine to the Bot app running either on our dev machine or in the cloud.

The QnA Service – Hosted by Microsoft and running in the cloud this is the service that will receive the question the user asks from our Bot application, search its knowledge base and return the most likely answer.

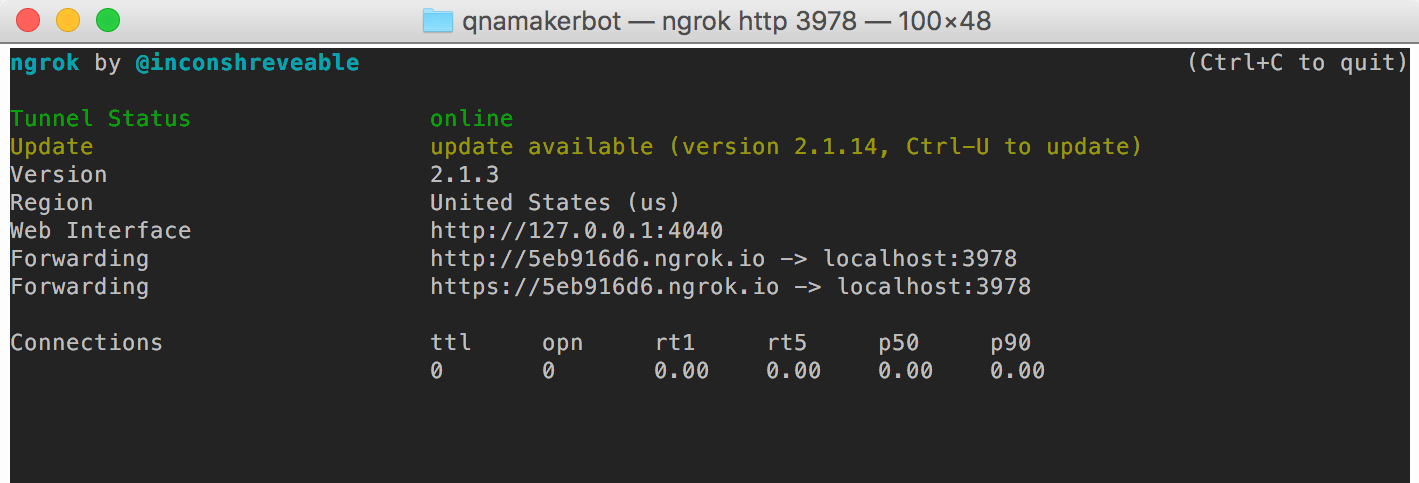

ngrok – ngrok helps us during development by making it easy for the Bot Framework components to connect to the Bot app instance running on our dev machines, despite the dev machine likely sitting behind a firewall.

Setup ngrok

ngrok’s a great tool that allows you to serve content to the public internet directly from your local machine. We’ll be using it to allow the cloud-hosted components of the Bot Framework to call into the Bot application running on your dev machine.

Download ngrok here.

Set it running:

    tobe ~/src/qnamakerbot $ ngrok http 3978

You should see something like this:

The important bit here is the https forwarding address. Copy that URI (it should look something like https://xxxxx.ngrok.io); we’re going to be using it in the next section.

Leave ngrok running throughout the remaining steps.

Register Your Bot

Head over to the Bot Registration Page (MSA/Azure Subscription required) and fill out the details of your bot. Full details can be found here.

Under the Configuration section, there’s a field called ‘messaging endpoint’. This is how the framework is going to contact the bot app running on your machine. The ngrok URI you copied in the previous step goes in here, followed by the /qna path on which the Bot app listens for messages (for example https://xxxxx.ngrok.io/qna). If you restart ngrok at any point the URI will change and you’ll have to update this value. When you come to deploy your bot outside of your own machine you’ll also have to come back here to update this value to the new, public address of your Bot instance.
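
As a small illustration of how the messaging endpoint relates to the code, the app registers its message handler with `server.post('/qna', ...)`, so the endpoint is the forwarding address plus that path. The helper name below is hypothetical and the address is the placeholder used above.

```javascript
// Hypothetical helper: combine the ngrok forwarding address with the
// route the app registers via server.post('/qna', ...).
function messagingEndpoint(forwardingUri) {
    return new URL('/qna', forwardingUri).href;
}

console.log(messagingEndpoint('https://xxxxx.ngrok.io')); // https://xxxxx.ngrok.io/qna
```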

IMPORTANT: When you click the button to generate your Microsoft App ID and password for your bot be sure you save the password somewhere safe. This is the only time you’ll be shown this and if you lose it you’ll have to go through all this setup stuff from the top again.

With everything filled in, hit Register to create all the back-end stuff that will connect the various channels to your Bot application instance. Let’s go ahead and get this hooked up to our actual bot.

Build the Bot

Clone the solution repo qnamakerbot:

    tobe ~/src $ git clone https://github.com/CatalystCode/qnamakerbot.git
    Cloning into 'qnamakerbot'...
    remote: Counting objects: 400, done.
    remote: Compressing objects: 100% (4/4), done.
    remote: Total 400 (delta 0), reused 0 (delta 0), pack-reused 396
    Receiving objects: 100% (400/400), 1.18 MiB | 2.07 MiB/s, done.
    Resolving deltas: 100% (232/232), done.
    Checking connectivity... done.
    tobe ~/src $ cd qnamakerbot/
    tobe ~/src/qnamakerbot $ tree
    .
    ├── README.md
    ├── app.js
    ├── assets
    │   └── qna.png
    ├── html
    │   └── index.html
    ├── localConfig.json
    └── package.json

    2 directories, 6 files

A quick run through the project structure:

README.md – The GitHub readme.

app.js – The entire bot app in a single file. All the code lives here.

assets/qna.png – Image referenced from the README file.

html/index.html – The file we’re going to serve from the app that contains the webchat control through which we can talk to the Bot.

localConfig.json – Contains all our configuration secrets. We’ll need to fill this in later.

package.json – Details about this package and its dependencies.

Before we go any further, let’s remember to install the required node packages:

    tobe ~/src/qnamakerbot $ npm install

Now let’s take a look at those important configuration files:

    tobe ~/src/qnamakerbot $ cat localConfig.json
    {
        "qnaUri" : "http://qnaservice.cloudapp.net/KBService.svc/GetAnswer?kbId=a3dae93561d24529b11a4c0eb8acd444&question=",
        "BOT_APP_ID" : "<YOUR_APP_ID_HERE>",
        "BOT_APP_PASSWORD" : "<YOUR_APP_PASSWORD_HERE>"
    }
    tobe ~/src/qnamakerbot $

localConfig.json is where we’re keeping all our secrets and configurables. You’ll need to edit this to fill out the details that will be specific to your Bot. It’s probably not a good idea to check this file into any publicly visible source control. Doing so could allow a malicious user to impersonate your app.

Here’s what those config settings do:

qnaUri – The QnA service endpoint. This one is preconfigured to point at an endpoint that serves the FAQ content for the Microsoft Bot Framework SDK. You’ll be replacing this with your own in the next section.

BOT_APP_ID – The Microsoft Application ID. Replace with the ID you were assigned when you registered your Bot.

BOT_APP_PASSWORD – The application password. Replace with the one you got at the same time as the application ID.
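
It’s easy to start the app with a placeholder still in place, so a quick sanity check can save some head-scratching. The following is only a sketch: the helper name `unconfiguredKeys` is hypothetical, and it works on a plain object (for example the parsed contents of localConfig.json) rather than on the nconf store the app actually uses.

```javascript
const REQUIRED_KEYS = ['qnaUri', 'BOT_APP_ID', 'BOT_APP_PASSWORD'];

// Hypothetical helper: list the keys that are missing, empty, or still
// hold a "<YOUR_..._HERE>" placeholder.
function unconfiguredKeys(settings) {
    return REQUIRED_KEYS.filter((key) => {
        const value = settings[key];
        return typeof value !== 'string' ||
            value.trim() === '' ||
            /^<YOUR_.*_HERE>$/.test(value.trim());
    });
}

// Illustrative values only.
const sample = {
    qnaUri: 'http://example.invalid/GetAnswer?kbId=demo&question=',
    BOT_APP_ID: '<YOUR_APP_ID_HERE>',
    BOT_APP_PASSWORD: ''
};

console.log(unconfiguredKeys(sample)); // [ 'BOT_APP_ID', 'BOT_APP_PASSWORD' ]
```

If the returned list is non-empty you might choose to log it and exit before creating the chat connector.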

There’s just one more secret to fill in, an attribute for the webchat control:

    tobe ~/src/qnamakerbot $ cat html/index.html
    <html>
    <head/>
    <body>
    <iframe src="https://webchat.botframework.com/embed/qnabot?s=<YOUR_APP_SECRET_HERE>" style="height: 502px; max-height: 502px;"></iframe>
    </body>
    </html>

YOUR_APP_SECRET_HERE – You’ll find this on your Bot configuration page (the same place you registered your Bot). Click ‘Edit’ next to Web Chat in the Channels section and you’ll be shown the secret to copy and paste into the IFRAME src attribute.

Running the Bot

With all that out of the way we can now run our Bot for the first time:

    tobe ~/src/qnamakerbot $ node app.js
    restify listening to http://[::]:3978

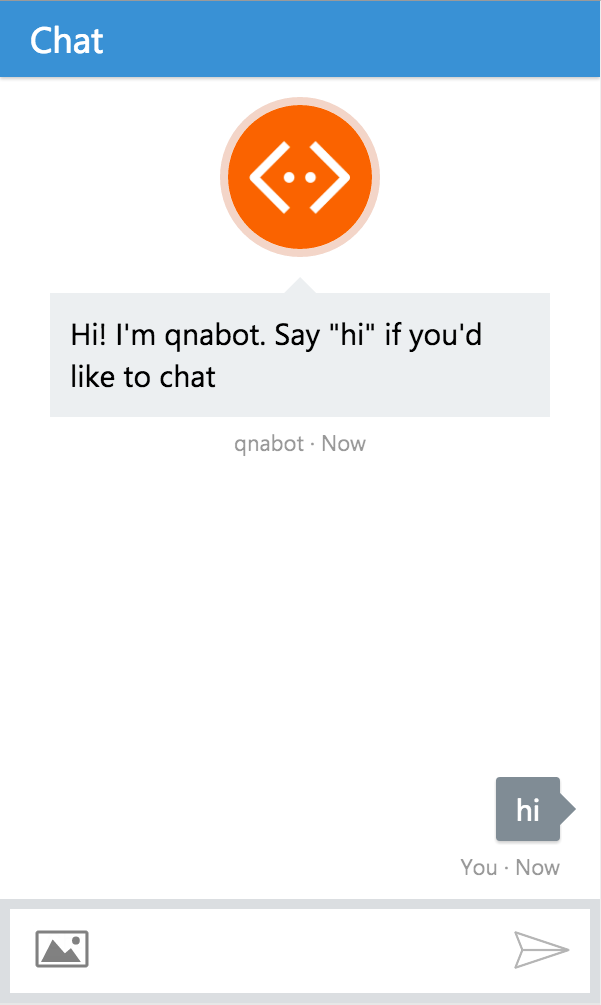

Head over to http://localhost:3978 and you should be looking at something like this:

Awesome! We now have a fully functioning Bot. Anything you type in the web chat control is sent, with some help from ngrok, via the Bot Framework to the app running on your desktop. From there a call is made to an existing QnA service that has been preconfigured to answer questions from the Microsoft Bot SDK FAQ. Go ahead and ask it a few questions to see everything working.

Now let’s go right ahead and set up a new QnA Service that can answer questions from whatever FAQ-style content we have to hand.

Configure the QnA Service

Head on over to the QnA Maker site (MSA login required). Choose Create new QnA service and give your bot a name.

Now we get to actually provide our QnA content. We have three options here which we can mix and match to create our knowledge base.

Web Crawling

The simplest is just to point QnA at an existing webpage which already contains our FAQ. QnA will crawl the site structure looking for text patterns that look like question/answer pairs to build its knowledge base.

The pre-configured QnA Service we’ve been using so far was trained on the Microsoft Bot SDK FAQ. All I had to do was paste this uri: https://docs.botframework.com/en-us/faq/ into the configuration to have it learn the FAQ contents.

Editorial Style

We can also add colon-separated QnA pairs directly into the text box in the editorial section. This is useful for adding bits of custom content that your existing FAQ base may not already contain.

Structured Text

You’ll get the most control over exactly what content QnA will process by using the final option, which is to bulk upload the question/answer pairs in a text document structured so that the ingest process can easily separate the two. I had best success with the tab-separated option. NOTE: The suffix of the file is significant here. If you want to use a tab-separated document it must have the .tsv suffix to be recognised as such. It’s also important that there are no duplicate questions in the document, as this will cause the parser to exit early and you’ll end up with fewer questions than you thought you had.
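
If your question/answer pairs already live in some structured form, generating the .tsv file and weeding out duplicate questions can be scripted. The sketch below makes several assumptions that are not confirmed here: one pair per line, question first, answer second, no header row, and duplicates detected case-insensitively. The helper name `toTsv` is hypothetical.

```javascript
// Hypothetical helper: build tab-separated "question<TAB>answer" lines,
// keeping only the first answer for each question. Tabs and newlines
// inside a field are collapsed to single spaces so they cannot break
// the column layout.
function toTsv(pairs) {
    const seen = new Set();
    const lines = [];
    const clean = (text) => String(text).replace(/[\t\r\n]+/g, ' ').trim();

    for (const pair of pairs) {
        const question = clean(pair.question);
        const key = question.toLowerCase();
        if (question === '' || seen.has(key)) {
            continue; // skip empty and duplicate questions
        }
        seen.add(key);
        lines.push(question + '\t' + clean(pair.answer));
    }
    return lines.map((line) => line + '\n').join('');
}

// Illustrative content only.
const faq = [
    { question: 'What is a bot?', answer: 'A program you can chat with.' },
    { question: 'what is a bot? ', answer: 'Duplicate, dropped.' },
    { question: 'Is it free?', answer: 'Yes,\tmostly.' }
];

process.stdout.write(toTsv(faq));
```

The result could then be saved with something like `fs.writeFileSync('faq.tsv', toTsv(faq))`, keeping the .tsv suffix so the upload is recognised as tab-separated.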

Extraction

Once you’ve shown QnA where to get hold of your content choose ‘Extract’ to have it go and build its knowledge base. This should only take a short amount of time.

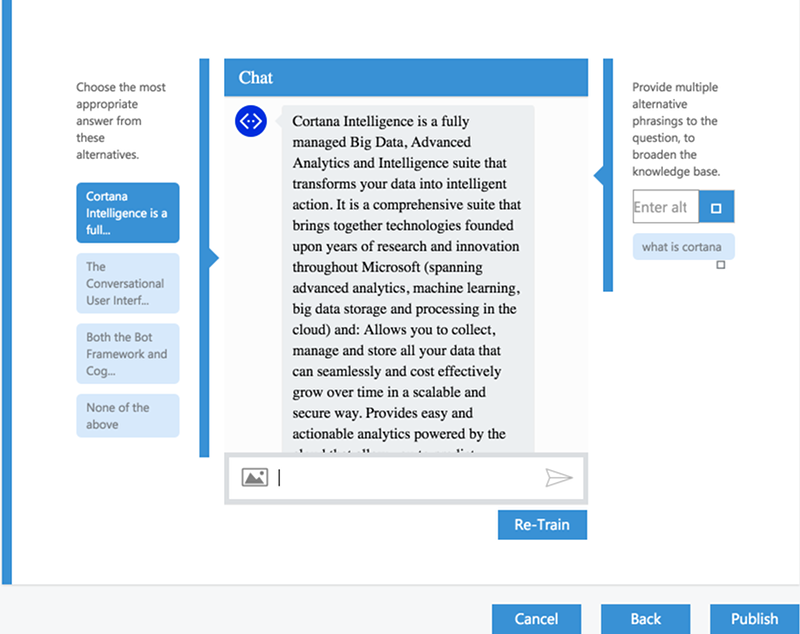

Once that’s done you’ll be able to play around with the knowledge base directly. Type a few questions into the web chat. If you see a few answers that don’t match your questions you now have the opportunity to tweak those.

Training the Knowledge Base

If some of the answers you’re getting don’t match the questions you asked, QnA Maker gives you a super simple method to refine the knowledge base.

Every time QnA Maker returns you an answer in training mode it also shows you a selection of the other high-scoring answers for that question. If you think one of those fits the question better, just click on the bubble on the left-hand side to let QnA Maker know you think that was a better response. If none seem to match, choose ‘None of the above’ to tell QnA to look for other answers next time.

Similarly if you think there are different ways of asking a question that QnA is not picking up on, you have the option to provide those in the edit box on the right hand side.

Most of the time QnA Maker does a really good job of figuring this stuff out for itself but it’s great to have the option to fine-tune the knowledge base should you need it.

Just click ‘Re-Train’ any time you want to try the new model and you should now find your edits taken into account when you next ask those problematic questions.

Publish the QnA Service

As easy as clicking the Publish button. You’ll be taken to a page showing you the address of your service endpoint. Copy this and use it as the value for the ‘qnaUri’ variable in the localConfig.json at the project root.

Restart the app and you should now be able to use the webchat control to query your FAQ content using natural language.

That’s it!

Well.. not quite. Of course once you’re ready to unleash your FAQ answering Bot on the world you’ll need to deploy your Bot app in the cloud somewhere and update the Bot configuration to point at that new URI. A full guide can be found here.

Summing Up

QnA Maker provides us with a very low friction path to servicing FAQ-style content through a conversational interface. All the complex natural language processing tasks usually associated with projects of this kind have been taken care of for us. QnA Maker is an excellent way to dip our toe into the world of conversational interfaces and get real results quickly.

And remember.. the code is freely available under an Open Source License from GitHub:

https://github.com/CatalystCode/qnamakerbot/tree/minimal

Suggestions for Further Development

What’s been presented here is close to the minimal possible solution for clarity’s sake. It’s easy to add whatever extra features we can imagine. Here are some ideas:

Consider using metadata to control response rendering

One technique that I’ve seen used to good effect is to add metadata to the answer text which is stripped out and used to influence how the response is rendered before it’s returned to the user. The Botbuilder SDK allows a variety of rich media responses to be returned to the user and does a good job of translating these into forms appropriate for the channels. Where appropriate you might choose to return a HeroCard or perhaps an embedded video instead of a block of text.
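
As a rough sketch of that idea, suppose answers in the knowledge base may begin with a metadata prefix of the form `[[key=value;key=value]]`. This convention is entirely made up for illustration (QnA Maker does not define it), as is the helper name `splitMetadata`.

```javascript
// Hypothetical convention: an answer may start with
// "[[key=value;key=value]]" followed by the text to show the user.
function splitMetadata(answer) {
    const match = /^\[\[([^\]]*)\]\]\s*/.exec(answer);
    if (!match) {
        return { metadata: {}, text: answer }; // no prefix, plain text
    }
    const metadata = {};
    for (const part of match[1].split(';')) {
        const idx = part.indexOf('=');
        if (idx > 0) {
            metadata[part.slice(0, idx).trim()] = part.slice(idx + 1).trim();
        }
    }
    // Strip the prefix (and any whitespace after it) from the answer
    return { metadata: metadata, text: answer.slice(match[0].length) };
}

const parsed = splitMetadata('[[type=video;url=https://example.invalid/a.mp4]] Watch this.');
console.log(parsed.metadata.type); // video
console.log(parsed.text); // Watch this.
```

The bot could then inspect `metadata.type` to decide whether to send plain text or build a richer response. Note that this simple format cannot carry values containing `;` or `]`.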

Add telemetry to better understand how well the FAQ is performing

Data’s always useful for understanding how systems are being used. The QnA Maker returns us a confidence score along with the answer text. In situations where that confidence falls below a certain threshold we could ask the user if the previous answer was helpful. The responses to that question could help identify areas where the content might need extra attention to better serve the customer’s needs.
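
A minimal sketch of that threshold check might look like the following. The function names and the record shape are hypothetical, and because the scale of the score isn’t stated here, the threshold is left as a parameter that must be chosen on the same scale as the scores your service returns.

```javascript
// Hypothetical policy: ask for feedback when the score is below the
// threshold. A score that is not a finite number is treated as low
// confidence.
function shouldAskForFeedback(score, threshold) {
    const value = Number(score);
    if (!Number.isFinite(value)) {
        return true;
    }
    return value < threshold;
}

// Hypothetical record shape for logging; `result` is the parsed
// { answer, score } object returned by the QnA service.
function telemetryRecord(question, result, threshold) {
    return {
        question: question,
        answer: result.answer,
        score: result.score,
        lowConfidence: shouldAskForFeedback(result.score, threshold)
    };
}

// Illustrative values only.
const record = telemetryRecord('Is it free?', { answer: 'Yes.', score: 30 }, 50);
console.log(record.lowConfidence); // true
```

Records flagged as low confidence are the ones worth reviewing first, since they tend to point at gaps in the FAQ content or at a knowledge base that needs more training.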
