If you’re reading this, chances are high that you’re reading in at least one of the following ways:

- Visually, with the vision of a sighted individual

- Auditorily, with assistive technologies like screen readers

- Tactilely, with assistive technologies like refreshable braille displays

Assistive technologies are tools that reduce the barriers individuals may otherwise face when using technology. As such, they enable individuals experiencing disabilities to accomplish tasks, and different assistive technologies serve the needs of individuals in different situations. Assistive technologies are wide-ranging. Among others, they include screen readers and screen magnification software (especially helpful for individuals with visual impairments), as well as alternative keyboards and motion or eye tracking software (especially helpful for individuals with motor impairments).

In this blog post, I’ll be focusing primarily on screen readers, which individuals with visual impairments use to access information that would otherwise be inaccessible to them. However, as app developers striving to build the most accessible apps, it is important to be aware of as many different assistive technologies as you can.

Studying Screen Readers

According to the World Health Organization, over 2.2 billion people are living with a visual impairment right now. That is more than 27% of the global population, many of whom can benefit from screen readers, among other assistive technologies, when navigating the digital world.

As the world of apps continues to grow, so too does the number of screen reader users who use apps. It has never been more important to understand and support the screen reader experience on both web and mobile. Check out the Screen Reader User Survey #8 results from WebAIM (Web Accessibility in Mind) to learn more.

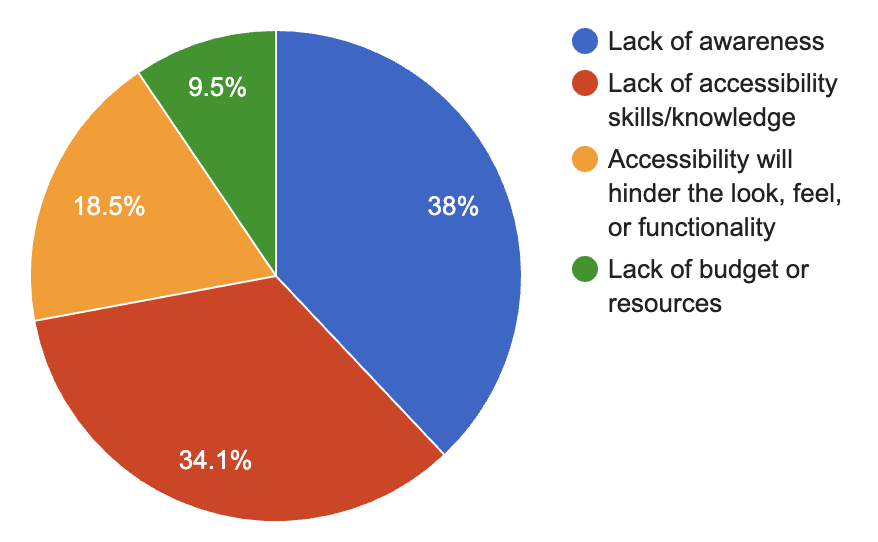

When WebAIM asked screen reader users, “Which of the following do you think is the primary reason that many developers do not create accessible web sites?”, an overwhelming majority of 72.1% replied that they believed developers simply lacked awareness or lacked the accessibility skills and knowledge.

In the last blog post, I encouraged you to familiarize yourself with assistive technologies like screen readers:

Next time you run your app, activate the screen reader for the respective platform — for example, VoiceOver on iOS, TalkBack on Android, Narrator on Windows — and connect a Bluetooth keyboard to your device. Building empathy by understanding the assistive technologies your users use is key to developing accessible apps.

If you haven’t yet tried using a screen reader, now is the time to do so!

Exercising Empathy

If you are reading this solely visually, as a sighted person, you are likely less familiar, if at all, with how screen readers work. If this sounds like you, here’s a quick exercise to try out:

- Activate a screen reader on your device.

    - On iOS: Go to Settings > Accessibility > VoiceOver. Turn VoiceOver on.

    - On Android: Go to Settings > Accessibility > TalkBack. Turn TalkBack on.

    - On Windows: Go to Settings > Ease of Access > Narrator. Turn Narrator on.

    - On macOS: Go to System Preferences > Accessibility > VoiceOver. Turn VoiceOver on.

- Block the display.

    - Turn off the display. Some platforms offer a supported way to turn off the display, primarily for screen reader users who want privacy and who cannot see or do not need to see the screen. If your platform offers such a feature, like Screen Curtain on iOS, it is a handy one to check out. If this is your first time using it, just be sure you know how to turn it back off!

    - Decrease brightness to zero. A quick alternative to turning off the display is setting the screen’s brightness to zero. I’ve found this method especially helpful on desktop platforms.

    - Cover your screen in some other way. If your device doesn’t appear to offer a straightforward way of hiding the screen, get creative! Cut out a dark piece of paper and tape it over your screen.

- Navigate your device.

    - Navigate by tapping and swiping across the screen with one finger if you’re on a touch device, or by using keyboard controls if your device is connected to a keyboard. Work through screen reader tutorials to gain better control of screen reader navigation and deepen your understanding of the screen reader experience.

- Empathize with how different the experience is from what you’re familiar with.

This exercise can help sighted users begin to understand the screen reader experience, and can help sighted app developers understand the importance of supporting that experience in their apps. However, it is important to remember that this is just one small exercise, and it cannot capture the broad range of screen reader experiences.

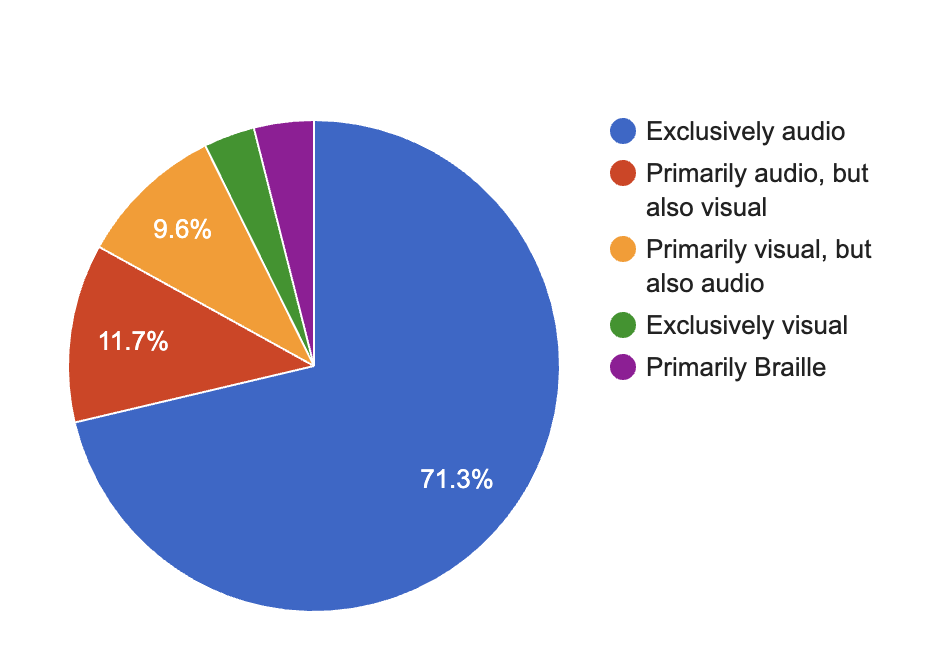

For example, although blocking the screen may be a helpful exercise, screen reader users have varying levels of sight. As captured in the pie chart above (also from WebAIM), 71.3% of screen reader users rely exclusively on screen reader audio, 24.8% also or exclusively rely on visual content, and 3.9% rely primarily on Braille output.

Note: We’ve listed some widely used screen readers that are offered by native platforms and that our team is most familiar with. However, this list is in no way exhaustive or indicative of quality or usage. See the WebAIM survey results for a more representative list.

Supporting Screen Readers

As app developers, one of the most important ways we can make our apps accessible is by supporting the screen reader experience.

Making your apps screen reader accessible

If you activate a screen reader and open your app, you may be surprised to notice that the screen reader can already read and navigate through parts, if not most, of your app before you begin to support screen readers intentionally. This is largely because the native platforms and frameworks underlying your apps often do their best to make your app as screen reader accessible as possible automatically! For example, if your app uses Xamarin.Forms, screen readers will read the text of text-based controls by default.

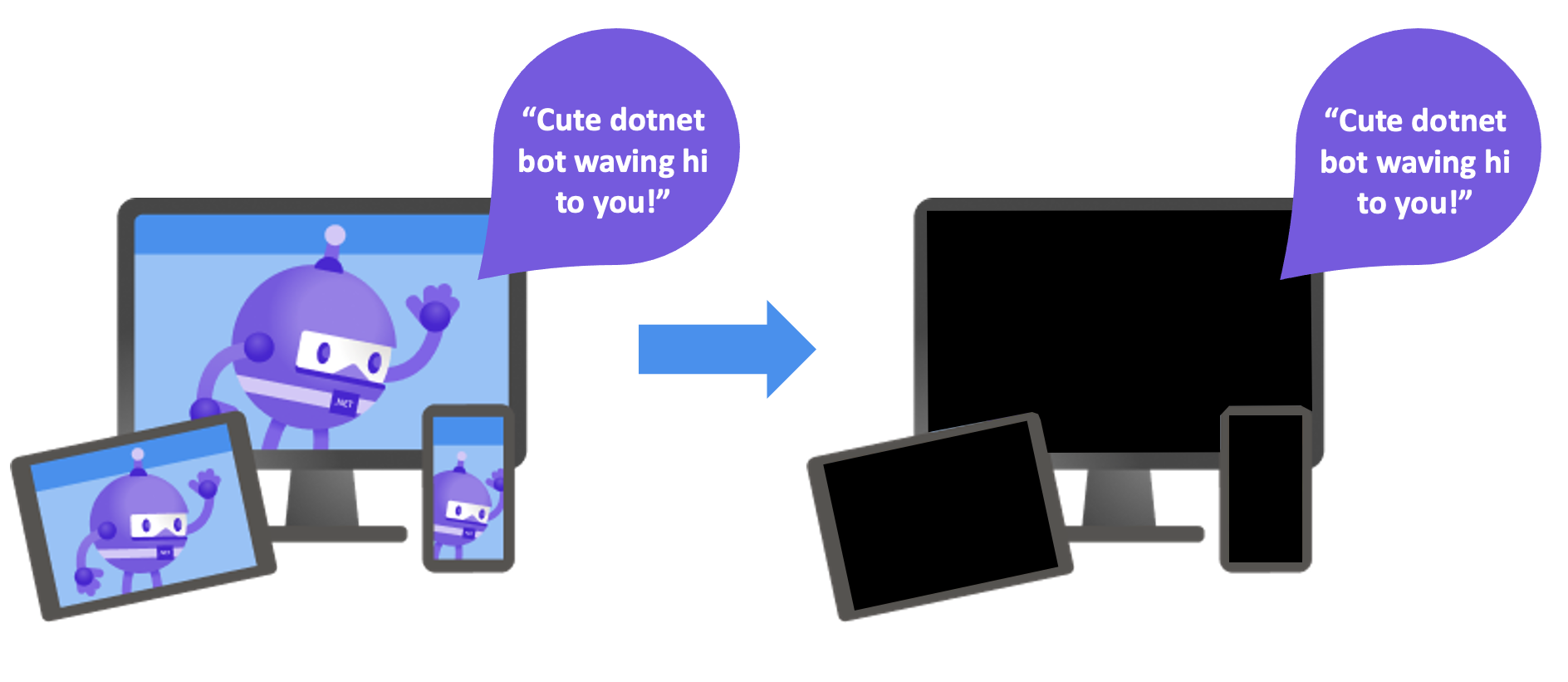

Despite the magic of the underlying platforms and frameworks, however, an app can never be fully screen reader accessible unless the app developer is intentional about making it so. A very common case is the need to make images accessible by providing alternative text. If developers don’t provide alternative text for images, screen readers usually won’t read anything meaningful for them, as would be the case with this Xamarin.Forms image:

```xml
<Image Source="dotnet_bot.png" />
```

Fortunately, your app’s underlying platforms and frameworks often provide a set of accessibility APIs that you can leverage to make this image screen reader accessible. At the most fundamental level, these APIs allow developers to specify which parts of their app screen readers should reach and what the screen readers should read aloud. In Xamarin.Forms, this is made possible via automation properties:

```xml
<Image
    Source="dotnet_bot.png"
    AutomationProperties.Name="Cute dotnet bot waving hi to you!" />
```

Now this image is screen reader accessible, and screen readers will read it aloud as “Cute dotnet bot waving hi to you!”. To learn more about Xamarin.Forms automation properties, be sure to check out the documentation, the new learning module on creating accessible Xamarin.Forms apps, and my talk and demo on this topic.
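Name is not the only automation property worth knowing. The fragment below is a minimal sketch of two others in Xamarin.Forms: `AutomationProperties.IsInAccessibleTree="false"` hides a purely decorative image so screen readers skip it, and `AutomationProperties.HelpText` adds extra context to a control beyond its name. The `divider.png` image and the button text are hypothetical placeholders, and exactly how the name and help text are announced varies by platform and screen reader.

```xml
<!-- Sketch only: divider.png and the button text are hypothetical placeholders -->
<StackLayout xmlns="http://xamarin.com/schemas/2014/forms">

    <!-- Meaningful image: Name is what the screen reader reads aloud -->
    <Image Source="dotnet_bot.png"
           AutomationProperties.Name="Cute dotnet bot waving hi to you!" />

    <!-- Decorative image: removed from the accessibility tree, so screen readers skip it -->
    <Image Source="divider.png"
           AutomationProperties.IsInAccessibleTree="false" />

    <!-- Interactive control: the visible text is read, and HelpText adds context -->
    <Button Text="Send"
            AutomationProperties.HelpText="Sends your message to the group" />
</StackLayout>
```

Hiding decorative elements is as much a part of screen reader support as labeling meaningful ones, since every element left in the accessibility tree is one more stop for a screen reader user swiping through the page.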

Testing your apps for screen reader accessibility

The best and most foolproof way of testing for screen reader accessibility will always be to put your app in front of people who use screen readers regularly. Whenever possible, the best way to create inclusive products is to have an inclusive development team that reflects your diverse audience.

But as someone who might not use screen readers regularly, how do you ensure that your app is screen reader accessible? In addition to working with screen reader users directly, app developers can also leverage a myriad of tools to assist in the process. Some that our Xamarin.Forms developers use include:

- Accessibility Insights for testing accessibility in Windows and Android apps

- Accessibility Scanner for testing accessibility in Android apps

- Accessibility Inspector for testing accessibility in iOS and macOS apps

- Android Studio Layout Inspector for debugging Android apps

- Xcode View Debugger for debugging iOS and macOS apps

However, none of these tools can perfectly emulate the screen reader user experience, so manual testing, via exercises like the one described earlier in this post, will always be an essential part of testing and troubleshooting your apps for accessibility.

Looking Forward to .NET MAUI

In .NET MAUI, the next evolution of Xamarin.Forms, we are working on many exciting things, including accessibility improvements. We are actively evolving our accessibility APIs, listening to your feedback, and looking forward to offering you even more soon.

In .NET MAUI Preview 3, we introduced a new set of Semantic Properties to help developers improve the screen reader accessibility of their apps, which I talk about more on The Xamarin Show. If you haven’t already, check out these properties in action in the .NET 6 mobile samples, stay tuned for the properties we introduce next, and let us know what you think!
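For a rough idea of what these look like in markup, here is a minimal sketch using the Semantic Properties as they appear in later .NET MAUI releases; names and behavior may have differed in Preview 3. `SemanticProperties.Description` sets what the screen reader announces for an element, `SemanticProperties.Hint` adds context about what a control does, and `SemanticProperties.HeadingLevel` marks text as a heading that screen reader users can jump between. The label, image, and button contents are illustrative placeholders.

```xml
<!-- Sketch only: text and image contents are illustrative placeholders -->
<VerticalStackLayout xmlns="http://schemas.microsoft.com/dotnet/2021/maui">

    <!-- HeadingLevel exposes this label as a heading for heading navigation -->
    <Label Text="Welcome to .NET MAUI"
           SemanticProperties.HeadingLevel="Level1" />

    <!-- Description is what the screen reader announces for the image -->
    <Image Source="dotnet_bot.png"
           SemanticProperties.Description="Cute dotnet bot waving hi to you!" />

    <!-- Hint gives extra context about what the button does -->
    <Button Text="Click me"
            SemanticProperties.Hint="Counts the number of times you click" />
</VerticalStackLayout>
```

Compared with the Xamarin.Forms automation properties, the intent is similar (a description plus an optional hint), while the heading level is new. Platform support for distinct heading levels varies, so it is worth checking each target platform with its own screen reader.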

If you’re excited to start exploring the new APIs in your Xamarin.Forms apps today, be sure to check out the latest preview of the Xamarin Community Toolkit, where we’ve implemented some of the new APIs that will be in .NET MAUI, as well as this exploratory repository, where we’re continually exploring new accessibility APIs that you can also experiment with. And if you missed the most recent Let’s Learn .NET session focusing on accessibility, you can watch it on demand! We hope to give you the tools to create the most inclusive apps possible and would love to hear more from you on how we can do so.

Stay tuned for the next blog posts in this accessibility series, and in the meantime, please reach out to join our monthly accessibility calls, and share all your thoughts and ideas with us in the comments, the .NET MAUI repo, Discord, and Twitter (@therachelkang)!
