Machine learning can be used to add smart capabilities to mobile applications and enhance the user experience. There are situations where inferencing on-device is required or preferable over cloud-based solutions. Key drivers include:

- Availability: works when the device is offline

- Privacy: data can remain on the device

- Performance: on-device inferencing is often faster than sending data to the cloud for processing

- Cost efficiencies: on-device inferencing can be free and more efficient by reducing data transfers between device and cloud

Android and iOS provide built-in capabilities for on-device inferencing with technologies such as TensorFlow Lite and Core ML.

ONNX Runtime has recently added support for Xamarin and can be integrated into your mobile application to execute cross-platform on-device inferencing of ONNX (Open Neural Network Exchange) models. It already powers machine learning models in key Microsoft products and services across Office, Azure, Bing, as well as other community projects. You can create your own ONNX models, using services such as Azure Custom Vision, or convert existing models to ONNX format.

This post walks through an example demonstrating the high-level steps for leveraging ONNX Runtime in a Xamarin.Forms app for on-device inferencing. An existing open-source image classification model (MobileNet) from the ONNX Model Zoo has been used for this example.

Getting Started

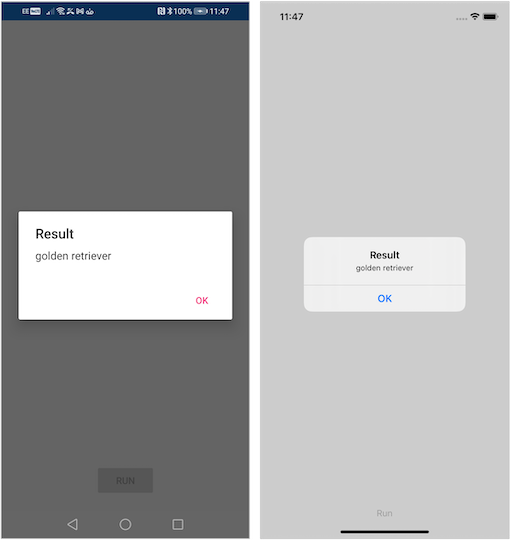

Our sample Xamarin.Forms app classifies the primary object in the provided image, a golden retriever in this case, and displays the result with the highest score.

The following changes were made to the Blank App template which includes a common .NET Standard project along with Android and iOS targets.

- Updating NuGet packages for the entire solution

- Adding the OnnxRuntime NuGet package to each project

- Adding the sample photo, classification labels, and MobileNet model to the common project as embedded resources

- Setting the C# language version for the common project to Latest Major

- Updating info.plist, from the iOS project, to specify a minimum system version of 11.0

- Adding a new class called MobileNetImageClassifier to handle the inferencing

- Updating the templated MainPage XAML to include a Run Button

- Handling the Button Clicked event in the MainPage code-behind to run the inferencing

Inferencing With ONNX Runtime

Two classes from the common project we’ll be focused on are:

MobileNetImageClassifier

MobileNetImageClassifier encapsulates the use of the model via ONNX Runtime as per the model documentation. You can see visualizations of the model’s network architecture, including the expected names, types, and shapes (dimensions) for its inputs and outputs using Netron.

This class exposes two public methods GetSampleImageAsync and GetClassificationAsync. The former loads a sample image for convenience and the latter performs the inferencing on the supplied image. Here’s a breakdown of the key steps.

Initialization

Initialization involves loading those embedded resource files representing the model, labels, and sample image. The asynchronous initialization pattern is used to simplify its use downstream while preventing use of those resources before initialization work has completed. Our constructor starts the asynchronous initialization by calling InitAsync. The initialization Task is stored so several callers can await the completion of the same operation. In this case, the GetSampleImageAsync and GetClassificationAsync methods.

const int DimBatchSize = 1;

const int DimNumberOfChannels = 3;

const int ImageSizeX = 224;

const int ImageSizeY = 224;

const string ModelInputName = "input";

const string ModelOutputName = "output";

byte[] _model;

byte[] _sampleImage;

List<string> _labels;

InferenceSession _session;

Task _initTask;

...

public MobileNetImageClassifier()

{

_ = InitAsync();

}

Task InitAsync()

{

if (_initTask == null || _initTask.IsFaulted)

_initTask = InitTask();

return _initTask;

}

async Task InitTask()

{

var assembly = GetType().Assembly;

// Get labels

using var labelsStream = assembly.GetManifestResourceStream($"{assembly.GetName().Name}.imagenet_classes.txt");

using var reader = new StreamReader(labelsStream);

string text = await reader.ReadToEndAsync();

_labels = text.Split(new string[] { Environment.NewLine }, StringSplitOptions.RemoveEmptyEntries).ToList();

// Get model and create session

using var modelStream = assembly.GetManifestResourceStream($"{assembly.GetName().Name}.mobilenetv2-7.onnx");

using var modelMemoryStream = new MemoryStream();

modelStream.CopyTo(modelMemoryStream);

_model = modelMemoryStream.ToArray();

_session = new InferenceSession(_model);

// Get sample image

using var sampleImageStream = assembly.GetManifestResourceStream($"{assembly.GetName().Name}.dog.jpg");

using var sampleImageMemoryStream = new MemoryStream();

sampleImageStream.CopyTo(sampleImageMemoryStream);

_sampleImage = sampleImageMemoryStream.ToArray();

}Preprocessing

Raw images must be transformed according to the requirements of the model so it matches how the model was trained. Image data is then stored in a contiguous sequential block of memory, represented by a Tensor object, as input for inferencing.

Our first step in this process is to resize the original image if necessary so height and width is at least 224. In this case, images are first resized so the shortest edge is 224 then center-cropped so the longest edge is also 224. For the purposes of this example, SkiaSharp has been used to handle the requisite image processing.

using var sourceBitmap = SKBitmap.Decode(image);

var pixels = sourceBitmap.Bytes;

if (sourceBitmap.Width != ImageSizeX || sourceBitmap.Height != ImageSizeY)

{

float ratio = (float)Math.Min(ImageSizeX, ImageSizeY) / Math.Min(sourceBitmap.Width, sourceBitmap.Height);

using SKBitmap scaledBitmap = sourceBitmap.Resize(new SKImageInfo(

(int)(ratio * sourceBitmap.Width),

(int)(ratio * sourceBitmap.Height)),

SKFilterQuality.Medium);

var horizontalCrop = scaledBitmap.Width - ImageSizeX;

var verticalCrop = scaledBitmap.Height - ImageSizeY;

var leftOffset = horizontalCrop == 0 ? 0 : horizontalCrop / 2;

var topOffset = verticalCrop == 0 ? 0 : verticalCrop / 2;

var cropRect = SKRectI.Create(

new SKPointI(leftOffset, topOffset),

new SKSizeI(ImageSizeX, ImageSizeY));

using SKImage currentImage = SKImage.FromBitmap(scaledBitmap);

using SKImage croppedImage = currentImage.Subset(cropRect);

using SKBitmap croppedBitmap = SKBitmap.FromImage(croppedImage);

pixels = croppedBitmap.Bytes;

}The second step is to normalize the resulting image pixels and store them in a flat array that can be used to create the Tensor object. In this case, the model expects the R, G, and B values to be in the range of [0, 1] normalized using mean = [0.485, 0.456, 0.406] and std = [0.229, 0.224, 0.225]. The loop below iterates over the image pixels one row at a time, applies the requisite normalization to each value, then stores each in the channelData array. Our channelData array stores the normalized R,G, and B values sequentially; first all the R values, then all the G, then all the B (instead of the original sequence i.e. RGB, RGB, etc.

var bytesPerPixel = sourceBitmap.BytesPerPixel;

var rowLength = ImageSizeX * bytesPerPixel;

var channelLength = ImageSizeX * ImageSizeY;

var channelData = new float[channelLength * 3];

var channelDataIndex = 0;

for (int y = 0; y < ImageSizeY; y++)

{

var rowOffset = y * rowLength;

for (int x = 0, columnOffset = 0; x < ImageSizeX; x++, columnOffset += bytesPerPixel)

{

var pixelOffset = rowOffset + columnOffset;

var pixelR = pixels[pixelOffset];

var pixelG = pixels[pixelOffset + 1];

var pixelB = pixels[pixelOffset + 2];

var rChannelIndex = channelDataIndex;

var gChannelIndex = channelDataIndex + channelLength;

var bChannelIndex = channelDataIndex + (channelLength * 2);

channelData[rChannelIndex] = (pixelR / 255f - 0.485f) / 0.229f;

channelData[gChannelIndex] = (pixelG / 255f - 0.456f) / 0.224f;

channelData[bChannelIndex] = (pixelB / 255f - 0.406f) / 0.225f;

channelDataIndex++;

}

}This channelData array is then used to create the requisite Tensor object as input to the InferenceSession Run method. The dimensions (1, 3, 224, 224) represent the shape of the Tensor required by the model. In this case, mini-batches of 3-channel RGB images that are expected to have a height and width of 224.

var input = new DenseTensor<float>(channelData, new[]

{

DimBatchSize,

DimNumberOfChannels,

ImageSizeX,

ImageSizeY

});Inferencing

An InferenceSession is the runtime representation of an ONNX model. It’s used to run the model with a given input returning the computed output values. Both the input and output values are collections of NamedOnnxValue objects representing name-value pairs of string names and Tensor objects.

using var results = _session.Run(new List<NamedOnnxValue>

{

NamedOnnxValue.CreateFromTensor(ModelInputName, input)

});Postprocessing

This model outputs a score for each classification. Our code resolves the Tensor by name and gets the highest score value for simplicity in this example. The corresponding label item is then used as the return value for the GetClassificationAsync method. Additional work would be required to calculate the softmax probability if you wanted to include an indication of confidence alongside the label.

var output = results.FirstOrDefault(i => i.Name == ModelOutputName);

var scores = output.AsTensor<float>().ToList();

var highestScore = scores.Max();

var highestScoreIndex = scores.IndexOf(highestScore);

var label = _labels.ElementAt(highestScoreIndex);MainPage

Our MainPage XAML features a single Button for running the inference via the MobileNetImageClassifier. It resolves the sample image then passes it into the GetClassificationAsync method before displaying the result via an alert.

var sampleImage = await _classifier.GetSampleImageAsync();

var result = await _classifier.GetClassificationAsync(sampleImage);

await DisplayAlert("Result", result, "OK");Optimizations and Tips

This first-principles example demonstrates basic inferencing with ONNX Runtime and leverages the default options for the most part. There are several optimizations recommended by the ONNX Runtime documentation that can be particularly beneficial for mobile.

Reuse InferenceSession objects

You can accelerate inference speed by reusing the same InferenceSession across multiple inference runs to avoid unnecessary allocation/disposal overhead.

Consider whether to set values directly on an existing Tensor or create using an existing array

Several examples create the Tensor object first and then set values on it directly. This is simpler and easier to follow since it avoids the need to perform the offset calculations used in this example. However, it’s faster to prepare a primitive array first then use that to create the Tensor object. The trade-off here is between simplicity and performance.

Experiment with different EPs (Execution Providers)

ONNX Runtime executes models using the CPU EP (Execution Provider) by default. It’s possible to use the NNAPI EP (Android) or the Core ML EP (iOS) for ORT format models instead by using the appropriate SessionOptions when creating an InferenceSession. These may or may not offer better performance depending on how much of the model can be run using NNAPI / Core ML and the device capabilities. It’s worth testing with and without the platform-specific EPs then choosing what works best for your model. There are also several options per EP. See NNAPI Options and Core ML Options for more detail on how this can impact performance and accuracy.

The platform-specific EPs can be configured via the SessionOptions. For example:

Use NNAPI on Android

options.AppendExecutionProvider_Nnapi();Use Core ML on iOS

options.AppendExecutionProvider_CoreML(CoreMLFlags.COREML_FLAG_ONLY_ENABLE_DEVICE_WITH_ANE)SessionOptionsContainer can be used to simplify use of platform-specific SessionOptions from common platform-agnostic code. For example:

Register named platform-specific SessionOptions configuration

// Android

SessionOptionsContainer.Register("sample_options", (sessionOptions)

=> sessionOptions.AppendExecutionProvider_Nnapi());

// iOS

SessionOptionsContainer.Register("sample_options", (sessionOptions)

=> sessionOptions.AppendExecutionProvider_CoreML(

CoreMLFlags.COREML_FLAG_ONLY_ENABLE_DEVICE_WITH_ANE));

Use in common platform-agnostic code

// Apply named configuration to new SessionOptions

var options = SessionOptionsContainer.Create("sample_options");

...

// Alternatively, apply named configuration to existing SessionOptions

options.ApplyConfiguration("sample_options");Quantize models to reduce size and execution time

If you have access to the data that was used to train the model you can explore quantizing the model. At a high-level, quantization in ONNX Runtime involves mapping higher precision floating point values to lower precision 8-bit values. This topic is covered in detail in the ONNX performance tuning documentation, but the idea is to trade off some precision in order to reduce the size of the model and increase performance. It can be especially relevant for mobile apps where package size, battery life, and hardware constraints are key considerations. You can test this by switching out the mobilenetv2-7.onnx model, used in this example, for the mobilenetv2-7-quantized.onnx model included in the same repo. Quantization can only be performed on those models that use opset 10 and above. However, models using older opsets can be updated using the VersionConverter tool.

There are several pre-trained ready to deploy models available

There are several existing ONNX models available such as those highlighted in the ONNX Model Zoo collection. Not all of these are optimized for mobile but you’re not limited to using models already in ONNX format. You can convert existing pre-trained ready to deploy models from other popular sources and formats, such as PyTorch Hub, TensorFlow Hub, and SciKit-Learn. The following resources provide guidance on how to convert each of these respective models to ONNX format:

Your pre-processing must of course match how the model was trained and so you’ll need to find the model-specific instructions for the model you convert.

Disable Hot Reload if you hit a MissingMethodException related to ReadOnlySpan

In Visual Studio 2022, Hot Reload loads some additional dependencies including System.Memory and System.Buffers which may cause conflicts with packages such as ONNX Runtime. You can Disable Hot Reload as a workaround until the issue has been addressed.

Summary

The intent of this post was to provide a helpful on-ramp for those looking to leverage ONNX Runtime in their Xamarin.Forms apps. Be sure to checkout the official getting started and tutorial content as well as the Xamarin samples.

Thank you for providing so much detail on xamarin.forms. While working in xamarin development company and being a continuous reader on the particular, I never encounter such a great blog. You explained every point so well even an amateur like me understand easily. Hats off to you.

Hello, Thanks for an amazing article. Can you confirm what is the typical inference performance you see(ms/image sample). Have you tried comparing inference performance when using the standard OnnxRuntime vs OnnxRuntime with Cuda or DirectML. Is hardware acceleration possible to reduce the inference time?

Hi N Q,

Thanks for your question. I've not measured the performance of this example specifically. Performance will vary between models and will depend on the execution provider and the hardware being used. Cuda and DirectML execution providers aren't available for mobile. You can choose between the default CPU execution provider and NNAPI (Android) / CoreML (iOS). The ONNX Runtime mobile performance tuning documentation covers this topic in greater detail. You'll need to compare the performance of each to see what works best for your model. If you have access to the model training data you can also explore whether you...

Hi Mike,

thanks for your replies.

Tried to remake the existing code into object detection but couldn’t get anything, will there be articles or materials on object detection with onnx in xamarin? Thanks for your hard work!

Hi ilsur-dev111,

Thanks for reaching out. If you think a specific example would be helpful please do make a suggestion for additional content on the OnnxRuntime Inference Examples github repo.

Hi Mike,

thanks for your replies.

sadly i stumbled upon another issue on iOS (even without AOT):

https://github.com/microsoft/onnxruntime/issues/10048

As before, the problem is not in your onnxruntime, this time i think it might be in the xamarin-macios project.

Just to let you know.

In my eyes the .Net ecosystem is at a point, where every subproject only care for there stuff, and if they integrate well with other .net component is up to us developers to find out, pretty unnerving if you go back and forth between xamarin-macios/mono/xamarin.forms/c#-compiler/Visual-Studio/BCL/System.*-Nuget teams to get an issue solved. And this takes month if not years.

So, in the end, developers...

Being able to use onnx RT in Xamarin is very good news. Actually it is the best news I’ve read for Xamarin in 2021.

If I looked correctly (from the loaded assemblies) Emit.ILGeneration is used. Is that correct?

If that’s the case, it might be helpful to add some words about Emit and AOT for iOS. I didn’t test it yet, but I suspect it not to work out of the box, but theoretically it can be solved by adding the “—interpreter” switch to mtouch, doesn’t it?

Hi Jens,

The sample builds and runs using AOT without the need for additional mtouch arguments. We’re not doing any dynamic code generation, just loading embedded resources from the app assembly. While System.Reflection.Emit can’t be used with AOT, use of the System.Reflection API is ok including Assembly.GetManifestResourceStream.

Hi Mike,

thanks for the reply.

I finally had the time to test it on iOS (simulator 13.2 and iphone 13) and it threw what i was worrying about, a PlatformNotSupportedException.

<code>

Stack:

<code>

Simultor and XCode 13.2

I'll give it a try on a real iPhone.

App crashes on an iPhone 13 too.

<code>

It works on UWP and Android though.

The Xamarin App is an empty XF5 App as created by the VS 2022 XF project wizard.

Hi Jens,

Looks like this issue is related to use of the Microsoft.ML package rather than ONNX Runtime. Can I assume correctly that the sample code builds and runs ok for you?

Hi Jens,

Thanks for confirming the sample works for you on iOS (simulator and physical device). There’s an open github issue which discusses Microsoft.ML support specifically

this comment has been deleted.