Hello Android developers,

One of the advantages of ONNX Runtime is that it can run locally on a variety of devices, including mobile devices. Running on-device gives your users fast response times, but it also means respecting mobile device limitations such as app size and which platform performance enhancements are available.

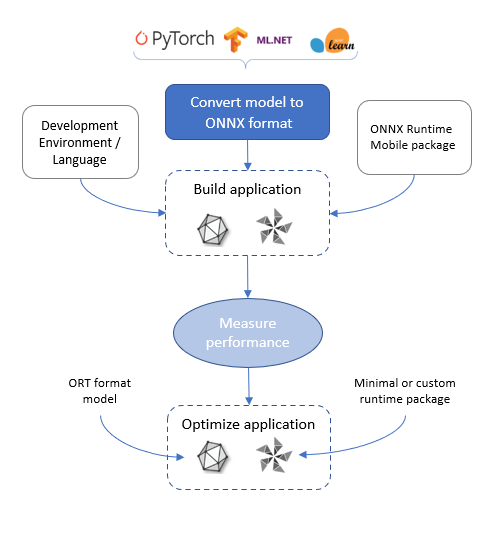

For a general overview of how to build a mobile app with ONNX machine learning, follow the high-level development flow shown in the diagram above.

Mobile vs full package reference

When you add ONNX Runtime to your app project, you can reference a pre-built package that supports a selected set of operators and opset versions, chosen to work for most popular models. This “mobile package” gives your app a smaller size footprint, but your model can use only the supported opsets and operators.

It’s also possible to use a “full package” with all ONNX opsets and operators, at the cost of a larger app binary.
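To make the trade-off concrete, here is a minimal Python sketch of the decision itself: if every operator a model uses is in the mobile package’s supported set, the smaller package is enough; otherwise you need the full package. The `MOBILE_SUPPORTED_OPS` set and the `choose_package` function are hypothetical and for illustration only. The real supported-operator list depends on the ONNX Runtime release, and opset versions matter as well as operator names.

```python
# Minimal sketch: pick the mobile or full ONNX Runtime package by checking
# whether every operator a model uses is in the mobile package's supported set.
# MOBILE_SUPPORTED_OPS is a HYPOTHETICAL list for illustration; the real list
# depends on the ONNX Runtime release.

MOBILE_SUPPORTED_OPS = {
    ("ai.onnx", "Conv"),
    ("ai.onnx", "Relu"),
    ("ai.onnx", "MaxPool"),
    ("ai.onnx", "Gemm"),
    ("ai.onnx", "Softmax"),
}


def choose_package(model_ops):
    """Return (artifact_id, unsupported_ops) for a set of (domain, op_type) pairs."""
    unsupported = sorted(set(model_ops) - MOBILE_SUPPORTED_OPS)
    if unsupported:
        # At least one operator is missing from the mobile build: use the full package.
        return "onnxruntime-android", unsupported
    # Everything is covered, so the smaller mobile package is enough.
    return "onnxruntime-mobile", []


classifier_ops = {("ai.onnx", "Conv"), ("ai.onnx", "Relu"), ("ai.onnx", "Softmax")}
print(choose_package(classifier_ops))  # ('onnxruntime-mobile', [])

detector_ops = {("ai.onnx", "Conv"), ("ai.onnx", "NonMaxSuppression")}
print(choose_package(detector_ops))  # ('onnxruntime-android', [('ai.onnx', 'NonMaxSuppression')])
```

The returned artifact IDs match the two Gradle dependencies, so the result maps directly onto which `implementation` line to keep.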

For Android developers, both packages are hosted on Maven Central and referenced in your module’s build.gradle file:

```groovy
dependencies {
    // Choose ONE of the two packages below:
    implementation 'com.microsoft.onnxruntime:onnxruntime-android:latest.release' // full package
    //implementation 'com.microsoft.onnxruntime:onnxruntime-mobile:latest.release' // mobile package
}
```

To help you decide, ONNX Runtime provides mobile model export helpers: scripts that determine whether a model can work with the mobile package, detect or update a model’s opset version, and more.
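A model declares the opset version it targets for each operator domain. In the `onnx` Python package these pairs are stored in `ModelProto.opset_import`, and the default ONNX domain appears as an empty string (or `ai.onnx`). The sketch below shows, under simplified assumptions, what detecting the opset and deciding whether to update it involves. It works on plain `(domain, version)` tuples so it runs without dependencies; `SUPPORTED_OPSETS`, `default_opset` and `opset_advice` are hypothetical names, and the supported range is made up rather than the mobile package’s real one.

```python
# Minimal sketch: detect a model's default-domain opset and decide whether it
# needs updating. Opset imports are modeled as plain (domain, version) tuples.
# SUPPORTED_OPSETS is a HYPOTHETICAL range, not the mobile package's real one.

SUPPORTED_OPSETS = range(12, 16)  # hypothetical: opsets 12 through 15


def default_opset(opset_import):
    """Return the version declared for the default ONNX domain, or None."""
    for domain, version in opset_import:
        # The default ONNX domain may be written as "" or "ai.onnx".
        if domain in ("", "ai.onnx"):
            return version
    return None


def opset_advice(opset_import):
    """Describe whether the model's default-domain opset is in the supported range."""
    version = default_opset(opset_import)
    newest = SUPPORTED_OPSETS[-1]
    if version is None:
        return "no default-domain opset declared"
    if version in SUPPORTED_OPSETS:
        return f"opset {version} is supported"
    if version < SUPPORTED_OPSETS.start:
        return f"opset {version} is too old; update to opset {newest}"
    return f"opset {version} is newer than supported (max {newest})"


print(opset_advice([("", 13)]))                              # opset 13 is supported
print(opset_advice([("ai.onnx", 9), ("com.microsoft", 1)]))  # opset 9 is too old; update to opset 15
```

To actually rewrite a model to another opset, the `onnx` package offers `onnx.version_converter.convert_version(model, target_version)`; the ONNX Runtime helper scripts may take a different approach.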

Mobile usability checker

The mobile model usability checker helps you determine whether your model will work with the “mobile package” (i.e., whether it uses only the supported opsets and versions) and whether a platform-native execution provider, such as NNAPI on Android, is likely to improve performance.
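Whether NNAPI helps depends not only on how many of a model’s nodes it can run but also on how fragmented those nodes are, since each switch between NNAPI and the CPU generally adds overhead. The following Python sketch is a simplified illustration of that idea, not the checker’s actual logic: it treats the model as a linear list of operator types in topological order, and `NNAPI_SUPPORTED_OPS`, the thresholds, and both functions are hypothetical.

```python
# Simplified sketch: estimate whether NNAPI is likely to help by measuring how
# many nodes it could run and how many separate partitions they form.
# Assumes the model is a linear list of op types in topological order; real
# graphs branch. NNAPI_SUPPORTED_OPS and the thresholds are HYPOTHETICAL.

NNAPI_SUPPORTED_OPS = {"Conv", "Relu", "Add", "AveragePool", "Reshape"}


def nnapi_partitions(op_types):
    """Group consecutive NNAPI-supported nodes into partitions."""
    partitions, current = [], []
    for op_type in op_types:
        if op_type in NNAPI_SUPPORTED_OPS:
            current.append(op_type)
        elif current:
            # An unsupported node ends the current partition (it runs on the CPU).
            partitions.append(current)
            current = []
    if current:
        partitions.append(current)
    return partitions


def nnapi_likely_helps(op_types, min_coverage=0.8, max_partitions=2):
    """True if NNAPI covers most nodes in only a few partitions."""
    parts = nnapi_partitions(op_types)
    covered = sum(len(p) for p in parts)
    coverage = covered / len(op_types) if op_types else 0.0
    # Each partition boundary is a hand-off between NNAPI and the CPU, so a few
    # large partitions are preferable to many small ones.
    return coverage >= min_coverage and len(parts) <= max_partitions


print(nnapi_likely_helps(["Conv", "Relu", "Conv", "Relu", "Add", "Softmax"]))  # True
print(nnapi_likely_helps(["Conv", "Gather", "Relu", "Gather", "Add", "Gather"]))  # False
```

In the first model NNAPI covers five of six nodes in a single partition; in the second it covers only half, split into three partitions, so the CPU alone is likely the better choice.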

The checker’s guidance helps you confirm that your model can be deployed to mobile devices and tells you which runtime package you need, how to update the ONNX opset (if required), and how to convert the model to the ORT format the runtime requires.

Examples

Examples using the ONNX Runtime mobile package on Android include the image classification and super resolution demos. The sample build instructions show where to apply some of these scripts.

Resources and feedback

More information about ONNX Runtime is available at onnxruntime.ai and on YouTube.

If you have any questions about applying machine learning, or would like to tell us about your apps, use the feedback forum or message us on Twitter @surfaceduodev.

There won’t be a livestream this week, but check out the archives on YouTube. We’ll see you online again soon!
