Summary: Use Windows PowerShell to parse a folder that contains data dumps.

Microsoft Scripting Guy, Ed Wilson, is here. This morning I got up and headed to the Farmers Market in town. I picked up a fresh melon for breakfast and some locally made cheese. Melon and cheese with a nice cup of English Breakfast tea…not a bad meal.

I am sitting on the back porch, munching melon, sipping tea, and checking my email on my Surface Pro 3. (The cheese was pretty much gone as soon as I sliced it—not saying what happened to it, just that it did not make it to the porch.)

I decided that I need to do one more thing for my Data Manipulation Week. I am going to modify the function from yesterday (see A Function to Clean Up Data Import), so that I can pass a file or a folder full of files to it, and do the data transformation.

The situation

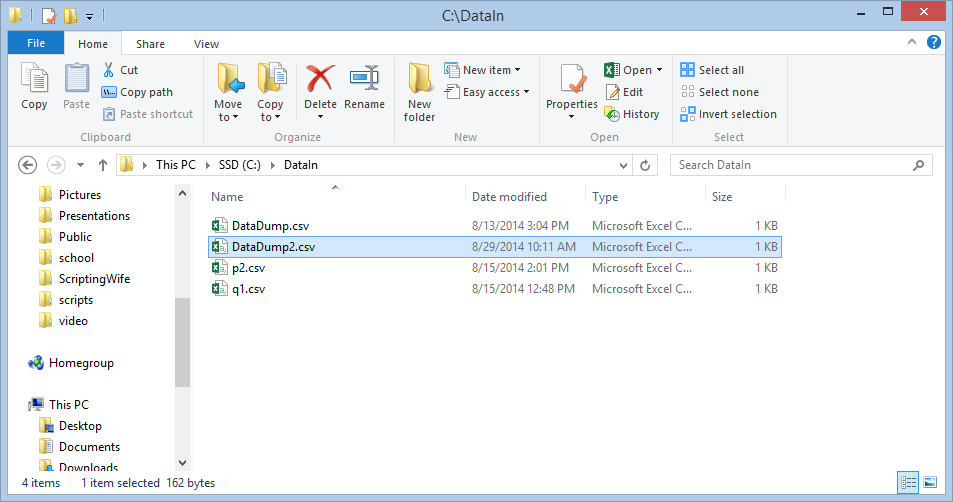

I have a folder that contains several data dumps. In fact, some of the data dumps are not even germane to my database. Luckily, the data dumps that I need all begin with the word DataDump. Here is a look at the DataIn folder:

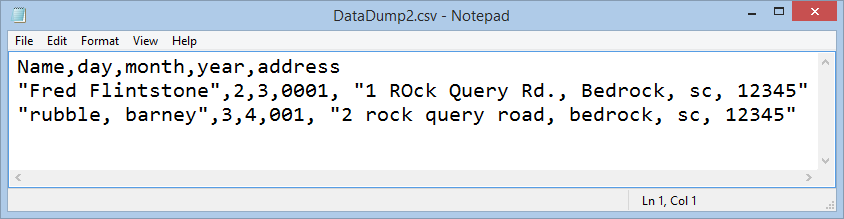

The DataDump2.csv file contains a couple of additional records. In fact, there are a couple of new errors that I did not see in my previous DataDump file (such as a capital “O” in the street name ROck Query Road). This is shown here:

Dude, how hard is that? Not very…

When I first thought about parsing a directory and finding all the DataDump files, I thought, well…like…I will need to write a new script.

But I do not have to do that. The reason is that I have a nice function that accepts a path to a file. So all I need to do is to collect my paths to files, and I am set. To do that, I use the Get-ChildItem cmdlet, and I specify the -Recurse switch so that it searches the folder and its subfolders. I can use the -Filter parameter to specify that I only want files that begin with the word DataDump.
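As a minimal sketch of that file-collection step, the following builds a throwaway folder under the temp directory instead of touching C:\DataIn. The folder layout and the file names (Inventory.csv, the Archive subfolder, DataDump3.csv) are made up for illustration. The -File switch is an addition that assumes Windows PowerShell 3.0 or later; it keeps any directory whose name happens to start with DataDump out of the results.

```powershell
# Build a throwaway folder so the sketch does not depend on C:\DataIn existing.
$root = Join-Path ([System.IO.Path]::GetTempPath()) ("DataInDemo_" + [guid]::NewGuid().ToString('N'))
$sub  = Join-Path $root 'Archive'
New-Item -ItemType Directory -Path $sub -Force | Out-Null

# Hypothetical files: two matches and one non-match in the root folder...
'DataDump.csv', 'DataDump2.csv', 'Inventory.csv' | ForEach-Object {
    Set-Content -Path (Join-Path $root $_) -Value 'placeholder'
}
# ...and one more match in a subfolder, which only -Recurse will find.
Set-Content -Path (Join-Path $sub 'DataDump3.csv') -Value 'placeholder'

# -Filter narrows by name, -Recurse walks subfolders, -File skips directories.
$files = @(Get-ChildItem -Path $root -Filter 'DataDump*' -Recurse -File)

# Each result is a FileInfo object; FullName is the path to hand to a function.
$files | Select-Object -ExpandProperty FullName

# Clean up the demo folder.
Remove-Item -Path $root -Recurse -Force
```

The FullName property is what matters here, because a function that takes a path to a file needs the complete path, not just the file name.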

When I have a collection of FileInfo objects that point to the CSV files, I want to process each of the files and run my Convert-Data function on them. Here is the command I use (gci is an alias for Get-ChildItem and % is an alias for ForEach-Object):

gci C:\DataIn -Filter datadump* -Recurse | % {Convert-Data -path $_.FullName}
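For readers who prefer full cmdlet names, here is a rough sketch of the same pipeline with the aliases expanded. The real Convert-Data function lives in the earlier post and is not reproduced here, so Convert-DataStandIn below is a hypothetical placeholder: it assumes a Street column and only fixes casing, which may be quite different from what the real function does. The sample rows and the temp folder are also invented for the demo.

```powershell
# Hypothetical stand-in for Convert-Data. It is NOT the function from the
# earlier post; it only title-cases an assumed Street column.
function Convert-DataStandIn {
    param(
        [Parameter(Mandatory)]
        [string]$Path
    )
    $textInfo = [System.Globalization.CultureInfo]::InvariantCulture.TextInfo
    Import-Csv -Path $Path | ForEach-Object {
        # Lowercase first, because ToTitleCase leaves all-caps words alone.
        $_.Street = $textInfo.ToTitleCase($_.Street.ToLowerInvariant())
        $_
    }
}

# Throwaway input folder with one matching file and one file to be ignored.
$root = Join-Path ([System.IO.Path]::GetTempPath()) ("PipelineDemo_" + [guid]::NewGuid().ToString('N'))
New-Item -ItemType Directory -Path $root -Force | Out-Null
Set-Content -Path (Join-Path $root 'DataDump2.csv') -Value 'Name,Street', 'Ann,ROck Query Road'
Set-Content -Path (Join-Path $root 'Other.csv')     -Value 'Name,Street', 'Zed,ignored LANE'

# Same shape as the one-liner: Get-ChildItem for gci, ForEach-Object for %.
$converted = @(
    Get-ChildItem -Path $root -Filter 'DataDump*' -Recurse -File |
        ForEach-Object { Convert-DataStandIn -Path $_.FullName }
)
$converted | Format-Table -AutoSize

# Clean up the demo folder.
Remove-Item -Path $root -Recurse -Force
```

Swapping Convert-DataStandIn for the real Convert-Data and $root for C:\DataIn gives the command shown above. Aliases are handy at the console, while full names tend to read better in anything that gets saved or shared.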

That is it. I did not need to write a script to do this.

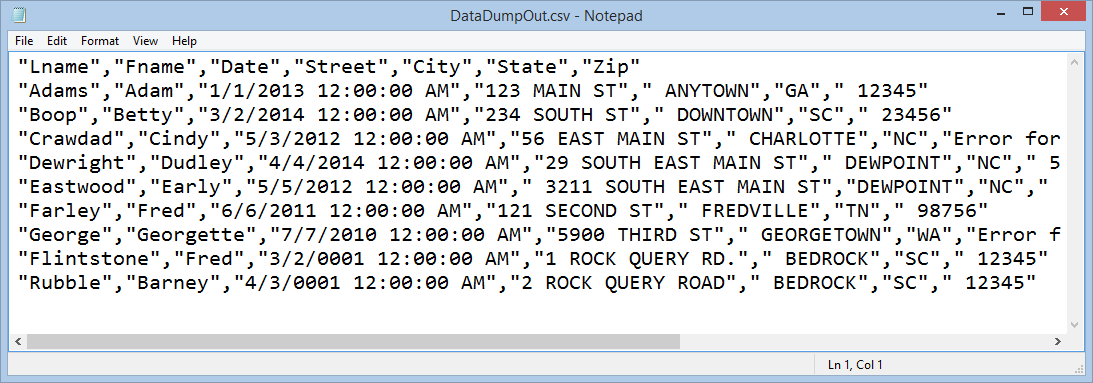

A new consolidated CSV file…

Now I need a new consolidated CSV file so that I can use it to import data into my new database. No problem. I recall the previous command (I simply press the Up arrow). Then at the end, I pipe it to Export-Csv and I point it to the DataOut folder. Here is the command:

gci C:\DataIn -Filter datadump* -Recurse | % {Convert-Data -path $_.FullName} | Export-Csv -Path C:\DataOut\DataDumpOut.csv -Append -NoTypeInformation
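One behavior worth knowing about -Append: it adds rows to whatever is already in the output file, so running the command a second time writes the same records again. The sketch below illustrates this under some assumptions. Import-Csv stands in for Convert-Data (which is not reproduced here), the Name and City columns and their values are invented, and the folders live under the temp directory rather than C:\DataIn and C:\DataOut.

```powershell
# Throwaway DataIn and DataOut folders for the demo.
$root   = Join-Path ([System.IO.Path]::GetTempPath()) ("ConsolidateDemo_" + [guid]::NewGuid().ToString('N'))
$inDir  = Join-Path $root 'DataIn'
$outDir = Join-Path $root 'DataOut'
New-Item -ItemType Directory -Path $inDir, $outDir -Force | Out-Null

# Two hypothetical dumps that share the same columns (3 data rows in total).
Set-Content -Path (Join-Path $inDir 'DataDump.csv')  -Value 'Name,City', 'Ann,Charlotte'
Set-Content -Path (Join-Path $inDir 'DataDump2.csv') -Value 'Name,City', 'Bob,Raleigh', 'Cy,Durham'

$outFile = Join-Path $outDir 'DataDumpOut.csv'

# First run. Every row flows into a single Export-Csv call, so the files are
# consolidated even without -Append. Import-Csv is a stand-in for Convert-Data.
Get-ChildItem -Path $inDir -Filter 'DataDump*' -Recurse -File |
    ForEach-Object { Import-Csv -Path $_.FullName } |
    Export-Csv -Path $outFile -NoTypeInformation
$firstRunCount = @(Import-Csv -Path $outFile).Count

# Second run, this time with -Append: the same rows are added again.
Get-ChildItem -Path $inDir -Filter 'DataDump*' -Recurse -File |
    ForEach-Object { Import-Csv -Path $_.FullName } |
    Export-Csv -Path $outFile -Append -NoTypeInformation
$secondRunCount = @(Import-Csv -Path $outFile).Count

"Rows after first run:  $firstRunCount"
"Rows after second run: $secondRunCount"

# Clean up the demo folders.
Remove-Item -Path $root -Recurse -Force
```

If a rerun should replace the output rather than grow it, dropping -Append (or deleting the old file first) is one option. Keeping -Append makes sense when the output file is meant to accumulate rows across separate runs.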

And here is the newly created CSV file:

Join me tomorrow when I will begin a discussion about tuples…you will love it.

I invite you to follow me on Twitter and Facebook. If you have any questions, send email to me at scripter@microsoft.com, or post your questions on the Official Scripting Guys Forum. See you tomorrow. Until then, peace.

Ed Wilson, Microsoft Scripting Guy
