Summary: Microsoft PowerShell MVP, Marco Shaw, shows how to use Windows PowerShell to download TechEd 2012 session content.

Microsoft Scripting Guy, Ed Wilson, is here. Today we have a guest blogger, Windows PowerShell MVP, Marco Shaw. For more from Marco, read his other Hey, Scripting Guy! guest blogs.

Here’s Marco…

I wrote a Windows PowerShell script last year to automatically download Microsoft TechEd sessions. I also wrote a guest Hey, Scripting Guy! Blog about that script: Download TechEd Sessions Automatically by Using PowerShell.

That script had a few different features like being able to get session information, and it had some logic for retrieving specific tracks. The script also used the OData service that Microsoft was providing at the time. I wasn’t able to find an OData service for any TechEd 2012 sessions, but Channel 9 on MSDN does provide an RSS feed for all of the Microsoft TechEd events (and others such as BUILD, too).

I was a bit lazy (and excited) when TechEd North America and Europe were held this past June. I didn’t write a script that time because I was downloading the sessions almost as soon as they were released. But that wasn’t very efficient: I was downloading them on different computers and downloading some of them more than once.

Now that TechEd New Zealand is over and some of its sessions are appearing online, and TechEd Australia is coming up as I write this, I decided to redo my script and make it much smaller. It’s going to be smaller because I seem to be interested in just about everything these days, so the logic for picking certain tracks won’t be useful anymore.

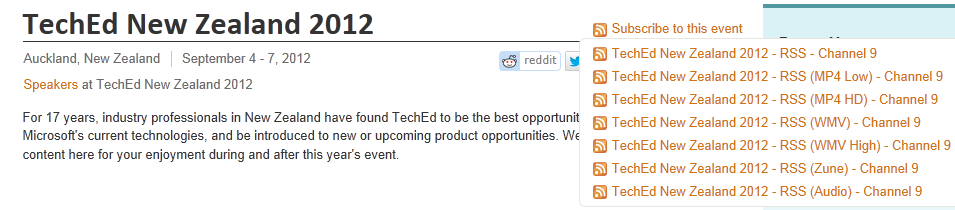

Channel 9 not only enables you to stream sessions live, but it also offers all kinds of video formats for you to download (including links to the PowerPoint presentations, if they are available). You can go directly to the site, go to Events, and then click TechEd. From there, you can pick the event you’re interested in and hover over Subscribe to this event to see all of the available RSS feeds. This is shown in the following image.

You can view the RSS feed directly in your browser. It shows things like the session overview and provides a link to the WMV file.

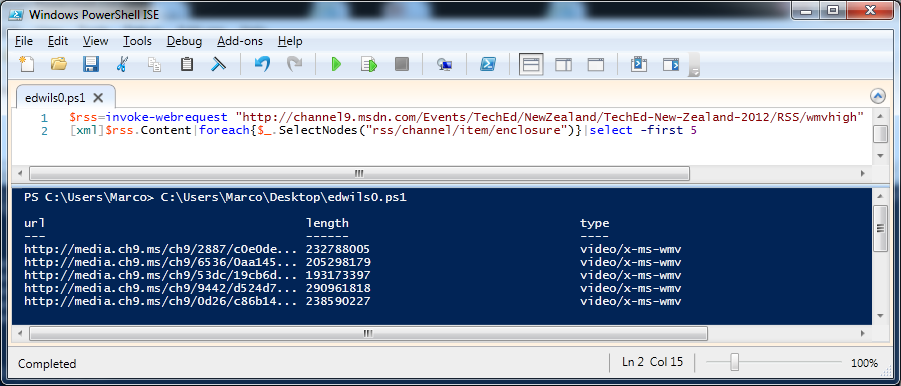

To automate the download, you can right-click and copy the URL to the RSS feed that interests you, depending on the format that you’d like to download. In my case, I wanted to get the WMV High downloads, so I ended up with the following URL.

Note: You’ll need Windows PowerShell 3.0 to do this. If you do not have Windows PowerShell 3.0, you can download the Windows Management Framework 3.0 from the Microsoft Download Center.

```powershell
$rss = Invoke-WebRequest "https://channel9.msdn.com/Events/TechEd/NewZealand/TechEd-New-Zealand-2012/RSS/wmvhigh"
```

RSS is basically XML, but I played around with the variable a bit and ended up with this command.

```powershell
[xml]$rss.Content
```

There are probably several ways to handle $rss to get what I needed, but this is what worked for me to get at the URL for each video.

```powershell
[xml]$rss.Content | foreach { $_.SelectNodes("rss/channel/item/enclosure") }
```

At the end of the previous line, I use an XPath query to get at the video links. XPath queries are very useful when you need to reach particular nodes in an XML structure. The previous command casts the feed content to XML and then returns all of the enclosure elements found inside a structure that looks like the following.

```xml
<rss>
  <channel>
    <item>
      …
      <enclosure url="" length="" type=""/>
      …
    </item>
    <item>
      …
      <enclosure url="" length="" type=""/>
      …
    </item>
    <item>
      …
      <enclosure url="" length="" type=""/>
      …
    </item>
    …
  </channel>
</rss>
```
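To experiment with the XPath query without calling the live feed, here is a minimal sketch that runs the same SelectNodes call against a small, hand-written RSS string. The feed contents and the example.com URLs are placeholders, not real Channel 9 data.

```powershell
# Hand-written sample feed (placeholder data) with the same rss/channel/item/enclosure shape.
$sampleRss = @'
<rss version="2.0">
  <channel>
    <title>Sample feed</title>
    <item>
      <title>Session one</title>
      <enclosure url="http://example.com/media/WSV310_Source.wmv" length="1000" type="video/x-ms-wmv"/>
    </item>
    <item>
      <title>Session two</title>
      <enclosure url="http://example.com/media/SIA205_Source.wmv" length="2000" type="video/x-ms-wmv"/>
    </item>
  </channel>
</rss>
'@

# Cast the text to an XmlDocument, then select every <enclosure> under rss/channel/item.
$xml = [xml]$sampleRss
$enclosures = $xml.SelectNodes('rss/channel/item/enclosure')

# PowerShell exposes XML attributes as properties, so .url reads the url attribute.
$enclosures | ForEach-Object { $_.url }
```

With the live feed, you would build $xml from the Content property of the Invoke-WebRequest result instead of from $sampleRss.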

Here’s a small sample of what I ended up with.

That helps me access the download URL for all of the sessions, but I wanted to add a bit more to the logic:

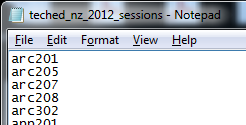

- I typed all of the sessions that interested me into a TXT file (I used the List view on the Channel 9 site to quickly scan all of the session abstracts).

- I wanted to make sure I didn’t already have the session downloaded.

- I wanted some way to stop the script without having to break a download in progress.

$my_list simply contains the list of all of the sessions that currently interest me, read in from my TXT file. This is shown here.

Now, let’s talk about performance, because even a script that is only a few lines long can sometimes be improved. What I have is list1 ($rss) and list2 (from my TXT file). When I started with Windows PowerShell over five years ago, I remember facing a similar issue. Being new, my approach was to loop through list1 and, for every entry, loop through list2 to see if there was a match.

The script I wrote over five years ago did what I needed it to do in an acceptable timeframe, but I was left wondering if it could be better.

Consider if, for example, the lists were 100 entries each. Based on the approach I used above, I would be making 100 x 100 = 10,000 comparisons! I would read the first line in list1, then run through all of the entries in list2 to look for a match, then I would read the second line in list1 and again run through all of the entries in list2. Eventually, I would be at line #100 in list1 and would do one final scan of all of the entries in list2. That’s a lot of processing, maybe for nothing!
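To make that arithmetic concrete, here is a small sketch that counts the equality checks a nested loop performs on two hypothetical 100-entry lists that share no entries. The function name Measure-NestedComparison and the SESnnn codes are made up for illustration.

```powershell
# Count the equality checks performed by a naive nested loop over two lists.
function Measure-NestedComparison {
    param(
        [string[]]$List1,
        [string[]]$List2
    )
    $comparisons = 0
    $found = 0
    foreach ($outer in $List1) {
        foreach ($inner in $List2) {
            $comparisons++          # every pair is compared once
            if ($outer -eq $inner) { $found++ }
        }
    }
    [pscustomobject]@{ Comparisons = $comparisons; MatchCount = $found }
}

# Two hypothetical lists: SES001..SES100 and SES101..SES200 (no overlap).
$list1 = 1..100   | ForEach-Object { 'SES{0:D3}' -f $_ }
$list2 = 101..200 | ForEach-Object { 'SES{0:D3}' -f $_ }

Measure-NestedComparison -List1 $list1 -List2 $list2   # Comparisons: 10000, MatchCount: 0
```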

The non-optimal pseudo-code could look something like this.

```powershell
get-content list1 | foreach { $tmp = $_; get-content list2 | foreach { if ($_ -eq $tmp) { get_the_file } } }
```

If you follow this pseudo-code, you might have just passed out! I know. I’m almost there…

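Yes, there are some things that I could have done, like adding a break near the end so the last script block looked more like “{get_the_file;break}”. (Careful with that one: inside a ForEach-Object script block, break doesn’t just end the inner pipeline; it exits the nearest enclosing loop and, if there isn’t one, can end the entire script.) But that still wouldn’t make the script something to be really proud of.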

I decided to ask the community for help, so I went to one of the online communities (probably an NNTP newsgroup back then). Someone mentioned that I could use an array and the -Contains operator, and he provided a simplified example. So instead of rereading list2 for every line of list1 and making 10,000 comparisons in the pipeline, as in my previous example, Windows PowerShell would simply check an in-memory array to determine whether it contained the string. The result: blazing fast compared to my original approach!
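Here is a minimal sketch of the array-plus-contains idea, using hypothetical session codes. It also shows a HashSet alternative, which is my own addition rather than the approach from that community thread; for lists of a few hundred entries, plain -contains is likely fast enough.

```powershell
# Hypothetical inputs: session codes found in the feed, and my wanted list.
$feedCodes = 'WSV310', 'SIA205', 'DEV301', 'WSV400'
$my_list   = 'WSV310', 'WSV400', 'OUC202'

# Approach 1: -contains checks whether the array holds the value (case-insensitive).
$wanted = $feedCodes | Where-Object { $my_list -contains $_ }

# Approach 2 (alternative): a HashSet with a case-insensitive comparer,
# giving constant-time lookups that only matter for very large lists.
$set = New-Object 'System.Collections.Generic.HashSet[string]' ([System.StringComparer]::OrdinalIgnoreCase)
foreach ($code in $my_list) { [void]$set.Add($code) }
$wantedFromSet = $feedCodes | Where-Object { $set.Contains($_) }

$wanted   # WSV310, WSV400
```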

So using that logic again here, I come up with this:

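```powershell
# Download the WMV High RSS feed for TechEd New Zealand 2012
$rss = Invoke-WebRequest "https://channel9.msdn.com/Events/TechEd/NewZealand/TechEd-New-Zealand-2012/RSS/wmvhigh"

# Session codes I want, one per line (for example, WSV310)
$my_list = Get-Content "C:\Users\Marco\Desktop\teched_nz_2012_sessions.txt"

# Keep only the enclosures whose file name starts with a wanted session code
[xml]$rss.Content | foreach { $_.SelectNodes("rss/channel/item/enclosure") } | where { $my_list -contains ($_.url.Split("/")[-1].Substring(0,6)) }
```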

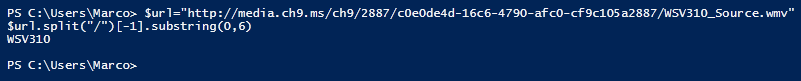

The last part might be a bit harder to digest. I’m taking the full text string, which is the download location of the WMV file, and chopping it up. I need to do this so I can determine if the current session is on my “wanted list.” A raw URL looks like this:

http://media.ch9.ms/ch9/2887/c0e0de4d-16c6-4790-afc0-cf9c105a2887/WSV310_Source.wmv

I’m only interested in the last part (WSV310), which is the actual session information. So I chose to split the string and get a section of it as shown here.
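Here is the same string surgery applied step by step to the sample URL above. It assumes, as the script does, that every session code is exactly six characters; the underscore-based variant at the end is a hypothetical alternative for codes of other lengths.

```powershell
$url = 'http://media.ch9.ms/ch9/2887/c0e0de4d-16c6-4790-afc0-cf9c105a2887/WSV310_Source.wmv'

# Split on "/" and take the last segment, which is the file name.
$fileName = $url.Split('/')[-1]              # WSV310_Source.wmv

# Keep the first six characters, which hold the session code.
$sessionCode = $fileName.Substring(0, 6)     # WSV310

# Hypothetical alternative: take everything before the first underscore.
$altCode = $fileName.Split('_')[0]           # WSV310
```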

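Now, I also need to address the second and third items in my list: not downloading a session I already have, and being able to stop the script between downloads. The following polished version addresses both of these items.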

I added the logic to check if the download file already existed on my local system. I also added a simple Start-Sleep to introduce a small pause in the script. The pause is just long enough for me to interrupt the script before the next download so that I can shut down my laptop to bring it with me somewhere.

Adding the logic to check if the download file already exists addresses two things:

- If I happen to download files from another computer, I can simply drop them into the $destination folder, and my script will not try to download them again.

- If I start to download the sessions but not all of them have been posted yet, I can run my script daily to make sure I grab anything new that has been posted since the last run.
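As an illustration only (not the script from the repository), a minimal sketch that combines the three ideas (filter by session code, skip files that already exist in $destination, pause between downloads) might look like this. The function name Save-WantedSession, its parameters, and the pluggable $Downloader script block are hypothetical; the default downloader calls Start-BitsTransfer, which is available only on Windows.

```powershell
function Save-WantedSession {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory)] [string[]]$Url,
        [Parameter(Mandatory)] [string[]]$WantedCode,
        [Parameter(Mandatory)] [string]$Destination,
        # Default action uses BITS (Windows only); pass another script block to test.
        [scriptblock]$Downloader = { param($Source, $Target) Start-BitsTransfer -Source $Source -Destination $Target },
        [int]$PauseSeconds = 5
    )
    foreach ($u in $Url) {
        $fileName = $u.Split('/')[-1]
        if ($fileName.Length -lt 6) { continue }                               # too short to hold a session code
        if ($WantedCode -notcontains $fileName.Substring(0, 6)) { continue }   # not on my list

        $target = Join-Path -Path $Destination -ChildPath $fileName
        if (Test-Path -LiteralPath $target) {
            Write-Verbose "Already have $fileName, skipping."
            continue
        }

        $null = & $Downloader $u $target
        $target                                  # report each file that was downloaded

        # Short pause: a safe window to press Ctrl+C before the next download starts.
        Start-Sleep -Seconds $PauseSeconds
    }
}
```

A typical call might be `Save-WantedSession -Url $urls -WantedCode $my_list -Destination 'D:\TechEd' -Verbose`, where $urls holds the enclosure url values and the destination path is a placeholder.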

I could potentially edit the if statement to alert me about sessions that weren’t found. It is quite possible that a particular session was never encoded in the quality I want, so I could then look at the sessions that don’t have a WMV High encoding and decide whether to go with a lower-quality download. To help with this, I could dump the missing session names to a second text file, look up the RSS feed for the lower-quality format, and automate those downloads as well.
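A minimal sketch of that report, using hypothetical session codes: anything on my list that the feed does not offer is collected so it can be saved for a second pass. The output path in the commented-out line is a placeholder.

```powershell
# Hypothetical inputs: my wanted list and the codes present in the WMV High feed.
$my_list   = 'WSV310', 'WSV400', 'OUC202'
$feedCodes = 'WSV310', 'SIA205', 'WSV400'

# Sessions I want that the feed does not (yet) offer in this format.
$missing = $my_list | Where-Object { $feedCodes -notcontains $_ }
$missing   # OUC202

# Save them for a later run against a lower-quality feed (placeholder path).
# $missing | Set-Content -Path 'C:\Temp\missing_sessions.txt'
```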

The item that seems to have the highest probability of failing is the Start-BitsTransfer line. This would be the first (and maybe the only) place where I would consider using a Try/Catch statement to handle any terminating errors. I chose not to add any error checking, because running the script again won’t cause any unwanted transfers.
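If you do want that Try/Catch, a minimal sketch could wrap the transfer, emit a warning, and return $true or $false instead of stopping the script. Invoke-SafeDownload and its $Downloader parameter are hypothetical. The -ErrorAction Stop is there because a Catch block only sees terminating errors, and cmdlet errors are not always terminating by default.

```powershell
function Invoke-SafeDownload {
    [CmdletBinding()]
    param(
        [string]$Source,
        [string]$Target,
        # Default uses BITS (Windows only); -ErrorAction Stop makes failures catchable.
        [scriptblock]$Downloader = { param($s, $t) Start-BitsTransfer -Source $s -Destination $t -ErrorAction Stop }
    )
    try {
        $null = & $Downloader $Source $Target
        $true
    }
    catch {
        Write-Warning "Download of $Source failed: $($_.Exception.Message)"
        $false
    }
}
```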

I did choose to do some manual work this time around, and this can be left as an exercise for the reader. For example, you could improve the script so it retrieves the RSS feed, scrolls through all of the sessions, asks the user if they want to download the session, saves this to a file (or even to memory), does some calculations to determine if there’s enough disk space, and begins the download.
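For the disk-space step of that exercise, one possible sketch totals the length attributes of the enclosures you picked and compares the result with the free space on the destination drive. The function name is hypothetical, and it assumes the feed’s length values are accurate byte counts.

```powershell
function Test-EnoughFreeSpace {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory)] [string]$Destination,
        [Parameter(Mandatory)] [long[]]$Length
    )
    # Total bytes the selected downloads are expected to need.
    $required = ($Length | Measure-Object -Sum).Sum

    # Find the drive (or filesystem root) that holds the destination folder.
    $root = [System.IO.Path]::GetPathRoot((Resolve-Path -LiteralPath $Destination).ProviderPath)
    $drive = New-Object System.IO.DriveInfo $root

    $drive.AvailableFreeSpace -ge $required
}
```

A call might look like `Test-EnoughFreeSpace -Destination 'D:\TechEd' -Length ($selected | ForEach-Object { [long]$_.length })`, where $selected holds the enclosure elements you chose and the path is a placeholder.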

Another idea might be that, after the download is complete, the script could check the local file size against the size reported in the RSS feed. I didn’t look into it, but I recently noticed that some of my WMV files were very small, so something must have gone wrong while downloading them. On average, each WMV file is roughly 200-300 MB.
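A minimal sketch of that size check compares a local file’s length with the length attribute of its enclosure element. The function name is hypothetical, and it assumes the feed reports the exact byte count; if the feed’s values turn out to be approximate, a tolerance would be needed instead of an exact match.

```powershell
function Test-DownloadComplete {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory)] [string]$Path,
        [Parameter(Mandatory)] [long]$ExpectedLength
    )
    # A missing file is treated as an incomplete download.
    if (-not (Test-Path -LiteralPath $Path)) { return $false }

    # Compare the size on disk with the size reported in the RSS feed.
    (Get-Item -LiteralPath $Path).Length -eq $ExpectedLength
}
```

For example, `Test-DownloadComplete -Path $target -ExpectedLength ([long]$enclosure.length)`, where $target and $enclosure stand for the local file and its enclosure element.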

The complete script is available via the Scripting Guys Script Repository: Automatically Download Microsoft TechEd 2012 Sessions with Windows PowerShell 3.0.

~ Marco

Thank you, Marco, for writing a really cool blog, which is not only fun but also contains useful information.

Join me tomorrow for a guest blog by Microsoft PowerShell MVP, Bartek Bielawski, about using his AST module. It is cool.

I invite you to follow me on Twitter and Facebook. If you have any questions, send email to me at scripter@microsoft.com, or post your questions on the Official Scripting Guys Forum. See you tomorrow. Until then, peace.

Ed Wilson, Microsoft Scripting Guy
