Introduction

In this blog post, we explore how Large Language Models (LLMs) can extract valuable insights from customer feedback. By analyzing feedback, businesses can make customer-centric decisions, ultimately enhancing satisfaction. The post highlights the insights gathered from an engagement with a leading retailer, where LLMs were used to extract information from shopper feedback. We delve into extracting themes, sentiment, and competitor comparisons, as well as crafting effective prompts for optimal results from LLMs.

The problem

Organizations employ various strategies to enhance customer satisfaction. Collecting customer feedback and analyzing it to address concerns is a common practice. However, manually reviewing large volumes of feedback is time-consuming, especially when tens of thousands of comments arrive every day. Traditional NLP models are found to be ineffective, especially for long review comments. In this post, we present an approach that uses LLMs to generate actionable insights from feedback, so that the retailer can improve its products and services and stay ahead of its competition.

Objective

Prompt engineering is the practice of developing and optimizing prompts to use Large Language Models effectively across a variety of applications. The main objective of this post is to walk you through crafting an effective prompt iteratively and refining it until it produces the desired output. We discuss how to design a prompt that extracts themes, sentiment, and competitor comparisons from shoppers’ feedback for retail stores. We also briefly cover a high-level architecture built mainly on Azure OpenAI with GPT models. Although the architecture discussed here generates insights from shoppers’ comments about their experience in physical stores, it can be adapted to any industry that collects customer feedback and wants to understand sentiment and act on it to improve products and services.

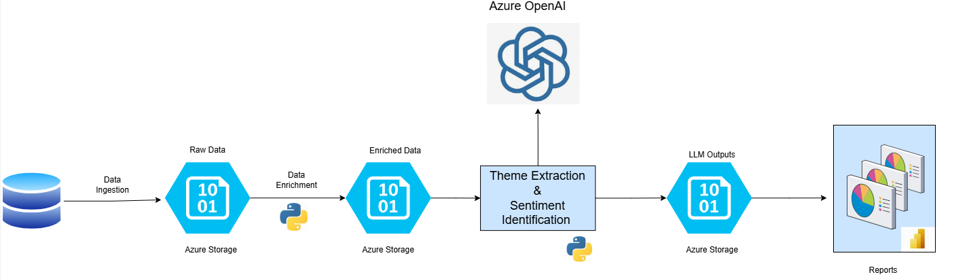

Architecture

The high-level architecture is shown below. Shopper feedback is available from a data source. A data pipeline ingests the data from the data store and performs processing such as data cleansing and data enrichment before large language models are used to generate insights from the feedback. The Themes Extraction and Sentiment Generator module calls Azure OpenAI to generate the themes for each review comment. For each theme, the model also generates the associated sentiment and any competitor comparisons. We do not cover the architecture in detail, because the main purpose of this post is crafting a prompt that generates the desired insights from feedback while minimizing hallucinations from the LLM.
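As a rough illustration of the module's call to the model, here is a minimal sketch. It assumes the `client` argument is an `openai.AzureOpenAI` instance from the `openai` Python package; the function names, the `deployment` parameter, and the choice of `temperature=0` are assumptions made for this example and are not taken from the engagement.

```python
from typing import Any


def build_messages(system_prompt: str, review: str) -> list[dict[str, str]]:
    """Pair the system prompt with a single review comment."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": review},
    ]


def generate_insights(client: Any, deployment: str, system_prompt: str, review: str) -> str:
    """Send one review to a chat model and return the raw text of the reply.

    `client` is assumed to expose `chat.completions.create`, as an
    `openai.AzureOpenAI` instance does.
    """
    response = client.chat.completions.create(
        model=deployment,  # name of your Azure OpenAI deployment (assumption)
        messages=build_messages(system_prompt, review),
        temperature=0,     # assumption: reduce randomness between runs
    )
    return response.choices[0].message.content
```

Passing the client in as a parameter keeps credentials and endpoint configuration outside the function and makes it easy to substitute a stub when testing the rest of the pipeline.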

Themes are the underlying messages or insights that feedback conveys to the business and that it can use to improve the customer experience. In a retail scenario, a theme can be a category of the shopping experience that a shopper describes. Examples of themes include Checkout Experience, Store Cleanliness, Product Availability, and Product Freshness. Each piece of feedback has one or more associated themes and a sentiment. For example, a shopper may report that some products were missing from the store. This is negative sentiment for the theme Product Availability. Another customer may mention that the store was very clean. This is positive sentiment for the theme Store Cleanliness.

Prompt Engineering for Insights Generation

To get the best and most relevant outcome from an LLM, it is important to arrive at the prompt through an iterative, evolutionary approach. In this section we present some prompt engineering techniques for extracting themes, sentiment, and competitor comparisons from feedback comments. Note that the prompt snippets in this post might not be the best fit for every use case. The prompts can be refined by trying out the many prompt engineering techniques that continue to evolve over time. The Resources section at the end of this post lists a few references you can consult when applying recommended prompt engineering techniques.

Enrichment of data

The feedback data might need some enrichment before it can be used to extract insights. Once you have the feedback data, first look at how to enrich it with other fields from the source data. Sometimes the feedback collection tool lets customers select a category for their comment. In this case, prefixing the category to the comment gives the comment useful context. The feedback data might also need cleanup, such as removing one-word comments or meaningless comments such as Nil, Null, or No comments. Validating the data against the schema helps avoid hallucinations and exceptions from the LLM during insight generation. Building a data pipeline that performs data ingestion, data cleansing, data enrichment, and schema validation, and that makes the refined data available for the LLM calls, is the first step towards getting the best outcome from the LLM.
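The cleanup and enrichment steps described above can be sketched as follows. This is a minimal illustration, not the pipeline used in the engagement: the record layout (`comment` and `category` keys) is hypothetical, and the placeholder list extends the Nil, Null, and No comments examples with a few assumed variants.

```python
import re

# "nil", "null" and "no comments" come from the text; the rest are assumed variants.
MEANINGLESS = {"nil", "null", "no comments", "no comment", "na", "n/a", "none"}


def clean_and_enrich(records):
    """Drop empty, one-word, or placeholder comments and prefix the category.

    Each record is assumed to be a dict with a "comment" key and an optional
    "category" key; both field names are hypothetical.
    """
    refined = []
    for record in records:
        comment = record.get("comment")
        if not isinstance(comment, str):
            continue  # simple schema check: the comment must be present and be text
        comment = re.sub(r"\s+", " ", comment).strip()
        if comment.lower().rstrip(".!") in MEANINGLESS:
            continue  # placeholder text with no insight
        if len(comment.split()) < 2:
            continue  # one-word comments are removed, as described above
        category = record.get("category")
        if isinstance(category, str) and category.strip():
            comment = f"{category.strip()}: {comment}"  # category gives context
        refined.append(comment)
    return refined
```

For example, a record with category `Checkout` and comment `Queues were too long` becomes `Checkout: Queues were too long`, while a bare `Nil` is dropped.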

Start with basic prompt

Building the prompt is an iterative process. Start with a very basic prompt. It can be as simple as “Extract all the themes mentioned in the provided feedback and for each of the themes generate the sentiment”. Check how consistently themes are generated by running the prompt many times on daily or weekly feedback data. You may find that the themes are not consistent from run to run, either for the same data or for data from different days. The reason is that a theme can be expressed in various ways. For example, Cleanliness, Neatness, and Tidiness all pertain to the cleanliness of the store, so different runs may produce different labels with the same underlying meaning. The resulting inconsistent themes cannot be used for analytics, such as trend analysis graphs for themes.
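One simple way to quantify this run-to-run inconsistency is to compare the sets of theme labels from two runs. The sketch below uses Jaccard similarity, which is a choice made for this example rather than a measure used in the engagement; the function name is hypothetical.

```python
def theme_overlap(run_a, run_b):
    """Jaccard similarity between the theme labels produced by two runs.

    Labels are compared case-insensitively. 1.0 means identical label sets,
    0.0 means the runs share no labels.
    """
    a = {theme.strip().lower() for theme in run_a}
    b = {theme.strip().lower() for theme in run_b}
    if not a and not b:
        return 1.0  # two empty runs are trivially consistent
    return len(a & b) / len(a | b)


run_1 = ["Cleanliness", "Availability", "Service"]
run_2 = ["Neatness", "availability", "Service"]
print(theme_overlap(run_1, run_2))  # 2 shared labels out of 4 distinct ones
```

A low score flags label drift such as Cleanliness versus Neatness, although it cannot tell that the two labels mean the same thing; that still needs manual review.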

Here is a sample prompt that you can start with to generate insights from review comments.

system_prompt = """System message - You are an AI assistant that helps to analyze customer reviews.

The output of the analysis should be a list of topics that are mentioned in the review.

The format of the output should be a JSON object with topics as the keys.

The topics should be as general as possible, e.g. Cleanliness, Availability, Quality, Service.

"""

Refine the prompt

Prompt engineering techniques play an important role in refining the prompt to get the desired outcome. Themes differ across industry verticals. For example, the themes in retail may differ from those in banking, which in turn differ from those in hospitality. One technique for generating consistent themes is to identify a predefined set of themes. One way to arrive at this set is to apply the prompt repeatedly to feedback data from different time periods, then manually review the generated themes and group those that are similar. Once the groups are identified, select the most appropriate theme from each group. For example, from the group that contains ‘Cleanliness’, ‘Neatness’, and ‘Tidiness’, the right theme to select might be ‘Cleanliness’. A subject matter expert from the retail domain can help define the predefined set of themes for the retail industry.
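Once the manual grouping exists, it can be recorded as a lookup that maps every variant label to its selected theme. The sketch below is illustrative only: the Cleanliness group is the example from the text, the second group and all names in the code are hypothetical.

```python
# Hypothetical result of the manual review: selected theme -> similar labels.
THEME_GROUPS = {
    "Cleanliness": ["Neatness", "Tidiness"],
    "Product Availability": ["Availability", "Out of stock"],  # illustrative only
}

# Invert the groups into a lookup from any label to its selected theme.
CANONICAL = {}
for selected, variants in THEME_GROUPS.items():
    for label in [selected, *variants]:
        CANONICAL[label.lower()] = selected


def canonical_theme(raw_theme):
    """Return the selected theme, or None if the label has not been grouped yet."""
    return CANONICAL.get(raw_theme.strip().lower())
```

Labels that return `None` are candidates for the next round of manual review.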

Here is a sample of predefined themes that are relevant to retail stores:

Checkout Experience

Insufficient Staff

Product Availability

Product Pricing

Product Quality

Product Variety

Shopping Experience

Store Cleanliness

Store Layout

Once the relevant themes are identified, the prompt can be refined to pick themes from the predefined list above. Here is an example of a prompt that uses a predefined list to generate the themes from the feedback.

System message: As an intelligent AI assistant, your role is to critically analyze the customer reviews to generate review category.

These reviews are the feedback and suggestion given by the customer when they came to shop in stores.

Here's how you'll do it:

- Customer reviews will be presented in the format 'review comments'.

- Your task is to analyze each review, and classify them under pre-defined themes.

- You will be provided with predefined themes. You have to choose the theme which best describes the review.

- The output should be in a JSON format.

Here is the list of predefined themes:

Checkout Experience

Insufficient Staff

Product Availability

Product Pricing

Product Quality

Product Variety

Shopping Experience

Store Cleanliness

Store Layout

To get the LLM output in a desired format, such as key-value pairs, specify the output format in the prompt.
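Even with the format stated in the prompt, it is worth checking each response before using it. The sketch below parses the output and rejects anything outside the predefined list. It assumes the prompt asked for output shaped like `{"theme": "<theme>"}`; that key name and the function name are hypothetical, since the post does not fix an exact schema.

```python
import json

PREDEFINED_THEMES = {
    "Checkout Experience", "Insufficient Staff", "Product Availability",
    "Product Pricing", "Product Quality", "Product Variety",
    "Shopping Experience", "Store Cleanliness", "Store Layout",
}


def parse_theme(raw_output):
    """Return the theme from the model output, or None if it is unusable.

    Assumes output shaped like {"theme": "<theme>"}; the key is hypothetical.
    """
    try:
        data = json.loads(raw_output)
    except (json.JSONDecodeError, TypeError):
        return None  # the model did not return valid JSON text
    if not isinstance(data, dict):
        return None  # valid JSON, but not a key-value object
    theme = data.get("theme")
    # Reject labels the model invented outside the predefined list.
    if isinstance(theme, str) and theme in PREDEFINED_THEMES:
        return theme
    return None
```

Responses that fail the check can be logged and retried, or set aside for manual review.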

Prompt for identifying the sentiments

In addition to extracting themes from feedback, the LLM can identify the sentiment expressed in it. The earlier prompt can be modified to generate the sentiment for each theme.

Here is an example of a prompt that extracts the themes and the sentiment from customer feedback.

System message: As an intelligent AI assistant, your role is to critically analyze the customer reviews to generate review category.

These reviews are the feedback and suggestion given by the customer when they came to shop in stores.

Here's how you'll do it:

- Customer reviews will be presented in the format 'review comments'.

- Your task is to analyze each review, and classify them under pre-defined themes.

- You will be provided with predefined themes. You have to choose the theme which best describes the review.

- You also have to extract sentiment from the review for each of the themes. The sentiment can be "positive", "negative" or "neutral".

- The output should be in a JSON format.

Here is the list of predefined themes:

Checkout Experience

Insufficient Staff

Product Availability

Product Pricing

Product Quality

Product Variety

Shopping Experience

Store Cleanliness

Store Layout

Prompt for extracting competitor comparisons

Feedback often references competitors in relation to the products and services offered by your company. It is crucial to understand how customers perceive your company compared with its competitors. By analyzing feedback comments and identifying competitor comparisons and the associated sentiment, your company can address negative aspects and enhance customer satisfaction.

The earlier prompt can be extended to extract competitor comparisons from feedback.

Here is an example of a prompt that extracts competitor comparisons from feedback.

System message: As an intelligent AI assistant, your role is to critically analyze the customer reviews to generate review category.

These reviews are the feedback and suggestion given by the customer when they came to shop in stores.

Here's how you'll do it:

- Customer reviews will be presented in the format 'review comments'.

- Your task is to analyze each review, and classify them under pre-defined themes.

- You will be provided with predefined themes. You have to choose the theme which best describes the review.

- You also have to extract sentiment from the review for each of the themes. The sentiment can be "positive", "negative" or "neutral".

- You also have to list any other supermarkets which are <your company name>'s competitors like '<competitor 1>', '<competitor 2>', or '<competitor 3>'. If a competitor is not mentioned, list 'NA'.

- The output should be in a JSON format.

Here is the list of predefined themes:

Checkout Experience

Insufficient Staff

Product Availability

Product Pricing

Product Quality

Product Variety

Shopping Experience

Store Cleanliness

Store Layout

Reports generation from LLM outputs

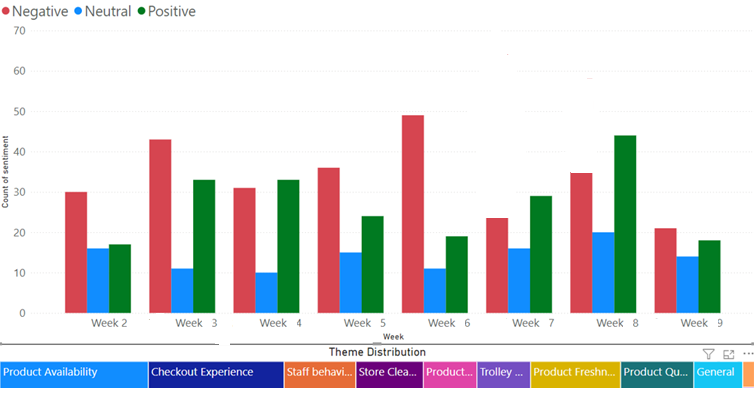

Using the output from LLMs effectively is crucial for businesses seeking to derive meaningful insights and take informed actions. Various reports can be generated from this output. These reports may use graphs to visualize both positive and negative sentiment. They can also highlight the themes that most influenced these sentiments, identifying the most impactful factors. By creating weekly trend reports, businesses can validate whether past actions improved shoppers’ sentiment. Businesses can also provide a drill-down feature that lets users view the actual shopper comments directly from the sentiment analysis graph. Store managers can use these reports to take effective actions that enhance customer satisfaction. The insights generated at the store level can then be aggregated to higher levels in the store hierarchy, including district, state, regional, and national levels. Business managers at each level can access reports that help them make informed decisions within the store hierarchy.
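The counts behind such a report can be produced with a simple aggregation over the parsed LLM output. The sketch below is one possible approach under stated assumptions: the record layout (`theme` and `sentiment` keys) is hypothetical, and the net sentiment score (share of positive minus share of negative comments) is a metric chosen for this example, not one prescribed by the engagement.

```python
from collections import Counter, defaultdict


def sentiment_by_theme(records):
    """Count sentiments per theme.

    Each record is assumed to be a dict with "theme" and "sentiment" keys
    (hypothetical field names for the parsed LLM output).
    """
    counts = defaultdict(Counter)
    for record in records:
        counts[record["theme"]][record["sentiment"]] += 1
    return {theme: dict(counter) for theme, counter in counts.items()}


def net_sentiment(counts):
    """Share of positive minus share of negative comments, per theme."""
    scores = {}
    for theme, counter in counts.items():
        total = sum(counter.values())
        scores[theme] = (counter.get("positive", 0) - counter.get("negative", 0)) / total
    return scores
```

Running the same aggregation on records filtered by store, district, or region gives the per-level views described above, and computing it week by week gives the trend line.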

Summary

In this blog post, we explored how Large Language Models (LLMs) can be used to extract valuable insights from customer feedback. We presented a high-level architecture that uses the Azure OpenAI service with GPT models to extract themes, sentiment, and competitor comparisons from customer feedback. We also underscored the significance of data enrichment and prompt engineering in achieving the desired outputs from LLMs. This post serves as a reference for anyone seeking to use LLMs to extract meaningful insights from customer feedback. One topic not covered here is the importance of evaluating the LLM output, using different evaluation techniques, before the solution is rolled out to production. If the reviews or feedback contain any sensitive data, make sure it is masked before the reviews are sent to the LLM, so that the solution follows Responsible AI principles.

Resources

Azure OpenAI Service Documentation

Introduction to Prompt Engineering

Acknowledgements

I extend my gratitude to my colleagues whose contributions to the engagement shaped the insights presented in this blog.

The feature image was generated using Bing Image Creator. Terms can be found here.