Four years ago, around the time .NET Core 2.0 was being released, I wrote Performance Improvements in .NET Core to highlight the quantity and quality of performance improvements finding their way into .NET. With its very positive reception, I did so again a year later with Performance Improvements in .NET Core 2.1, and an annual tradition was born. Then came Performance Improvements in .NET Core 3.0, followed by Performance Improvements in .NET 5. Which brings us to today.

The dotnet/runtime repository is the home of .NET’s runtimes, runtime hosts, and core libraries. Since its main branch forked a year or so ago to be for .NET 6, there have been over 6500 merged PRs (pull requests) into the branch for the release, and that’s excluding automated PRs from bots that do things like flow dependency version updates between repos (not to discount the bots’ contributions; after all, they’ve actually received interview offers by email from recruiters who just possibly weren’t being particularly discerning with their candidate pool). I at least peruse if not review in depth the vast majority of all those PRs, and every time I see a PR that is likely to impact performance, I make a note of it in a running log, giving me a long list of improvements I can revisit when it’s blog time. That made this August a little daunting, as I sat down to write this post and was faced with the list I’d curated of almost 550 PRs. Don’t worry, I don’t cover all of them here, but grab a large mug of your favorite hot beverage, and settle in: this post takes a rip-roarin’ tour through ~400 PRs that, all together, significantly improve .NET performance for .NET 6.

Please enjoy!

Table Of Contents

- Benchmarking Setup

- JIT

- GC

- Threading

- System Types

- Arrays, Strings, Spans

- Buffering

- IO

- Networking

- Reflection

- Collections and LINQ

- Cryptography

- “Peanut Butter”

- JSON

- Interop

- Startup

- Tracing

- Size

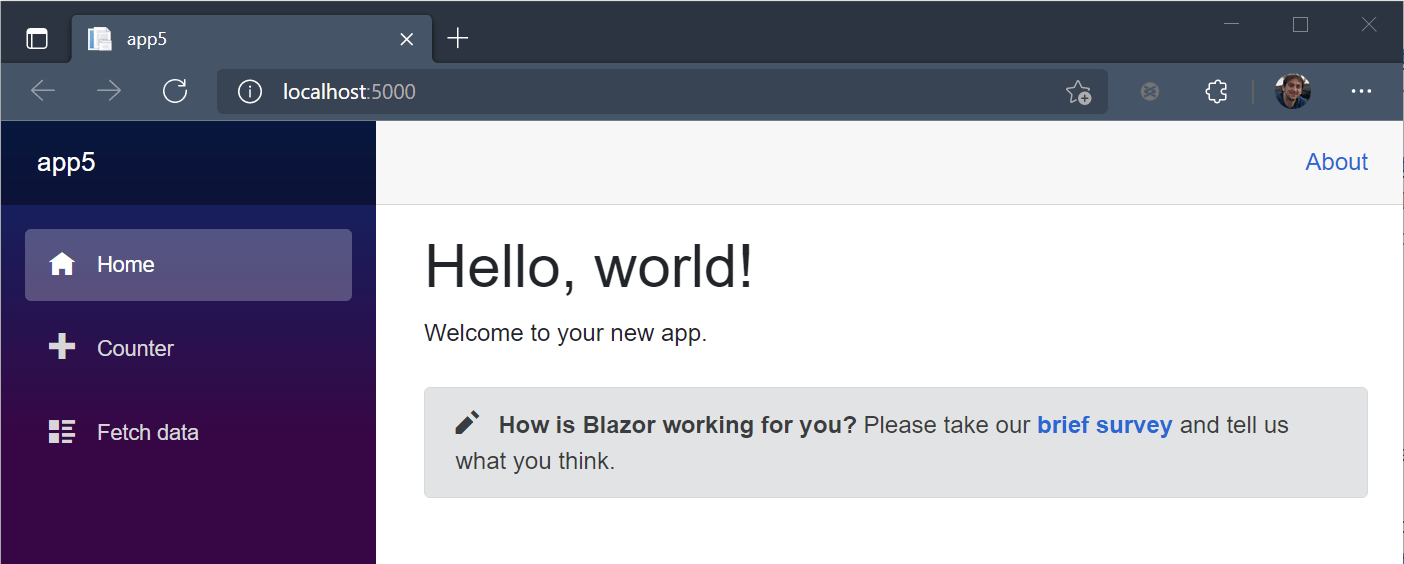

- Blazor and mono

- Is that all?

Benchmarking Setup

As in previous posts, I’m using BenchmarkDotNet for the majority of the examples throughout. To get started, I created a new console application:

dotnet new console -o net6perf

cd net6perf

and added a reference to the BenchmarkDotNet NuGet package:

dotnet add package benchmarkdotnet

That yielded a net6perf.csproj, which I then overwrote with the following contents; most importantly, this includes multiple target frameworks so that I can use BenchmarkDotNet to easily compare performance on them:

<Project Sdk="Microsoft.NET.Sdk">

<PropertyGroup>

<OutputType>Exe</OutputType>

<TargetFrameworks>net48;netcoreapp2.1;netcoreapp3.1;net5.0;net6.0</TargetFrameworks>

<Nullable>annotations</Nullable>

<AllowUnsafeBlocks>true</AllowUnsafeBlocks>

<LangVersion>10</LangVersion>

<ServerGarbageCollection>true</ServerGarbageCollection>

</PropertyGroup>

<ItemGroup>

<PackageReference Include="benchmarkdotnet" Version="0.13.1" />

</ItemGroup>

<ItemGroup Condition=" '$(TargetFramework)' == 'net48' ">

<Reference Include="System.Net.Http" />

</ItemGroup>

</Project>

I then updated the generated Program.cs to contain the following boilerplate code:

using BenchmarkDotNet.Attributes;

using BenchmarkDotNet.Running;

using BenchmarkDotNet.Columns;

using BenchmarkDotNet.Configs;

using BenchmarkDotNet.Reports;

using BenchmarkDotNet.Order;

using Perfolizer.Horology;

using System;

using System.Buffers;

using System.Buffers.Binary;

using System.Buffers.Text;

using System.Collections;

using System.Collections.Concurrent;

using System.Collections.Generic;

using System.Collections.Immutable;

using System.Collections.ObjectModel;

using System.Diagnostics.CodeAnalysis;

using System.Diagnostics;

using System.Diagnostics.Tracing;

using System.Globalization;

using System.IO;

using System.Linq;

using System.Net;

using System.Net.Http;

using System.Net.Sockets;

using System.Net.WebSockets;

using System.Numerics;

using System.Reflection;

using System.Runtime.CompilerServices;

using System.Runtime.InteropServices;

using System.Security.Cryptography;

using System.Security.Cryptography.X509Certificates;

using System.Text;

using System.Threading;

using System.Threading.Tasks;

using System.IO.Compression;

#if NETCOREAPP3_0_OR_GREATER

using System.Text.Encodings.Web;

using System.Text.Json;

using System.Text.Json.Serialization;

#endif

[DisassemblyDiagnoser(maxDepth: 1)] // change to 0 for just the [Benchmark] method

[MemoryDiagnoser(displayGenColumns: false)]

public class Program

{

public static void Main(string[] args) =>

BenchmarkSwitcher.FromAssembly(typeof(Program).Assembly).Run(args, DefaultConfig.Instance

//.WithSummaryStyle(new SummaryStyle(CultureInfo.InvariantCulture, printUnitsInHeader: false, SizeUnit.B, TimeUnit.Microsecond))

);

// BENCHMARKS GO HERE

}

With minimal friction, you should be able to copy and paste a benchmark from this post to where it says // BENCHMARKS GO HERE, and run the app to execute the benchmarks. You can do so with a command like this:

dotnet run -c Release -f net48 --filter "**" --runtimes net48 net5.0 net6.0

This tells BenchmarkDotNet:

- Build everything in a release configuration,

- build it targeting the .NET Framework 4.8 surface area,

- don’t exclude any benchmarks,

- and run each benchmark on each of .NET Framework 4.8, .NET 5, and .NET 6.

In some cases, I’ve added additional frameworks to the list (e.g. netcoreapp3.1) to highlight cases where there’s a continuous improvement release-over-release. In other cases, I’ve only targeted .NET 6.0, such as when highlighting the difference between an existing API and a new one in this release. Most of the results in the post were generated by running on Windows, primarily so that .NET Framework 4.8 could be included in the result set. However, unless otherwise called out, all of these benchmarks show comparable improvements when run on Linux or on macOS. Simply ensure that you have installed each runtime you want to measure. I’m using a nightly build of .NET 6 RC1, along with the latest released downloads of .NET 5 and .NET Core 3.1.

Final note and standard disclaimer: microbenchmarking can be very subject to the machine on which a test is run, what else is going on with that machine at the same time, and sometimes seemingly the way the wind is blowing. Your results may vary.

Let’s get started…

JIT

Code generation is the foundation on top of which everything else is built. As such, improvements to code generation have a multiplicative effect, with the power to improve the performance of all code that runs on the platform. .NET 6 sees an unbelievable number of performance improvements finding their way into the JIT (just-in-time compiler), which is used to translate IL (intermediate language) into assembly code at run-time, and which is also used for AOT (ahead-of-time compilation) as part of Crossgen2 and the R2R format (ReadyToRun).

Since it’s so foundational to good performance in .NET code, let’s start by talking about inlining and devirtualization. “Inlining” is the process by which the compiler takes the code from a method callee and emits it directly into the caller. This avoids the overhead of the method call, but that’s typically only a minor benefit. The major benefit is it exposes the contents of the callee to the context of the caller, enabling subsequent (“knock-on”) optimizations that wouldn’t have been possible without the inlining. Consider a simple case:

[MethodImpl(MethodImplOptions.NoInlining)]

public static int Compute() => ComputeValue(123) * 11;

[MethodImpl(MethodImplOptions.NoInlining)]

private static int ComputeValue(int length) => length * 7;

Here we have a method, ComputeValue, which just takes an int and multiplies it by 7, returning the result. This method is simple enough to always be inlined, so for demonstration purposes I’ve used MethodImplOptions.NoInlining to tell the JIT to not inline it. If I then look at what assembly code the JIT produces for Compute and ComputeValue, we get something like this:

; Program.Compute()

sub rsp,28

mov ecx,7B

call Program.ComputeValue(Int32)

imul eax,0B

add rsp,28

ret

; Program.ComputeValue(Int32)

imul eax,ecx,7

ret

Compute loads the value 123 (0x7b in hex) into the ecx register, which holds the argument to ComputeValue, calls ComputeValue, then takes the result (from the eax register) and multiplies it by 11 (0xb in hex), returning that result. We can see ComputeValue in turn takes the input from ecx and multiplies it by 7, storing the result into eax for Compute to consume. Now, what happens if we remove the NoInlining from ComputeValue:

; Program.Compute()

mov eax,24FF

ret

The multiplications and method calls have evaporated, and we’re left with Compute simply returning the value 0x24ff, as the JIT has computed at compile-time the result of (123 * 7) * 11, which is 9471, or 0x24ff in hex. In other words, we didn’t just save the method call, we also transformed the entire operation into a constant. Inlining is a very powerful optimization.
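The folded constant is easy to check by hand. The following is a small standalone sketch (a top-level program, separate from the benchmark harness above) that recomputes the value and prints it in hex, so it can be compared against the mov eax,24FF instruction:

```csharp
using System;

// Same shape as the Compute/ComputeValue pair above, written as local
// functions so the snippet runs as a standalone top-level program.
static int ComputeValue(int length) => length * 7;
static int Compute() => ComputeValue(123) * 11;

int result = Compute();

// 123 * 7 = 861, and 861 * 11 = 9471, which is 0x24FF in hexadecimal.
Console.WriteLine($"{result} = 0x{result:X}"); // prints "9471 = 0x24FF"
```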

Of course, you also need to be careful with inlining. If you inline too much, you bloat the code in your methods, potentially very significantly. That can make microbenchmarks look very good in some circumstances, but it can also have some bad net effects. Let’s say all of the code associated with Int32.Parse is 1,000 bytes of assembly code (I’m making up that number for explanatory purposes), and let’s say we forced it to all always inline. Every call site to Int32.Parse will now end up carrying a (potentially optimized with knock-on effects) copy of the code; call it from 100 different locations, and you now have 100,000 bytes of assembly code rather than 1,000 that are reused. That means more memory consumption for the assembly code, and if it was AOT-compiled, more size on disk. But it also has other potentially deleterious effects. Computers use very fast and limited size instruction caches to store code to be run. If you have 1,000 bytes of code that you invoke from 100 different places, each of those places can potentially reuse the bytes previously loaded into the cache. But give each of those places their own (likely mutated) copy, and as far as the hardware is concerned, that’s different code, meaning the inlining can result in code actually running slower due to forcing more evictions and loads from and to that cache. There’s also the impact on the JIT compiler itself, as the JIT has limits on things like the size of a method before it’ll give up on optimizing further; inline too much code, and you can exceed said limits.
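Developers can nudge these decisions themselves through the same MethodImplOptions enum used for NoInlining above. Here is a minimal sketch of both directions; the helper names (Square, ThrowNegative, SquareNonNegative) are hypothetical and chosen only for illustration, and whether either hint pays off in real code is something to measure rather than assume:

```csharp
using System;
using System.Runtime.CompilerServices;

// Hypothetical hot helper: ask the JIT to inline it whenever it is able to,
// rather than only when its heuristics consider inlining profitable.
[MethodImpl(MethodImplOptions.AggressiveInlining)]
static int Square(int x) => x * x;

// Hypothetical cold helper: keep rarely executed throwing code out of line
// so that callers stay small.
[MethodImpl(MethodImplOptions.NoInlining)]
static void ThrowNegative(int x) =>
    throw new ArgumentOutOfRangeException(nameof(x), x, "Value must be non-negative.");

static int SquareNonNegative(int x)
{
    if (x < 0) ThrowNegative(x); // cold path stays a call
    return Square(x);            // hot path is a candidate for inlining
}

Console.WriteLine(SquareNonNegative(12)); // prints 144
```

Keeping the throw in its own non-inlined method is one way to spend the code-size budget on the path that is expected to run.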

Net net, inlining is hugely powerful, but also something to be employed carefully, and the JIT methodically (but necessarily quickly) weighs decisions it makes about what to inline and what not to with a variety of heuristics.

In this light, dotnet/runtime#50675, dotnet/runtime#51124, dotnet/runtime#52708, dotnet/runtime#53670, and dotnet/runtime#55478 improved the JIT by helping it to understand (and more efficiently understand) what methods were being invoked by the callee; by teaching the inliner about new things to look for, e.g. whether the callee could benefit from folding if handed constants; and by teaching the inliner how to inline various constructs it previously considered off-limits, e.g. switches. Let’s take just one example from a comment on one of those PRs:

private int _value = 12345;

private byte[] _buffer = new byte[100];

[Benchmark]

public bool Format() => Utf8Formatter.TryFormat(_value, _buffer, out _, new StandardFormat('D', 2));

Running this for .NET 5 vs .NET 6, we can see a few things changed:

| Method | Runtime | Mean | Ratio | Code Size |

|---|---|---|---|---|

| Format | .NET 5.0 | 13.21 ns | 1.00 | 1,649 B |

| Format | .NET 6.0 | 10.37 ns | 0.78 | 590 B |

First, it got faster, yet there was little-to-no work done within Utf8Formatter itself in .NET 6 to improve the performance of this benchmark. Second, the code size (which is emitted thanks to using the [DisassemblyDiagnoser] attribute in our Program.cs) was cut to 35% of what it was in .NET 5. How is that possible? In both versions, the employed TryFormat call is a one-liner that delegates to a private TryFormatInt64 method, and the developer of that method decided to annotate it with MethodImplOptions.AggressiveInlining, which tells the JIT to override its heuristics and inline the method if it’s possible rather than if it’s possible and deemed useful. That method is a switch on the input format.Symbol, branching to call various other methods based on the format symbol employed (e.g. ‘D’ vs ‘G’ vs ‘N’). But we’ve actually already passed by the most interesting part, the new StandardFormat('D', 2) at the call site. In .NET 5, the JIT deems it not worthwhile to inline the StandardFormat constructor, and so we end up with a call to it:

mov edx,44

mov r8d,2

call System.Buffers.StandardFormat..ctor(Char, Byte)

As a result, even though TryFormat gets inlined, in .NET 5, the JIT is unable to connect the dots to see that the 'D' passed into the StandardFormat constructor will influence which branch of that switch statement in TryFormatInt64 gets taken. In .NET 6, the JIT does inline the StandardFormat constructor, the effect of which is that it effectively can shrink the contents of TryFormatInt64 from:

if (format.IsDefault)

return TryFormatInt64Default(value, destination, out bytesWritten);

switch (format.Symbol)

{

case 'G':

case 'g':

if (format.HasPrecision)

throw new NotSupportedException(SR.Argument_GWithPrecisionNotSupported);

return TryFormatInt64D(value, format.Precision, destination, out bytesWritten);

case 'd':

case 'D':

return TryFormatInt64D(value, format.Precision, destination, out bytesWritten);

case 'n':

case 'N':

return TryFormatInt64N(value, format.Precision, destination, out bytesWritten);

case 'x':

return TryFormatUInt64X((ulong)value & mask, format.Precision, true, destination, out bytesWritten);

case 'X':

return TryFormatUInt64X((ulong)value & mask, format.Precision, false, destination, out bytesWritten);

default:

return FormattingHelpers.TryFormatThrowFormatException(out bytesWritten);

}

to the equivalent of just:

TryFormatInt64D(value, 2, destination, out bytesWritten);

avoiding the extra branches and not needing to inline the second copy of TryFormatInt64D (for the 'G' case) or TryFormatInt64N, both of which are AggressiveInlining.

Inlining also goes hand-in-hand with devirtualization, which is the act in which the JIT takes a virtual or interface method call, determines statically the actual end target of the invocation, and emits a direct call to that target, saving on the cost of the virtual dispatch. Once devirtualized, the target may also be inlined (subject to all of the same rules and heuristics), in which case it can avoid not only the virtual dispatch overhead, but also potentially benefit from the further optimizations inlining can enable. For example, consider a function like the following, which you might find in a collection implementation:

private int[] _values = Enumerable.Range(0, 100_000).ToArray();

[Benchmark]

public int Find() => Find(_values, 99_999);

private static int Find<T>(T[] array, T item)

{

for (int i = 0; i < array.Length; i++)

if (EqualityComparer<T>.Default.Equals(array[i], item))

return i;

return -1;

}

A previous release of .NET Core taught the JIT how to devirtualize EqualityComparer<T>.Default in such a use, resulting in an ~2x improvement over .NET Framework 4.8 in this example.

| Method | Runtime | Mean | Ratio | Code Size |

|---|---|---|---|---|

| Find | .NET Framework 4.8 | 115.4 us | 1.00 | 127 B |

| Find | .NET Core 3.1 | 69.7 us | 0.60 | 71 B |

| Find | .NET 5.0 | 69.8 us | 0.60 | 63 B |

| Find | .NET 6.0 | 53.4 us | 0.46 | 57 B |

However, while the JIT has been able to devirtualize EqualityComparer<T>.Default.Equals (for value types), not so for its sibling Comparer<T>.Default.Compare. dotnet/runtime#48160 addresses that. This can be seen with a benchmark like the following, which compares ValueTuple instances (the ValueTuple<>.CompareTo method uses Comparer<T>.Default to compare each element of the tuple):

private (int, long, int, long) _value1 = (5, 10, 15, 20);

private (int, long, int, long) _value2 = (5, 10, 15, 20);

[Benchmark]

public int Compare() => _value1.CompareTo(_value2);

| Method | Runtime | Mean | Ratio | Code Size |

|---|---|---|---|---|

| Compare | .NET Framework 4.8 | 17.467 ns | 1.00 | 240 B |

| Compare | .NET 5.0 | 9.193 ns | 0.53 | 209 B |

| Compare | .NET 6.0 | 2.533 ns | 0.15 | 186 B |

But devirtualization improvements have gone well beyond such known intrinsic methods. Consider this microbenchmark:

[Benchmark]

public int GetLength() => ((ITuple)(5, 6, 7)).Length;

The fact that I’m using a ValueTuple`3 and the ITuple interface here doesn’t matter: I just selected an arbitrary value type that implements an interface. A previous release of .NET Core enabled the JIT to avoid the boxing operation here (from casting a value type to an interface it implements) and emit this purely as a constrained method call, and then a subsequent release enabled it to be devirtualized and inlined:

| Method | Runtime | Mean | Ratio | Code Size | Allocated |

|---|---|---|---|---|---|

| GetLength | .NET Framework 4.8 | 6.3495 ns | 1.000 | 106 B | 32 B |

| GetLength | .NET Core 3.1 | 4.0185 ns | 0.628 | 66 B | – |

| GetLength | .NET 5.0 | 0.1223 ns | 0.019 | 27 B | – |

| GetLength | .NET 6.0 | 0.0204 ns | 0.003 | 27 B | – |

Great. But now let’s make a small tweak:

[Benchmark]

public int GetLength()

{

ITuple t = (5, 6, 7);

Ignore(t);

return t.Length;

}

[MethodImpl(MethodImplOptions.NoInlining)]

private static void Ignore(object o) { }

Here I’ve forced the boxing by needing the object to exist in order to call the Ignore method, and previously that was enough to disable the ability to devirtualize the t.Length call. But .NET 6 now “gets it.” We can also see this by looking at the assembly. Here’s what we get for .NET 5:

; Program.GetLength()

push rsi

sub rsp,30

vzeroupper

vxorps xmm0,xmm0,xmm0

vmovdqu xmmword ptr [rsp+20],xmm0

mov dword ptr [rsp+20],5

mov dword ptr [rsp+24],6

mov dword ptr [rsp+28],7

mov rcx,offset MT_System.ValueTuple~3[[System.Int32, System.Private.CoreLib],[System.Int32, System.Private.CoreLib],[System.Int32, System.Private.CoreLib]]

call CORINFO_HELP_NEWSFAST

mov rsi,rax

vmovdqu xmm0,xmmword ptr [rsp+20]

vmovdqu xmmword ptr [rsi+8],xmm0

mov rcx,rsi

call Program.Ignore(System.Object)

mov rcx,rsi

add rsp,30

pop rsi

jmp near ptr System.ValueTuple~3[[System.Int32, System.Private.CoreLib],[System.Int32, System.Private.CoreLib],[System.Int32, System.Private.CoreLib]].System.Runtime.CompilerServices.ITuple.get_Length()

; Total bytes of code 92

and for .NET 6:

; Program.GetLength()

push rsi

sub rsp,30

vzeroupper

vxorps xmm0,xmm0,xmm0

vmovupd [rsp+20],xmm0

mov dword ptr [rsp+20],5

mov dword ptr [rsp+24],6

mov dword ptr [rsp+28],7

mov rcx,offset MT_System.ValueTuple~3[[System.Int32, System.Private.CoreLib],[System.Int32, System.Private.CoreLib],[System.Int32, System.Private.CoreLib]]

call CORINFO_HELP_NEWSFAST

mov rcx,rax

lea rsi,[rcx+8]

vmovupd xmm0,[rsp+20]

vmovupd [rsi],xmm0

call Program.Ignore(System.Object)

cmp [rsi],esi

mov eax,3

add rsp,30

pop rsi

ret

; Total bytes of code 92

Note in .NET 5 it’s tail calling to the interface implementation (jumping to the target method at the end rather than making a call that will need to return back to this method):

jmp near ptr System.ValueTuple~3[[System.Int32, System.Private.CoreLib],[System.Int32, System.Private.CoreLib],[System.Int32, System.Private.CoreLib]].System.Runtime.CompilerServices.ITuple.get_Length()

whereas in .NET 6 it’s not only devirtualized but also inlined the ITuple.Length call, with the assembly now limited to moving the answer (3) into the return register:

mov eax,3

Nice.

A multitude of other changes have impacted devirtualization as well. For example, dotnet/runtime#53567 improves devirtualization in AOT ReadyToRun images, and dotnet/runtime#45526 improves devirtualization with generics such that information about the exact class obtained is then made available to improve inlining.

Of course, there are many situations in which it’s impossible for the JIT to statically determine the exact target for a method call, thus preventing devirtualization and inlining… or does it?

One of my favorite features of .NET 6 is PGO (profile-guided optimization). PGO as a concept isn’t new; it’s been implemented in a variety of development stacks, and has existed in .NET in multiple forms over the years. But the implementation in .NET 6 is something special when compared to previous releases; in particular, from my perspective, “dynamic PGO”. The general idea behind profile-guided optimization is that a developer can first compile their app, using special tooling that instruments the binary to track various pieces of interesting data. They can then run their instrumented application through typical use, and the resulting data from the instrumentation can then be fed back into the compiler the next time around to influence how the compiler compiles the code. The interesting statement there is “next time”. Traditionally, you’d build your app, run the data gathering process, and then rebuild the app feeding in the resulting data, and typically this would all be automated as part of a build pipeline; that process is referred to as “static PGO”. However, with tiered compilation, a whole new world is available.

“Tiered compilation” has been enabled by default since .NET Core 3.0. For JIT’d code, it represents a compromise between getting going quickly and running with highly-optimized code. Code starts in “tier 0,” during which the JIT compiler applies very few optimizations, which also means the JIT compiles code very quickly (optimizations are often what end up taking the most time during compilation). The emitted code includes some tracking data to count how frequently methods are invoked, and once members pass a certain threshold, the JIT queues them to be recompiled in “tier 1,” this time with all the optimizations the JIT can muster, and learning from the previous compilation, e.g. an accessed static readonly int can become a constant, as its value will have already been computed by the time the tier 1 code is compiled (dotnet/runtime#45901 improves the aforementioned queueing, using a dedicated thread rather than using the thread pool). You can see where this is going. With “dynamic PGO,” the JIT can now do further instrumentation during tier 0, to track not just call counts but all of the interesting data it can use for profile-guided optimization, and then it can employ that during the compilation of tier 1.

In .NET 6, dynamic PGO is off by default. To enable it, you need to set the DOTNET_TieredPGO environment variable:

# with bash

export DOTNET_TieredPGO=1

# in cmd

set DOTNET_TieredPGO=1

# with PowerShell

$env:DOTNET_TieredPGO="1"

That enables gathering all of the interesting data during tier 0. On top of that, there are some other environment variables you’ll also want to consider setting. Note that the core libraries that make up .NET are installed with ReadyToRun images, which means they’ve essentially already been compiled into assembly code. ReadyToRun images can participate in tiering, but they don’t go through a tier 0, rather they go straight from the ReadyToRun code to tier 1; that means there’s no opportunity for dynamic PGO to instrument the binary for dynamically gathering insights. To enable instrumenting the core libraries as well, you can disable ReadyToRun:

$env:DOTNET_ReadyToRun="0"

Then the core libraries will also participate. Finally, you can consider setting DOTNET_TC_QuickJitForLoops:

$env:DOTNET_TC_QuickJitForLoops="1"

which enables tiering for methods that contain loops: otherwise, anything that has a backward jump goes straight to tier 1, meaning it gets optimized immediately as if tiered compilation didn’t exist, but in doing so loses out on the benefits of first going through tier 0. You may hear folks working on .NET referring to “full PGO”: that’s the case of all three of these environment variables being set, as then everything in the app is utilizing “dynamic PGO”. (Note that the ReadyToRun code for the framework assemblies does include implementations optimized based on PGO, just “static PGO”. The framework assemblies are compiled with PGO, used to execute a stable of representative apps and services, and then the resulting data is used to generate the final code that’s part of the shipped assemblies.)
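Because these switches are plain environment variables, it is easy to run a comparison with one of them missing. One way to guard against that, sketched here as an assumption rather than anything the runtime or BenchmarkDotNet provides, is to print the three variables when the process starts. The Describe helper is hypothetical, and the snippet only reports what is present in the process environment; it does not ask the runtime which settings are actually in effect.

```csharp
#nullable enable
using System;

// Hypothetical helper: turns a raw environment variable value into a label.
static string Describe(string? value) => value switch
{
    null or "" => "not set (runtime default applies)",
    "1" => "set to 1",
    "0" => "set to 0",
    _ => $"set to '{value}'"
};

// The three variables discussed above for "full PGO".
string[] names = { "DOTNET_TieredPGO", "DOTNET_ReadyToRun", "DOTNET_TC_QuickJitForLoops" };

foreach (string name in names)
{
    Console.WriteLine($"{name}: {Describe(Environment.GetEnvironmentVariable(name))}");
}
```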

Enough setup… what does this do for us? Let’s take an example:

private IEnumerator<int> _source = Enumerable.Range(0, int.MaxValue).GetEnumerator();

[Benchmark]

public void MoveNext() => _source.MoveNext();

This is a pretty simple benchmark: we have an IEnumerator<int> stored in a field, and our benchmark is simply moving the iterator forward. When compiled on .NET 6 normally, we get this:

; Program.MoveNext()

sub rsp,28

mov rcx,[rcx+8]

mov r11,7FFF8BB40378

call qword ptr [7FFF8BEB0378]

nop

add rsp,28

ret

That assembly code is the interface dispatch to whatever implementation backs that IEnumerator<int>. Now let’s set:

$env:DOTNET_TieredPGO=1

and try it again. This time, the code looks very different:

; Program.MoveNext()

sub rsp,28

mov rcx,[rcx+8]

mov r11,offset MT_System.Linq.Enumerable+RangeIterator

cmp [rcx],r11

jne short M00_L03

mov r11d,[rcx+0C]

cmp r11d,1

je short M00_L00

cmp r11d,2

jne short M00_L01

mov r11d,[rcx+10]

inc r11d

mov [rcx+10],r11d

cmp r11d,[rcx+18]

je short M00_L01

jmp short M00_L02

M00_L00:

mov r11d,[rcx+14]

mov [rcx+10],r11d

mov dword ptr [rcx+0C],2

jmp short M00_L02

M00_L01:

mov dword ptr [rcx+0C],0FFFFFFFF

M00_L02:

add rsp,28

ret

M00_L03:

mov r11,7FFF8BB50378

call qword ptr [7FFF8BEB0378]

jmp short M00_L02

A few things to notice, beyond it being much longer. First, the mov r11,7FFF8BB40378 followed by call qword ptr [7FFF8BEB0378] sequence for doing the interface dispatch still exists here, but it’s at the end of the method. One optimization common in PGO implementations is “hot/cold splitting”, where sections of a method frequently executed (“hot”) are moved close together at the beginning of the method, and sections of a method infrequently executed (“cold”) are moved to the end of the method. That enables better use of instruction caches and minimizes loads necessary to bring in likely-unused code. So, this interface dispatch has moved to the end of the method, as based on PGO data the JIT expects it to be cold / rarely invoked. Yet this is the entirety of the original implementation; if that’s cold, what’s hot? Now at the beginning of the method, we see:

mov rcx,[rcx+8]

mov r11,offset MT_System.Linq.Enumerable+RangeIterator

cmp [rcx],r11

jne short M00_L03

This is the magic. When the JIT instrumented the tier 0 code for this method, that included instrumenting this interface dispatch to track the concrete type of _source on each invocation. And the JIT found that every invocation was on a type called Enumerable+RangeIterator, which is a private class used to implement Enumerable.Range inside of the Enumerable implementation. As such, for tier 1 the JIT has emitted a check to see whether the type of _source is that Enumerable+RangeIterator: if it isn’t, then it jumps to the cold section we previously highlighted that’s performing the normal interface dispatch. But if it is, which, based on the profiling data, is expected to be the case the vast majority of the time, it can then proceed to directly invoke the Enumerable+RangeIterator.MoveNext method, devirtualized. Not only that, but it decided it was profitable to inline that MoveNext method. That MoveNext implementation is then the assembly code that immediately follows. The net effect of this is a bit larger code, but optimized for the exact scenario expected to be most common:

| Method | Mean | Code Size |

|---|---|---|

| PGO Disabled | 1.905 ns | 30 B |

| PGO Enabled | 0.7071 ns | 105 B |

The JIT optimizes for PGO data in a variety of ways. Given the data it knows about how the code behaves, it can be more aggressive about inlining, as it has more data about what will and won’t be profitable. It can perform this “guarded devirtualization” for most interface and virtual dispatch, emitting both one or more fast paths that are devirtualized and possibly inlined, with a fallback that performs the standard dispatch should the actual type not match the expected type. It can actually reduce code size in various circumstances by choosing to not apply optimizations that might otherwise increase code size (e.g. inlining, loop cloning, etc.) in blocks discovered to be cold. It can optimize for type casts, emitting checks that do a direct type comparison against the actual object type rather than always relying on more complicated and expensive cast helpers (e.g. ones that need to search ancestor hierarchies or interface lists or that can handle generic co- and contra-variance). The list will continue to grow over time as the JIT learns more and more how to, well, learn.
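To make the shape of guarded devirtualization concrete, here is a hand-written C# analogue. It is only an illustration of the pattern, not what the JIT literally produces: the GuardedCount helper is hypothetical, and the guess that callers mostly pass a List<int> is an assumption baked into the sketch, whereas the JIT derives its guess from the tier 0 profile data.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper showing the guard / fast path / fallback structure.
static int GuardedCount(ICollection<int> source)
{
    // Guard: an exact type test, comparable to the method-table compare
    // (cmp [rcx],r11) in the assembly above.
    if (source.GetType() == typeof(List<int>))
    {
        // Hot path: the concrete type is known, so Count is a direct,
        // inlinable call rather than an interface dispatch.
        return ((List<int>)source).Count;
    }

    // Cold path: ordinary interface dispatch for every other implementation.
    return source.Count;
}

Console.WriteLine(GuardedCount(new List<int> { 1, 2, 3 })); // fast path, prints 3
Console.WriteLine(GuardedCount(new HashSet<int> { 1, 2 })); // fallback, prints 2
```

Both paths return the same answer for any input; the guard only changes how the call is made, which is why the transformation is safe even when the guess turns out to be wrong.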

Lots of PRs contributed to PGO. Here are just a few:

- dotnet/runtime#44427 added support to the inliner that utilized call site frequency to boost the profitability metric (i.e. how valuable would it be to inline a method).

- dotnet/runtime#45133 added the initial support for determining the distribution of concrete types used at virtual and interface dispatch call sites, in order to enable guarded devirtualization. dotnet/runtime#51157 further enhanced this with regards to small struct types, while dotnet/runtime#51890 enabled improved code generation by chaining together guarded devirtualization call sites, grouping together the frequently-taken code paths where applicable.

- dotnet/runtime#52827 added support for special-casing switch cases when PGO data is available to support it. If there’s a dominant switch case, where the JIT sees that branch being taken at least 30% of the time, the JIT can emit a dedicated if check for that case up front, rather than having it go through the switch with the rest of the cases. (Note this applies to actual switches in the IL; not all C# switch statements will end up as switch instructions in IL, and in fact many won’t, as the C# compiler will often optimize smaller or more complicated switches into the equivalent of a cascading set of if/else if checks.)
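The dominant-case optimization in the last bullet can be pictured as the following source-level rewrite. This is a sketch under stated assumptions: the Plain and Peeled functions and their opcodes are hypothetical, and opcode 2 is simply assumed to be the dominant case, where the JIT would decide that from profile data and apply the change to the generated code rather than to the C#.

```csharp
using System;

// Hypothetical dispatcher as written: every opcode goes through the switch.
static int Plain(int opcode, int x)
{
    switch (opcode)
    {
        case 0: return x + 1;
        case 1: return x - 1;
        case 2: return x * 2;
        case 3: return x / 2;
        case 4: return -x;
        default: return x;
    }
}

// The same dispatcher with the (assumed) dominant case peeled off up front.
static int Peeled(int opcode, int x)
{
    if (opcode == 2) return x * 2; // dedicated check for the dominant case

    switch (opcode)                // remaining cases go through the switch
    {
        case 0: return x + 1;
        case 1: return x - 1;
        case 3: return x / 2;
        case 4: return -x;
        default: return x;
    }
}

Console.WriteLine(Plain(2, 21));  // prints 42
Console.WriteLine(Peeled(2, 21)); // prints 42
```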

That’s probably enough for now about inlining. There are other categories of optimization critical to high-performance C# and .NET code, as well. For example, bounds checking. One of the great things about C# and .NET is that, unless you go out of your way to circumvent the protections put in place (e.g. by using the unsafe keyword, the Unsafe class, the Marshal or MemoryMarshal classes, etc.), it’s near impossible to experience typical security vulnerabilities like buffer overruns. That’s because all accesses to arrays, strings, and spans are automatically “bounds checked” by the JIT, meaning it ensures before indexing into one of these data structures that the index is properly within bounds. You can see that with a simple example:

public int M(int[] arr, int index) => arr[index];

for which the JIT will generate code similar to this:

; Program.M(Int32[], Int32)

sub rsp,28

cmp r8d,[rdx+8]

jae short M01_L00

movsxd rax,r8d

mov eax,[rdx+rax*4+10]

add rsp,28

ret

M01_L00:

call CORINFO_HELP_RNGCHKFAIL

int 3

; Total bytes of code 28

The rdx register here stores the address of arr, and the length of arr is stored 8 bytes beyond that (in this 64-bit process), so [rdx+8] is arr.Length, and the cmp r8d, [rdx+8] instruction is comparing arr.Length against the index value stored in the r8d register. If the index is equal to or greater than the array length, it jumps to the end of the method, which calls a helper that throws an exception. That comparison is the “bounds check.”
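Written out in C#, the check amounts to roughly the following. This is a sketch of the semantics rather than of the JIT's implementation, and GetChecked is a hypothetical name. The detail worth noticing is that jae is an unsigned comparison, so a single compare rejects negative indexes as well as indexes that are too large; the casts to uint below mirror that.

```csharp
using System;

// Hypothetical helper spelling out what the JIT-inserted check does for arr[index].
static int GetChecked(int[] arr, int index)
{
    // A negative int reinterpreted as uint is at least 2^31, which is larger
    // than any possible array length, so one unsigned comparison covers both
    // "index < 0" and "index >= arr.Length".
    if ((uint)index >= (uint)arr.Length)
        throw new IndexOutOfRangeException();

    return arr[index];
}

int[] data = { 10, 20, 30 };
Console.WriteLine(GetChecked(data, 1)); // prints 20

try
{
    GetChecked(data, -1);
}
catch (IndexOutOfRangeException)
{
    Console.WriteLine("index -1 rejected by the same comparison");
}
```

In practice the JIT is generally able to recognize a guard of this form and skip its own check on the arr[index] that follows, though that is worth confirming in the disassembly for the code you care about.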

Of course, such bounds checks add overhead. For most code, the overhead is negligible, but if you’re reading this post, there’s a good chance you’ve written code where it’s not. And you certainly rely on code where it’s not: a lot of lower-level routines in the core .NET libraries do rely on avoiding this kind of overhead wherever possible. As such, the JIT goes to great lengths to avoid emitting bounds checking when it can prove going out of bounds isn’t possible. The prototypical example is a loop from 0 to an array’s Length. If you write:

public int Sum(int[] arr)

{

int sum = 0;

for (int i = 0; i < arr.Length; i++) sum += arr[i];

return sum;

}

the JIT will output code like this:

; Program.Sum(Int32[])

xor eax,eax

xor ecx,ecx

mov r8d,[rdx+8]

test r8d,r8d

jle short M02_L01

M02_L00:

movsxd r9,ecx

add eax,[rdx+r9*4+10]

inc ecx

cmp r8d,ecx

jg short M02_L00

M02_L01:

ret

; Total bytes of code 29

Note there’s no tell-tale call followed by an int3 instruction at the end of the method; that’s because no call to a throw helper is required here, as there’s no bounds checking needed. The JIT can see that, by construction, the loop can’t walk off either end of the array, and thus it needn’t emit a bounds check.

Every release of .NET sees the JIT become wise to more and more patterns where it can safely eliminate bounds checking, and .NET 6 follows suit. dotnet/runtime#40180 and dotnet/runtime#43568 from @nathan-moore are great (and very helpful) examples. Consider the following benchmark:

private char[] _buffer = new char[100];

[Benchmark]

public bool TryFormatTrue() => TryFormatTrue(_buffer);

private static bool TryFormatTrue(Span<char> destination)

{

if (destination.Length >= 4)

{

destination[0] = 't';

destination[1] = 'r';

destination[2] = 'u';

destination[3] = 'e';

return true;

}

return false;

}

This represents relatively typical code you might see in some lower-level formatting, where the length of a span is checked and then data written into the span. In the past, the JIT has been a little finicky about which guard patterns here are recognized and which aren’t, and .NET 6 makes that a whole lot better, thanks to the aforementioned PRs. On .NET 5, this benchmark would result in assembly like the following:

; Program.TryFormatTrue(System.Span~1<Char>)

sub rsp,28

mov rax,[rcx]

mov edx,[rcx+8]

cmp edx,4

jl short M01_L00

cmp edx,0

jbe short M01_L01

mov word ptr [rax],74

cmp edx,1

jbe short M01_L01

mov word ptr [rax+2],72

cmp edx,2

jbe short M01_L01

mov word ptr [rax+4],75

cmp edx,3

jbe short M01_L01

mov word ptr [rax+6],65

mov eax,1

add rsp,28

ret

M01_L00:

xor eax,eax

add rsp,28

ret

M01_L01:

call CORINFO_HELP_RNGCHKFAIL

int 3

The beginning of the assembly loads the span’s reference into the rax register and the length of the span into the edx register:

mov rax,[rcx]

mov edx,[rcx+8]

and then each assignment into the span ends up checking against this length, as in this sequence from above where we’re executing destination[2] = 'u':

cmp edx,2

jbe short M01_L01

mov word ptr [rax+4],75

To save you from having to look at an ASCII table, lowercase ‘u’ has an ASCII hex value of 0x75, so this code is validating that 2 is less than the span’s length (and jumping to call CORINFO_HELP_RNGCHKFAIL if it’s not), then storing 'u' into the element at index 2 of the span ([rax+4]). That’s four bounds checks, one for each character in "true", even though we know they’re all in-bounds. The JIT in .NET 6 knows that, too:

; Program.TryFormatTrue(System.Span~1<Char>)

mov rax,[rcx]

mov edx,[rcx+8]

cmp edx,4

jl short M01_L00

mov word ptr [rax],74

mov word ptr [rax+2],72

mov word ptr [rax+4],75

mov word ptr [rax+6],65

mov eax,1

ret

M01_L00:

xor eax,eax

ret

Much better. Those changes then also allowed undoing some hacks (e.g. dotnet/runtime#49450 from @SingleAccretion) in the core libraries that had previously been done to work around the lack of the bounds checking removal in such cases.
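The same guard shape carries over to other small formatting routines. The following is a minimal sketch, not code from the core libraries: `GuardPatternSketch`, `TryFormatFalse`, and `FormatFalseOrEmpty` are hypothetical names. It shows one up-front length check followed by constant-index writes. Whether every bounds check is actually elided depends on the runtime version, so it’s worth confirming with a disassembler (for example, BenchmarkDotNet’s DisassemblyDiagnoser) rather than assuming.

```csharp
using System;

public static class GuardPatternSketch
{
    // Hypothetical companion to TryFormatTrue from the text: a single length
    // guard covers all five constant-index writes that follow it.
    public static bool TryFormatFalse(Span<char> destination)
    {
        if (destination.Length >= 5)
        {
            destination[0] = 'f';
            destination[1] = 'a';
            destination[2] = 'l';
            destination[3] = 's';
            destination[4] = 'e';
            return true;
        }

        return false;
    }

    // Convenience wrapper so the helper can be exercised with a plain array.
    // Returns the formatted text, or an empty string if the buffer is too small.
    public static string FormatFalseOrEmpty(int bufferLength)
    {
        char[] buffer = new char[bufferLength];
        return TryFormatFalse(buffer) ? new string(buffer, 0, 5) : string.Empty;
    }
}
```

`FormatFalseOrEmpty(100)` yields "false", while a four-element buffer is rejected by the guard before any write happens.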

Another bounds-checking improvement comes in dotnet/runtime#49271 from @SingleAccretion. In previous releases, there was an issue in the JIT where an inlined method call could prevent the removal of subsequent bounds checks that otherwise would have been removed. This PR fixes that, the effect of which is evident in this benchmark:

private long[] _buffer = new long[10];

private DateTime _now = DateTime.UtcNow;

[Benchmark]

public void Store() => Store(_buffer, _now);

[MethodImpl(MethodImplOptions.NoInlining)]

private static void Store(Span<long> span, DateTime value)

{

if (!span.IsEmpty)

{

span[0] = value.Ticks;

}

}

; .NET 5.0.9

; Program.Store(System.Span~1<Int64>, System.DateTime)

sub rsp,28

mov rax,[rcx]

mov ecx,[rcx+8]

test ecx,ecx

jbe short M01_L00

cmp ecx,0

jbe short M01_L01

mov rcx,0FFFFFFFFFFFF

and rdx,rcx

mov [rax],rdx

M01_L00:

add rsp,28

ret

M01_L01:

call CORINFO_HELP_RNGCHKFAIL

int 3

; Total bytes of code 46

; .NET 6.0.0

; Program.Store(System.Span~1<Int64>, System.DateTime)

mov rax,[rcx]

mov ecx,[rcx+8]

test ecx,ecx

jbe short M01_L00

mov rcx,0FFFFFFFFFFFF

and rdx,rcx

mov [rax],rdx

M01_L00:

ret

; Total bytes of code 27

In other cases, it’s not about whether there’s a bounds check, but what code is emitted for a bounds check that isn’t elided. For example, dotnet/runtime#42295 special-cases indexing into an array with a constant 0 index (which is actually fairly common) and emits a test instruction rather than a cmp instruction, which makes the code both slightly smaller and slightly faster.

Another bounds-checking optimization that’s arguably a category of its own is “loop cloning.” The idea behind loop cloning is the JIT can duplicate a loop, creating one variant that’s the original and one variant that removes bounds checking, and then at run-time decide which to use based on an additional up-front check. For example, consider this code:

public static int Sum(int[] array, int length)

{

int sum = 0;

for (int i = 0; i < length; i++)

{

sum += array[i];

}

return sum;

}

The JIT still needs to bounds check the array[i] access, as while it knows that i >= 0 && i < length, it doesn’t know whether length <= array.Length and thus doesn’t know whether i < array.Length. However, doing such a bounds check on each iteration of the loop adds an extra comparison and branch on each iteration. Loop cloning enables the JIT to generate code that’s more like the equivalent of this:

public static int Sum(int[] array, int length)

{

int sum = 0;

if (array is not null && length <= array.Length)

{

for (int i = 0; i < length; i++)

{

sum += array[i]; // bounds check removed

}

}

else

{

for (int i = 0; i < length; i++)

{

sum += array[i]; // bounds check not removed

}

}

return sum;

}

We end up paying for the extra up-front one-time checks, but as long as there are at least a couple of iterations, the elimination of the bounds check pays for that and more. Neat. However, as with other bounds checking removal optimizations, the JIT is looking for very specific patterns, and things that deviate and fall off the golden path lose out on the optimization. That can include something as simple as the type of the array itself: change the previous example to use byte[] instead of int[], and that’s enough to throw the JIT off the scent… or, at least it was in .NET 5. Thanks to dotnet/runtime#48894, in .NET 6 the loop is now cloned, as can be seen from this benchmark:

private byte[] _buffer = Enumerable.Range(0, 1_000_000).Select(i => (byte)i).ToArray();

[Benchmark]

public void Sum() => Sum(_buffer, 999_999);

public static int Sum(byte[] array, int length)

{

int sum = 0;

for (int i = 0; i < length; i++)

{

sum += array[i];

}

return sum;

}

| Method | Runtime | Mean | Ratio | Code Size |
|---|---|---|---|---|
| Sum | .NET 5.0 | 471.3 us | 1.00 | 54 B |
| Sum | .NET 6.0 | 350.0 us | 0.74 | 97 B |

; .NET 5.0.9

; Program.Sum()

sub rsp,28

mov rax,[rcx+8]

xor edx,edx

xor ecx,ecx

mov r8d,[rax+8]

M00_L00:

cmp ecx,r8d

jae short M00_L01

movsxd r9,ecx

movzx r9d,byte ptr [rax+r9+10]

add edx,r9d

inc ecx

cmp ecx,0F423F

jl short M00_L00

add rsp,28

ret

M00_L01:

call CORINFO_HELP_RNGCHKFAIL

int 3

; Total bytes of code 54

; .NET 6.0.0

; Program.Sum()

sub rsp,28

mov rax,[rcx+8]

xor edx,edx

xor ecx,ecx

test rax,rax

je short M00_L01

cmp dword ptr [rax+8],0F423F

jl short M00_L01

nop word ptr [rax+rax]

M00_L00:

movsxd r8,ecx

movzx r8d,byte ptr [rax+r8+10]

add edx,r8d

inc ecx

cmp ecx,0F423F

jl short M00_L00

jmp short M00_L02

M00_L01:

cmp ecx,[rax+8]

jae short M00_L03

movsxd r8,ecx

movzx r8d,byte ptr [rax+r8+10]

add r8d,edx

mov edx,r8d

inc ecx

cmp ecx,0F423F

jl short M00_L01

M00_L02:

add rsp,28

ret

M00_L03:

call CORINFO_HELP_RNGCHKFAIL

int 3

; Total bytes of code 97

Not just bytes, but the same issue manifests for arrays of non-primitive structs. dotnet/runtime#55612 addressed that. Additionally, dotnet/runtime#55299 improved loop cloning for various loops over multidimensional arrays.
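When a loop’s upper bound is a separate length parameter like this, one alternative that doesn’t depend on loop cloning at all is to validate the range once by slicing a span and then loop to the span’s own Length. This is a sketch of a different technique than the one the PRs above implement, and `SliceSumSketch` and `SumViaSlice` are hypothetical names. Note the behavioral difference as well: an invalid length fails up front with an ArgumentOutOfRangeException from AsSpan, rather than with an IndexOutOfRangeException part-way through the loop.

```csharp
using System;

public static class SliceSumSketch
{
    // Hypothetical alternative to relying on loop cloning: AsSpan(0, length)
    // validates the range once, and the loop then runs to span.Length, which
    // is the canonical shape for bounds-check elimination.
    public static int SumViaSlice(byte[] array, int length)
    {
        ReadOnlySpan<byte> span = array.AsSpan(0, length);

        int sum = 0;
        for (int i = 0; i < span.Length; i++)
        {
            sum += span[i];
        }

        return sum;
    }
}
```

The trade-off is a changed failure mode and an extra slice operation, in exchange for not depending on the JIT recognizing the original loop’s pattern.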

Since we’re on the topic of loop optimization, consider loop inversion. “Loop inversion” is a standard compiler transform that’s aimed at eliminating some branching from a loop. Consider a loop like:

while (i < 3)

{

...

i++;

}

Loop inversion involves the compiler transforming this into:

if (i < 3)

{

do

{

...

i++;

}

while (i < 3);

}

In other words, change the while into a do..while, moving the condition check from the beginning of each iteration to the end of each iteration, and then add a one-time condition check at the beginning to compensate. Now imagine that i == 2. In the original structure, we enter the loop, i is incremented, and then we jump back to the beginning to do the condition test, which fails (as i is now 3), and we then jump again to just past the end of the loop. Now consider the same situation with the inverted loop. We pass the if condition, as i == 2. We then enter the do..while, i is incremented, and we check the condition. The condition fails, and we’re already at the end of the loop, so we don’t jump back to the beginning and instead just keep running past the loop. Summary: we saved two jumps. And in either case, if i was >= 3, we have exactly the same number of jumps, as we just jump to after the while/if. The inverted structure also often affords additional optimizations; for example, the JIT’s pattern recognition used for loop cloning and the hoisting of invariants depend on the loop being in an inverted form. Both dotnet/runtime#50982 and dotnet/runtime#52347 improved the JIT’s support for loop inversion.
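The two shapes described above can be written out by hand to check that they really are equivalent. This is only an illustrative sketch with hypothetical names (`LoopInversionSketch`, `CountWithWhile`, `CountInverted`); the JIT performs the transform on its internal representation, not on C# source, and the iteration counter stands in for the elided loop body.

```csharp
public static class LoopInversionSketch
{
    // Original shape: the condition is tested at the top of every iteration.
    public static int CountWithWhile(int i)
    {
        int iterations = 0;
        while (i < 3)
        {
            iterations++; // stand-in for the loop body
            i++;
        }

        return iterations;
    }

    // Inverted shape: one up-front guard, then the condition is tested at the
    // bottom of each iteration, so the final exit falls straight through.
    public static int CountInverted(int i)
    {
        int iterations = 0;
        if (i < 3)
        {
            do
            {
                iterations++; // stand-in for the loop body
                i++;
            }
            while (i < 3);
        }

        return iterations;
    }
}
```

Both methods run the body the same number of times for any starting value: once when i starts at 2, and not at all when i starts at 3 or above.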

Ok, we’ve talked about inlining optimizations, bounds checking optimizations, and loop optimizations. What about constants?

“Constant folding” is simply a fancy term to mean a compiler computing values at compile-time rather than leaving it to run-time. Folding can happen at various levels of compilation. If you write this C#:

public static int M() => 10 + 20 * 30 / 40 ^ 50 | 60 & 70;

the C# compiler will fold this while compiling to IL, computing the constant value 47 from all of those operations:

IL_0000: ldc.i4.s 47

IL_0002: ret

Folding can also happen in the JIT, which is particularly valuable in the face of inlining. If I have this C#:

public static int M() => 10 + N();

public static int N() => 20;

the C# compiler doesn’t (and in many cases shouldn’t) do any kind of interprocedural analysis to determine that N always returns 20, so you end up with this IL for M:

IL_0000: ldc.i4.s 10

IL_0002: call int32 C::N()

IL_0007: add

IL_0008: ret

But with inlining, the JIT is able to generate this for M:

L0000: mov eax, 0x1e

L0005: ret

having inlined the 20, constant folded 10 + 20, and gotten the constant value 30 (hex 0x1e). Constant folding also goes hand-in-hand with “constant propagation,” which is the practice of the compiler substituting a constant value into an expression, at which point compilers will often be able to iterate, apply more constant folding, do more constant propagation, and so on. Let’s say I have this non-trivial set of helper methods:

public bool ContainsSpace(string s) => Contains(s, ' ');

private static bool Contains(string s, char c)

{

if (s.Length == 1)

{

return s[0] == c;

}

for (int i = 0; i < s.Length; i++)

{

if (s[i] == c)

return true;

}

return false;

}

Based on whatever their needs were, the developer of Contains(string, char) decided that it would very frequently be called with string literals, and that single character literals were common. Now if I write:

[Benchmark]

public bool M() => ContainsSpace(" ");

the entirety of the generated code produced by the JIT for M is:

L0000: mov eax, 1

L0005: ret

How is that possible? The JIT inlines Contains(string, char) into ContainsSpace(string), and inlines ContainsSpace(string) into M(). The implementation of Contains(string, char) is then exposed to the fact that string s is " " and char c is ' '. It can then propagate the fact that s.Length is actually the constant 1, which enables deleting as dead code everything after the if block. It can then see that s[0] is in-bounds, and remove any bounds checking, and can see that s[0] is the first character in the constant string " ", a ' ', and can then see that ' ' == ' ', making the entire operation return a constant true, hence the resulting mov eax, 1, which is used to return a Boolean value true. Neat, right? Of course, you may be asking yourself, “Does code really call such methods with literals?” And the answer is, absolutely, in lots of situations; the PR in .NET 5 that introduced the ability to treat "literalString".Length as a constant highlighted thousands of bytes of improvements in the generated assembly code across the core libraries. But a good example in .NET 6 that makes extra-special use of this is dotnet/runtime#57217. The methods being changed in this PR are expected to be called from C# compiler-generated code with literals, and being able to specialize based on the length of the string literal passed effectively enables multiple implementations of the method the JIT can choose from based on its knowledge of the literal used at the call site, resulting in faster and smaller code when such a literal is used.
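To give a feel for what “specializing on the length of a literal” can look like, here is a rough sketch. It is not the code from that PR: `LiteralLengthSketch` and `TryAppendLiteral` are hypothetical names, and the shape is only an assumption about how such a helper might be written. The idea is that when the method is inlined at a call site passing a literal, value.Length is a constant, so the JIT has the opportunity to keep only the branch that applies.

```csharp
using System;
using System.Runtime.CompilerServices;

public static class LiteralLengthSketch
{
    // Hypothetical append helper with a fast path keyed on value.Length.
    // AggressiveInlining is requested because the folding only has a chance
    // to happen once the method body is visible at the call site.
    [MethodImpl(MethodImplOptions.AggressiveInlining)]
    public static bool TryAppendLiteral(Span<char> destination, ref int position, string value)
    {
        if (value.Length == 1)
        {
            // Single-character literal: one range check and one store.
            if ((uint)position < (uint)destination.Length)
            {
                destination[position] = value[0];
                position++;
                return true;
            }

            return false;
        }

        // General path: copy the whole string if it fits in the remaining space.
        if ((uint)position <= (uint)destination.Length &&
            value.AsSpan().TryCopyTo(destination.Slice(position)))
        {
            position += value.Length;
            return true;
        }

        return false;
    }
}
```

A call such as `TryAppendLiteral(buffer, ref pos, " ")` is the kind of site where the length-1 branch could be selected at JIT time; calls with a non-constant string simply take whichever branch applies at run time.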

But, the JIT needs to be taught what kinds of things can be folded. dotnet/runtime#49930 teaches it how to fold null checks when used with constant strings, which as in the previous example, is most valuable with inlining. Consider the Microsoft.Extensions.Logging.Console.ConsoleFormatter abstract base class. It exposes a protected constructor that looks like this:

protected ConsoleFormatter(string name)

{

Name = name ?? throw new ArgumentNullException(nameof(name));

}

which is a fairly typical construct: validating that an argument isn’t null, throwing an exception if it is, and storing it if it’s not. Now look at one of the built-in types derived from it, like JsonConsoleFormatter:

public JsonConsoleFormatter(IOptionsMonitor<JsonConsoleFormatterOptions> options)

: base(ConsoleFormatterNames.Json)

{

ReloadLoggerOptions(options.CurrentValue);

_optionsReloadToken = options.OnChange(ReloadLoggerOptions);

}

Note that base(ConsoleFormatterNames.Json) call. ConsoleFormatterNames.Json is defined as:

public const string Json = "json";

so this base call is really:

base("json")

When the JIT inlines the base constructor, it’ll now be able to see that the input is definitively not null, at which point it can eliminate as dead code the ?? throw new ArgumentNullException(nameof(name)), and the entire inlined call will simply be the equivalent of Name = "json".

dotnet/runtime#50000 is similar. As mentioned earlier, thanks to tiered compilation, static readonlys initialized in tier 0 can become consts in tier 1. This was enabled in previous .NET releases. For example, you might find code that dynamically enables or disables a feature based on an environment variable and then stores the result of that into a static readonly bool. When code reading that static field is recompiled in tier 1, the Boolean value can be considered a constant, enabling branches based on that value to be trimmed away. For example, given this benchmark:

private static readonly bool s_coolFeatureEnabled = GetCoolFeatureEnabled();

private static bool GetCoolFeatureEnabled()

{

string envVar = Environment.GetEnvironmentVariable("EnableCoolFeature");

return envVar == "1" || "true".Equals(envVar, StringComparison.OrdinalIgnoreCase);

}

[MethodImpl(MethodImplOptions.NoInlining)]

private static void UsedWhenCoolEnabled() { }

[MethodImpl(MethodImplOptions.NoInlining)]

private static void UsedWhenCoolNotEnabled() { }

[Benchmark]

public void CallCorrectMethod()

{

if (s_coolFeatureEnabled)

{

UsedWhenCoolEnabled();

}

else

{

UsedWhenCoolNotEnabled();

}

}

since I’ve not set the environment variable, when I run this and examine the resulting tier 1 assembly for CallCorrectMethod, I see this:

; Program.CallCorrectMethod()

jmp near ptr Program.UsedWhenCoolNotEnabled()

; Total bytes of code 5

That is the entirety of the implementation; there’s no call to UsedWhenCoolEnabled anywhere in sight, because the JIT was able to prune away the if block as dead code based on s_coolFeatureEnabled being a constant false. The aforementioned PR builds on that capability by enabling null folding for such values. Consider a library that exposes a method like:

public static bool Equals<T>(T i, T j, IEqualityComparer<T> comparer)

{

comparer ??= EqualityComparer<T>.Default;

return comparer.Equals(i, j);

}

comparing two values using the specified comparer, and if the specified comparer is null, using EqualityComparer<T>.Default. Now, with our benchmark we pass in EqualityComparer<int>.Default.

[Benchmark]

[Arguments(1, 2)]

public bool Equals(int i, int j) => Equals(i, j, EqualityComparer<int>.Default);

public static bool Equals<T>(T i, T j, IEqualityComparer<T> comparer)

{

comparer ??= EqualityComparer<T>.Default;

return comparer.Equals(i, j);

}

This is what the resulting assembly looks like with .NET 5 and .NET 6:

; .NET 5.0.9

; Program.Equals(Int32, Int32)

mov rcx,1503FF62D58

mov rcx,[rcx]

test rcx,rcx

jne short M00_L00

mov rcx,1503FF62D58

mov rcx,[rcx]

M00_L00:

mov r11,7FFE420C03A0

mov rax,[7FFE424403A0]

jmp rax

; Total bytes of code 51

; .NET 6.0.0

; Program.Equals(Int32, Int32)

mov rcx,1B4CE6C2F78

mov rcx,[rcx]

mov r11,7FFE5AE60370

mov rax,[7FFE5B1C0370]

jmp rax

; Total bytes of code 33

On .NET 5, those first two mov instructions are loading the EqualityComparer<int>.Default. Then with the call to Equals<T>(int, int, IEqualityComparer<T>) inlined, that test rcx, rcx is the null check for the EqualityComparer<int>.Default passed as an argument. If it’s not null (it won’t be null), it then jumps to M00_L00, where those two movs and a jmp are a tail call to the interface Equals method. On .NET 6, you can see those first two instructions are still there, and the last three instructions are still there, but the middle four instructions (test, jne, mov, mov) have evaporated, because the compiler is now able to propagate the non-nullness of the static readonly and eliminate completely the comparer ??= EqualityComparer<T>.Default; from the inlined helper.

dotnet/runtime#47321 also adds a lot of power with regards to folding. Most of the Math methods can now participate in constant folding, so if their inputs end up as constants for whatever reason, the results can become constants as well, and with constant propagation, this leads to the potential for serious reduction in run-time evaluation. Here’s a benchmark I created by copying some of the sample code from the System.Math docs, editing it to create a method that computes the height of a trapezoid.

[Benchmark]

public double GetHeight() => GetHeight(20.0, 10.0, 8.0, 6.0);

[MethodImpl(MethodImplOptions.AggressiveInlining)]

public static double GetHeight(double longbase, double shortbase, double leftLeg, double rightLeg)

{

double x = (Math.Pow(rightLeg, 2.0) - Math.Pow(leftLeg, 2.0) + Math.Pow(longbase, 2.0) + Math.Pow(shortbase, 2.0) - 2 * shortbase * longbase) / (2 * (longbase - shortbase));

return Math.Sqrt(Math.Pow(rightLeg, 2.0) - Math.Pow(x, 2.0));

}

These are what I get for benchmark results:

| Method | Runtime | Mean | Ratio | Code Size |
|---|---|---|---|---|
| GetHeight | .NET 5.0 | 151.7852 ns | 1.000 | 179 B |
| GetHeight | .NET 6.0 | 0.0000 ns | 0.000 | 12 B |

Note the time spent for .NET 6 has dropped to nothing, and the code size has dropped from 179 bytes to 12. How is that possible? Because the entire operation became a single constant. The .NET 5 assembly looked like this:

; .NET 5.0.9

; Program.GetHeight()

sub rsp,38

vzeroupper

vmovsd xmm0,qword ptr [7FFE66C31CA0]

vmovsd xmm1,qword ptr [7FFE66C31CB0]

call System.Math.Pow(Double, Double)

vmovsd qword ptr [rsp+28],xmm0

vmovsd xmm0,qword ptr [7FFE66C31CC0]

vmovsd xmm1,qword ptr [7FFE66C31CD0]

call System.Math.Pow(Double, Double)

vmovsd xmm2,qword ptr [rsp+28]

vsubsd xmm3,xmm2,xmm0

vmovsd qword ptr [rsp+30],xmm3

vmovsd xmm0,qword ptr [7FFE66C31CE0]

vmovsd xmm1,qword ptr [7FFE66C31CF0]

call System.Math.Pow(Double, Double)

vaddsd xmm2,xmm0,qword ptr [rsp+30]

vmovsd qword ptr [rsp+30],xmm2

vmovsd xmm0,qword ptr [7FFE66C31D00]

vmovsd xmm1,qword ptr [7FFE66C31D10]

call System.Math.Pow(Double, Double)

vaddsd xmm1,xmm0,qword ptr [rsp+30]

vsubsd xmm1,xmm1,qword ptr [7FFE66C31D20]

vdivsd xmm0,xmm1,[7FFE66C31D30]

vmovsd xmm1,qword ptr [7FFE66C31D40]

call System.Math.Pow(Double, Double)

vmovsd xmm2,qword ptr [rsp+28]

vsubsd xmm0,xmm2,xmm0

vsqrtsd xmm0,xmm0,xmm0

add rsp,38

ret

; Total bytes of code 179

with five calls to Math.Pow on top of a bunch of double addition, subtraction, and square root operations, whereas with .NET 6, we get:

; .NET 6.0.0

; Program.GetHeight()

vzeroupper

vmovsd xmm0,qword ptr [7FFE5B1BCE70]

ret

; Total bytes of code 12

which is just returning a constant double value. It’s hard not to smile when seeing that.
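It can help to work through the arithmetic by hand to see why the whole method can collapse to a single constant. The sketch below repeats the GetHeight computation from the benchmark with the folding steps written out in comments; `TrapezoidSketch` and `BenchmarkInputs` are hypothetical names added for illustration. I haven’t decoded the constant the .NET 6 code loads, but with these inputs the expected result is approximately 4.8.

```csharp
using System;

public static class TrapezoidSketch
{
    // Same computation as the benchmark's GetHeight.
    public static double GetHeight(double longbase, double shortbase, double leftLeg, double rightLeg)
    {
        double x = (Math.Pow(rightLeg, 2.0) - Math.Pow(leftLeg, 2.0) + Math.Pow(longbase, 2.0) + Math.Pow(shortbase, 2.0) - 2 * shortbase * longbase) / (2 * (longbase - shortbase));
        return Math.Sqrt(Math.Pow(rightLeg, 2.0) - Math.Pow(x, 2.0));
    }

    // With the benchmark's arguments every input is a constant, so each step folds:
    //   numerator   = 36 - 64 + 400 + 100 - 400 = 72
    //   denominator = 2 * (20 - 10)             = 20
    //   x           = 72 / 20                   = 3.6
    //   height      = sqrt(36 - 3.6^2) = sqrt(23.04), approximately 4.8
    public static double BenchmarkInputs() => GetHeight(20.0, 10.0, 8.0, 6.0);
}
```

Every intermediate value above depends only on literals, which is what lets constant propagation and folding of the Math calls reduce the method to returning one double.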

There were additional folding-related improvements. dotnet/runtime#48568 from @SingleAccretion improved the handling of unsigned comparisons as part of constant folding and propagation; dotnet/runtime#47133 from @SingleAccretion changed in what phase of the JIT certain folding is performed in order to improve its impact on inlining; and dotnet/runtime#43567 improved the folding of commutative operators. Further, for ReadyToRun, dotnet/runtime#42831 from @nathan-moore ensured that the Length of an array created from a constant could be propagated as a constant.

Most of the improvements we’ve talked about thus far are cross-cutting. Sometimes, though, improvements are much more focused, with a change intended to improve the code generated for a very specific pattern. And there have been a lot of those in .NET 6. Here are a few examples:

- dotnet/runtime#37245. When implicitly casting a string to a ReadOnlySpan<char>, the operator performs a null check on the input, such that it’ll return an empty span if the string is null. The operator is aggressively inlined, however, and so if the call site can prove that the string is not null, the null check can be eliminated.

[Benchmark]

public ReadOnlySpan<char> Const() => "hello world";

; .NET 5.0.9

; Program.Const()

mov rax,12AE3A09B48

mov rax,[rax]

test rax,rax

jne short M00_L00

xor ecx,ecx

xor r8d,r8d

jmp short M00_L01

M00_L00:

cmp [rax],eax

cmp [rax],eax

add rax,0C

mov rcx,rax

mov r8d,0B

M00_L01:

mov [rdx],rcx

mov [rdx+8],r8d

mov rax,rdx

ret

; Total bytes of code 53

; .NET 6.0.0

; Program.Const()

mov rax,18030C4A038

mov rax,[rax]

add rax,0C

mov [rdx],rax

mov dword ptr [rdx+8],0B

mov rax,rdx

ret

; Total bytes of code 31

- dotnet/runtime#37836. BitOperations.PopCount was added in .NET Core 3.0, and returns the “popcount”, or “population count”, of the input number, meaning the number of bits set. It’s implemented as a hardware intrinsic if the underlying hardware supports it, or via a software fallback otherwise, but it’s also easily computed at compile time if the input is a constant (or if it becomes a constant from the JIT’s perspective, e.g. if the input is a static readonly). This PR turns PopCount into a JIT intrinsic, enabling the JIT to substitute a value for the whole method invocation if it deems that appropriate.

[Benchmark]

public int PopCount() => BitOperations.PopCount(42);

; .NET 5.0.9

; Program.PopCount()

mov eax,2A

popcnt eax,eax

ret

; Total bytes of code 10

; .NET 6.0.0

; Program.PopCount()

mov eax,3

ret

; Total bytes of code 6

- dotnet/runtime#50997. This is a great example of improvements being made to the JIT based on an evolving need from the kinds of things libraries end up doing. In particular, this came about because of improvements to string interpolation that we’ll discuss later in this post. Previously, if you wrote the interpolated string $"{_nullableValue}" where _nullableValue was, say, an int?, this would result in a string.Format call that passes _nullableValue as an object argument. Boxing that int? translates into either null if the nullable value is null or boxing its int value if it’s not null. With C# 10 and .NET 6, this will instead result in a call to a generic method, passing in the _nullableValue strongly-typed as T==int?, and that generic method then checks for various interfaces on the T and uses them if they exist. In performance testing of the feature, this exposed a measurable performance cliff due to the code generation employed for the nullable value types, both in allocation and in throughput. This PR helped to avoid that cliff by optimizing the boxing involved for this pattern of interface checking and usage.

private int? _nullableValue = 1;

[Benchmark]

public string Format() => Format(_nullableValue);

private string Format<T>(T value, IFormatProvider provider = null)

{

if (value is IFormattable)

{

return ((IFormattable)value).ToString(null, provider);

}

return value.ToString();

}

| Method | Runtime | Mean | Ratio | Code Size | Allocated |
|---|---|---|---|---|---|
| Format | .NET 5.0 | 87.71 ns | 1.00 | 154 B | 48 B |
| Format | .NET 6.0 | 51.88 ns | 0.59 | 100 B | 24 B |

- dotnet/runtime#50112. For hot code paths, especially those concerned about size, there’s a common “throw helper” pattern employed where the code to perform a throw is moved out into a separate method, as the JIT won’t inline a method that is discovered to always throw. If there’s a common check being employed, that’s often then put into its own helper. So, for example, if you wanted a helper method that checked to see if some reference type argument was null and then threw an exception if it was, that might look like this:

public static void ThrowIfNull(

[NotNull] object? argument,

[CallerArgumentExpression("argument")] string? paramName = null)

{

if (argument is null)

Throw(paramName);

}

[DoesNotReturn]

private static void Throw(string? paramName) => throw new ArgumentNullException(paramName);

And, in fact, that’s exactly what the new ArgumentNullException.ThrowIfNull helper introduced in dotnet/runtime#55594 looks like. The trouble with this, however, is that in order to call the ThrowIfNull method with a string literal, we end up needing to materialize that string literal as a string object (e.g. for a string input argument, nameof(input), aka "input"). If the check were being done inline, the JIT already has logic to deal with that, e.g. this:

[Benchmark]

[Arguments("hello")]

public void ThrowIfNull(string input)

{

//ThrowIfNull(input, nameof(input));

if (input is null)

throw new ArgumentNullException(nameof(input));

}

produces on .NET 5:

; Program.ThrowIfNull(System.String)

push rsi

sub rsp,20

test rdx,rdx

je short M00_L00

add rsp,20

pop rsi

ret

M00_L00:

mov rcx,offset MT_System.ArgumentNullException

call CORINFO_HELP_NEWSFAST

mov rsi,rax

mov ecx,1

mov rdx,7FFE715BB748

call CORINFO_HELP_STRCNS

mov rdx,rax

mov rcx,rsi

call System.ArgumentNullException..ctor(System.String)

mov rcx,rsi

call CORINFO_HELP_THROW

int 3

; Total bytes of code 74

In particular, we’re talking about that call CORINFO_HELP_STRCNS. But with the check and throw moved into the helper, that lazy initialization of the string literal object doesn’t happen. We end up with the assembly for the check looking nice and slim, but from an overall memory perspective, it’s likely a regression to force all of those string literals to be materialized. This PR addressed that, by ensuring the lazy initialization still happens, only if we’re about to throw, even with the helper being used.

[Benchmark]

[Arguments("hello")]

public void ThrowIfNull(string input)

{

ThrowIfNull(input, nameof(input));

}

private static void ThrowIfNull(

[NotNull] object? argument,

[CallerArgumentExpression("argument")] string? paramName = null)

{

if (argument is null)

Throw(paramName);

}

[DoesNotReturn]

private static void Throw(string? paramName) => throw new ArgumentNullException(paramName);

; .NET 5.0.9

; Program.ThrowIfNull(System.String)

test rdx,rdx

jne short M00_L00

mov rcx,1FC48939520

mov rcx,[rcx]

jmp near ptr Program.Throw(System.String)

M00_L00:

ret

; Total bytes of code 24

; .NET 6.0.0

; Program.ThrowIfNull(System.String)

sub rsp,28

test rdx,rdx

jne short M00_L00

mov ecx,1

mov rdx,7FFEBF512BE8

call CORINFO_HELP_STRCNS

mov rcx,rax

add rsp,28

jmp near ptr Program.Throw(System.String)

M00_L00:

add rsp,28

ret

; Total bytes of code 46

- dotnet/runtime#43811 and dotnet/runtime#46237. It’s fairly common, in particular in the face of inlining, to end up with sequences that have redundant comparison operations. Consider a fairly typical expression when dealing with nullable value types: if (i.HasValue) { Use(i.Value); }. That i.Value access invokes the Nullable<T>.Value getter, which itself checks HasValue, leading to a redundant comparison with the developer-written HasValue check in the guard. This specific example has led some folks to adopt a pattern of using GetValueOrDefault() after a HasValue check, since somewhat ironically GetValueOrDefault() just returns the value field without any additional checks. But there shouldn’t be a penalty for writing the simpler code that makes logical sense. And thanks to these PRs, there isn’t. The JIT will now walk the control flow graph to see if any dominating block (basically, code we had to go through to get to this point) has a similar compare.

[Benchmark]

public bool IsGreaterThan() => IsGreaterThan(42, 40);

[MethodImpl(MethodImplOptions.NoInlining)]

private static bool IsGreaterThan(int? i, int j) => i.HasValue && i.Value > j;

; .NET 5.0.9

; Program.IsGreaterThan(System.Nullable~1<Int32>, Int32)

sub rsp,28

mov [rsp+30],rcx

movzx eax,byte ptr [rsp+30]

test eax,eax

je short M01_L00

test eax,eax

je short M01_L01

cmp [rsp+34],edx

setg al

movzx eax,al

add rsp,28

ret

M01_L00:

xor eax,eax

add rsp,28

ret

M01_L01:

call System.ThrowHelper.ThrowInvalidOperationException_InvalidOperation_NoValue()

int 3

; Total bytes of code 50

; .NET 6.0.0

; Program.IsGreaterThan(System.Nullable~1<Int32>, Int32)

mov [rsp+8],rcx

cmp byte ptr [rsp+8],0

je short M01_L00

cmp [rsp+0C],edx

setg al

movzx eax,al

ret

M01_L00:

xor eax,eax

ret

; Total bytes of code 26-

- dotnet/runtime#49585. Learning from others is very important. Division is typically a relatively slow operation on modern hardware, and thus compilers try to find ways to avoid it, especially when dividing by a constant. In such cases, the JIT will try to find an alternative, which typically involves some combination of shifting and multiplying by a “magic number” that’s derived from the particular constant. This PR implements the techniques from Faster Unsigned Division by Constants to improve the magic number selected for a certain subset of constants, enabling better code generation when dividing by numbers like 7 or 365.

private uint _value = 12345;

[Benchmark]
public uint Div7() => _value / 7;


; .NET 5.0.9

; Program.Div()

mov ecx,[rcx+8]

mov edx,24924925

mov eax,ecx

mul edx

sub ecx,edx

shr ecx,1

lea eax,[rcx+rdx]

shr eax,2

ret

; Total bytes of code 23

; .NET 6.0.0

; Program.Div()

mov eax,[rcx+8]

mov rdx,492492492493

mov eax,eax

mul rdx

mov eax,edx

ret

; Total bytes of code 21
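As a rough illustration of the “multiply by a magic number” idea, the sketch below divides a 32-bit unsigned value by 7 using a single 64-bit multiply and keeping the high half of the 128-bit product. The constant here is an assumption chosen for this example, namely ceil(2^64 / 7); it is not copied from the JIT’s output, and the class and method names are hypothetical.

```csharp
using System;

// Hypothetical names, for illustration only.
public static class DivideBySevenSketch
{
    // Assumed magic number: ceil(2^64 / 7). Picked for this sketch; not taken
    // from the disassembly above.
    private const ulong Magic = 0x2492492492492493UL;

    // Computes x / 7 as the high 64 bits of the 128-bit product x * Magic.
    // For any 32-bit x, the rounding error introduced by Magic is far too
    // small to change the truncated quotient.
    public static uint DivideBy7(uint x) =>
        (uint)Math.BigMul((ulong)x, Magic, out ulong _);
}
```

Math.BigMul(ulong, ulong, out ulong) returns the high 64 bits of the product, so the method needs no shift or fix-up, which mirrors the shape of the shorter .NET 6 sequence.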

- dotnet/runtime#45463. It’s fairly common to see code check whether a value is even by using i % 2 == 0. The JIT can now transform that into code more like (i & 1) == 0 to arrive at the same answer but with less ceremony.

[Benchmark]
[Arguments(42)]
public bool IsEven(int i) => i % 2 == 0;


; .NET 5.0.9

; Program.IsEven(Int32)

mov eax,edx

shr eax,1F

add eax,edx

and eax,0FFFFFFFE

sub edx,eax

sete al

movzx eax,al

ret

; Total bytes of code 19

; .NET 6.0.0

; Program.IsEven(Int32)

test dl,1

sete al

movzx eax,al

ret

; Total bytes of code 10
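The equivalence the JIT relies on can be checked at the source level. The following sketch (hypothetical names) places the modulo form next to the bit-mask form; the parentheses around i & 1 matter in C#, because == binds more tightly than &.

```csharp
// Hypothetical names, for illustration only.
public static class ParitySketch
{
    // The form developers typically write.
    public static bool IsEvenModulo(int i) => i % 2 == 0;

    // The bit-mask form: the lowest bit of a two's-complement integer is 0
    // exactly when the value is even, for negative values as well.
    public static bool IsEvenMask(int i) => (i & 1) == 0;
}
```

Both methods agree for every int, including negative values and int.MinValue.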

- dotnet/runtime#44562. It’s common in high-performance code that uses cached arrays to see the code first store the arrays into locals and then operate on the locals. This enables the JIT to prove to itself, if it sees nothing else assigning into the array reference, that the array is invariant, such that it can learn from previous use of the array to optimize subsequent use. For example, if you iterate for (int i = 0; i < arr.Length; i++) Use(arr[i]);, it can eliminate the bounds check on the arr[i], as it trusts i < arr.Length. However, if this had instead been written as for (int i = 0; i < s_arr.Length; i++) Use(s_arr[i]);, where s_arr is defined as static readonly int[] s_arr = ...;, the JIT would not eliminate the bounds check, as the JIT wasn’t satisfied that s_arr was definitely not going to change, despite the readonly. This PR fixed that, enabling the JIT to see this static readonly array as being invariant, which then enables subsequent optimizations like bounds check elimination and common subexpression elimination.

static readonly int[] s_array = { 1, 2, 3, 4 };

[Benchmark]
public int Sum()
{
    if (s_array.Length >= 4)
    {
        return s_array[0] + s_array[1] + s_array[2] + s_array[3];
    }

    return 0;
}


; .NET 5.0.9

; Program.Sum()

sub rsp,28

mov rax,15434127338

mov rax,[rax]

cmp dword ptr [rax+8],4

jl short M00_L00

mov rdx,rax

mov ecx,[rdx+8]

cmp ecx,0

jbe short M00_L01

mov edx,[rdx+10]

mov r8,rax

cmp ecx,1

jbe short M00_L01

add edx,[r8+14]

mov r8,rax

cmp ecx,2

jbe short M00_L01

add edx,[r8+18]

cmp ecx,3

jbe short M00_L01

add edx,[rax+1C]

mov eax,edx

add rsp,28

ret

M00_L00:

xor eax,eax

add rsp,28

ret

M00_L01:

call CORINFO_HELP_RNGCHKFAIL

int 3

; Total bytes of code 89

; .NET 6.0.0

; Program.Sum()

mov rax,28B98007338

mov rax,[rax]

mov edx,[rax+8]

cmp edx,4

jl short M00_L00

mov rdx,rax

mov edx,[rdx+10]

mov rcx,rax

add edx,[rcx+14]

mov rcx,rax

add edx,[rcx+18]

add edx,[rax+1C]

mov eax,edx

ret

M00_L00:

xor eax,eax

ret

; Total bytes of code 48
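For reference, the two loop shapes discussed above look roughly like this. The names are hypothetical, and the sketch only shows the source patterns; whether a particular bounds check is actually removed depends on the runtime version and can be confirmed only by inspecting the generated code.

```csharp
// Hypothetical names, for illustration only.
public static class CachedArraySketch
{
    private static readonly int[] s_array = { 1, 2, 3, 4 };

    // The defensive pattern: copy the static into a local so the JIT can see
    // that nothing reassigns the array reference during the loop.
    public static int SumViaLocal()
    {
        int[] arr = s_array;
        int sum = 0;
        for (int i = 0; i < arr.Length; i++)
        {
            sum += arr[i];
        }
        return sum;
    }

    // The direct pattern: index the static readonly field itself. Per the text
    // above, .NET 6 can treat this field as invariant as well.
    public static int SumViaStatic()
    {
        int sum = 0;
        for (int i = 0; i < s_array.Length; i++)
        {
            sum += s_array[i];
        }
        return sum;
    }
}
```

Both methods return the same sum; the difference is only in how much the JIT could prove about the array before this change.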

- dotnet/runtime#49548. This PR optimized various patterns involving comparisons against 0. Given an expression like a == 0 && b == 0, the JIT can now optimize that to be equivalent to (a | b) == 0, replacing a branch and second comparison with an or.

[Benchmark]
public bool AreZero() => AreZero(1, 2);

[MethodImpl(MethodImplOptions.NoInlining)]
private static bool AreZero(int x, int y) => x == 0 && y == 0;


; .NET 5.0.9

; Program.AreZero(Int32, Int32)

test ecx,ecx

jne short M01_L00

test edx,edx

sete al

movzx eax,al

ret

M01_L00:

xor eax,eax

ret

; Total bytes of code 16

; .NET 6.0.0

; Program.AreZero(Int32, Int32)

or edx,ecx

sete al

movzx eax,al

ret

; Total bytes of code 9

I can’t cover all of the pattern changes in as much detail, but there have been many more, e.g.

- dotnet/runtime#46253 converted the Interlocked.And and Interlocked.Or methods introduced in .NET 5 into JIT intrinsics on ARM64.

- dotnet/runtime#46243 and dotnet/runtime#45311 avoided cast helpers from being emitted for (T)array.Clone() and object.MemberwiseClone().

- dotnet/runtime#43947 added support for unrolling single-iteration loops.

- dotnet/runtime#54864 enabled more methods to be tail-called by allowing implicit widening.

- dotnet/runtime#53214 eliminated redundant test instructions in some situations.

- dotnet/runtime#44419 enabled common subexpression elimination (CSE) for floating-point constants.

- dotnet/runtime#45604 from @alexcovington optimized division like -i / 7 to instead be emitted as the equivalent of i / -7, saving on a negation operation.

- dotnet/runtime#48589 extended support for throw helpers that are non-void returning.

- dotnet/runtime#52298 optimized how floating-point constants are assigned to ref parameters.

- dotnet/runtime#32000 from @damageboy taught the JIT how to remove double-negation (e.g. ~(~x)).

- dotnet/runtime#49238 enabled the JIT to elide some additional null checks.

- dotnet/runtime#35627 caused the JIT to emit better instructions for i < 0 checks.

- dotnet/runtime#42164 yielded better code generation for floating-point -X and MathF.Abs(X) operations.

- dotnet/runtime#41772 enabled use of the BMI2 rorx instruction as part of rotate operations (BitOperations.RotateRight).

- dotnet/runtime#55614 increased the number of loops in a given method that the JIT will optimize from 16 to 64.

- dotnet/runtime#51158 avoided some unnecessary spilling when storing into fields.

- dotnet/runtime#50813 updated the JIT’s knowledge of the execution characteristics of several operations (SQRT, RCP, RSQRT).

At this point, I’ve spent a lot of blog real estate writing a love letter to the improvements made to the JIT in .NET 6. There’s still a lot more, but rather than share long sections about the rest, I’ll make a few final shout outs here:

- Value types have become more and more critical to optimize for, as developers focused on driving down allocations have turned to structs for salvation. However, historically the JIT hasn’t been able to optimize structs as well as one might have hoped, in particular around being able to keep structs in registers aggressively. A lot of work happened in .NET 6 to improve the situation, and while there’s still some more to be done in .NET 7, things have come a long way. dotnet/runtime#43870, dotnet/runtime#39326, dotnet/runtime#44555, dotnet/runtime#48377, dotnet/runtime#55045, dotnet/runtime#55535, dotnet/runtime#55558, and dotnet/runtime#55727, among others, all contributed here.

- Registers are really, really fast memory used to store data being used immediately by instructions. In any given code, there are typically many more variables in use than there are registers, and so something needs to determine which of those variables gets to live in which registers when. That process is referred to as “register allocation,” and getting it right contributes significantly to how well code performs. dotnet/runtime#48308 from @alexcovington, dotnet/runtime#54345, dotnet/runtime#47307, dotnet/runtime#45135, and dotnet/runtime#52269 all contributed to improving the JIT’s register allocation heuristics in .NET 6. There’s also a great write-up in dotnet/runtime about some of these tuning efforts.

- “Loop alignment” is a technique in which nop instructions are added before a loop to ensure that the beginning of the loop’s instructions fall at an address most likely to minimize the number of fetches required to load the instructions that make up that loop. Rather than trying to do justice to the topic, I recommend Loop alignment in .NET 6, which is very well written and provides excellent details on the topic, including highlighting the improvements that came from dotnet/runtime#44370, dotnet/runtime#42909, and dotnet/runtime#55047.

- Checking whether a type implements an interface (e.g. if (something is ISomething)) can be relatively expensive, and in the worst case involves a linear walk through all of a type’s implemented interfaces to see whether the specified one is in the list. The implementation here is delegated by the JIT to several helper functions, which, as of .NET 5, are now written in C# and live in the System.Runtime.CompilerServices.CastHelpers type as the IsInstanceOfInterface and ChkCastInterface methods. It’s no exaggeration to say that the performance of these methods is critical to many applications running efficiently. So, lots of folks were excited to see dotnet/runtime#49257 from @benaadams, which managed to improve the performance of these methods by ~15% to ~35%, depending on the usage.
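To give a feel for the “linear walk” worst case, here is a conceptual sketch written against the public reflection API. It is not the CastHelpers implementation, which works on internal runtime data structures; ISomething, Something, and the helper names are hypothetical, and the reflection-based walk ignores details such as generic variance.

```csharp
using System;

// Hypothetical types, for illustration only.
public interface ISomething { }
public sealed class Something : ISomething { }

public static class InterfaceCheckSketch
{
    // Conceptual worst case: scan every interface the object's type implements
    // and compare each one against the interface being asked about.
    public static bool ImplementsByLinearWalk(object obj, Type interfaceType)
    {
        foreach (Type candidate in obj.GetType().GetInterfaces())
        {
            if (candidate == interfaceType)
            {
                return true;
            }
        }
        return false;
    }

    // What application code actually writes; the runtime's cast helpers do the work.
    public static bool ImplementsByTypeCheck(object obj) => obj is ISomething;
}
```

The more interfaces a type implements, the longer such a scan can take, which is why speeding up the real helpers pays off so broadly.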

GC

There’s been a lot of work happening in .NET 6 on the GC (garbage collector), the vast majority of which has been in the name of switching the GC implementation to be based on “regions” rather than on “segments”. The initial commit for regions is in dotnet/runtime#45172, with over 30 PRs since expanding on it. @maoni0 is shepherding this effort and has already written on the topic; I encourage reading her post Put a DPAD on that GC! to learn more in depth. But here are a few key statements from her post to help shed some light on the terminology: