Our team loves a good Capture the Flag (CTF) event! These events are usually a fun way to keep team morale high while up-skilling on new technologies or refreshing the team’s existing knowledge.

Traditionally, Capture the Flag events are cybersecurity exercises in which “flags” are secretly hidden in a program or website, and competitors steal them either from other competitors (attack/defense-style CTFs) or from the organizers (jeopardy-style challenges). However, other engineering practices can also be taught and practiced in the CTF format, even when the event does not use the term CTF. One example is our team’s OpenHack content packs, which closely resemble a CTF and cover topics such as AI-Powered Knowledge Mining, ML and DevOps, containers, Serverless, and Azure security.

Open-source CTF frameworks make it easy to turn any challenge into a CTF event with configurable challenge pages, leaderboards, and other expected features of such an event, without writing any code. For instance, OWASP’s Juice-Shop has a CTF plugin that supports several common CTF platforms that you can provision and run for your teams to do security training on.

One of the most popular open CTF platforms is CTFd. It is easy to use and customize, and it is built with open-source components. It offers several managed hosting plans with different features to choose from, or you can deploy and maintain your own environment. Managing your own environment has cost and maintenance implications, but you own the data, you can integrate it with your organization’s network if required, and it typically costs less. Furthermore, using Platform as a Service (PaaS) maintained by your cloud vendor gives you the best of both worlds: free, open-source software, and much easier maintenance and IT handling than virtualized infrastructure components.

This post will help you set up a self-hosted CTFd environment using nothing but Azure PaaS, so your CTF environment is scalable, secure, and easy to maintain, regardless of your team’s size. Code and deployment scripts used in the post are found in this GitHub repository, and you are encouraged to open a discussion or an issue, or to contribute.

The Infrastructure Setup

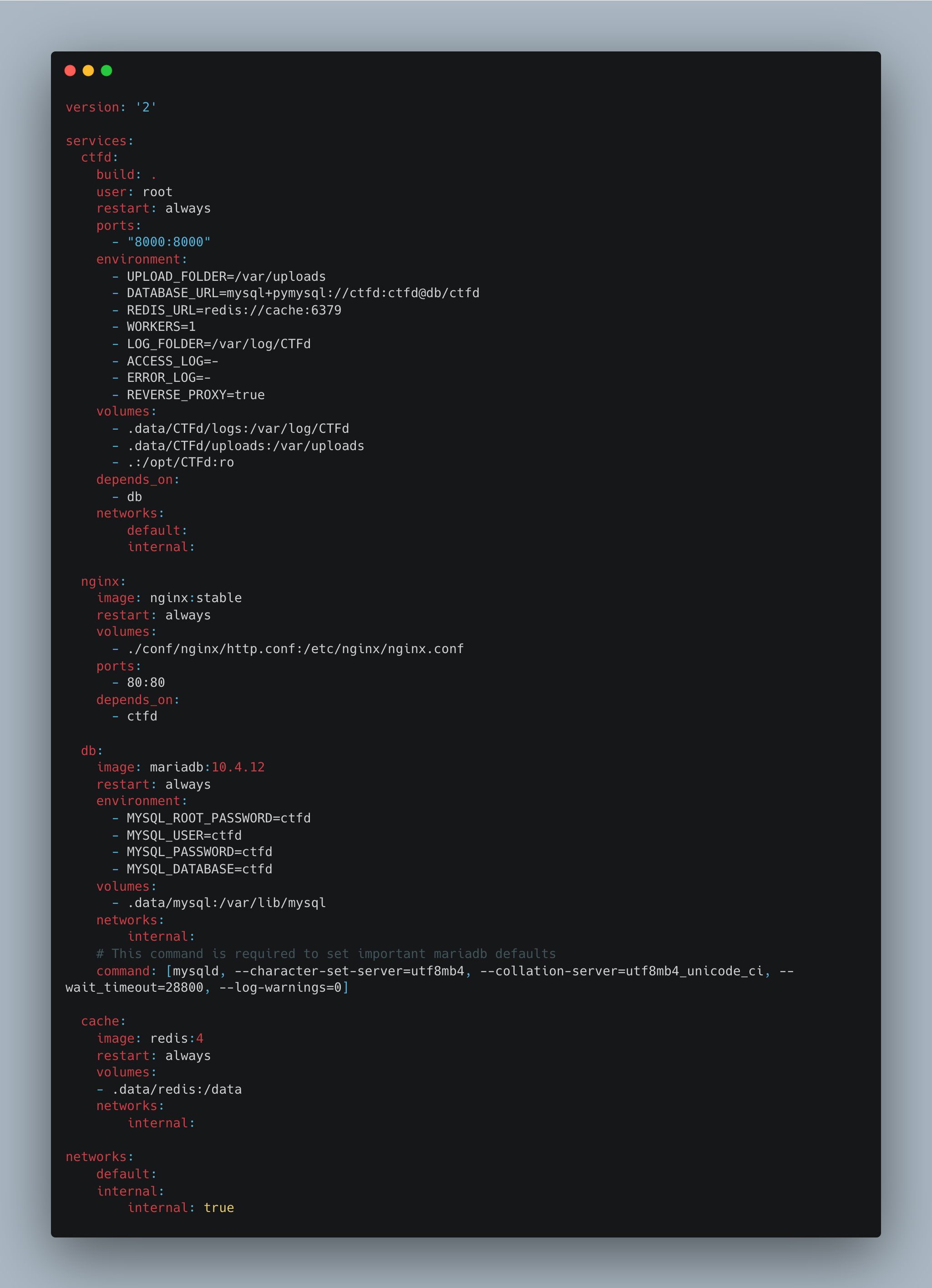

Let’s start by exploring the local deployment’s docker-compose file provided in the CTFd repository and plan its translation to Azure cloud components.

Docker Compose Architecture

Four services and two networks are defined:

- Default network: Network which is accessible by clients of CTFd.

- Internal network: Private network for backend services.

- CTFd: The application server that depends on db service. It is accessible on the default network but also has access to the internal network. It is configured with the db and the cache URIs which include their secrets.

- Nginx: Reverse proxy exposing CTFd service.

- Db: MariaDB container with some unique settings. Accessible through the internal network.

- Cache: Redis cache that is accessible through the internal network.

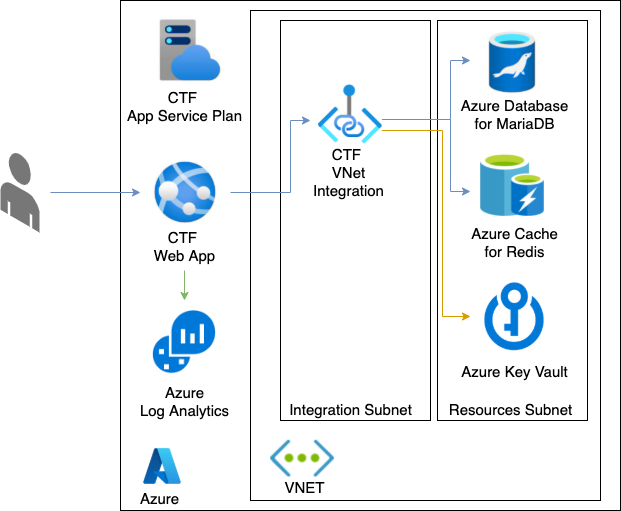

Azure Deployment Architecture

The Azure deployment provides the same functionality using the following platform and networking components:

- Virtual Network: Maintain private access to internal backend services by using VNet.

- CTFd App Service and App Service Plan: An Azure App Service for containers (and its App Service Plan) that can scale to multiple units and replaces both docker-compose services CTFd and nginx. Using the App Service feature for VNet Integration gives the App Service access to resources in the private VNet, without actually hosting the App Service in it. Hosting an App Service within a virtual network is enabled only for App Service Environments, which cost significantly more.

- Azure Database for MariaDB: A managed MariaDB community database that is accessible only from within the VNet by using a Private Endpoint.

- Azure Cache for Redis: A managed Redis cache that is accessible only from within the VNet by using a Private Endpoint.

- Azure Key Vault: Securely stores keys and passwords for MariaDB and Redis. The keys could be set directly in the CTFd App Service configuration, much like the Docker Compose definition has them, but it is better practice to store such keys in a secrets management solution. This service is also accessible only from within the VNet.

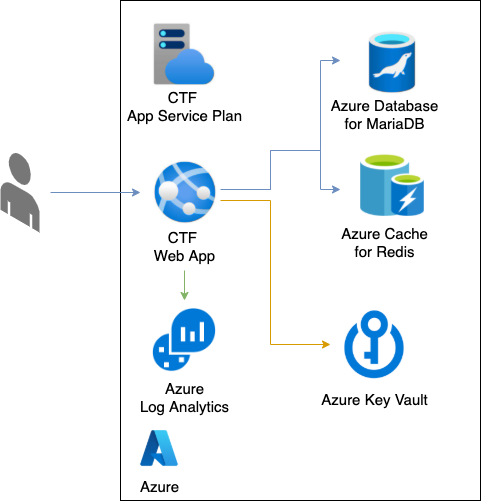

A simpler variation of this deployment reduces the overhead of VNet and the resources required to inject Azure PaaS components to VNet (Private endpoint and its additional networking resources):

Azure Infrastructure as Code

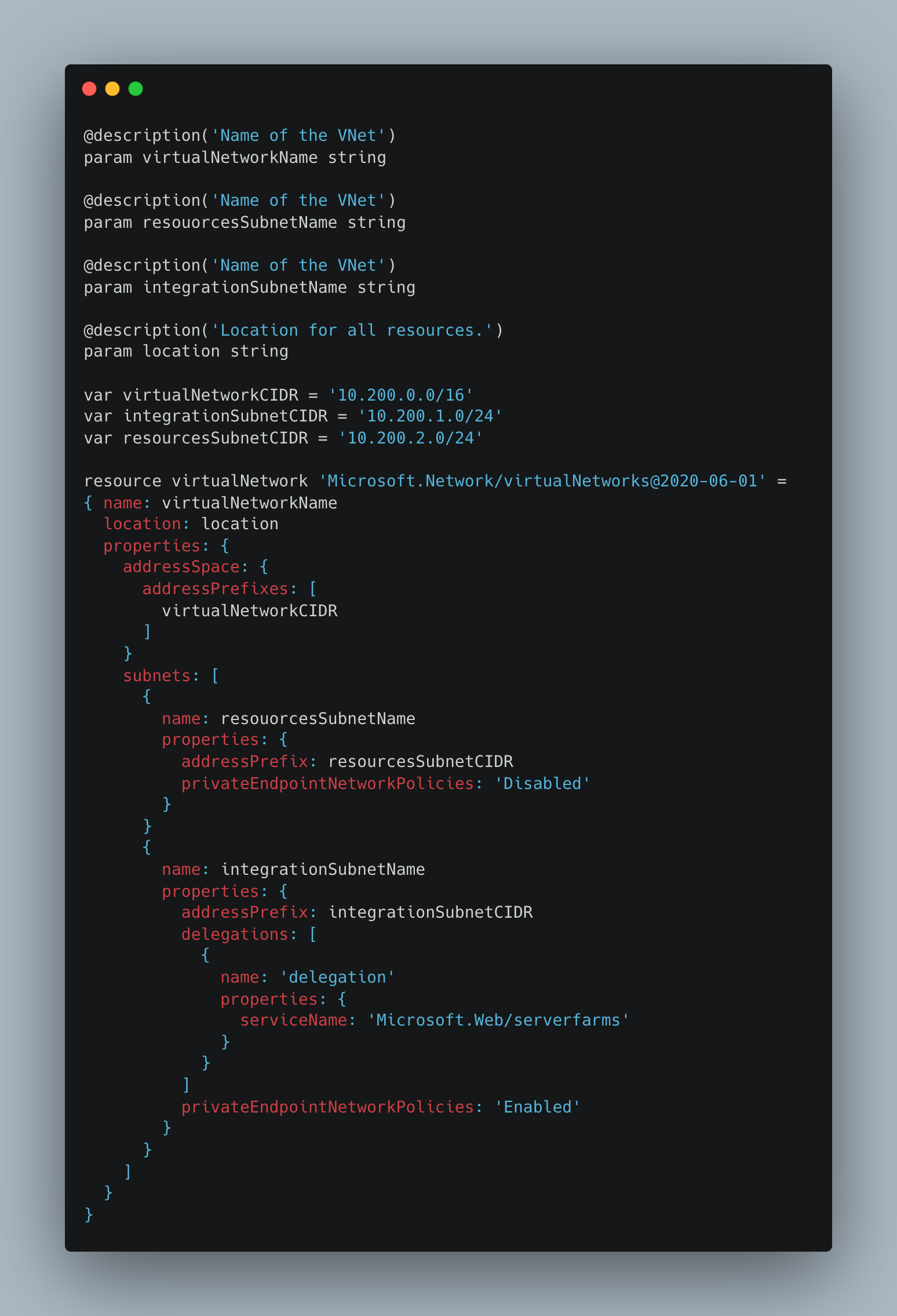

Let’s define the resources using Bicep, Azure’s domain-specific language for describing and deploying resources. We use a module for each layer, starting with the virtual network, then the CTFd application server, and finally the backend services. The final Bicep artifact should deploy all submodules so that the resources are deployed either as public PaaS services, which is the more straightforward deployment, or as PaaS services in a private VNet, which is more secure.

Virtual Network

The virtual network module defines two subnets: one hosts the private endpoints of MariaDB and Redis Cache, and the second hosts the VNet integration element, which handles egress into the network from the App Service. Visit this page for a reference on how App Service communicates through a VNet in the same way as we’re doing here.

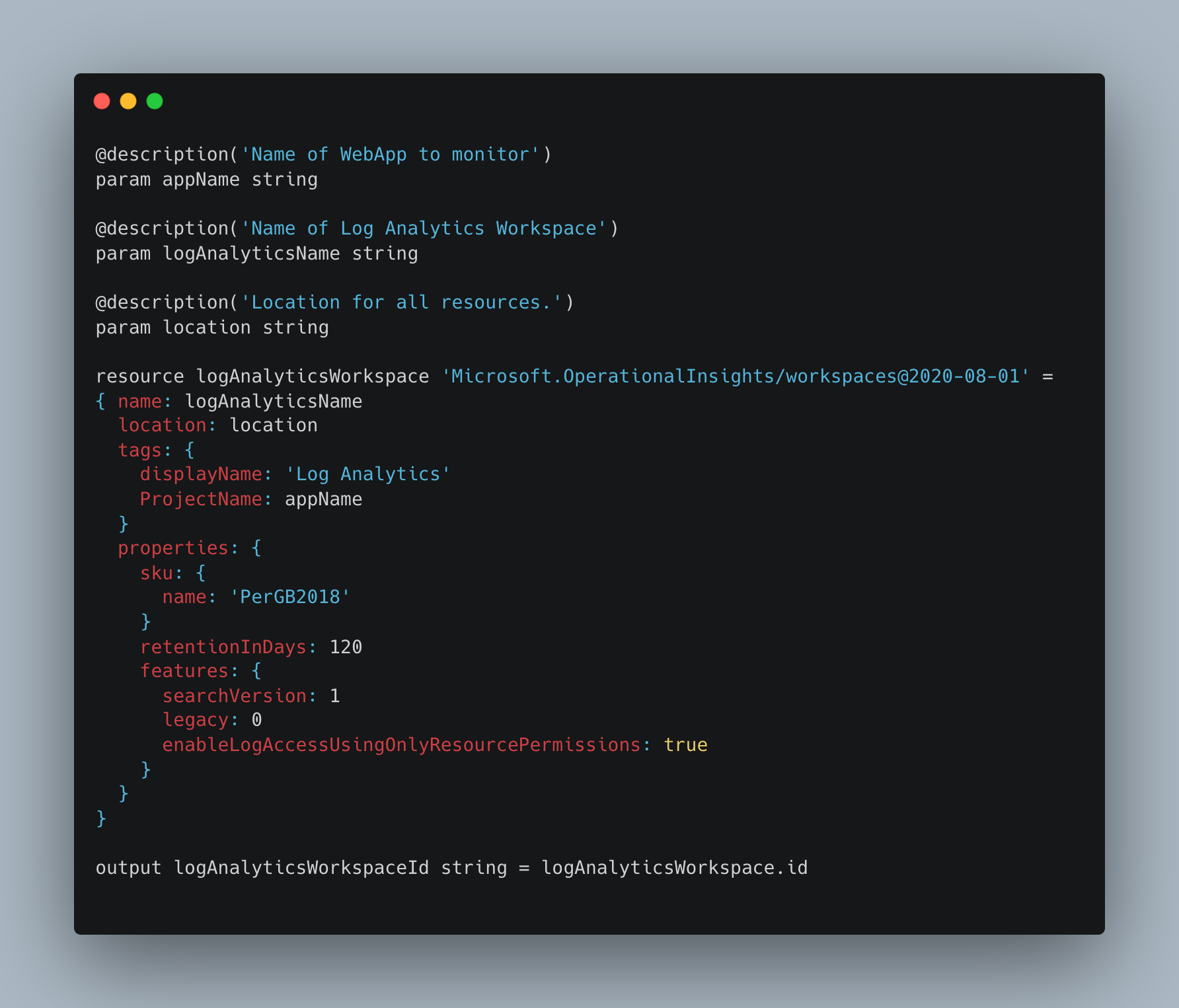
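As a rough illustration of the two-subnet layout described above, a VNet module might look like the following minimal sketch. The subnet names, address ranges, output names, and API version are assumptions made for this example and are not taken from the repository.

```bicep
// Hypothetical VNet module sketch; names, ranges, and API version are assumptions.
param virtualNetworkName string
param location string = resourceGroup().location

resource virtualNetwork 'Microsoft.Network/virtualNetworks@2021-05-01' = {
  name: virtualNetworkName
  location: location
  properties: {
    addressSpace: {
      addressPrefixes: [
        '10.200.0.0/16'
      ]
    }
    subnets: [
      {
        // Subnet that hosts the private endpoints of the backend services.
        name: 'resources_subnet'
        properties: {
          addressPrefix: '10.200.1.0/26'
          privateEndpointNetworkPolicies: 'Disabled'
        }
      }
      {
        // Subnet delegated to App Service for VNet integration (egress).
        name: 'integration_subnet'
        properties: {
          addressPrefix: '10.200.2.0/26'
          delegations: [
            {
              name: 'delegation'
              properties: {
                serviceName: 'Microsoft.Web/serverFarms'
              }
            }
          ]
        }
      }
    ]
  }
}

// Subnet IDs consumed by the other modules.
output resourcesSubnetId string = virtualNetwork.properties.subnets[0].id
output integrationSubnetId string = virtualNetwork.properties.subnets[1].id
```

The delegation to `Microsoft.Web/serverFarms` is what allows an App Service to use the second subnet for VNet integration.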

Log Analytics

The Log Analytics module provisions a Log Analytics workspace and outputs its workspace ID for use in the next module.
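A minimal sketch of such a module could look like this. The SKU, retention period, and API version are assumptions chosen for illustration.

```bicep
// Hypothetical Log Analytics module sketch; SKU, retention, and API version are assumptions.
param logAnalyticsName string
param location string = resourceGroup().location

resource workspace 'Microsoft.OperationalInsights/workspaces@2021-06-01' = {
  name: logAnalyticsName
  location: location
  properties: {
    sku: {
      name: 'PerGB2018'
    }
    retentionInDays: 30
  }
}

// Resource ID of the workspace, used later when configuring diagnostic settings.
output workspaceId string = workspace.id
```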

CTF WebApp

Next, we define the CTFd application server module, since we require its managed identity’s Service Principal ID for the next element, Azure Key Vault. The managed identity is configured using the identity property. The App Service is configured with the MariaDB and Redis Cache URIs using Key Vault integration, to avoid having the secrets as part of the App Service configuration.

VNet integration lets the App Service communicate with services in a VNet. When the module’s input parameter for VNet is set to true, the module enables it by setting virtualNetworkSubnetId and vnetRouteAllEnabled accordingly.

Finally, the CTFd Docker Hub image is set, and the managed identity’s Service Principal ID is output from the module.

A Diagnostic Settings resource uses the workspace ID to send the container logs to Log Analytics.

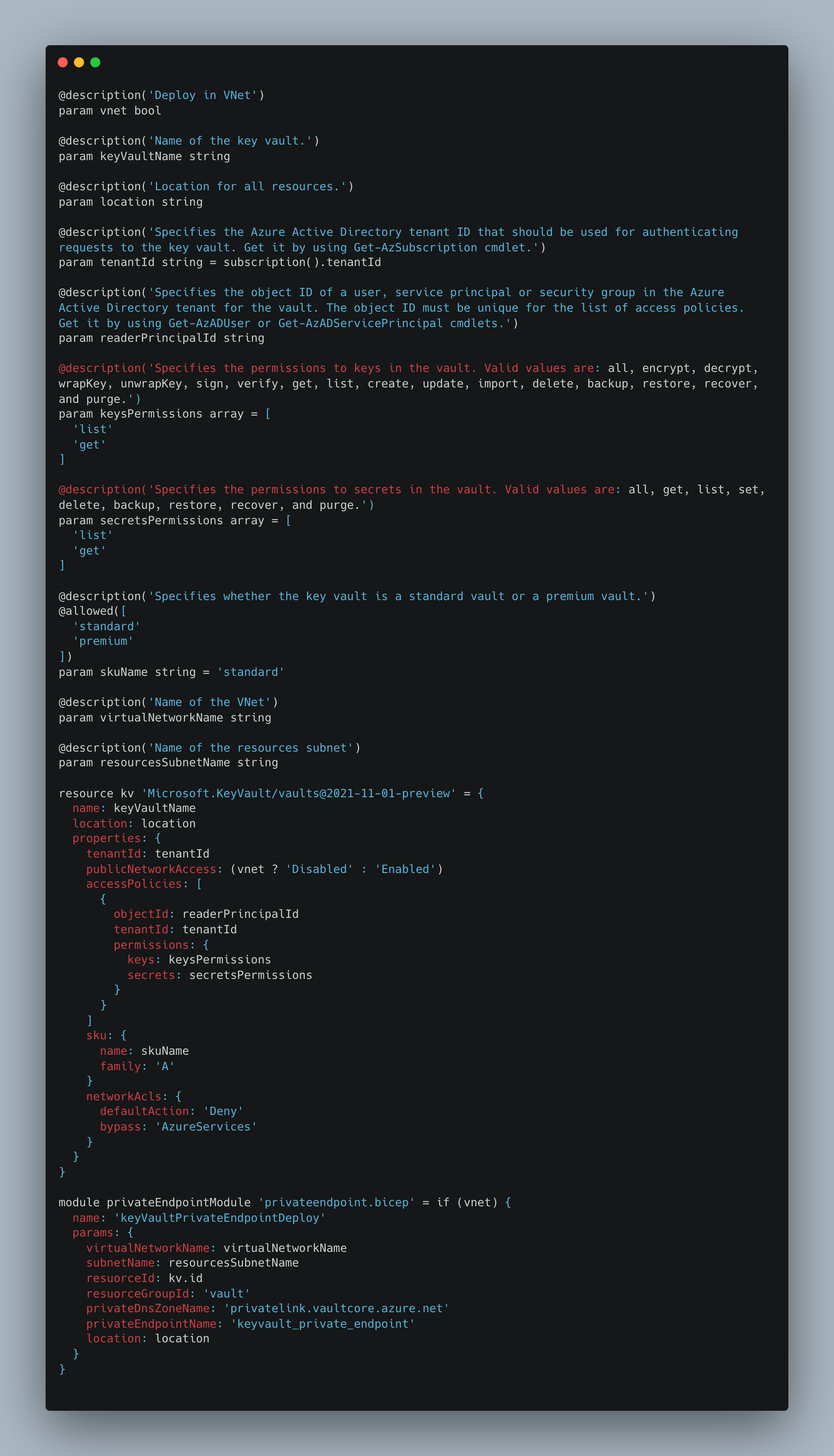
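The pieces described above could be combined roughly as in the following sketch. It is not the repository’s module: the secret names, the default SKU, the image tag, the diagnostic setting name, and the API versions are all assumptions. `DATABASE_URL` and `REDIS_URL` are the environment variables CTFd’s docker-compose file uses for its database and cache URIs.

```bicep
// Hypothetical CTFd web app module sketch; secret names, SKU, and API versions are assumptions.
param vnet bool = true
param webAppName string
param appServicePlanName string
param appServicePlanSkuName string = 'B1'
param keyVaultName string
param integrationSubnetId string = ''
param logAnalyticsWorkspaceId string
param location string = resourceGroup().location

// Illustrative secret names; they must match whatever the backend modules store.
var databaseSecretName = 'ctfd-db-uri'
var cacheSecretName = 'ctfd-cache-uri'

resource appServicePlan 'Microsoft.Web/serverfarms@2022-03-01' = {
  name: appServicePlanName
  location: location
  kind: 'linux'
  sku: {
    name: appServicePlanSkuName
  }
  properties: {
    reserved: true // marks the plan as Linux
  }
}

resource webApp 'Microsoft.Web/sites@2022-03-01' = {
  name: webAppName
  location: location
  identity: {
    type: 'SystemAssigned' // managed identity used to read Key Vault secrets
  }
  properties: {
    serverFarmId: appServicePlan.id
    virtualNetworkSubnetId: vnet ? integrationSubnetId : null
    siteConfig: {
      vnetRouteAllEnabled: vnet
      linuxFxVersion: 'DOCKER|ctfd/ctfd:latest'
      appSettings: [
        {
          name: 'DATABASE_URL'
          value: '@Microsoft.KeyVault(VaultName=${keyVaultName};SecretName=${databaseSecretName})'
        }
        {
          name: 'REDIS_URL'
          value: '@Microsoft.KeyVault(VaultName=${keyVaultName};SecretName=${cacheSecretName})'
        }
      ]
    }
  }
}

// Sends the container console logs to the Log Analytics workspace.
resource diagnostics 'Microsoft.Insights/diagnosticSettings@2021-05-01-preview' = {
  name: 'ctfd-logs'
  scope: webApp
  properties: {
    workspaceId: logAnalyticsWorkspaceId
    logs: [
      {
        category: 'AppServiceConsoleLogs'
        enabled: true
      }
    ]
  }
}

output servicePrincipalId string = webApp.identity.principalId
```

With Key Vault references, the app settings hold only a pointer to each secret, and the App Service resolves the value at runtime using its managed identity.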

Azure Key Vault

The Azure Key Vault module sets up an instance of Key Vault with an access policy that allows the App Service’s managed identity to list and get the keys and secrets in the vault.

Note that no secrets are yet in the vault, as that will be handled in later modules, and that the Key Vault instance is optionally configured to grant access only from a VNet.

The network component for integration with the VNet is defined in a submodule that is described later, and the publicNetworkAccess parameter is set to Enabled (without VNet) or Disabled (with VNet).
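A minimal sketch of such a vault definition follows, with the private endpoint submodule left out. The parameter name `readerPrincipalId`, the SKU, and the API version are assumptions for illustration.

```bicep
// Hypothetical Key Vault module sketch; parameter names, SKU, and API version are assumptions.
param vnet bool = true
param keyVaultName string
param readerPrincipalId string // Service Principal ID of the App Service managed identity
param location string = resourceGroup().location

resource keyVault 'Microsoft.KeyVault/vaults@2022-07-01' = {
  name: keyVaultName
  location: location
  properties: {
    tenantId: subscription().tenantId
    sku: {
      family: 'A'
      name: 'standard'
    }
    // Public access is closed when the deployment uses a VNet.
    publicNetworkAccess: vnet ? 'Disabled' : 'Enabled'
    accessPolicies: [
      {
        tenantId: subscription().tenantId
        objectId: readerPrincipalId
        permissions: {
          keys: [
            'get'
            'list'
          ]
          secrets: [
            'get'
            'list'
          ]
        }
      }
    ]
  }
}
```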

Private Endpoint

This module is used by the Key Vault, MariaDB, and Redis modules to define the networking components required to integrate an Azure PaaS service with a virtual network. For more information on defining private endpoints with Bicep, visit this guide.

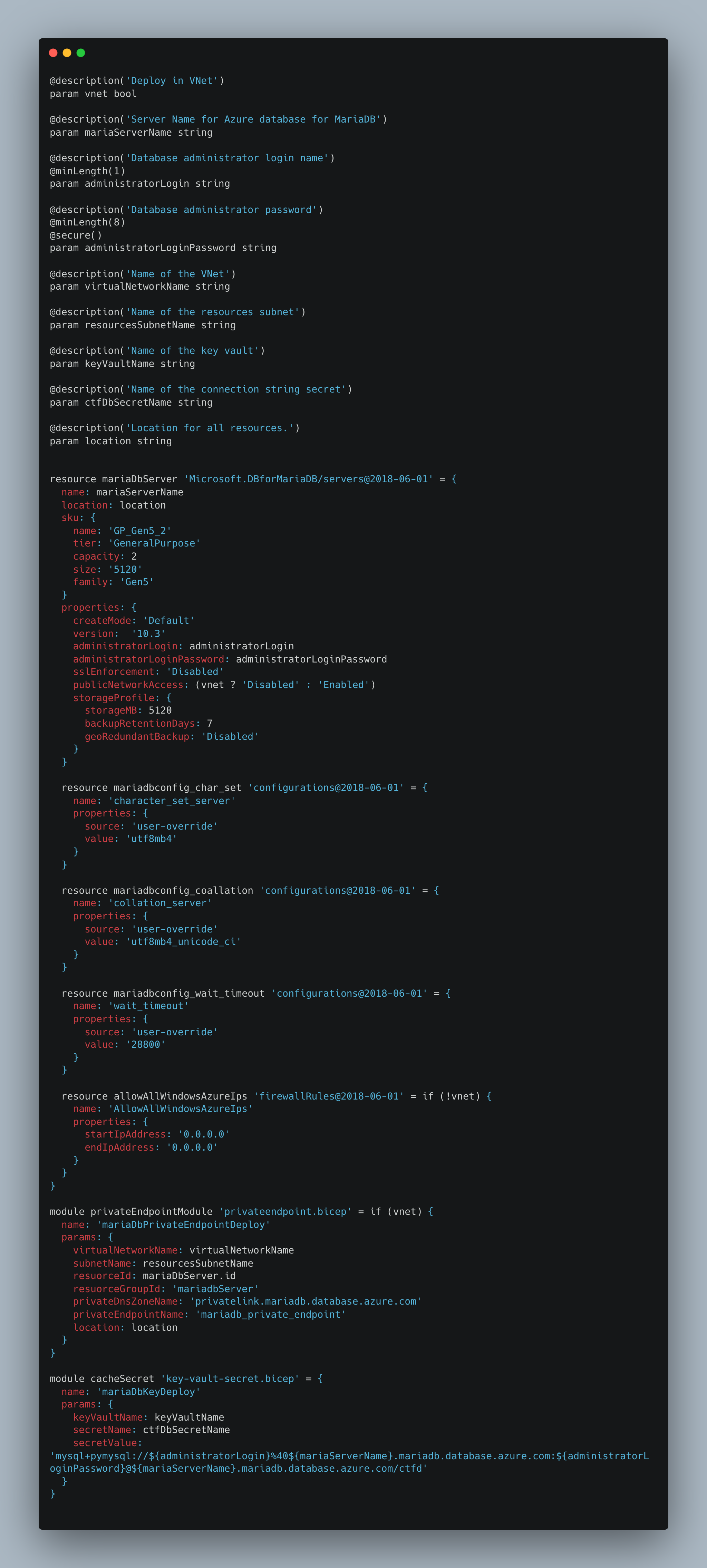
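To give a sense of the networking components involved, here is a sketch of a reusable private endpoint module. All parameter names, resource names, and API versions are assumptions; the group ID and DNS zone examples in the comments are the standard values for Key Vault.

```bicep
// Hypothetical private endpoint module sketch; parameter and resource names are assumptions.
param privateEndpointName string
param virtualNetworkId string
param subnetId string
param targetResourceId string // the PaaS resource to expose privately
param groupId string // for example 'vault' for Key Vault
param privateDnsZoneName string // for example 'privatelink.vaultcore.azure.net'
param location string = resourceGroup().location

resource privateEndpoint 'Microsoft.Network/privateEndpoints@2021-05-01' = {
  name: privateEndpointName
  location: location
  properties: {
    subnet: {
      id: subnetId
    }
    privateLinkServiceConnections: [
      {
        name: privateEndpointName
        properties: {
          privateLinkServiceId: targetResourceId
          groupIds: [
            groupId
          ]
        }
      }
    ]
  }
}

// Private DNS zone so the service's public hostname resolves to the private IP inside the VNet.
resource privateDnsZone 'Microsoft.Network/privateDnsZones@2020-06-01' = {
  name: privateDnsZoneName
  location: 'global'
}

resource virtualNetworkLink 'Microsoft.Network/privateDnsZones/virtualNetworkLinks@2020-06-01' = {
  parent: privateDnsZone
  name: '${privateEndpointName}-link'
  location: 'global'
  properties: {
    registrationEnabled: false
    virtualNetwork: {
      id: virtualNetworkId
    }
  }
}

resource dnsZoneGroup 'Microsoft.Network/privateEndpoints/privateDnsZoneGroups@2021-05-01' = {
  parent: privateEndpoint
  name: 'default'
  properties: {
    privateDnsZoneConfigs: [
      {
        name: 'config'
        properties: {
          privateDnsZoneId: privateDnsZone.id
        }
      }
    ]
  }
}
```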

MariaDB

This module contains the resource definitions for MariaDB. It uses one submodule for the networking components required to connect the database to a VNet, and another submodule for provisioning the database secret URI into the Key Vault. The module’s first parameter determines whether the database is integrated with a VNet: it controls the condition for deploying the networking submodule, and it sets the database’s publicNetworkAccess parameter to Enabled (without VNet) or Disabled (with VNet). Note that the MariaDB settings that CTFd’s docker-compose file passes to the database container are applied to the managed service using its configuration sub-elements. Finally, the module builds a connection URI that includes the administrator user’s login and password, and provides it to the submodule that adds Key Vault secrets.

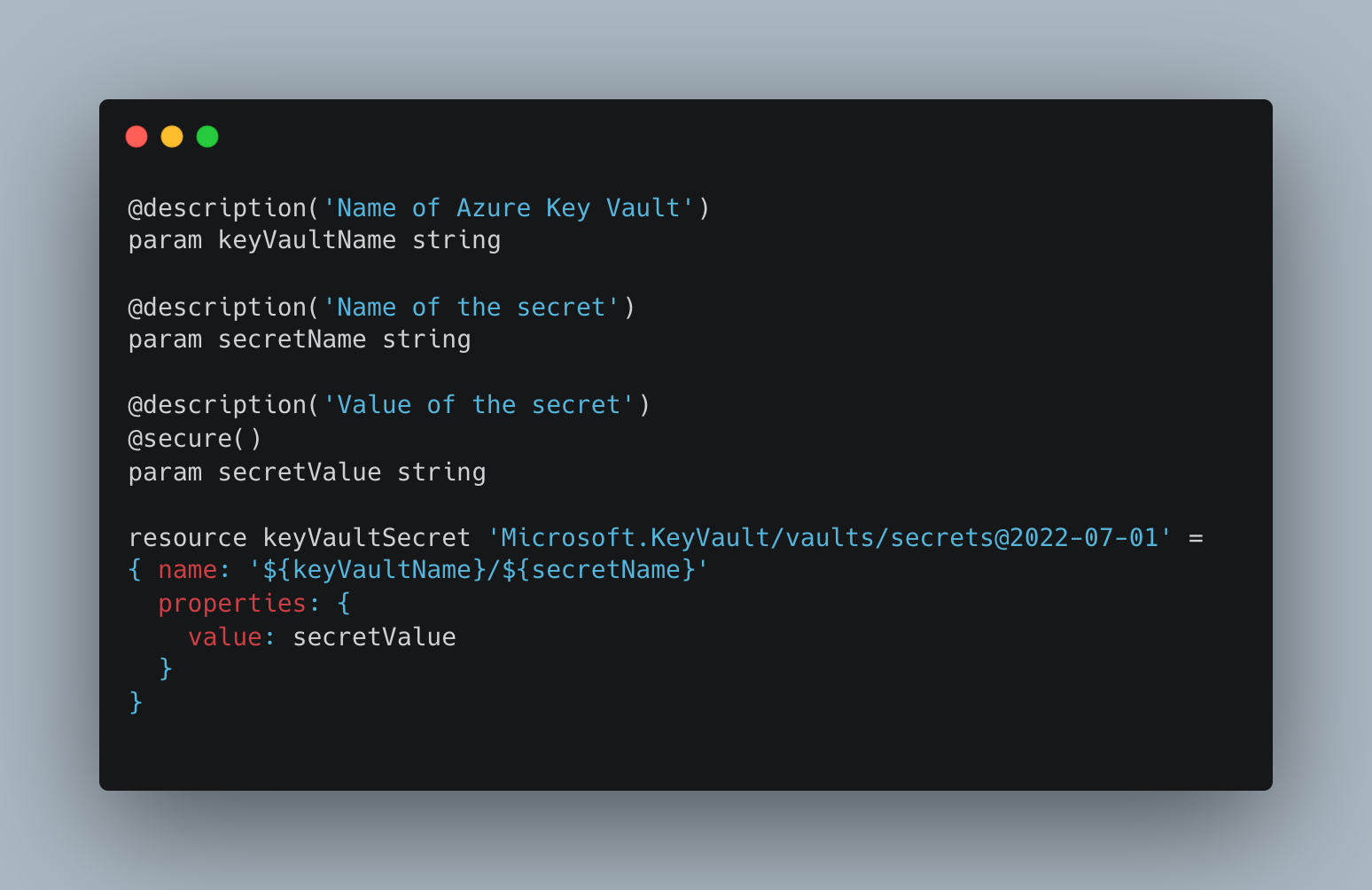
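The following sketch shows one way these pieces might fit together, with the private endpoint submodule omitted and the secret written inline instead of through a submodule. The SKU, server version, secret name, database name, and URI format are assumptions (including the `user@server` login convention, encoded here as `%40`); TLS options for the connection are left out. The three configuration values mirror the flags in CTFd’s docker-compose file.

```bicep
// Hypothetical MariaDB module sketch; SKU, version, secret name, and URI format are assumptions.
param vnet bool = true
param mariaServerName string
param administratorLogin string
@secure()
param administratorLoginPassword string
param keyVaultName string
param location string = resourceGroup().location

// Settings that CTFd's docker-compose file passes to the database container.
var serverSettings = [
  {
    name: 'character_set_server'
    value: 'utf8mb4'
  }
  {
    name: 'collation_server'
    value: 'utf8mb4_unicode_ci'
  }
  {
    name: 'wait_timeout'
    value: '28800'
  }
]

resource mariaServer 'Microsoft.DBforMariaDB/servers@2018-06-01' = {
  name: mariaServerName
  location: location
  sku: {
    name: 'GP_Gen5_2'
    tier: 'GeneralPurpose'
  }
  properties: {
    createMode: 'Default'
    version: '10.3'
    administratorLogin: administratorLogin
    administratorLoginPassword: administratorLoginPassword
    publicNetworkAccess: vnet ? 'Disabled' : 'Enabled'
  }
}

// Apply the settings one at a time to avoid concurrent updates on the server.
@batchSize(1)
resource configurations 'Microsoft.DBforMariaDB/servers/configurations@2018-06-01' = [for setting in serverSettings: {
  parent: mariaServer
  name: setting.name
  properties: {
    value: setting.value
  }
}]

resource keyVault 'Microsoft.KeyVault/vaults@2022-07-01' existing = {
  name: keyVaultName
}

// Connection URI stored as a secret so the App Service can reference it.
resource databaseUriSecret 'Microsoft.KeyVault/vaults/secrets@2022-07-01' = {
  parent: keyVault
  name: 'ctfd-db-uri'
  properties: {
    value: 'mysql+pymysql://${administratorLogin}%40${mariaServerName}:${uriComponent(administratorLoginPassword)}@${mariaServer.properties.fullyQualifiedDomainName}/ctfd'
  }
}
```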

Key Vault Secret

This simple module adds a secret to Azure Key Vault.

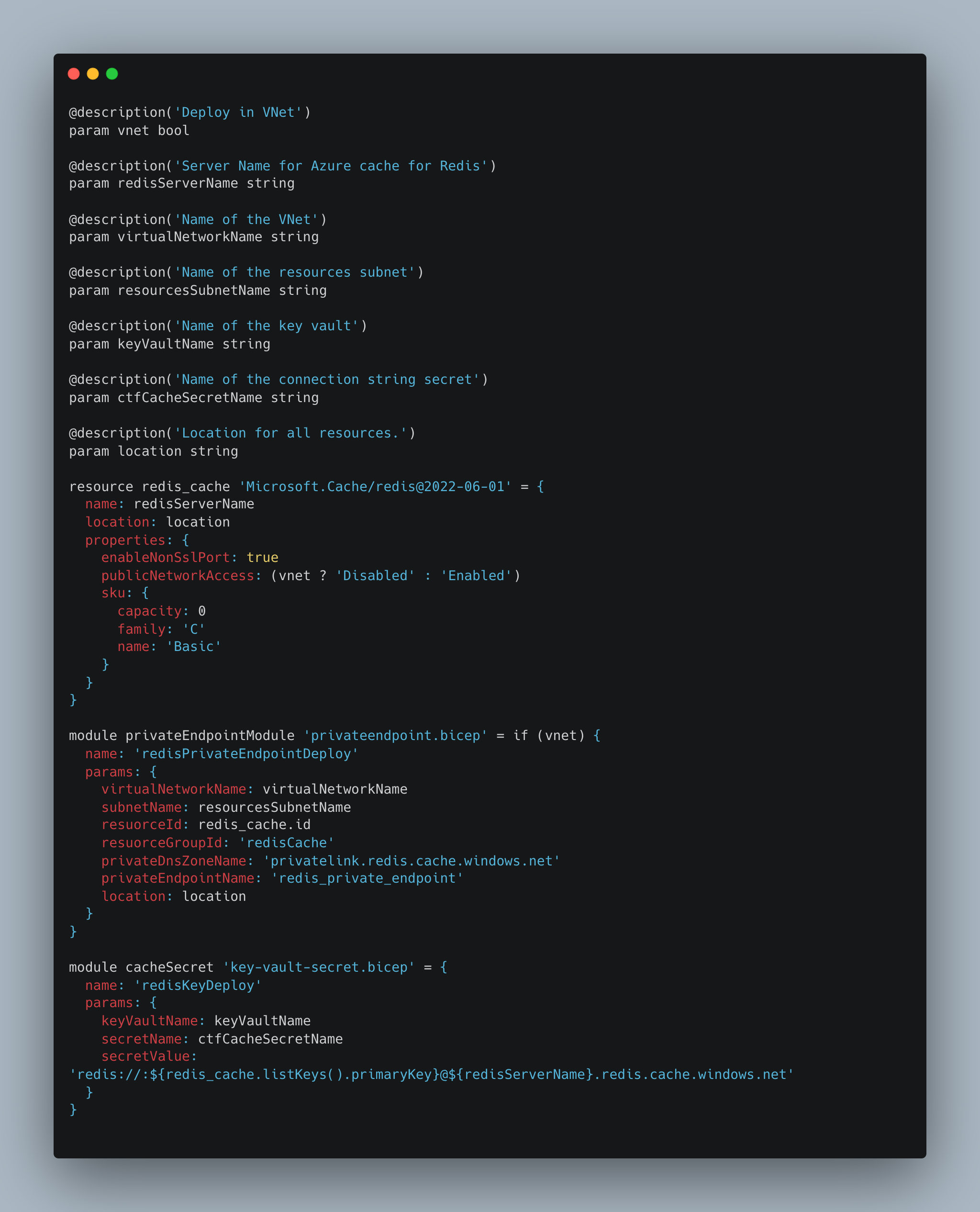
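A module like this can be very small. In the sketch below, the parameter names and API version are assumptions:

```bicep
// Hypothetical Key Vault secret module sketch; parameter names and API version are assumptions.
param keyVaultName string
param secretName string
@secure()
param secretValue string

// Reference the vault created by the Key Vault module.
resource keyVault 'Microsoft.KeyVault/vaults@2022-07-01' existing = {
  name: keyVaultName
}

resource secret 'Microsoft.KeyVault/vaults/secrets@2022-07-01' = {
  parent: keyVault
  name: secretName
  properties: {
    value: secretValue
  }
}
```

Marking `secretValue` with `@secure()` keeps the value out of the deployment history and logs.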

Redis

This module is very similar to the Key Vault and MariaDB Bicep definitions: we define the Redis resource, optionally integrate it with the VNet using the submodule that defines the private endpoint, as shown previously, and set its publicNetworkAccess parameter accordingly.

Additionally, we add the Redis URI to the Key Vault using the Key Vault secret submodule. The URI contains the Redis secret, which is retrieved using the built-in function listKeys().

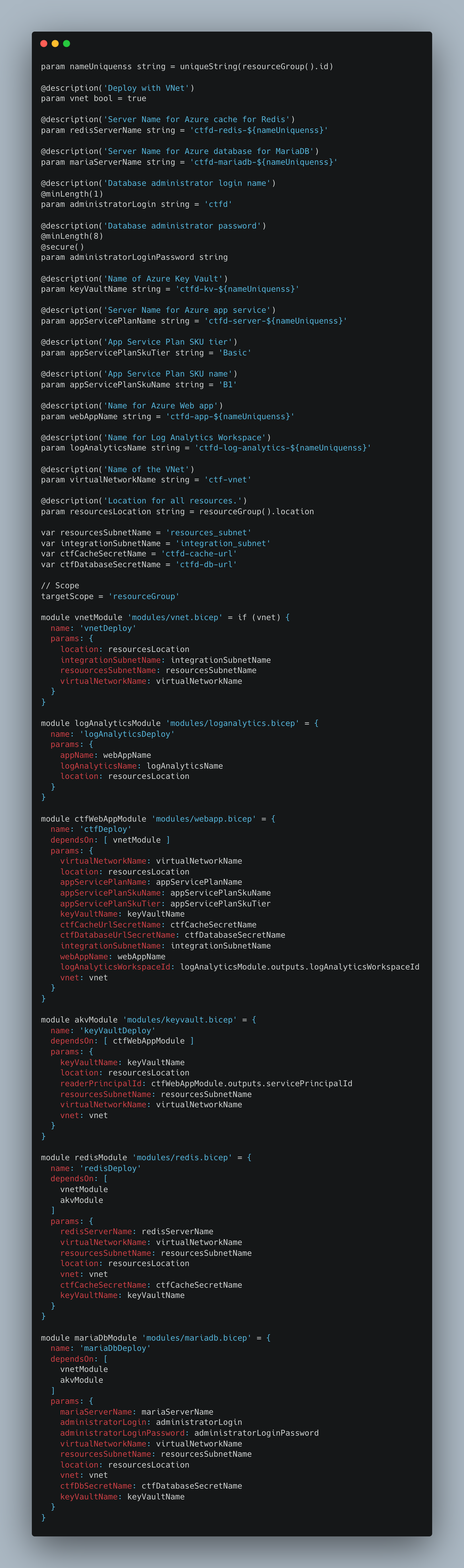
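As an illustration, the Redis definition and its secret might be sketched as follows, again with the private endpoint submodule omitted and the secret written inline. The SKU, secret name, API versions, and the TLS-based `rediss://` URI format are assumptions.

```bicep
// Hypothetical Redis module sketch; SKU, secret name, and URI format are assumptions.
param vnet bool = true
param redisServerName string
param keyVaultName string
param location string = resourceGroup().location

resource redis 'Microsoft.Cache/redis@2022-06-01' = {
  name: redisServerName
  location: location
  properties: {
    sku: {
      name: 'Basic'
      family: 'C'
      capacity: 0
    }
    enableNonSslPort: false
    publicNetworkAccess: vnet ? 'Disabled' : 'Enabled'
  }
}

resource keyVault 'Microsoft.KeyVault/vaults@2022-07-01' existing = {
  name: keyVaultName
}

// The access key is read with listKeys() and embedded in the URI stored as a secret.
resource cacheUriSecret 'Microsoft.KeyVault/vaults/secrets@2022-07-01' = {
  parent: keyVault
  name: 'ctfd-cache-uri'
  properties: {
    value: 'rediss://:${redis.listKeys().primaryKey}@${redis.properties.hostName}:${redis.properties.sslPort}'
  }
}
```

Because the key is resolved inside the secret’s value, it never appears as a module output.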

Putting it all Together

After the hard work we’ve put into our modules, aggregating them into a Bicep deployment is easy and largely self-explanatory. The deployment has a single required parameter, the database administrator password, which for security reasons cannot have a default value. The other parameters can be overridden as well:

- vnet – Determines whether the resources are deployed with a VNet, default is true (boolean).

- redisServerName – Name of Redis cache (string).

- mariaServerName – Name of MariaDB (string).

- administratorLogin – MariaDB admin name (string).

- administratorLoginPassword – MariaDB admin password, the only required parameter (string).

- keyVaultName – Name of the key vault service (string).

- appServicePlanName – Name of app service plan (string).

- appServicePlanSkuTier – App Service Plan SKU tier (string).

- appServicePlanSkuName – App Service Plan SKU name (string).

- webAppName – Name of app service webapp (string).

- logAnalyticsName – Name for Log Analytics Workspace (string).

- virtualNetworkName – Name of virtual network (string).

- resourcesLocation – Location of resources, defaults to the resource group location (string).
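In Bicep, a few of these parameters might be declared as in the sketch below. Only the `vnet` and `resourcesLocation` defaults come from the list above; the other default values are placeholders invented for this example.

```bicep
// Hypothetical parameter declarations; defaults other than vnet and resourcesLocation are placeholders.
@description('Deploy the resources with a VNet')
param vnet bool = true

@description('MariaDB admin name')
param administratorLogin string = 'ctfd'

@description('MariaDB admin password, the only required parameter')
@secure()
param administratorLoginPassword string

@description('Name of the key vault service')
param keyVaultName string = 'ctfd-kv-${uniqueString(resourceGroup().id)}'

@description('Location of resources')
param resourcesLocation string = resourceGroup().location
```

A `@secure()` parameter has no default here, so the deployment fails fast if the password is not supplied.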

Run the Code

To deploy the Bicep definition to your Azure subscription, run the following commands:

export DB_PASSWORD='YOUR PASSWORD'

export RESOURCE_GROUP_NAME='RESOURCE GROUP NAME'

az deployment group create --resource-group "$RESOURCE_GROUP_NAME" --template-file ctfd.bicep --parameters administratorLoginPassword="$DB_PASSWORD"

Now go ahead and set up your team’s CTF game, and enjoy. Happy hacking!