We’re thrilled to announce that the integrated vector database is now generally available to all users of Azure Cosmos DB for MongoDB vCore. This feature opens up new opportunities for building secure and resilient AI-driven applications, with Azure Cosmos DB for MongoDB vCore as your go-to data source.

We’re also announcing new capabilities to enable you to perform faster, more accurate operations with ease:

- HNSW vector index algorithm (preview): Provides fast and accurate vector searches at scale, enabling your apps to perform more than 2,000 QPS with sub-100ms latency on 3 million

- Integrations: Use Azure Cosmos DB for MongoDB vCore with your favorite LLM orchestration framework, such as Semantic Kernel, LangChain, or LlamaIndex.

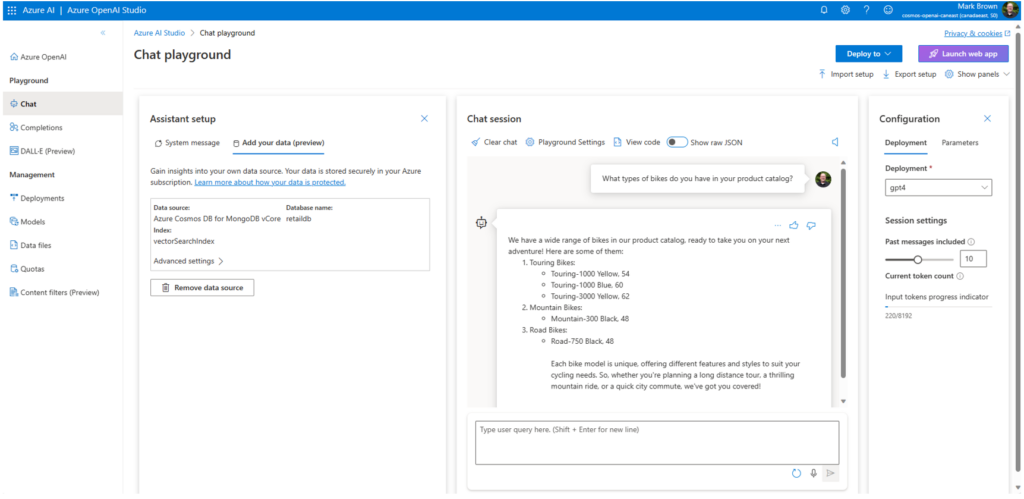

- Azure OpenAI Service On Your Data: Quickly and easily use your own data in Azure Cosmos DB for MongoDB vCore with Azure OpenAI completions models using the Azure OpenAI Studio.

The integrated vector database enables you to seamlessly integrate AI-powered applications, including those harnessing OpenAI embeddings, with your existing data residing in Azure Cosmos DB. You can effortlessly store, index, and query high-dimensional vector data directly within Azure Cosmos DB for MongoDB vCore, eliminating the necessity of transferring your data to more costly alternatives to meet your vector database needs.

This unified solution simplifies the development of your AI applications by reducing complexity and improving overall efficiency. By using the integrated vector database, you’ll gain access to a wealth of semantically relevant insights from your data, leading to the creation of more precise and potent applications. The possibilities are now limitless!

Create a vector index

Azure Cosmos DB for MongoDB vCore supports two types of vector index algorithms that you can define when creating an index:

- IVF (generally available), or Inverted File index, which partitions the vectors into clusters and assigns each vector to its nearest cluster center. IVF offers robust stability and performance.

- HNSW (preview), or Hierarchical Navigable Small World, which builds a multi-layer graph where each layer has fewer vectors and connections than the previous one. HNSW enables you to scale your indexes to large volumes while still maintaining low latency, high throughput (queries per second), and accuracy.
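To make the IVF idea more concrete, here is a toy sketch in plain Python. It only illustrates the "assign each vector to its nearest cluster center, then search the closest clusters" principle and is not how the service implements its index. The helper names (`build_ivf`, `ivf_search`), the sample vectors, and the hand-picked centroids are all assumptions made for illustration; a real index would learn its centroids, for example with k-means.

```python
import math

def euclidean(a, b):
    """Euclidean (L2) distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def build_ivf(vectors, centroids):
    """Assign each vector id to the list of its nearest centroid."""
    lists = {i: [] for i in range(len(centroids))}
    for vid, vec in vectors.items():
        nearest = min(range(len(centroids)),
                      key=lambda i: euclidean(vec, centroids[i]))
        lists[nearest].append(vid)
    return lists

def ivf_search(query, vectors, centroids, lists, k=1, n_probes=1):
    """Scan only the n_probes lists whose centroids are closest to the query."""
    probes = sorted(range(len(centroids)),
                    key=lambda i: euclidean(query, centroids[i]))[:n_probes]
    candidates = [vid for i in probes for vid in lists[i]]
    return sorted(candidates, key=lambda vid: euclidean(query, vectors[vid]))[:k]

# Hypothetical 2-dimensional data; real embeddings have many more dimensions.
vectors = {"a": [0, 0], "b": [0, 1], "c": [10, 10], "d": [10, 11]}
centroids = [[0, 0.5], [10, 10.5]]  # hand-picked for the example

lists = build_ivf(vectors, centroids)
print(lists)                                              # {0: ['a', 'b'], 1: ['c', 'd']}
print(ivf_search([9, 9], vectors, centroids, lists, k=1)) # ['c']
```

Probing fewer lists makes a query faster but can miss a true nearest neighbor that landed in an unprobed cluster, which is the latency versus accuracy trade-off that index parameters control.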

Each vector index has parameters that you can tune to trade off latency against accuracy, and each lets you select a similarity metric of your choice (cosine, Euclidean, or inner product). Learn how to create a vector index.
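As a rough sketch of what an index definition might look like from Python, the helper below builds a `createIndexes` command document for either algorithm. The option names (`cosmosSearch`, `cosmosSearchOptions`, `kind`, `numLists`, `m`, `efConstruction`, `similarity`, `dimensions`) follow the service documentation as best understood at the time of writing, so verify them against the current docs. The helper name, the collection and field names, and every numeric value are hypothetical.

```python
def build_vector_index_command(collection, path, dimensions,
                               kind="vector-ivf", similarity="COS", **tuning):
    """Build a createIndexes command document for a vector index.

    kind:       "vector-ivf" or "vector-hnsw"
    similarity: "COS" (cosine), "L2" (Euclidean), or "IP" (inner product)
    tuning:     algorithm-specific parameters, e.g. numLists for IVF,
                m and efConstruction for HNSW
    """
    options = {"kind": kind, "similarity": similarity, "dimensions": dimensions}
    options.update(tuning)
    return {
        "createIndexes": collection,  # the command name must be the first key
        "indexes": [
            {
                "name": f"{path}_vector_index",  # hypothetical naming scheme
                "key": {path: "cosmosSearch"},
                "cosmosSearchOptions": options,
            }
        ],
    }

# Hypothetical collection "products" whose documents store 1536-dimensional
# embeddings in a "contentVector" field. All tuning values are illustrative.
ivf_cmd = build_vector_index_command(
    "products", "contentVector", 1536, numLists=100)
hnsw_cmd = build_vector_index_command(
    "products", "contentVector", 1536,
    kind="vector-hnsw", m=16, efConstruction=64)

print(ivf_cmd["indexes"][0]["cosmosSearchOptions"])
```

With PyMongo installed and a connection string at hand, the command could then be sent with something like `MongoClient(connection_string)["exampledb"].command(ivf_cmd)`, where the database name is again a placeholder.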

Perform a vector search

Once your data is inserted into your Azure Cosmos DB for MongoDB vCore database and collection and your vector index is defined, you can perform a vector similarity search against a query vector. The search returns the top k most relevant items in your collection, along with a similarity score indicating how close each returned item is to your query vector. Learn how to perform a vector search.
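A search of this kind can be sketched as an aggregation pipeline. The stage and option names below (`$search`, `cosmosSearch`, `returnStoredSource`, `efSearch`, and the `searchScore` metadata field) reflect the service documentation as best understood here and should be checked against the current docs. The helper name, the `contentVector` field, and the three-element query vector are placeholders; in practice the query vector would be an embedding with the same dimensions as the index.

```python
def build_vector_search_pipeline(query_vector, path, k, ef_search=None):
    """Build an aggregation pipeline returning the top-k nearest documents."""
    cosmos_search = {"vector": list(query_vector), "path": path, "k": k}
    if ef_search is not None:
        # Assumed to apply to HNSW indexes only; omitted when not provided.
        cosmos_search["efSearch"] = ef_search
    return [
        {"$search": {"cosmosSearch": cosmos_search,
                     "returnStoredSource": True}},
        # Expose the similarity score next to the matched document.
        {"$project": {"similarityScore": {"$meta": "searchScore"},
                      "document": "$$ROOT"}},
    ]

# Placeholder query vector for illustration only.
pipeline = build_vector_search_pipeline([0.1, 0.2, 0.3], "contentVector", k=3)
print(pipeline[0]["$search"]["cosmosSearch"]["k"])  # 3
```

With PyMongo, the pipeline could be run with `collection.aggregate(pipeline)`, reading `similarityScore` and `document` from each result.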

Integrate with LLM orchestration tools

Azure Cosmos DB for MongoDB vCore’s integration with the LLM orchestration tools Semantic Kernel, LangChain, and LlamaIndex provides you with more flexibility in developing your applications. You can now index and search over your data with your favorite tooling, combining fast query performance and data retrieval with ease of development.

Integration with Azure OpenAI Service On Your Data

Originally announced in June 2023, Azure OpenAI Service On Your Data allows users to quickly and easily integrate data in Azure with the power of OpenAI large language models (LLMs) such as GPT-4. This experience helps streamline proof-of-concept development, enables quick experimentation, and even allows you to quickly deploy a chat web application powered by your Azure OpenAI completions model, all from the Azure OpenAI Studio interface.

Next Steps

The integrated vector database is a game-changer for developers looking to use AI capabilities in their applications. Azure Cosmos DB for MongoDB vCore offers a single, seamless solution for transactional raw data and vector data, using embeddings from the Azure OpenAI Service API or other solutions. You’re now equipped to create smarter, more efficient, and user-focused applications that stand out. Check out these resources to help you get started:

- Vector Database in Azure Cosmos DB MongoDB vCore documentation

- Clone or fork our samples repository and End-to-End RAG Pattern solution for MongoDB vCore with HNSW support

- Learn more about vector embeddings with Azure OpenAI Service

Get Started with Azure Cosmos DB for free

Azure Cosmos DB is a fully managed NoSQL, relational, and vector database for modern app development with SLA-backed speed and availability, automatic and instant scalability, and support for open-source PostgreSQL, MongoDB, and Apache Cassandra. Learn more about Azure Cosmos DB for MongoDB vCore’s free tier here. To stay in the loop on Azure Cosmos DB updates, follow us on Twitter, YouTube, and LinkedIn.

Hello. It seems like the preview version of the Vector Search returned more accurate results than the newer version. We created a collection with the preview in a DEV environment (before November when the preview version was available). When comparing it to an identical collection in a Production environment (but created after November), we get much better results in the DEV environment. Any ideas why that is? Can we use the version that was available in Preview? Thank you.

Hey Andres, thanks for reaching out.

You should not have seen a performance decrease with the GA version of vector search. Can you please send me an email with some additional context? We’d like to investigate this further with you: James.Codella@microsoft.com

Thank you!

-James