Retrieval Augmented Generation, or RAG, is one of the hottest topics at the moment as it opens up the possibility of interacting with data using natural language, which is a long-time dream finally coming true.

It is very likely that a lot of your data is already stored or will be stored in Azure SQL, so a common request is to have an example on how to apply the RAG pattern to your own data stored an Azure SQL database.

This blog post is all about that. Let’s start from the basics and make sure the RAG pattern is clearly understood.

RAG Pattern 101

To make the explanation easy to understand, even if you are completely new to the topic, let’s start from a simple scenario. You have a database where you have stored details – title, abstract, time, speakers – of all the session of a conference. A good example could be the just passed .NET Focus on AI conference or the forthcoming .NET Conf 2024 conference, or one of my favorites, the VS Live conference.

Why RAG?

You already know that Language Models like GPT-4 or Phi-3 can accept any text you’ll provide them, and they can generate answer to almost any question you may want to ask. So, why a specific pattern like RAG is needed? Why can’t we just send all the titles and the abstract and all the information stored in the database to the LM and call it a day? Then we could ask anything using a simple API call and our work we’ll be done.

There are two reasons why you don’t want to do that.

First of all, if you are asking something related to Blazor, there is no need to give the LM details about a session that is completely out of scope: it will not help the LM to answer and could actually make the answer less precise (see: “Lost in the Middle: How Language Models Use Long Contexts“). Secondly, the cost of an AI call is based on how many tokens the sent text must be split into – tokenization is something that happen transparently behind the scenes – and so the less text you send, the less token you’ll be sending, which means that you’ll be using less resources. More efficiency, less digital waste (which means less power consumed), lower price to pay for: it’s a win for everyone!

RAG Steps

The first step of the RAG pattern is to filter out all the data that is not relevant to the question being asked. For this first step, typically, a semantic search is performed on the text. To do a semantic search, embeddings, and thus vectors, are used to do vector similarity search and return only the relevant results. Since Azure SQL is a powerful modern relational and multi-model database, you can enrich vector search other all other filtering capabilities that it has already. Filtering by exact values (for example all sessions on a certain day), by JSON data (for example sessions with certain tags) or even using geospatial filters (for example session delivered withing 1 km from me).

Once you have the relevant data you can then efficiently send it to the LM, along with the question you want to ask, to have the answer in natural language, without wasting resources and money.

A diagram that shows the two steps of the RAG pattern is here so that you can easily visualize the process and see how it is applied to the sample data we’re using in this post.

Now that you are familiar with the RAG pattern, is time to see how it can be realized using Azure services.

RAG pattern in Azure

There are many ways to implement the RAG pattern in Azure. I personally love the serverless approach provided by the cloud, so I’m using serverless services in this sample. If you prefer a containerized approach, keep in mind that everything described here can be easily hosted in a container, if you prefer to do so.

The high-level architecture of the RAG pattern applied to Azure is the following:

The Azure Services being used are:

- Azure Static Web Apps

- Azure OpenAI

- Azure Functions

- Azure Functions SQL Trigger Binding

- Azure SQL Database

- Data API builder

Frontend

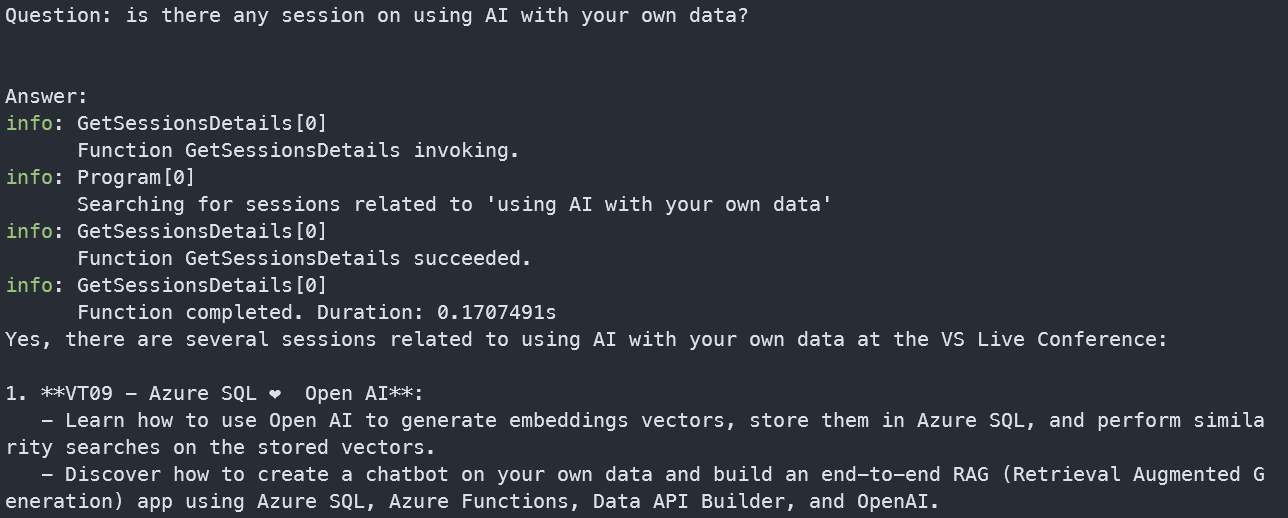

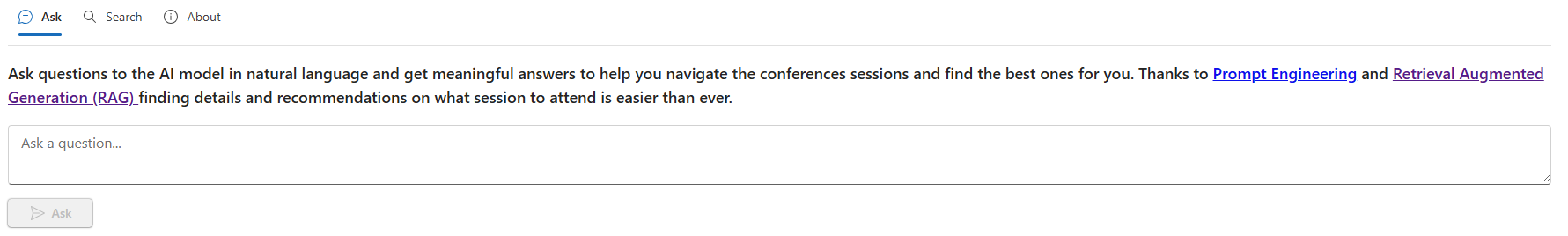

The fronted is a simple React application hosted in Azure Static Web Apps. It is used to allow users to ask a question that will then be answered applying the RAG pattern. There is also the option to just do similarity search to clearly see the difference in terms of response between a simple similarity search and the full RAG pattern.

Backend

Azure Functions are used to handle the question asked by the user and to orchestrate the RAG pattern. Once the question is asked, the Azure Function called will do similarity search in Azure SQL, then pack the results into a pipe-separated format

string.Join("\r", sessions.Select(s => $"{s.Title}|{s.Abstract}|{s.Speakers}|{s.Start}|{s.End}"));and then send the question, the list of session and the following prompt to the LM, hosted in Azure OpenAI:

"You are a system assistant who helps users find the right session to watch from the conference, based off the sessions that are provided to you. Sessions will be provided in an assistant message in the format of `title|abstract|speakers|start-time|end-time`. You can use only the provided session list to help you answer the user's question. If the user asks a question that is not related to the provided sessions, you can respond with a message that you can't help with that question."Data API builder is used to easily expose stored procedures that are called via REST calls from the frontend to show how many sessions have been indexes (in the “About” tab) and to call the find_session procedure that does vector search (available in the “Search” tab). Data API builder automatically expose desired database objects as REST or GraphQL endpoints, which is great to quickly deploy a CRUD service that can be called by any framework, in just a few minutes.

Azure Functions are also used to immediately turn session title and abstract into an embedding as soon changes are made to the database table. This is done by calling Azure OpenAI embedding model. Azure SQL Trigger Binding is what make possible to have tables monitored for changes and then react to those changes by executing some code in the Azure Function itself. It is extremely lightweight (it uses native Azure SQL Change Tracking capabilities behind the scenes) and it provides all the flexibility and computation power needed for almost anything.

Database

Azure SQL’s support for natively storing and querying vectors is in Early Adopter Preview. It includes the ability to store vectors in a compact binary format and to calculate distance between two vectors – and thus calculate the semantic similarity of related topics as vectors in this sample are the embeddings of session title and abstract – so that filtering only the relevant session given a user query is as easy as writing the following query:

select top(10)

id,

title,

vector_distance('cosine', @qv, embeddings) as cosine_distance

from

web.sessions

order by

cosine_distancethe query will return the 10 most similar sessions, given the search vector @qv that contains the embedding of the topic being searched. Getting the embeddings for some text can be done in many ways with many languages, but at the end of the day is just a REST call, so in Azure SQL it can be easily done using sp_invoke_external_rest_endpoint as shown in this sample Get_Embeddings procedure.

Code and Demo

That’s it. Implementing the RAG pattern in Azure SQL is incredibly easy. If you want to see it by yourself, I’ve presented about this topic just a few days ago at .NET Conf Focus on AI, where I demoed the full end-to-end pattern. You can get the repo and run the demo either locally (except for Azure SQL DB, but hey! there is a free tier for that!) or in Azure and then from there you can start to use your data instead of the sample demo data provided and you’ll be on a good path already for allowing your users to chat with your data.

Conclusion

For this sample, as you have noticed, I used SQL and .NET directly, even though there are many libraries out there that are trying to abstract and simplify the whole process. My goal for this post was to make sure that you learn and understand how things work behind the scenes so when you’ll be using any of the amazing libraries available (be it Semantic Kernel or LangChain) they will not be just a magic black box, but you know exactly what is happening behind the scenes.

And, anyway, samples using those libraries I just mentioned will follow soon, so stay tuned!

0 comments