We are happy to announce that native vector support in Azure SQL Database and Azure SQL Managed Instance is moving to General Availability this summer. Deployments are already underway, and several regions have begun receiving the feature.

What is going GA?

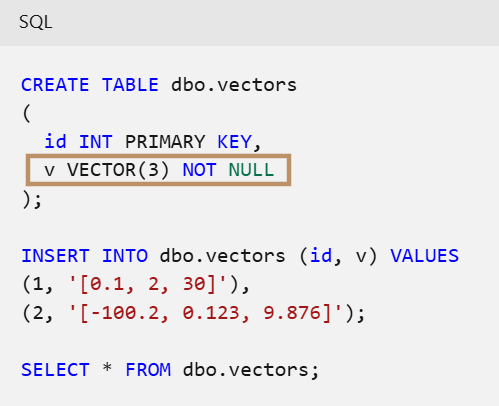

Integrated Vector Data Type

Azure SQL introduces a dedicated VECTOR data type that simplifies the creation, storage, and querying of high-dimensional vector embeddings directly within your relational database.

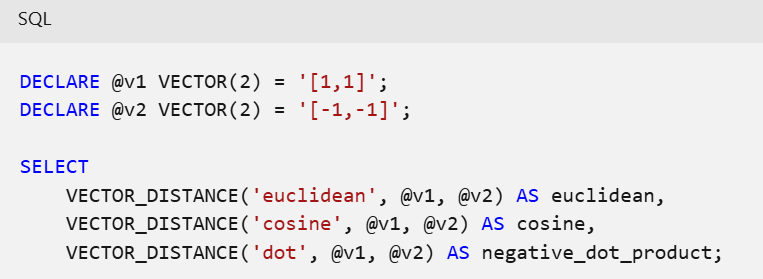

Built-in Vector Functions

Includes essential vector operations such as VECTOR_DISTANCE, VECTOR_NORM, and VECTOR_NORMALIZE. Read more here.
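To make these operations concrete, here is a minimal plain-Python sketch of the math behind a norm, a normalization, and a cosine distance. It illustrates the concepts only and is not the T-SQL API: the Python function names are hypothetical, and it assumes the Euclidean (L2) norm and the cosine metric.

```python
import math


def vector_norm(v):
    """Euclidean (L2) norm of a vector."""
    return math.sqrt(sum(x * x for x in v))


def vector_normalize(v):
    """Scale a vector to unit L2 length."""
    n = vector_norm(v)
    if n == 0:
        raise ValueError("cannot normalize a zero vector")
    return [x / n for x in v]


def cosine_distance(a, b):
    """1 - cosine similarity: 0 for the same direction, 2 for opposite directions."""
    if len(a) != len(b):
        raise ValueError("dimension mismatch")
    dot = sum(x * y for x, y in zip(a, b))
    return 1.0 - dot / (vector_norm(a) * vector_norm(b))
```

A smaller cosine distance means two embeddings point in a more similar direction, which is why it is a common choice for ranking text embeddings.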

Use Cases

With the General Availability of native vector support, Azure SQL is no longer just a place to store and query relational data. It is a platform for building intelligent, context-aware, and production-ready AI applications. Whether you’re enabling semantic search, powering RAG pipelines, or orchestrating multi-agent workflows, everything you need is now available directly inside your database.

Here are some examples of what you can do with it:

🔍 Semantic Search

For example, a user visits your internal help portal and types:

> “How do I reset my password?”

Instead of relying on exact keyword matches, Azure SQL uses vector similarity to retrieve the most relevant documents, even if they use different phrasing like:

- “Steps to recover account access”

- “Changing login credentials”

- “Forgotten password recovery guide”

These documents are stored in the SQL database with precomputed vector embeddings. The user’s query is embedded and compared using the VECTOR_DISTANCE function, returning the closest matches, all within a single SQL query.

The user sees the most helpful articles ranked by semantic relevance, not just keyword overlap, improving discoverability and user satisfaction.
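The ranking step can be sketched in a few lines of Python. This is an illustration under stated assumptions, not how Azure SQL executes the query: the three-dimensional embeddings, the extra "Office parking guidelines" document, and the `top_k` helper are all made up for the example, and a real system would get its vectors from an embedding model.

```python
import math


def cosine_distance(a, b):
    """1 - cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


# Hypothetical toy embeddings; real embeddings have hundreds or thousands of dimensions.
DOCS = {
    "Steps to recover account access": [0.9, 0.1, 0.0],
    "Changing login credentials": [0.8, 0.3, 0.1],
    "Office parking guidelines": [0.0, 0.2, 0.9],
}


def top_k(query_vec, docs, k=2):
    """Return the k document titles closest to the query, nearest first."""
    ranked = sorted(docs.items(), key=lambda item: cosine_distance(query_vec, item[1]))
    return [title for title, _ in ranked[:k]]


# Made-up embedding standing in for "How do I reset my password?"
query = [1.0, 0.2, 0.0]
results = top_k(query, DOCS, k=2)
```

The unrelated parking document has the largest distance to the query, so it falls outside the top two results even though no keywords were compared.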

📚 Retrieval-Augmented Generation (RAG)

Example: You’re building a chatbot that answers questions about your company’s HR policies. A user asks:

> “What’s the parental leave policy?”

Here’s how the RAG pipeline works with Azure SQL:

- Embed the query: The user’s question is converted into a vector embedding using an embedding model (e.g., OpenAI or Azure OpenAI).

- Retrieve relevant content: Azure SQL performs a vector similarity search against a table of HR policy documents whose precomputed embeddings are stored in VECTOR columns. It finds the top 3 most relevant entries, such as:

  - “Parental Leave Policy – Updated 2024”

  - “Maternity and Paternity Benefits Overview”

  - “Leave of Absence Guidelines for New Parents”

- Generate a grounded response: These documents are passed to an LLM (like GPT-4) along with the original question. The model generates a response like:

  > “Our parental leave policy provides up to 16 weeks of paid leave for primary caregivers and 6 weeks for secondary caregivers. This applies to both birth and adoptive parents. For more details, refer to the HR portal or contact your HRBP.”

This approach helps ensure the answer is accurate, up-to-date, and grounded in your company’s actual policies.
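The three steps above can be sketched end to end in Python. Everything here is a stand-in chosen for illustration: `toy_embed` replaces a real embedding model with keyword counts over a tiny hypothetical vocabulary, the document bodies and the "Travel Expense Policy" entry are invented sample data, and the final LLM call is left out, so the sketch stops at building the grounded prompt.

```python
import math

# Hypothetical vocabulary used by the stand-in embedder.
VOCAB = ["parental", "leave", "maternity", "paternity", "parents", "policy", "travel", "expense"]

# Invented sample documents: (title, body).
DOCUMENTS = [
    ("Parental Leave Policy – Updated 2024", "Parental leave policy for primary and secondary caregivers."),
    ("Maternity and Paternity Benefits Overview", "Maternity and paternity benefits, including paid leave."),
    ("Leave of Absence Guidelines for New Parents", "Leave of absence guidelines for new parents."),
    ("Travel Expense Policy", "Travel expense reimbursement rules."),
]


def toy_embed(text):
    """Stand-in for an embedding model: keyword counts over VOCAB."""
    lowered = text.lower()
    return [float(lowered.count(term)) for term in VOCAB]


def cosine_distance(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 1.0  # treat an empty embedding as unrelated
    return 1.0 - dot / (norm_a * norm_b)


def retrieve(question, documents, k=3):
    """Steps 1 and 2: embed the question, then return the k nearest documents."""
    query_vec = toy_embed(question)
    ranked = sorted(documents, key=lambda doc: cosine_distance(query_vec, toy_embed(doc[1])))
    return ranked[:k]


def build_prompt(question, retrieved):
    """Step 3 input: the prompt that would be sent to the LLM."""
    context = "\n".join(f"- {title}: {body}" for title, body in retrieved)
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {question}"


question = "What’s the parental leave policy?"
retrieved = retrieve(question, DOCUMENTS, k=3)
prompt = build_prompt(question, retrieved)
```

In a real deployment the document embeddings would be precomputed and stored alongside the rows, so only the question needs to be embedded at query time.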

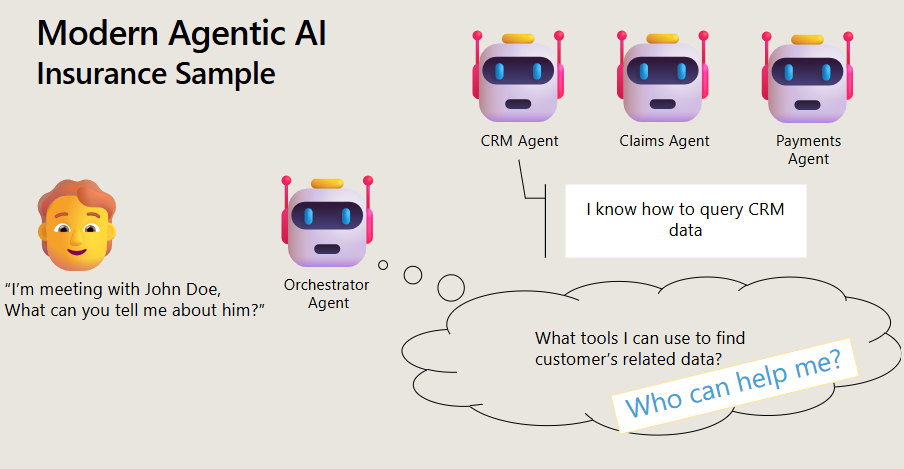

🤖 Conversational Agents

Example (insurance scenario): A user asks:

> “I’m meeting with John Doe. What can you tell me about him?”

The system activates a collaborative agentic workflow, where each agent interacts with specific tables in the database:

- Orchestrator Agent: Parses the request and determines which agents to engage. It understands the schema and knows that customer-related data is distributed across multiple domains.

- CRM Agent: Queries the dbo.customers table to retrieve John Doe’s profile, including ID, contact details, and relationship history.

- Claims Agent: Uses the CustomerId from the CRM agent to fetch all claims associated with John Doe from the dbo.claims table, including status, amounts, and incident types.

- Payments Agent: Queries the dbo.payments table for payment history, outstanding balances, and reimbursement timelines.

Each agent has an expert-level view of its domain and knows how to query its respective tables. The orchestrator coordinates their outputs and composes a unified summary for the user—such as John Doe’s ID, address, claims history, and payment status.

This modular design allows the system to scale and respond intelligently to complex, multi-step queries, making it a true agentic AI solution built on top of Azure SQL.
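The division of labor between agents can be sketched with plain functions, one per table. This is a simplified illustration, not the implementation described above: it uses an in-memory SQLite database in place of Azure SQL, the column names and sample rows are hypothetical, and the orchestrator's routing is hard-coded where a real system would let an LLM decide which agents to call.

```python
import sqlite3


def setup_demo_db():
    """Create in-memory stand-ins for dbo.customers, dbo.claims, and dbo.payments."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, name TEXT, city TEXT);
        CREATE TABLE claims (claim_id INTEGER PRIMARY KEY, customer_id INTEGER, status TEXT, amount REAL);
        CREATE TABLE payments (payment_id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);
        INSERT INTO customers VALUES (1, 'John Doe', 'Seattle');
        INSERT INTO claims VALUES (10, 1, 'open', 1200.0), (11, 1, 'closed', 300.0);
        INSERT INTO payments VALUES (100, 1, 300.0);
    """)
    return conn


def crm_agent(conn, name):
    """Look up the customer profile by name."""
    row = conn.execute(
        "SELECT customer_id, name, city FROM customers WHERE name = ?", (name,)
    ).fetchone()
    return None if row is None else {"customer_id": row[0], "name": row[1], "city": row[2]}


def claims_agent(conn, customer_id):
    """Fetch all claims for the customer id supplied by the CRM agent."""
    rows = conn.execute(
        "SELECT status, amount FROM claims WHERE customer_id = ? ORDER BY claim_id",
        (customer_id,),
    ).fetchall()
    return [{"status": status, "amount": amount} for status, amount in rows]


def payments_agent(conn, customer_id):
    """Summarize payments for the customer."""
    total = conn.execute(
        "SELECT COALESCE(SUM(amount), 0) FROM payments WHERE customer_id = ?", (customer_id,)
    ).fetchone()[0]
    return {"total_paid": total}


def orchestrator(conn, name):
    """Call each agent in turn and compose a unified summary."""
    profile = crm_agent(conn, name)
    if profile is None:
        return None
    customer_id = profile["customer_id"]
    return {
        "profile": profile,
        "claims": claims_agent(conn, customer_id),
        "payments": payments_agent(conn, customer_id),
    }


conn = setup_demo_db()
summary = orchestrator(conn, "John Doe")
```

Because each agent only touches its own table, a new domain (for example, policies) could be added as one more function without changing the existing ones.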

👉 Want to see this in action? Check out this video

The power of this capability goes far beyond individual patterns. Let’s look at how it fits into the broader AI ecosystem and how our customers are using it.

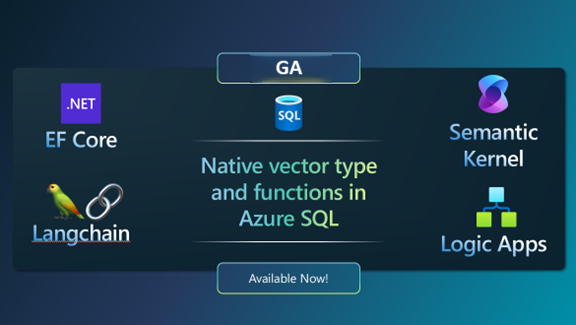

Rich Ecosystem Integrations

Native vector support in Azure SQL is deeply integrated with the broader Microsoft AI ecosystem, enabling seamless development across tools like Semantic Kernel, LangChain, EF Core, and Logic Apps.

Enhancements to TDS Protocol

Vectors in Azure SQL are stored in an optimized binary format but are exposed as JSON arrays for developer convenience. With GA, supported drivers can now leverage enhancements to the TDS protocol to transmit vector data more efficiently in binary format. This reduces payload size, eliminates the overhead of JSON parsing, and preserves full floating-point precision, resulting in faster, more accurate performance for high-dimensional vector workloads in AI and ML scenarios. More info will be updated here.
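The size difference between a JSON array and a packed binary representation is easy to see in a small Python sketch. It assumes single-precision (float32) elements and a 1536-dimensional vector, and it only illustrates the general idea of text versus binary encoding: the helper names are hypothetical and the layout shown is not the actual TDS wire format.

```python
import json
import struct


def to_json_payload(vec):
    """Encode the vector as a UTF-8 JSON array, as a text-based client would send it."""
    return json.dumps(vec).encode("utf-8")


def to_binary_payload(vec):
    """Pack the vector as little-endian float32 values, 4 bytes per dimension."""
    return struct.pack(f"<{len(vec)}f", *vec)


def from_binary_payload(data):
    """Unpack little-endian float32 values back into a list of floats."""
    count = len(data) // 4
    return list(struct.unpack(f"<{count}f", data))


def as_float32(x):
    """Round a Python float to the nearest float32 value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


# Made-up 1536-dimensional vector whose elements are exactly representable as float32.
vec = [as_float32(0.1 * i - 3.7) for i in range(1536)]

json_bytes = to_json_payload(vec)
binary_bytes = to_binary_payload(vec)
```

The binary payload is always four bytes per dimension and round-trips the float32 values exactly, while the JSON text needs many characters per element and must be parsed on arrival.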

GA = Goodbye Limitations

With GA, we’ve removed several limitations that existed during preview. From improved indexing support (preview) to expanded driver compatibility and tighter integration with Azure SQL’s enterprise-grade security model, the GA release is more robust, scalable, and production-ready. You can find the full list of changes and lifted restrictions in our documentation here.

🔦 Customer Spotlight:

Mondra, a sustainability intelligence platform, is using Azure SQL’s native vector support and Azure OpenAI to power their AI assistant, Sherpa. This agentic solution helps food retailers analyze the environmental impact of over 60,000 products and 1 million ingredients, reducing lifecycle assessment time from weeks to just four hours.

“When we process new data, we have a massive amount that needs to go out immediately. Azure SQL Database Hyperscale was the only tier that allowed us to do a massive data push without affecting the user experience in our platform.” — Marco De Sanctis, CTO, Mondra

Sherpa combines vector search, Semantic Kernel, and agentic orchestration to deliver personalized insights, generate reports, and support scenario analysis, all while maintaining performance, scalability, and data privacy. It’s a real-world example of how native vector support in Azure SQL enables intelligent, production AI applications. Read the full story here.

What’s Next:

Azure SQL combines enterprise-grade performance with intelligent query optimization. Vector similarity search is executed alongside traditional SQL queries, enabling hybrid workloads without compromising speed or scale. And we’re not stopping there.

DiskANN-Based Vector Index

The next evolution in performance is already underway with DiskANN, a high-performance, disk-based approximate nearest neighbor indexing algorithm. DiskANN-based vector indexing is currently available in private preview for Azure SQL and in public preview for SQL Server 2025. This unlocks fast vector search at scale, even on massive datasets that exceed memory limits. Read more here.

New Set of AI Functions

A new family of built-in AI functions brings model-driven intelligence directly into your database. These are currently in preview in SQL Server 2025 and will be coming soon to Azure SQL:

- AI_GENERATE_EMBEDDINGS – Creates embeddings using a pre-created AI model definition stored in the database

- AI_GENERATE_CHUNKS – Generates text fragments (“chunks”) based on type, size, and source expression

- CREATE EXTERNAL MODEL – Defines external model endpoints for inference, including location, authentication, and purpose
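To give a feel for what chunking means before embeddings are generated, here is a minimal Python sketch of fixed-size chunking with overlap. It is a conceptual illustration only: the `fixed_size_chunks` helper and its parameters are hypothetical, it splits by character count, and it does not claim to reproduce the behavior or options of AI_GENERATE_CHUNKS.

```python
def fixed_size_chunks(text, chunk_size, overlap=0):
    """Split text into chunks of at most chunk_size characters.

    Consecutive chunks share `overlap` characters so that context
    spanning a chunk boundary is not lost entirely.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")

    step = chunk_size - overlap
    chunks = []
    start = 0
    while start < len(text):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last chunk already reaches the end of the text
        start += step
    return chunks


chunks = fixed_size_chunks("abcdefghij", chunk_size=4, overlap=1)
```

Each chunk would then be embedded separately, so a long document yields several vectors rather than one.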

🚀 Zero to RAG in under 5 minutes:

Too much to digest and just getting started? We’ve got you! Whether you’re new to Azure, exploring Azure SQL for the first time, or just beginning your journey with Retrieval-Augmented Generation (RAG), this is your moment. Native vector support in Azure SQL is designed to be beginner-friendly, developer-ready, and enterprise-tough. You don’t need to be an AI expert to get started.

We’ve built a one-click accelerator that automates the entire Azure SQL + Azure OpenAI setup for a RAG application in under 5 minutes, reducing manual configuration to just click and go. It includes a Streamlit web UI that showcases Azure SQL’s native vector search on sample data (e.g., semantic product search or resume matching) with no coding required. No complex setup. No external dependencies. Just clone, deploy, and explore.

💡 Don’t move your data to AI. Bring AI to your data.

Explore the docs, try the samples, and start transforming your data into intelligence.

- Take a look at the official documentation here.

- You can also use this GitHub Repo full of samples: https://github.com/Azure-Samples/azure-sql-db-vector-search.

- If you are looking for end-to-end samples, take a look here https://aka.ms/sqlai-samples where you’ll find:

  - Retrieval Augmented Generation (RAG) on your own data using LangChain

  - RAG and Natural-Language-to-SQL (NL2SQL) together to build a complete chatbot on your own data, using Semantic Kernel

  - A tool to quickly vectorize data you already have in your database and enable it for AI

  - And much more!

If you have specific requests, don’t forget to submit them through the Azure SQL and SQL Server feedback portal, where other users can also contribute and help us prioritize future developments. We look forward to hearing your ideas!
