The Azure Depth Platform program is delighted to have Paul O’Sullivan – System Engineer, and Nicolas Le Dortz – System Engineer Manager at Analog Devices as our guest blog authors for this edition of the Azure Depth Platform blog.


Analog Devices (ADI) is building on the core Microsoft ToF pixel technology to deliver complete ToF solutions to a broad customer base in areas such as industrial factory automation, inventory management, automotive infotainment, augmented reality, computational photography, and videography. This blog post describes some of the advantages of continuous-wave CMOS ToF technology over traditional 2D and 3D imaging solutions for machine vision applications and provides a high-level system overview of a ToF camera system.

3D Camera Applications

Many machine vision applications now require high-resolution 3D depth images to replace or augment standard 2D imaging. These solutions rely on the 3D camera to provide reliable depth information to guarantee safety, especially when machines are operating in close proximity to humans. The cameras also need to provide reliable depth information while operating in challenging environments, such as large spaces with highly reflective surfaces or scenes that contain other moving objects. Many products to date have used low-resolution range-finder type solutions to provide depth information to augment 2D imaging. However, this approach has many limitations. For applications that benefit from higher-resolution 3D depth information, continuous-wave (CW) CMOS ToF cameras such as the camera in the Azure Kinect DK provide the highest performance solutions on the market. Some of the system features enabled by high-resolution CW ToF sensor technology are described in more detail in Table 1. These system features also translate to consumer use cases such as video bokeh, facial authentication, and measurement applications, as well as automotive use cases such as driver alertness monitoring and automated in-cabin configuration.

| System Feature | Enablers |
| --- | --- |
| Depth Precision and Accuracy | |
| Dynamic range | |
| Ease of use | |
| Outdoor operation | |
| 2D/3D fusion | |
| Multi-system operation | |

Table 1. CW ToF System Features

ToF Camera System Overview

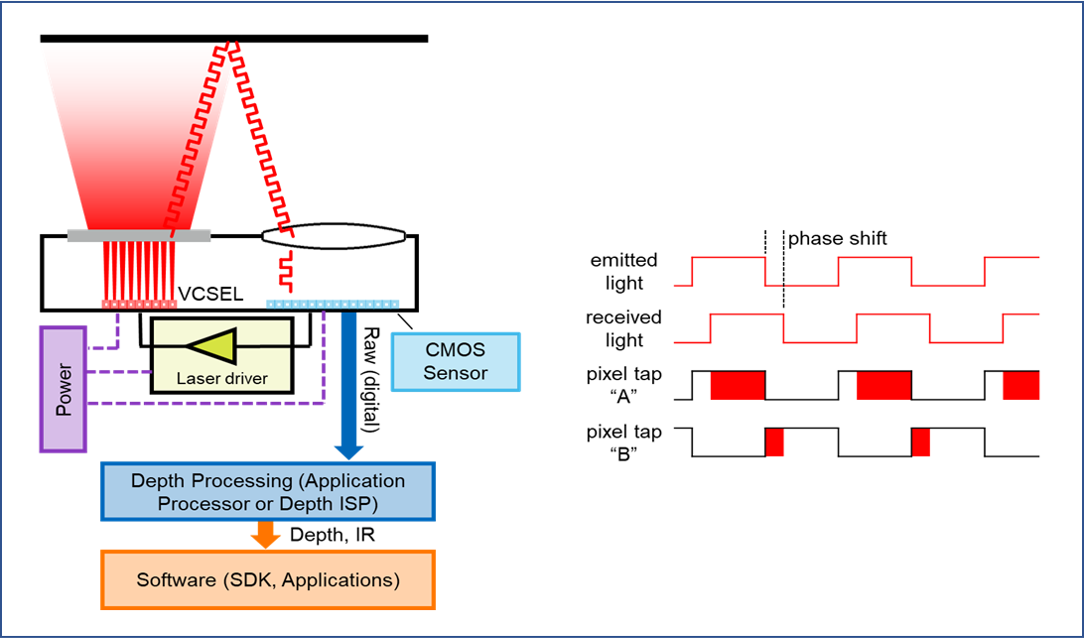

A CW time-of-flight camera consists of several elements (see Figure 1):

- A light source (VCSEL or edge-emitting laser diode) that emits light in the near-infrared domain. The most commonly used wavelengths are 850 nm and 940 nm. The light source is usually a diffuse source (“flood” illumination) that emits a beam of light with a certain divergence (also known as the field of illumination, or FOI) to illuminate the scene in front of the camera.

- A laser driver that modulates the intensity of the light emitted by the light source.

- A sensor with a pixel array that collects the returning light from the scene and outputs values for each pixel.

- A lens that focuses the returning light on the sensor array.

- A bandpass filter, co-located with the lens, that filters out light outside a narrow band around the light source wavelength.

- A processing algorithm that converts the raw frames output by the sensor into depth images or point clouds.

Figure 1. Overview of CW ToF sensor technology

An earlier blog post entitled “Understanding indirect ToF Depth Sensing” describes in more detail how ToF depth measurements are calculated based on the phase shift of the returning light.
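As a rough illustration of that relationship, the sketch below converts a measured phase shift into a distance for a single modulation frequency, using the standard CW ToF relation d = c·φ / (4π·f_mod). The 200 MHz frequency and the function names are illustrative assumptions, not parameters of any particular camera.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def phase_to_distance(phase_rad: float, f_mod_hz: float) -> float:
    """Distance in metres for a measured phase shift at one modulation frequency."""
    # The light travels to the scene and back, hence 4*pi rather than 2*pi.
    return C * phase_rad / (4.0 * math.pi * f_mod_hz)


def unambiguous_range(f_mod_hz: float) -> float:
    """Distance at which the phase wraps around (phase reaches 2*pi)."""
    return C / (2.0 * f_mod_hz)


if __name__ == "__main__":
    f_mod = 200e6  # hypothetical modulation frequency (assumption)
    print(f"quarter-cycle phase shift: {phase_to_distance(math.pi / 2, f_mod):.4f} m")
    print(f"unambiguous range:         {unambiguous_range(f_mod):.4f} m")
```

Beyond the unambiguous range the phase wraps, so a single frequency cannot tell near from far targets on its own; this is the ambiguity that phase unwrapping resolves.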

There are numerous system features to consider when developing a high-performance ToF camera, some of which are briefly introduced here.

Image sensor

The image sensor is a key component in a ToF camera. The effects of most depth estimation non-idealities (bias, depth noise, multipath artifacts) are reduced when the average modulation frequency of the system increases. It is therefore important that the sensor has a high demodulation contrast (the ability to separate photo-electrons between tap A and tap B) at high modulation frequencies (hundreds of MHz). The sensor also needs a high quantum efficiency (QE) in the near-infrared wavelengths (e.g. 850 nm and 940 nm), so that less optical power is needed to generate photo-electrons in the pixel. Finally, low readout noise improves the dynamic range of the camera by making it possible to detect weak return signals (from distant or low-reflectivity objects).
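To make the frequency dependence concrete, here is a minimal sketch that propagates a fixed phase noise into depth noise using σ_d = c·σ_φ / (4π·f_mod). The phase noise value and the list of frequencies are hypothetical; in a real sensor the phase noise itself depends on signal level and demodulation contrast, which is why contrast has to hold up at high frequency for the benefit to materialize.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def depth_noise(phase_noise_rad: float, f_mod_hz: float) -> float:
    """Depth noise (metres) produced by a given phase noise at one frequency."""
    return C * phase_noise_rad / (4.0 * math.pi * f_mod_hz)


if __name__ == "__main__":
    phase_noise = 0.01  # hypothetical phase noise in radians (assumption)
    for f_mod in (20e6, 100e6, 200e6):  # illustrative frequencies
        sigma_mm = depth_noise(phase_noise, f_mod) * 1e3
        print(f"{f_mod / 1e6:5.0f} MHz -> {sigma_mm:.2f} mm")
```

For the same phase noise, a tenfold increase in modulation frequency gives a tenfold reduction in depth noise.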

Illumination

The laser driver modulates the light source (e.g. VCSEL) at a high modulation frequency. To maximize the amount of “useful” signal at the pixel for a given optical power, the optical waveform needs to have fast rise and fall times with clean edges. The combination of laser, laser driver, and PCB layout in the illumination subsystem is critical to achieving this. Some characterization is also required to find the optical power and duty cycle settings that maximize the amplitude of the fundamental in the Fourier transform of the modulation waveform. Finally, the optical power needs to be delivered safely, with safety mechanisms built in at the laser driver and system level to ensure that Class 1 eye safety limits are respected at all times.
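The duty cycle tradeoff can be sketched with an idealized rectangular waveform, whose fundamental has amplitude (2·P_peak/π)·sin(π·D) for peak power P_peak and duty cycle D. The example below evaluates that expression and cross-checks it against a single DFT bin. It assumes perfectly square edges and a fixed average power, both simplifications; real waveforms with finite rise and fall times have to be characterized on the bench.

```python
import cmath
import math


def fundamental_analytic(peak: float, duty: float) -> float:
    """Amplitude of the fundamental of an ideal rectangular waveform."""
    return 2.0 * peak / math.pi * math.sin(math.pi * duty)


def rectangular_period(peak: float, duty: float, n: int = 1000) -> list:
    """One sampled period, 'on' for the first duty*n samples."""
    on = round(duty * n)
    return [peak if k < on else 0.0 for k in range(n)]


def fundamental_numeric(samples: list) -> float:
    """Amplitude of the first harmonic, computed from a single DFT bin."""
    n = len(samples)
    bin1 = sum(s * cmath.exp(-2j * math.pi * k / n) for k, s in enumerate(samples))
    return 2.0 * abs(bin1) / n


if __name__ == "__main__":
    avg_power = 1.0  # hypothetical average optical power, arbitrary units
    for duty in (0.5, 0.35, 0.25):
        peak = avg_power / duty  # same average power, higher peak
        analytic = fundamental_analytic(peak, duty)
        numeric = fundamental_numeric(rectangular_period(peak, duty))
        print(f"duty={duty:.2f} peak={peak:.2f} fundamental={analytic:.4f} (DFT {numeric:.4f})")
```

Under these assumptions, a shorter duty cycle at constant average power raises the fundamental, but only by raising peak power, which is bounded by the laser, the driver, and the eye safety limits. At constant peak power the fundamental is largest at a 50% duty cycle.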

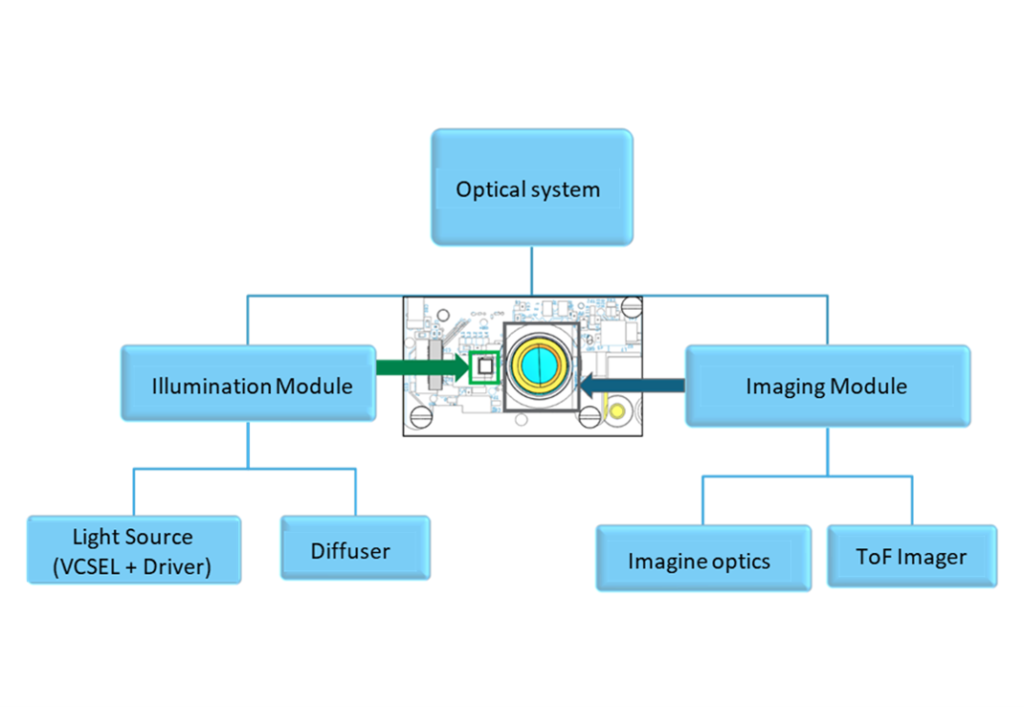

Optics

Optics play a key role in ToF cameras, which have distinct characteristics that drive special optics requirements. Firstly, the field of illumination of the light source should match the field of view of the lens for optimum efficiency. The lens itself should also have a large aperture (low f/#) for better light collection efficiency, although large apertures bring tradeoffs around vignetting, shallow depth of field, and lens design complexity. A lens design with a low chief ray angle also allows a narrower bandpass filter bandwidth, which improves ambient light rejection and therefore outdoor performance. The optical subsystem should also be optimized for the desired wavelength of operation (e.g. anti-reflective coatings, bandpass filter design, lens design) to maximize throughput efficiency and minimize stray light. There are also many mechanical requirements to ensure that optical alignment stays within the tolerances required by the end application.

Figure 2. Optical system architecture
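The link between chief ray angle and filter bandwidth can be illustrated with the usual approximation for thin-film interference filters, λ(θ) = λ₀·sqrt(1 − (sin θ / n_eff)²): the passband shifts toward shorter wavelengths as the angle of incidence grows, so the filter has to be wide enough to cover that shift. The effective index of 2.0 below is a hypothetical value chosen for illustration; the real figure comes from the filter datasheet.

```python
import math


def filter_center_wavelength(lambda0_nm: float, angle_deg: float, n_eff: float) -> float:
    """Approximate passband center of an interference filter at a given incidence angle."""
    s = math.sin(math.radians(angle_deg)) / n_eff
    return lambda0_nm * math.sqrt(1.0 - s * s)


if __name__ == "__main__":
    lambda0 = 940.0  # nm, one of the common ToF wavelengths
    n_eff = 2.0      # hypothetical effective index (assumption)
    for angle in (0, 10, 20, 30):
        shifted = filter_center_wavelength(lambda0, angle, n_eff)
        print(f"{angle:2d} deg -> center {shifted:.1f} nm (shift {lambda0 - shifted:.1f} nm)")
```

With these assumed numbers, keeping the chief ray angle near 10 degrees instead of 30 degrees cuts the shift the filter has to accommodate from roughly 30 nm to under 4 nm.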

Power Management

Power management is also critically important in a high-performance 3D ToF camera module design. The laser modulation and pixel modulation generate short bursts of high peak current, which place constraints on the power management solution. Some features at the sensor integrated-circuit (IC) level can help reduce the peak power consumption of the imager. Power management techniques can also be applied at the system level to ease the requirements on the power source (e.g. battery, USB). The main analog supplies for a ToF imager typically require a regulator with good transient response and low noise.

Depth Processing

Finally, another large part of the system-level design is the depth processing algorithm. The ToF image sensor outputs raw pixel data from which the phase information needs to be extracted. This operation involves several steps, including noise filtering, phase unwrapping, and temperature correction. The output of the phase unwrapping block is a distance measurement derived from the path travelled by the light from the laser, to the scene, and back to the pixel, often called “range” or “radial distance”. The radial distance is generally converted into point cloud information, which represents each pixel by its real-world coordinates (X, Y, Z). Often, end applications use only the “Z” image map (depth map) instead of the full point cloud. Converting radial distance into a point cloud requires knowing the lens intrinsics and distortion parameters, which are estimated during geometric calibration of the camera module. The depth processing algorithm can also output other information, such as active brightness images (amplitude of the return laser signal), passive 2D IR images, and confidence levels, all of which can be used in end applications. The depth processing can be done on the camera module itself, for example using a dedicated depth compute processor IC, or in a general-purpose application processor elsewhere in the system.
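The radial-to-Cartesian step can be sketched with a simple pinhole model. The example below assumes lens distortion has already been corrected and uses hypothetical intrinsics (`fx`, `fy`, `cx`, `cy` in pixels); a production pipeline would use the calibrated intrinsics and distortion parameters of the actual module.

```python
import math


def radial_to_xyz(u: float, v: float, radial_m: float,
                  fx: float, fy: float, cx: float, cy: float) -> tuple:
    """Convert a radial distance at pixel (u, v) into camera-frame (X, Y, Z) in metres."""
    # Normalized ray direction through the pixel: (x_n, y_n, 1).
    x_n = (u - cx) / fx
    y_n = (v - cy) / fy
    # The radial distance is measured along the ray, so Z is shorter off-axis.
    z = radial_m / math.sqrt(x_n * x_n + y_n * y_n + 1.0)
    return (x_n * z, y_n * z, z)


if __name__ == "__main__":
    # Hypothetical intrinsics for a 640x480 sensor (assumption).
    fx, fy, cx, cy = 500.0, 500.0, 320.0, 240.0
    for u, v in ((320, 240), (620, 240), (20, 40)):
        x, y, z = radial_to_xyz(u, v, 2.0, fx, fy, cx, cy)
        print(f"pixel ({u:3d}, {v:3d}) -> X={x:+.3f} Y={y:+.3f} Z={z:.3f}")
```

At the principal point Z equals the radial distance; toward the image corners Z becomes noticeably smaller than the radial distance, which is why a depth map and a range image of the same flat wall look different.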

An overview of the different system level components is shown in Table 2.

| System Level Component | Key Features |
| --- | --- |
| ToF Imager | |
| Illumination source | |
| Optics | |
| Power Management | |
| Depth Processing | |

Table 2. 3D ToF camera system level components

The ADSD3100, available today, is a CMOS indirect Time-of-Flight (iToF) imager for building 3D depth sensing and vision systems. In addition to the iToF pixel array, the chip integrates all functional blocks required for readout, including the analog-to-digital converters (ADCs), pixel biasing circuitry, and sensor control logic, enabling an efficient system implementation. The ADSD3100 interfaces electrically to a host system over a Mobile Industry Processor Interface (MIPI) Camera Serial Interface 2 (CSI-2) link. A lens plus optical bandpass filter for the imager and an infrared light source plus an associated driver are required to complete the working subsystem.
