Brief: Microsoft ToF technology is now available to industry, and Azure customers can access premium processing for enhanced 3D depth sensing that delivers improvements in spatial frequencies, ambient light resilience, pixel resolution, processing time, and more.

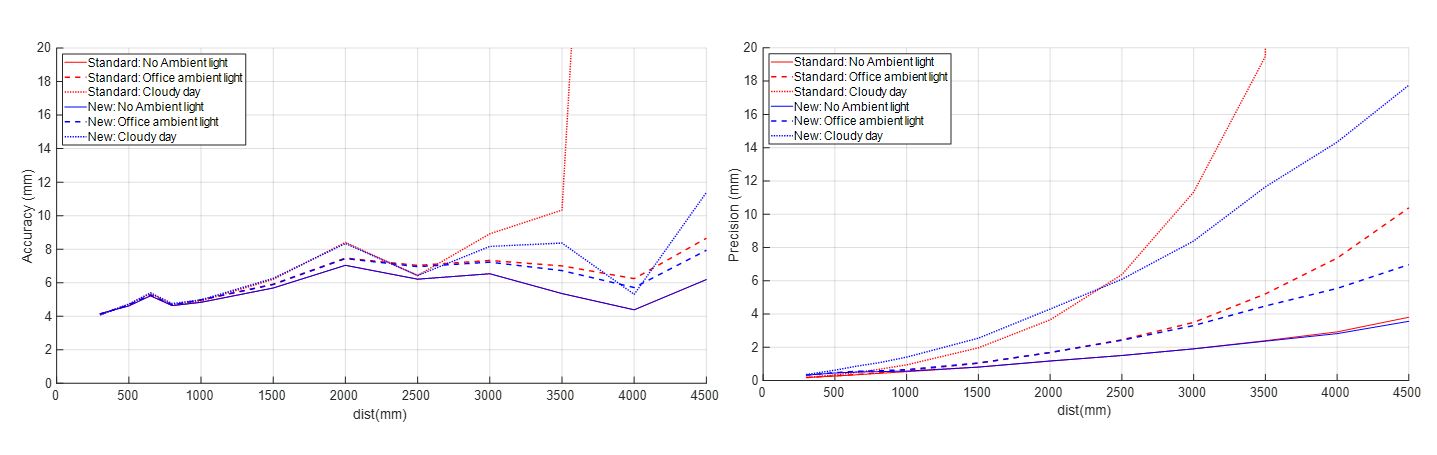

Microsoft has made a strategic decision to make its Time-of-Flight (ToF) technology available outside of Microsoft, enabling camera makers, solution providers, and ISVs to drive innovative 3D sensing capabilities across industries and scenarios such as robotics, IoT, logistics, factory automation, retail, fitness, and healthcare.

Working across industries and scenarios poses some major technological challenges. Some applications need to process depth data in real time on the Edge because of latency considerations, while also dealing with massive quantities of data that benefit greatly from the power of cloud processing. At Microsoft, we listen to partner and customer feedback, we learn from the POCs we run jointly with our close partners at customer sites, and we continue to improve our technology platform to make the application development journey for computer vision developers faster, better, and cheaper.

One of the concepts we created in our depth platform is Advanced features, which allow Azure customers to get better performance and broader capabilities. Our first Advanced feature is called Advanced Raw to Depth, a new pipeline for real-time or quasi-real-time applications. This advanced pipeline can be executed on the Edge and evaluates depth more reliably when the signal-to-noise ratio (SNR) is low, for example when objects have low near-infrared (NIR) reflectivity or in challenging environmental conditions such as daylight operation. The Advanced Raw to Depth capability allows you to keep the depth texture at high SNR.

Figure 1 below shows the difference in accuracy (left) and precision (right) for a flat wall of constant reflectivity at different distances, measured using the standard pipeline and the new advanced pipeline. As the accuracy plot shows, the standard algorithm breaks down at 3.5 m on a cloudy day, while the new advanced pipeline continues to provide reasonable data over the total range of the experiment. The new advanced pipeline also shows a 35% improvement in precision at 3 m in cloudy-day conditions compared with the standard pipeline.

Figure 1. Accuracy (left) and precision (right) of the standard and new pipelines for a flat wall of constant reflectivity at different distances.
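
For readers who want to run this kind of comparison on their own captures, here is a minimal Python sketch of how the two metrics could be computed for a flat wall at a known distance. It assumes that accuracy means the mean depth error against ground truth and that precision means the standard deviation of the measurements; the post does not spell out the exact definitions. The function name, the sample values, and the convention that 0 marks an invalid pixel are all illustrative assumptions.

```python
from statistics import mean, stdev


def accuracy_and_precision(depth_samples_mm, ground_truth_mm):
    """Return (accuracy, precision) in millimetres for one wall distance.

    Assumed definitions (not taken from the post):
      accuracy  = mean measured depth minus ground-truth distance (bias)
      precision = sample standard deviation of the measurements (noise)
    """
    # Assumption: a value of 0 marks a pixel with no valid depth.
    valid = [d for d in depth_samples_mm if d > 0]
    if len(valid) < 2:
        raise ValueError("need at least two valid depth samples")
    return mean(valid) - ground_truth_mm, stdev(valid)


# Hypothetical readings of a flat wall placed 3000 mm from the camera.
samples = [3004, 2998, 3001, 0, 3005, 2997]
bias, noise = accuracy_and_precision(samples, 3000)
print(f"accuracy (bias): {bias:+.1f} mm, precision (std dev): {noise:.1f} mm")
```

Repeating the calculation at each wall distance, once per pipeline, would give curves comparable in spirit to the ones in Figure 1.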

Figure 2 below shows how this translates into depth images. Two targets were placed in our lab with no ambient light, which is the most favorable condition for the standard pipeline. Qualitatively, the “salt and pepper” noise disappears from the walls, the far target, and the ceiling, which indicates that the new pipeline can produce consistent data at a lower signal-to-noise ratio.

Figure 2. Depth images of two targets placed in our lab with no ambient light, the most favorable condition for the standard pipeline.

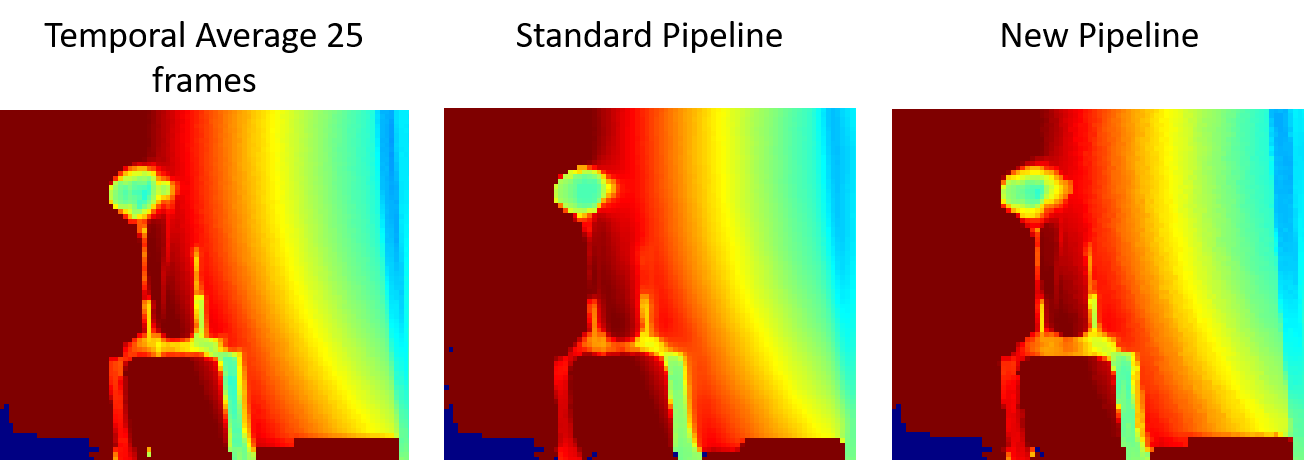

Beyond accuracy and precision, notice that the edges are better preserved, which reduces, and in many cases mitigates, the so-called “webbing” effect. Figure 3 shows the temporal average of 25 depth frames captured in the lab with no ambient light (left), the result of the standard pipeline (middle), and the result of the new pipeline (right). The new pipeline provides a more detailed image, preserving more of the original information present in the temporally averaged depth image.

Figure 3. Temporal average of 25 depth frames captured in the lab with no ambient light (left), the standard pipeline (middle), and the new pipeline (right).

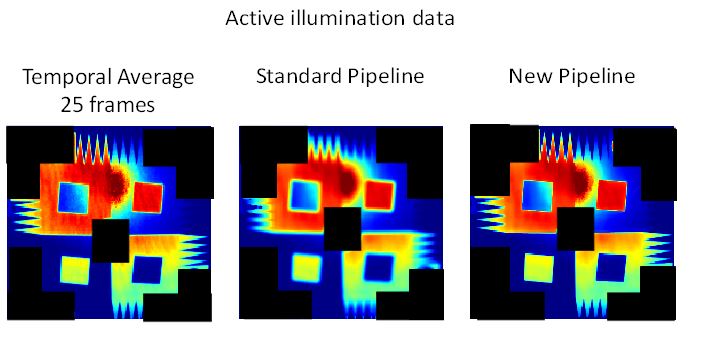
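
A temporally averaged reference like the one on the left can be approximated with a per-pixel mean over a stack of frames. The sketch below is one simple way to do it in plain Python, not the method used to produce Figure 3. The `temporal_average` helper, the tiny 2x2 frames, and the use of 0 as an invalid-pixel marker are assumptions made for illustration.

```python
def temporal_average(frames, invalid=0):
    """Average a list of equally sized depth frames pixel by pixel.

    Each frame is a list of rows. Pixels equal to `invalid` are skipped,
    and a pixel that is invalid in every frame stays `invalid` in the output.
    """
    if not frames:
        raise ValueError("need at least one frame")
    height, width = len(frames[0]), len(frames[0][0])
    averaged = []
    for y in range(height):
        row = []
        for x in range(width):
            # Collect only the valid readings of this pixel across all frames.
            valid = [f[y][x] for f in frames if f[y][x] != invalid]
            row.append(sum(valid) / len(valid) if valid else invalid)
        averaged.append(row)
    return averaged


# Three hypothetical 2x2 depth frames in millimetres (0 = no valid depth).
frames = [
    [[1000, 0], [2000, 0]],
    [[1010, 1500], [0, 0]],
    [[990, 1520], [2020, 0]],
]
result = temporal_average(frames)
print(result)
```

With real data you would pass 25 full-resolution frames instead of the toy frames above. Skipping invalid pixels rather than averaging them in as zeros keeps dropouts from pulling the reference depth toward the camera.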

In addition to the advances in depth performance, this first Advanced feature has been developed to produce a better-quality active illumination image. This gives our customers, for example, the possibility of extracting information in low-light conditions, where conventional cameras start to produce very noisy data. Figure 4 shows the active illumination image of the same scene and targets discussed in Figure 2, with the temporal average of 25 frames included for reference (left). For simplicity, the foreground panel has been magnified. In the active illumination data, the blue areas correspond to black-painted surfaces (low SNR), while the red areas represent white surfaces (high SNR). Note the specular reflection affecting both panels, especially the black one, and how the blur is restricted without affecting the top-right square. The new pipeline clearly provides a sharper image, as shown by the definition of the QR codes, the different sawtooth patterns, and the square shapes at the center of the target.

Figure 4. Active illumination image of the targets shown in Figure 2, with the temporal average of 25 frames on the left for reference. The foreground panel has been magnified; blue areas correspond to black-painted surfaces (low SNR) and red areas to white surfaces (high SNR).

We invite you to comment on this blog post and share your feedback and thoughts.

If you have a question about our program and are interested in engaging, please email us at azure3d@microsoft.com

Hi, thanks for the write-up. Will any of these functions be included as part of the Azure Kinect DK SDK, or as a separate library? I noticed the Intel RealSense SDK has post-processing filters to smooth out depth data.

Awesome work, that looks like some really promising results!

Do I understand it correctly that these improvements are purely software-based?

If so, will they also be available for the current Kinect for Azure Devices in the future?

Hi Cristopher,

Thank you. Yes, you understood correctly: the improvements are purely software based. Regarding your other question, it may be possible, but as far as I know that decision has not been made yet.

Thank you! I think this is definitely an improvement that a lot of Azure Kinect users (myself included) might find interesting!