Copilot agent mode is the next evolution in AI-assisted development—and it’s now generally available in the Visual Studio June update.

Agent mode turns GitHub Copilot into an autonomous pair programmer capable of handling multi-step development tasks from end to end. It builds a plan, executes it, adapts along the way, and loops through tasks until completion.

Agent mode can analyze your codebase, propose and apply edits, run commands, respond to build or lint errors, and self-correct. You can integrate additional tools from MCP servers to expand the agent’s capabilities. Ask mode, on the other hand, works with you conversationally: you guide it with prompts, give it context, and steer the direction. Where ask mode helps you think through a problem, agent mode executes the goal.

To try agent mode out, open Copilot Chat, click the Ask button, and switch to Agent.

From Goals to Working Code

Agent mode is built for real-world scenarios. You can give it high-level tasks like:

- “Add ‘buy now’ functionality to my product page.”

- “Add a retry mechanism to this API call with exponential backoff and a unit test.”

- “Create a new Blazor web app that does X”

Copilot will try to identify the right files, apply changes, run builds, and fix errors, all while keeping you in control with editable previews, undo, and a live action feed. Agent mode works best when it understands your intent clearly: the more precise your instructions and context, the better the results.
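To make the second task above concrete, here is a minimal sketch of the kind of result you might ask for: a retry helper with exponential backoff plus a small test. It is illustrative only and is not output from Copilot. The names `retry_with_backoff`, `max_attempts`, and `base_delay` are hypothetical, and the `sleep` parameter is injected so the test can run without waiting.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def retry_with_backoff(
    call: Callable[[], T],
    max_attempts: int = 4,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run `call`, retrying on any exception with exponentially growing delays."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return call()
        except Exception:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error
            # Delay doubles each time: base_delay, 2 * base_delay, 4 * base_delay, ...
            sleep(base_delay * 2 ** attempt)
    raise AssertionError("unreachable")


def test_retries_until_success() -> None:
    """A call that fails twice should succeed on the third attempt."""
    attempts = {"count": 0}
    recorded_delays = []

    def flaky_api_call() -> str:
        attempts["count"] += 1
        if attempts["count"] < 3:
            raise ConnectionError("temporary failure")
        return "ok"

    result = retry_with_backoff(flaky_api_call, sleep=recorded_delays.append)
    assert result == "ok"
    assert recorded_delays == [0.5, 1.0]


test_retries_until_success()
```

A prompt that spells out these details (which errors to retry, how many attempts, how the delay grows, what the test should cover) gives the agent much more to work with than "add retries".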

Tool calling

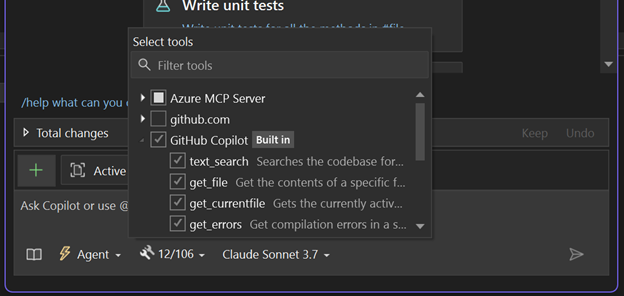

At its core, agent mode uses tool calling to access a growing set of capabilities inside Visual Studio. When given a goal, it selects and executes the right tools step by step. You can explore the available tools via the Tools dropdown (the icon with two wrenches) in the Copilot Chat window.
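As a rough mental model of tool calling, the sketch below dispatches a sequence of steps to named tools and collects the results. It is a simplification under stated assumptions, not how Visual Studio implements it: the tools `read_file` and `run_build` are hypothetical stand-ins, and the plan is fixed up front, whereas a real agent picks each next step based on the previous result.

```python
from typing import Callable, Dict, List, Tuple


# Hypothetical tools; real ones would touch the file system or the build system.
def read_file(path: str) -> str:
    return f"<contents of {path}>"


def run_build(target: str) -> str:
    return f"build of {target} succeeded"


# Registry mapping tool names to the functions that implement them.
TOOLS: Dict[str, Callable[[str], str]] = {
    "read_file": read_file,
    "run_build": run_build,
}


def run_plan(plan: List[Tuple[str, str]]) -> List[str]:
    """Execute each (tool_name, argument) step in order and collect the results."""
    results = []
    for tool_name, argument in plan:
        tool = TOOLS.get(tool_name)
        if tool is None:
            # Unknown tools are reported rather than raising, so the loop can continue.
            results.append(f"unknown tool: {tool_name}")
            continue
        results.append(tool(argument))
    return results


for line in run_plan([("read_file", "Program.cs"), ("run_build", "MyApp")]):
    print(line)
```

Adding a capability in this model means adding one more entry to the registry, which is the same idea behind extending the agent with extra tools.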

Want it to be more powerful? You can extend the agent by adding tools from an ecosystem of Model Context Protocol (MCP) servers.

Agent mode + MCP

Model Context Protocol (MCP) is a protocol designed to connect AI agents with a variety of external tools and services, similar to how HTTP standardized web communication. The aim is to enable any client to integrate robust tool servers such as databases, code search, and deployment systems, without writing custom connections for each tool. With MCP, the agent can be configured to access rich, real-time context from across your development stack:

- GitHub repositories

- CI/CD pipelines

- Monitoring and telemetry systems

- And more

MCP is open source and extensible, so you can connect any compatible server. Common integrations include GitHub, Azure, and external providers like Perplexity. Check out the MCP official server repository to learn more.

Visual Studio uses the native mcp.json file for MCP server configuration, and also detects compatible configurations set up by other development environments, such as .vscode/mcp.json. Learn more here.
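For orientation, the sketch below builds and prints a minimal configuration of the general shape such a file takes. Treat the layout as an assumption: the top-level `servers` key and the per-server `url` field follow the format commonly used for `.vscode/mcp.json`, and the server name `example-server` and its URL are hypothetical placeholders. Check the linked documentation for the exact schema before relying on it.

```python
import json

# Assumed layout: a top-level "servers" object that maps a server name to its settings.
# The server name and URL below are placeholders, not a real endpoint.
config = {
    "servers": {
        "example-server": {
            "url": "https://example.com/mcp/",
        }
    }
}


def render_config(cfg: dict) -> str:
    """Serialize the configuration as indented JSON, ready to save as mcp.json."""
    return json.dumps(cfg, indent=2)


print(render_config(config))
```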

Once connected, the agent can take smarter actions. For example, if you add tools from the GitHub MCP server, your agent can retrieve and create issues on your behalf, check repo history, search GitHub, etc. A Figma MCP server provides the agent access to your design mockups.
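Under the hood, MCP messages are JSON-RPC 2.0, and a client invokes a server tool with the `tools/call` method. The sketch below shows roughly what such a request could look like for an issue-creation tool. The tool name `create_issue` and its arguments are used here as illustrative assumptions, so consult the server's own tool list for the real names and parameters.

```python
import json
from typing import Any, Dict


def make_tool_call(request_id: int, tool_name: str, arguments: Dict[str, Any]) -> str:
    """Serialize an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)


# Hypothetical tool name and arguments, for illustration only.
request = make_tool_call(
    1,
    "create_issue",
    {"owner": "octo-org", "repo": "demo", "title": "Retry fails on timeout"},
)
print(request)
```

In agent mode you never write these messages yourself; the agent decides when a tool is relevant and issues the call for you.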

This is what makes agent mode truly extensible: it plugs into your environment and acts with real understanding of your tools, systems, and workflows.

We’re incredibly excited about this new prompt-first experience, and how it empowers developers to move faster while staying in full control. We’re continuously evolving it, and your input plays a big role, so please keep the feedback coming here. And make sure to include your logs: they help us get to the root cause faster and fix the problem.

Beyond agent mode: more AI updates to try in the June release

But wait, we’re not done! The team included additional features in this release that are intended to improve your experience with Copilot in Visual Studio.

- Reuse and Share Prompt Files Easily: Create reusable prompt files in your repository, allowing you and your team to share and run custom prompts with ease. A prompt file is a standalone markdown file containing a prompt that you can run directly in chat, reducing the number of requests you need to type.

- Gemini 2.5 Pro and GPT-4.1 are now available: Developers like to switch between models in chat and agent mode to get the results they are looking for. Gemini 2.5 Pro and GPT-4.1 are now available, offering improved reasoning and generation capabilities for your coding workflows.

- Reference the Output Window as part of your chat context: Troubleshoot runtime behavior more effectively.

- Monitor your usage of GitHub Copilot directly from Visual Studio.

- General availability of the Agents Toolkit 17.14 with improvements for building Microsoft 365 apps and intelligent agents.


MCP support itself is still in preview?