The world has been busy building renewable energy these last few years. Since the costs of solar and wind energy have dropped below the costs of operating existing fossil fuel plants (particularly coal), wind and solar have grown to supply a significant share of electricity globally. These renewable energy sources create windows of low-cost, low-emissions power when they generate.

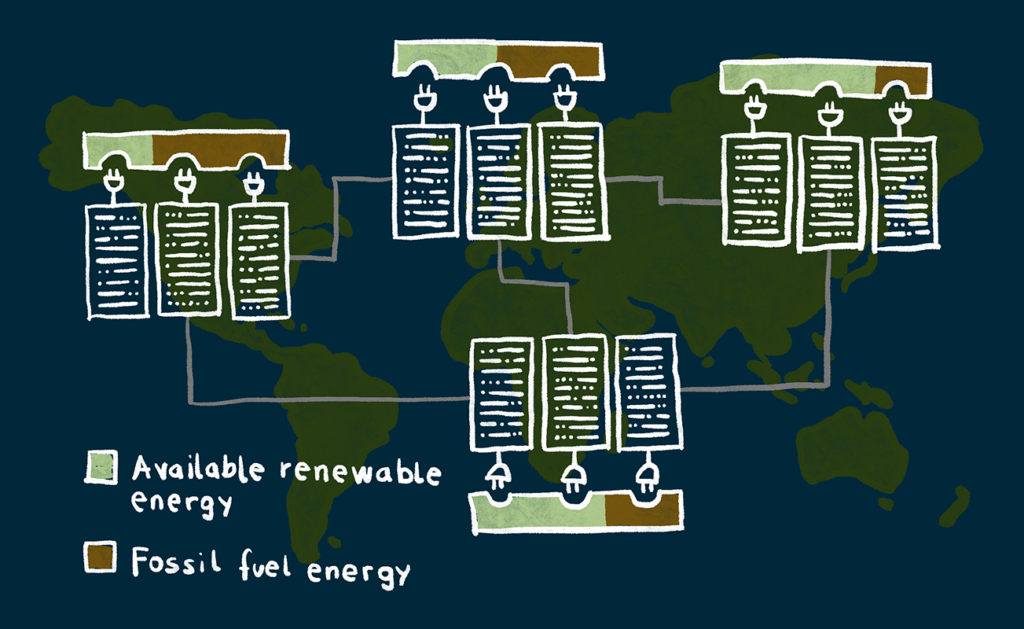

In any given place, renewable generation will rise and fall depending on the weather and time of day, but there will always be renewable generation somewhere in the world. If a region has more renewable supply than it needs, it’s often not possible to move that surplus to another region. It is not feasible to build electricity interconnectors between continents, say between Europe and the US. However, we already have global networks of datacentres connected by fibre. Rather than moving electricity over long distances, it’s much cheaper and easier to move electricity consumption over existing fibre connections. Instead of moving renewable energy, move the thing that was going to consume it, in this case, compute. This has a secondary benefit of shifting the whole demand curve towards times of renewable generation, reducing the requirement for fossil fuel or energy storage backup on the grid.

There is an old mantra for datacentre operation, “follow the moon”, where batch computation jobs were run in whichever datacentre was in night-time to avail of cheaper night-time electricity. Welcome to the new world of “follow the green”.

The Hidden Compute Behind Everyday Life

You arrive home and plug in your car. You don’t worry about when it actually charges, because your electricity company gives you free electricity to power all of your driving, in return for being able to use the battery to stabilize the grid at night. To do this, your electricity company uses computation to predict and analyze electricity prices, weather, electricity grid conditions, what time you need your car ready, and other variables to optimize when your car charges.

This type of forecasting and optimization powers the modern applications that you and many people around the world use, and that make up the digital economy. It requires a vast amount of compute, and most of this takes place in large datacentres around the world, also known as the cloud. When and where these computation jobs are run has a significant impact on the carbon they release. If a job is routed to the datacentre that has the highest share of renewable energy, and the lowest carbon emissions per unit compute at that time, the resultant emissions for the same computation can be reduced considerably.

Applying this type of geo-routing also has a long-term benefit: as energy demand moves to places where renewable energy is plentiful at that time, and away from places where it isn’t available, the whole demand curve in each region starts to move towards renewable generation. This allows more renewable energy on the grid and reduces the need for fossil fuel reserve or energy storage to cover times when renewables aren’t generating.

How do I do it?

Simple: when writing cloud applications, add functionality to check which region has the lowest carbon emissions intensity at that time, and schedule the computation there. This data is available from services such as WattTime.org and ElectricityMap.org. When using digital services, ask the providers if they could be built to run on green energy wherever it’s available globally.
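As a rough illustration of that check, here is a minimal Python sketch. It assumes you have already fetched the current carbon intensity (in gCO2 per kWh) for each candidate region from a data provider; the region names, the numbers, and the function name `pick_greenest_region` are all made up for the example and are not part of any provider's API.

```python
from typing import Mapping


def pick_greenest_region(intensity_g_per_kwh: Mapping[str, float]) -> str:
    """Return the region with the lowest carbon intensity (gCO2 per kWh)."""
    if not intensity_g_per_kwh:
        raise ValueError("no regions to choose from")
    # min() iterates over the region names and compares their intensities.
    return min(intensity_g_per_kwh, key=intensity_g_per_kwh.get)


if __name__ == "__main__":
    # Hypothetical readings; in practice these would come from a
    # carbon-intensity data service, refreshed shortly before scheduling.
    readings = {"region-a": 120.0, "region-b": 410.0, "region-c": 630.0}
    print("Schedule the job in:", pick_greenest_region(readings))
```

A real scheduler would also need to consider things like data residency, latency, and available capacity in each region, which this sketch leaves out.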

The differences can be stark. In “Balancing Power Systems With Datacenters Using a Virtual Interconnector”, we built a simple geo-routing service that routed computation jobs to the cleanest datacentre at that time. It resulted in a 31.3% increase in the renewable portion of the energy powering the computation and a corresponding decrease in the overall emissions. At certain times of very high wind or solar generation, when some of it is being curtailed (or dumped) because there is not enough local demand, running computation in that area results in zero-emissions computation which can actually make money, as power market prices can go negative when there is too much power. This situation is happening more and more frequently as grids continue to add renewable energy, so the opportunity to reduce emissions by thoughtfully routing compute is increasing over time. It’s worth noting that routing computation between datacentres increases network load, which consumes energy, but for large computation jobs this will be more than offset by running those jobs in datacentres with low emissions intensity at that time. The important metric to optimize is the gCO2 released per unit compute.
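To make the network trade-off concrete, here is a small Python sketch that compares the total gCO2 of running a job locally against running it remotely with a fixed transfer overhead. The `Site` class, the function names, and every number are hypothetical, chosen only to show the arithmetic; they are not taken from the paper.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Site:
    name: str
    intensity: float  # grid carbon intensity in gCO2 per kWh (assumed input)


def job_emissions(site: Site, compute_kwh: float, transfer_g: float = 0.0) -> float:
    """Total gCO2 for one job: compute energy times intensity, plus transfer."""
    return compute_kwh * site.intensity + transfer_g


def choose_site(local: Site, remotes: Sequence[Site],
                compute_kwh: float, transfer_g: float) -> Site:
    """Pick the site with the lowest total gCO2.

    transfer_g (gCO2 for moving the job over the network) is only charged
    when the job leaves the local site.
    """
    best, best_g = local, job_emissions(local, compute_kwh)
    for remote in remotes:
        g = job_emissions(remote, compute_kwh, transfer_g)
        if g < best_g:
            best, best_g = remote, g
    return best


if __name__ == "__main__":
    local = Site("local", 400.0)    # hypothetical fossil-heavy grid
    remote = Site("remote", 100.0)  # hypothetical wind-heavy grid
    # Same transfer overhead (150 gCO2), two different job sizes in kWh.
    for kwh in (10.0, 0.1):
        print(kwh, "kWh ->", choose_site(local, [remote], kwh, 150.0).name)
```

With these made-up figures, the 10 kWh job is cleaner at the remote site (1,150 gCO2 against 4,000 gCO2 locally), while the 0.1 kWh job is better left where it is (40 gCO2 locally against 160 gCO2 remotely), because the transfer overhead outweighs the saving.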

Call to Action

So. Writing code? Write it so that it follows renewable energy around the world to drastically reduce your and your customers’ carbon emissions. Find out more at principles.green.
