Hello prompt engineers,

Over the past few weeks, we’ve been extending JetchatAI’s sliding window, which manages the size of chat API calls so that they stay under the model’s token limit. The code we’ve written so far uses a VERY rough estimate of the number of tokens in our LLM requests:

```kotlin
val tokens = text.length / 4 // hack!
```

This very simple approximation is used to calculate prompt sizes for the sliding window and history summarization functions. Because the estimate is inaccurate, it either wastes prompt space (when it overestimates) or risks exceeding the prompt token limit anyway (when it underestimates).

It turns out that there is an Android-compatible open-source tokenizer for OpenAI models, JTokkit, so this week we’ll update the Tokenizer class to use this more accurate approach.

More accurate token counts

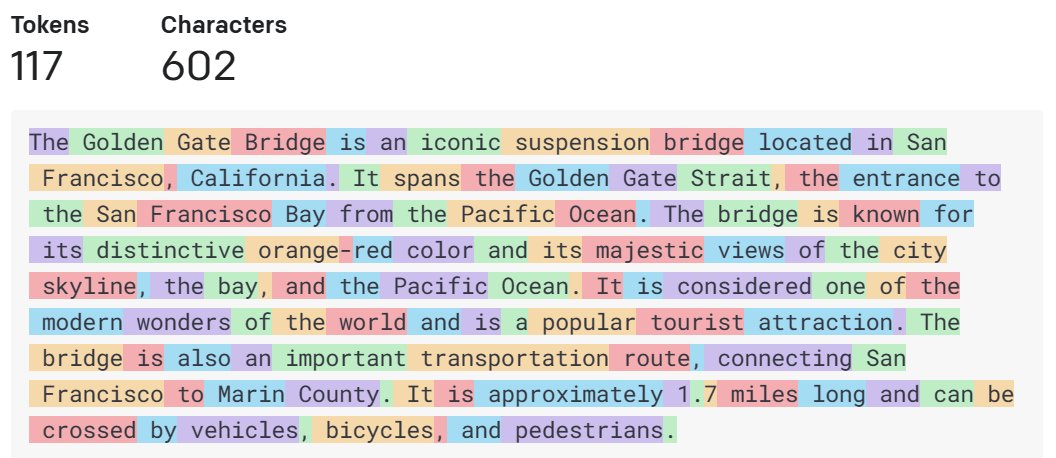

Here are some strings from past blog posts, evaluated with the Java tokenizer and OpenAI’s online version. The first example is shown here is a screenshot from the OpenAI website tokenizer – it’s a response from the LLM for the query “tell me about the golden gate bridge” with the tokens highlighted in alternating colors:

Here’s a table comparing the original “divide by 4” estimate to the JTokkit open-source package for a few different context strings:

| Content | Original “/4” estimate (tokens) | JTokkit GPT-3.5 count (tokens) |
|---|---|---|
| The Golden Gate Bridge is an iconic suspension bridge located in San Francisco, California. It spans the Golden Gate Strait, the entrance to the San Francisco Bay from the Pacific Ocean. The bridge is known for its distinctive orange-red color and its majestic views of the city skyline, the bay, and the Pacific Ocean. It is considered one of the modern wonders of the world and is a popular tourist attraction. The bridge is also an important transportation route, connecting San Francisco to Marin County. It is approximately 1.7 miles long and can be crossed by vehicles, bicycles, and pedestrians. | 150 | 117 |
| What's the weather in San Diego | 7 | 8 |
| Some popular places in San Francisco are:<br>1. Golden Gate Bridge<br>2. Alcatraz Island<br>3. Fisherman's Wharf<br>4. Lombard Street<br>5. Union Square<br>6. Chinatown<br>7. Golden Gate Park<br>8. Painted Ladies (Victorian houses)<br>9. Pier 39<br>10. Cable Cars | 58 | 71 |
| `currentWeather{ "latitude": "37.773972", "longitude": "-122.431297" }` | 18 | 24 |
| `{latitude:"37.773972",longitude:"-122.431297",temperature:"68",unit:"F",forecast:"[This Afternoon, Sunny. High near 68, with temperatures falling to around 64 in the afternoon. West southwest wind around 14 mph.]"}` | 53 | 57 |

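These examples show that the JTokkit count can differ substantially from the previous estimate, and not always in the same direction: the “/4” estimate overestimated the Golden Gate Bridge description but underestimated the other four strings, which is the riskier error when trying to stay under a token limit. Because JTokkit implements the same encodings that OpenAI’s models use, its counts should be much more accurate.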
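To put a number on “differ substantially”, the short sketch below computes the relative error of the “/4” estimate for each row of the table. The `relativeError` and `printRelativeErrors` names are hypothetical helpers for illustration, not part of JetchatAI or JTokkit; the token counts are copied from the table.

```kotlin
// Relative error of the rough "/4" estimate compared with the JTokkit count.
// Positive values are overestimates (wasted prompt space); negative values are
// underestimates (risk of exceeding the token limit).
fun relativeError(estimate: Int, actual: Int): Double =
    (estimate - actual).toDouble() / actual

// (label, "/4" estimate, JTokkit count) for each row of the table
val samples = listOf(
    Triple("Golden Gate Bridge description", 150, 117),
    Triple("San Diego weather question", 7, 8),
    Triple("Popular places list", 58, 71),
    Triple("currentWeather function call", 18, 24),
    Triple("Weather function result", 53, 57),
)

// Prints each row's error as a signed percentage
fun printRelativeErrors() {
    for ((label, estimate, actual) in samples) {
        println("$label: ${"%+.1f".format(relativeError(estimate, actual) * 100)}%")
    }
}
```

For these five strings the error ranges from -25% (the `currentWeather` call) to about +28% (the Golden Gate Bridge description), so no single fudge factor would fix the rough estimate.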

Adding to the Jetchat-based sample

In theory, we should be able to replace our existing rough-estimate function with the more accurate Java package without affecting the functionality of the JetchatAI demo. Here’s the PR with the code changes, which add the package and then update the Tokenizer class.

First, add the package in build.gradle.kts:

```kotlin
implementation("com.knuddels:jtokkit:0.6.1")
```

Then follow the instructions in the JTokkit getting started docs to update the Tokenizer class – both counting tokens and truncating a string based on token length:

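```kotlin
import com.knuddels.jtokkit.Encodings
import com.knuddels.jtokkit.api.Encoding
import com.knuddels.jtokkit.api.EncodingRegistry
import com.knuddels.jtokkit.api.ModelType

class Tokenizer {
    companion object {
        // Lazy registry: encodings are only loaded the first time they are requested
        val registry: EncodingRegistry = Encodings.newLazyEncodingRegistry()

        // The encoding used by gpt-3.5-turbo, looked up once and reused
        private val encoding: Encoding by lazy {
            registry.getEncodingForModel(ModelType.GPT_3_5_TURBO)
        }

        /** Returns the number of tokens in [text], or 0 if it is null. */
        fun countTokensIn(text: String?): Int {
            if (text == null) return 0
            return encoding.countTokens(text)
        }

        /** Truncates [text] so that it encodes to at most [tokenLimit] tokens. */
        fun trimToTokenLimit(text: String?, tokenLimit: Int): String? {
            if (text == null) return null
            val encoded = encoding.encodeOrdinary(text, tokenLimit)
            if (encoded.isTruncated) {
                // Decode only the tokens that fit within the limit
                return encoding.decode(encoded.tokens)
            }
            return text // wasn't truncated
        }
    }
}
```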

The more accurate token count should make the sliding window algorithm more efficient: each API request can include more context, with less risk of exceeding the token limit.
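The sliding window code itself isn’t part of this change, so the following is only a minimal sketch of how a token counter plugs into one: walk backwards from the newest message and keep messages until the token budget is spent. The `ChatMessage` type and `slidingWindow` function are hypothetical, not JetchatAI’s actual classes. The counter is passed in as a function, which makes it easy to swap the “/4” estimate for the JTokkit count.

```kotlin
// Hypothetical message type for illustration; JetchatAI's own classes differ.
data class ChatMessage(val role: String, val content: String, val name: String? = null)

/**
 * Keeps the most recent messages whose combined token count fits within
 * [tokenBudget]. [countTokens] is pluggable: the rough "/4" estimate or a
 * JTokkit-based count can be passed in.
 */
fun slidingWindow(
    history: List<ChatMessage>,
    tokenBudget: Int,
    countTokens: (String) -> Int,
): List<ChatMessage> {
    val window = ArrayDeque<ChatMessage>()
    var used = 0
    // Walk from the newest message backwards; stop at the first one that doesn't fit
    for (message in history.asReversed()) {
        val cost = countTokens(message.content)
        if (used + cost > tokenBudget) break
        window.addFirst(message) // preserve chronological order
        used += cost
    }
    return window.toList()
}
```

With the updated class, a call might look like `slidingWindow(history, tokenBudget) { Tokenizer.countTokensIn(it) }`, where `tokenBudget` is whatever remains after reserving room for the model’s response. Stopping at the first message that doesn’t fit (rather than skipping it and continuing) keeps the window contiguous, so the model never sees a conversation with gaps in it.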

Pricing

Besides keeping requests under the token limit, the other reason you might want to count tokens is to track usage: OpenAI APIs are charged per thousand tokens (see the pricing page). For example (in USD), the cheapest GPT-4 API costs 3 cents per 1K input tokens and 6 cents per 1K output tokens. The cheapest GPT-3.5 Turbo model averages less than 1/5th of a cent per 1K tokens, twenty to thirty times cheaper than GPT-4!
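Turning token counts into a cost estimate is a matter of multiplying each side of the request by its per-1K price. The sketch below hard-codes the GPT-4 prices quoted above purely for illustration; `ModelPricing`, `GPT4_PRICING`, and `estimateCostUsd` are hypothetical names, and real prices change over time, so check the pricing page before relying on them.

```kotlin
// Prices in USD per 1K tokens (hypothetical type; values as quoted in this post)
data class ModelPricing(val inputPer1K: Double, val outputPer1K: Double)

// GPT-4 prices from the paragraph above; these will go out of date
val GPT4_PRICING = ModelPricing(inputPer1K = 0.03, outputPer1K = 0.06)

// Cost = input tokens x input price + output tokens x output price
fun estimateCostUsd(pricing: ModelPricing, inputTokens: Int, outputTokens: Int): Double =
    inputTokens / 1000.0 * pricing.inputPer1K +
        outputTokens / 1000.0 * pricing.outputPer1K
```

For example, a GPT-4 request with 2,000 prompt tokens and a 500-token response works out to 2 × $0.03 + 0.5 × $0.06 = $0.09.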

You might choose to calculate, log, and track token usage just during your research, development, and testing to get a sense of how much your service is going to cost. With appropriate permissions and safeguards, you might also log and track token usage in production, where it could be used to create a per-user quota or rate-limiting system to prevent excessive costs or abuse.
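A per-user quota could start as simply as a running total that is checked before each request. The `TokenQuota` class below is a hypothetical, in-memory sketch for illustration only; a production version would also need persistence, a reset period (daily or monthly, for example), and the privacy safeguards mentioned above.

```kotlin
// Hypothetical in-memory per-user token quota (illustration only)
class TokenQuota(private val maxTokensPerUser: Int) {
    private val usage = mutableMapOf<String, Int>()

    /** Tokens the user has consumed so far. */
    @Synchronized
    fun used(userId: String): Int = usage[userId] ?: 0

    /**
     * Records [tokens] against [userId] if they fit within the remaining quota.
     * Returns false, and records nothing, if the request would exceed it.
     */
    @Synchronized
    fun tryConsume(userId: String, tokens: Int): Boolean {
        val newTotal = used(userId) + tokens
        if (newTotal > maxTokensPerUser) return false
        usage[userId] = newTotal
        return true
    }
}
```

Checking the limit before recording usage means a rejected request leaves the user’s total unchanged, so they can still make smaller requests that fit.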

Not quite there…

While we can now calculate the tokens in user- and LLM-generated queries and responses more accurately, we still can’t determine the exact size of the payload the model processes, because extra formatting is added around each message when a chat completion request is constructed. This JTokkit recipe contains some tips on how to determine the total token count for a request if you’d like even more accurate results.
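As a rough sketch of what accounting for that formatting involves, the function below follows the approach described in OpenAI’s cookbook: each message costs its role and content tokens plus a fixed per-message overhead, an optional `name` adds its own tokens plus an adjustment, and a few extra tokens prime the assistant’s reply. The `ChatMessage` type and `countChatTokens` function are hypothetical, and the overhead defaults (3, 1, and 3) are assumptions that differ between model versions, so verify them against the recipe for the model you call.

```kotlin
// Hypothetical message type for illustration; JetchatAI's own classes differ.
data class ChatMessage(val role: String, val content: String, val name: String? = null)

/**
 * Estimates the prompt tokens for a chat completion request.
 * The overhead defaults are assumptions; they vary by model version.
 */
fun countChatTokens(
    messages: List<ChatMessage>,
    tokensPerMessage: Int = 3, // fixed formatting overhead per message
    tokensPerName: Int = 1,    // adjustment when a message has a name
    replyPriming: Int = 3,     // tokens that prime the assistant's reply
    countTokens: (String) -> Int,
): Int {
    var total = 0
    for (message in messages) {
        total += tokensPerMessage
        total += countTokens(message.role)
        total += countTokens(message.content)
        val name = message.name
        if (name != null) {
            total += countTokens(name) + tokensPerName
        }
    }
    return total + replyPriming
}
```

With the JTokkit-based class, the counter would be `{ Tokenizer.countTokensIn(it) }`, for example `countChatTokens(messages) { Tokenizer.countTokensIn(it) }`.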

Resources and feedback

See the Azure OpenAI documentation for more information on the wide variety of services available for your apps.

We’d love your feedback on this post, including any tips or tricks you’ve learned from playing around with ChatGPT prompts.

If you have any thoughts or questions, use the feedback forum or message us on Twitter @surfaceduodev.

There will be no livestream this week, but you can check out the archives on YouTube.
