Hello prompt engineers,

OpenAI has been in the news a lot recently, with the release of GPT-4 and the integration of Large Language Model (LLM)-driven features into a variety of products and services including Bing, GitHub, and Microsoft 365 applications.

Inspired by Syncfusion’s blog post on adding ChatGPT to their .NET Blazor text editor, in this post I’m going to add a similar feature to our existing Android Source Editor sample. You can view Syncfusion’s C# implementation on GitHub and our simplified Kotlin version in this pull request.

Get started with OpenAI

To get started with OpenAI, sign up on the platform developers page and take a look at the documentation. Although there is a free tier that provides a limited number of requests, I signed up for a paid account (and set a reasonable usage limit). Once you have an account, you can immediately try the interactive chat experience at chat.openai.com.

If you are completely new to ChatGPT, consider following the quickstart and reviewing some examples before continuing.

We can start testing our prompt ideas directly in the ChatGPT UI, using the HTML content embedded in the demo.

These are two prompts that we’ll add to our Android demo:

```kotlin
SHORTEN = "Strip away extra words in the below html content and provide a clear message as html content.\n\n"
GRAMMARCHECK = "Check grammar and spelling in the below html content and provide the result as html content.\n\n"
```

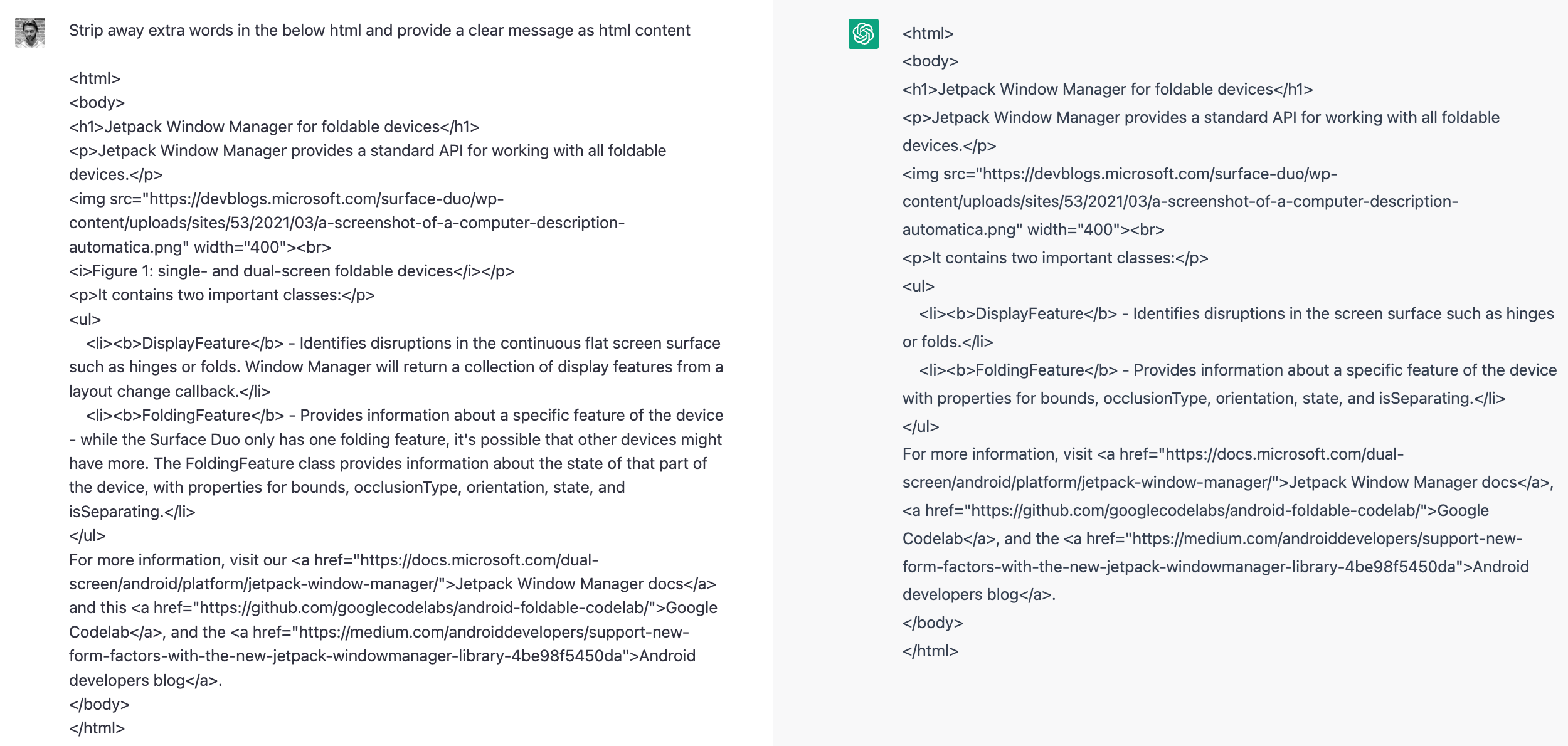

Here’s a screenshot showing the test ChatGPT interaction – the entire query consists of the prompt text followed by the HTML content, and the output contains the result:

Figure 1: Using ChatGPT to summarize an existing HTML page – prompt on the left and resulting ‘summarized’ HTML on the right

Test both prompts: a short summary and a grammar- and spell-checked version of the content. To better test the spell-checking feature, add some deliberate typos and grammatical errors to your test data. You can continue experimenting with different prompts to produce many kinds of text output, but once you’re finished playing, keep reading to get started with the developer API.
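Because each query is just the prompt prefix followed by the HTML, assembling one in code is a simple concatenation. The sketch below assumes the two prompt strings shown above; the `buildQuery` helper and the `SAMPLE_HTML` test data (with intentional mistakes) are hypothetical and not part of the sample app:

```kotlin
// The two prompt prefixes from this post; each ends with a blank line.
const val SHORTEN =
    "Strip away extra words in the below html content and provide a clear message as html content.\n\n"
const val GRAMMARCHECK =
    "Check grammar and spelling in the below html content and provide the result as html content.\n\n"

// Hypothetical test data with deliberate spelling and grammar mistakes.
const val SAMPLE_HTML =
    "<p>The editor have a <b>preveiw</b> pane that show the renderd page.</p>"

// Hypothetical helper: the full query is the prompt text followed by the HTML content.
fun buildQuery(prompt: String, html: String): String = prompt + html
```

For example, `buildQuery(GRAMMARCHECK, SAMPLE_HTML)` produces the same text you would paste into the ChatGPT UI when testing the grammar prompt.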

OpenAI API

Because we want to access ChatGPT from an Android application, we’ll need to use the OpenAI API. The API consists of HTTPS requests and responses with JSON bodies, and each request carries an authentication header containing your API key, which is used for access control and billing.

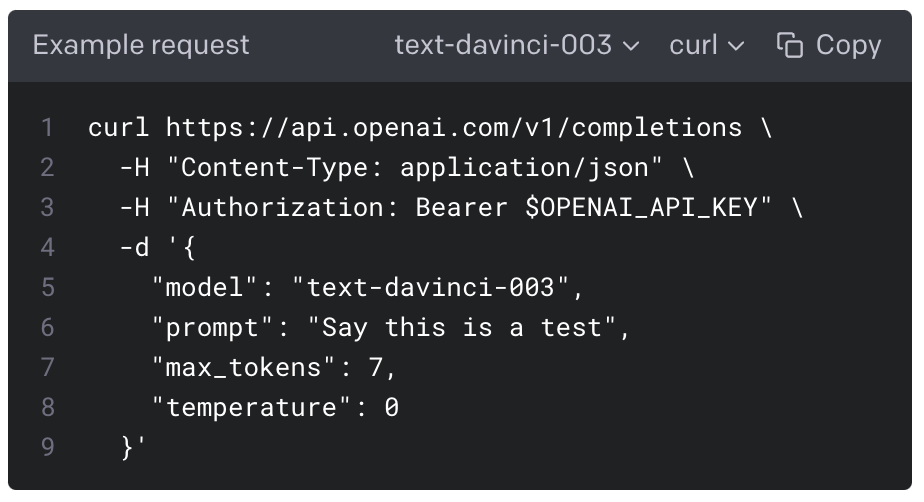

When you are logged in to OpenAI you can generate authentication tokens from this page, and then you can test any of the API endpoints listed in the documentation using cURL, such as the completions endpoint that we will use in our demo:

Figure 2: Accessing the OpenAI API via curl

Once you have confirmed that your API key works with cURL, you can modify the prompt text to use the test content from above (don’t forget to escape quotes and newlines so that the request body is still valid JSON). When you’re happy with the results from the API, it’s time to add these features to the Android app.
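Escaping by hand is where most invalid-JSON errors come from: the prompts end in `\n\n` and HTML frequently contains double quotes. As a minimal sketch using only the Kotlin standard library, the body for the completions endpoint could be built like this. The `jsonEscape` and `completionRequestBody` helpers are hypothetical; the sample app uses Gson for this step instead:

```kotlin
// Turns a Kotlin string into a JSON string literal, escaping quotes,
// backslashes and control characters such as the "\n\n" in the prompts.
fun jsonEscape(value: String): String {
    val sb = StringBuilder("\"")
    for (c in value) {
        when (c) {
            '"' -> sb.append("\\\"")
            '\\' -> sb.append("\\\\")
            '\n' -> sb.append("\\n")
            '\r' -> sb.append("\\r")
            '\t' -> sb.append("\\t")
            // Any other control character becomes a \uXXXX escape.
            else -> if (c < ' ') sb.append("\\u%04x".format(c.code)) else sb.append(c)
        }
    }
    sb.append('"')
    return sb.toString()
}

// Hypothetical helper: the same fields that createCompletion sends later in this post.
fun completionRequestBody(
    model: String,
    prompt: String,
    temperature: Double = 0.5,
    maxTokens: Int = 1500,
): String =
    """{"model":${jsonEscape(model)},"prompt":${jsonEscape(prompt)},"temperature":$temperature,"max_tokens":$maxTokens}"""
```

The output of `completionRequestBody("text-davinci-003", prompt + html)` can be pasted into the `-d` argument of the cURL command to check it before moving to Android.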

Adding to Android

For this example, we’re going to make three fairly minor changes to the existing Source Editor Android sample app:

- A new class for OpenAI-specific information like the authorization key, endpoint URL, and prompt text
- A method that creates the OpenAI JSON request, sends it via an HttpClient, and parses the result
- UI changes to add ChatGPT feature buttons to the navigation bar

You can easily browse the changes in this pull request on GitHub.

Constants class

This class contains the API details needed to access ChatGPT, including the URL and your API key (remember to keep this secret, and DON’T check it into source control):

```kotlin
internal object Constants {
    // The OpenAI API key.
    internal const val OPENAI_KEY = "{OPENAI_API_KEY_GOES_HERE}"

    // The OpenAI API model name.
    internal const val OPENAI_MODEL_COMPLETIONS = "text-davinci-003"

    // The OpenAI API endpoint.
    internal const val API_ENDPOINT_COMPLETIONS = "https://api.openai.com/v1/completions"

    // ChatGPT prompts.
    internal const val SHORTEN = "Strip away extra words in the below html content and provide a clear message as html content.\n\n"
    internal const val GRAMMARCHECK = "Check grammar and spelling in the below html content and provide the result as html content.\n\n"
}
```
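Rather than hard-coding OPENAI_KEY, one option is to read it from a file that is excluded from version control, such as local.properties. A minimal standard-library sketch, assuming a property named `openai.api.key` (both the property name and the `loadOpenAiKey` helper are hypothetical). In an Android project you would typically do this in the Gradle build and pass the value to the app, for example as a BuildConfig field. This keeps the key out of the repository, but not out of the built APK.

```kotlin
import java.io.Reader
import java.util.Properties

// Hypothetical helper: loads the API key from a properties source instead of
// a constant in source code. Fails fast if the property is missing or blank.
fun loadOpenAiKey(reader: Reader, propertyName: String = "openai.api.key"): String {
    val props = Properties()
    props.load(reader)
    val key = props.getProperty(propertyName)?.trim().orEmpty()
    require(key.isNotEmpty()) { "Missing or empty '$propertyName' property" }
    return key
}
```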

HttpClient API method

This method is called from the new feature buttons, which pass in the prompt to be sent to ChatGPT. The code formats a valid JSON body, constructs the HTTP request with the required authorization header, and parses the ChatGPT answer from valid responses:

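```kotlin
import io.ktor.client.request.headers
import io.ktor.client.request.post
import io.ktor.client.request.setBody
import io.ktor.client.statement.bodyAsText
import io.ktor.http.ContentType
import io.ktor.http.HttpHeaders
import io.ktor.http.HttpStatusCode
import io.ktor.http.contentType
import kotlinx.serialization.json.Json
import kotlinx.serialization.json.jsonArray
import kotlinx.serialization.json.jsonObject
import kotlinx.serialization.json.jsonPrimitive

// `httpClient` (a Ktor HttpClient) and `gson` (a Gson instance) are assumed
// to be properties of the enclosing class.
private suspend fun createCompletion(prompt: String): String {
    // Request body for the completions endpoint.
    val openAIPrompt = mapOf(
        "model" to Constants.OPENAI_MODEL_COMPLETIONS,
        "prompt" to prompt,
        "temperature" to 0.5,
        "max_tokens" to 1500,
    )
    // Gson escapes quotes and newlines, so the body is valid JSON.
    val content: String = gson.toJson(openAIPrompt)

    val response = httpClient.post(Constants.API_ENDPOINT_COMPLETIONS) {
        headers {
            append(HttpHeaders.Authorization, "Bearer " + Constants.OPENAI_KEY)
        }
        contentType(ContentType.Application.Json)
        setBody(content)
    }

    if (response.status == HttpStatusCode.OK) {
        val jsonContent = response.bodyAsText()
        // Read choices[0].text; jsonPrimitive.content returns the unquoted, unescaped string.
        val choices = Json.parseToJsonElement(jsonContent).jsonObject["choices"]!!.jsonArray
        return choices[0].jsonObject["text"]!!.jsonPrimitive.content
    }

    // Other HTTP status codes
    return "Not OK status: " + response.status
}
```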

The code shown above is edited for clarity – the sample on GitHub has more configuration options and error handling.
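As one illustration of the kind of error handling that was left out, a hypothetical helper could turn common non-200 responses into readable messages instead of the generic "Not OK status" string. The codes below are the usual ones for this API (401 for an invalid or missing key, 429 for rate limits or exhausted quota, 5xx for server-side problems); the helper name and message wording are assumptions:

```kotlin
// Hypothetical helper: maps an HTTP status code from the OpenAI API to a user-facing message.
fun describeOpenAiStatus(code: Int): String = when (code) {
    200 -> "OK"
    400 -> "Bad request: check the JSON body and model name"
    401 -> "Unauthorized: check the API key in the Authorization header"
    429 -> "Too many requests: rate limit or quota exceeded"
    in 500..599 -> "OpenAI server error ($code): try again later"
    else -> "Unexpected HTTP status $code"
}
```

In createCompletion, the final `return` could then become `return describeOpenAiStatus(response.status.value)`, since Ktor's HttpStatusCode exposes the numeric code as `value`.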

UI updates

In order to call the ChatGPT features, new SVG image resources are added to the project, referenced in component_action_toolbar.xml, and wired up with calls to the createCompletion method in MainActivity.kt.

Testing the demo

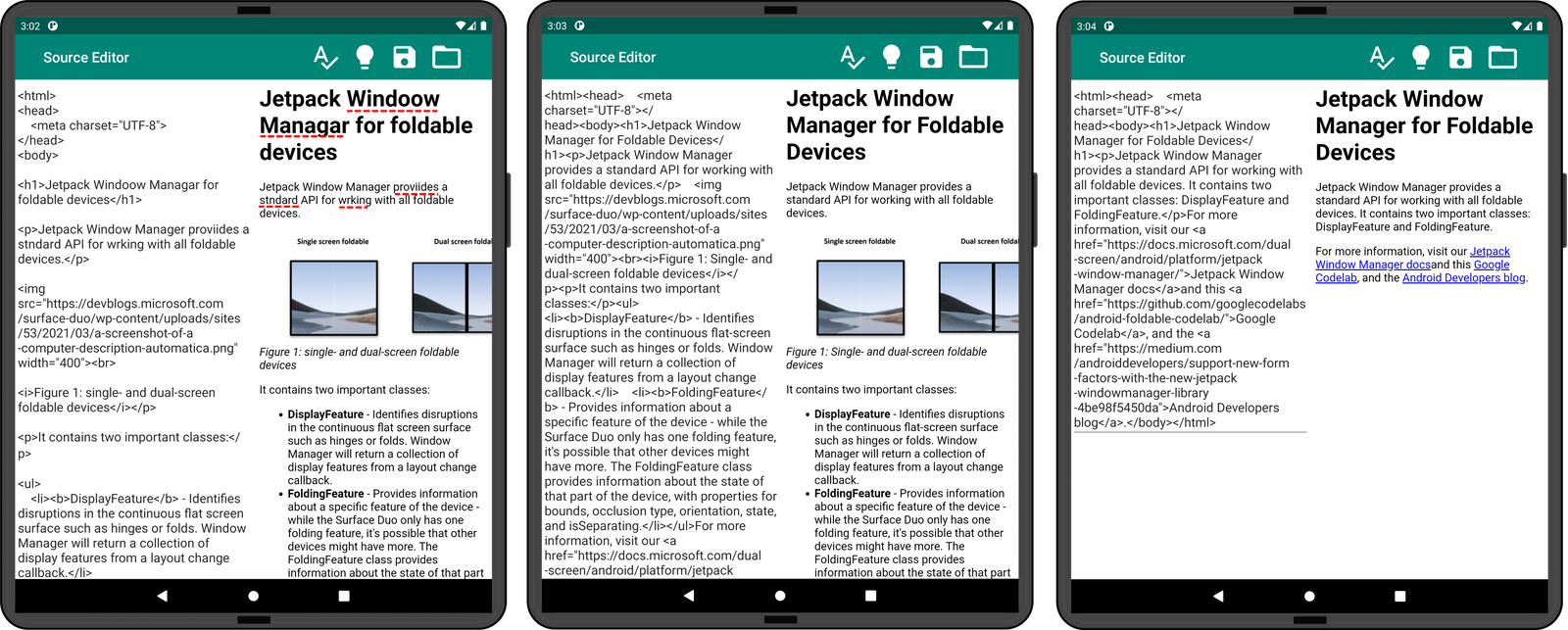

The ChatGPT API call is triggered by new buttons added to the navigation bar. Run the sample (which now includes purposeful typos in the HTML, highlighted on the left screenshot in Figure 3) and choose either of the new grammar or summarization features via the toolbar buttons. After a short delay for the network request and processing time, the editable content and preview will be updated with the results from the OpenAI service:

Figure 3: Android application showing the original (typos highlighted manually) and results of the GRAMMARCHECK and SHORTEN prompts on the source HTML.

An interesting side effect of the SHORTEN prompt is that even though it doesn’t explicitly ask for spelling and grammar fixes, the output does not contain the typos present in the source HTML. In both cases, the HTML formatting and links are preserved. Both prompts also preserve the DisplayFeature and FoldingFeature API names, even though they are not ‘correctly spelled’ according to any dictionary.
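Because the result replaces the editor content, you may not want to rely on the model always keeping links intact. A hypothetical sanity check could compare link targets before applying a result. The `hrefs` and `linksPreserved` helpers are illustrative only, and the regex is a rough heuristic rather than an HTML parser:

```kotlin
// Rough heuristic: captures the double-quoted href value inside <a ...> tags.
val HREF_REGEX = Regex("<a\\s[^>]*href\\s*=\\s*\"([^\"]*)\"", RegexOption.IGNORE_CASE)

// Collects the distinct link targets found in a piece of HTML.
fun hrefs(html: String): Set<String> =
    HREF_REGEX.findAll(html).map { it.groupValues[1] }.toSet()

// True if every link target in the original HTML still appears in the rewritten HTML.
fun linksPreserved(original: String, rewritten: String): Boolean =
    hrefs(rewritten).containsAll(hrefs(original))
```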

These prompts are just two examples of features that might make sense in a text editor application. Other things you could try include making the content more or less formal, translating to other languages, changing the formatting (e.g. from HTML to Markdown), or providing an input for the user to generate content from their own prompts. An undo feature would also be useful if you’re unhappy with the final results!
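A minimal sketch of such an undo feature, assuming the app records the editor text before applying each ChatGPT result. The `EditHistory` class is hypothetical and not part of the sample:

```kotlin
// Hypothetical bounded undo history for AI-generated edits.
class EditHistory(private val maxEntries: Int = 20) {
    private val previous = ArrayDeque<String>()

    // Call with the current editor text before replacing it with the ChatGPT output.
    fun record(currentText: String) {
        previous.addLast(currentText)
        // Drop the oldest snapshot once the limit is exceeded.
        if (previous.size > maxEntries) previous.removeFirst()
    }

    // Returns the most recent snapshot to restore, or null if there is nothing to undo.
    fun undo(): String? = previous.removeLastOrNull()

    val canUndo: Boolean
        get() = previous.isNotEmpty()
}
```

Keeping a bounded history avoids holding many copies of a large HTML document in memory; an undo toolbar button could be enabled only while `canUndo` is true.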

Feedback and resources


If you have any questions about ChatGPT or how to apply AI in your apps, use the feedback forum or message us on Twitter @surfaceduodev.

No livestream this week, but check out the archives on YouTube.
