Bringing AI to the Edge While Future-Proofing Deployments at Scale

What do we mean by AI at the edge? Simply put, it means moving some parts of the AI process out of centralized data centers and closer to where data originates and where decisions are made in the physical world. The trend is driven by the exponential growth of devices and data, and by the need to reduce latency and network bandwidth consumption while ensuring autonomy, security and privacy. Today the edge component of AI typically involves deploying inferencing models local to the data source, but even that will evolve over time.

Many of the general considerations for deploying AI in the cloud carry over to the edge. For instance, results must be validated in the real world: a model that works in a pilot environment is not guaranteed to succeed when it’s deployed in practice at scale, where external factors such as camera angle and lighting come into play. Just Google “AI chihuahua muffin” for an example of the real-world challenges in detecting similar objects.

Another growing challenge is dealing with the proliferation of deep fakes that can throw off business results, drive false sentiments on social media, or worse. Further, developing ethical AI and data trust will also be key in ensuring that the AI is being deployed in a responsible and impactful way, regardless of whether it’s at the edge or in the cloud.

Defining the Edge

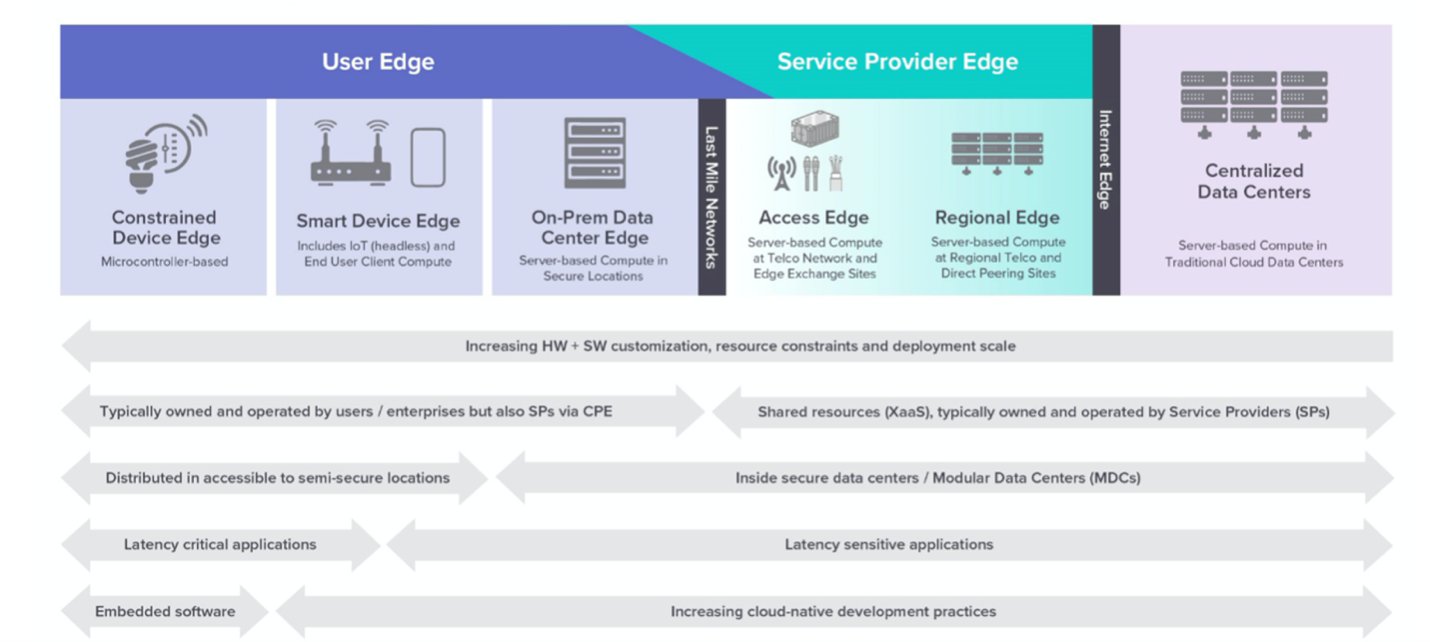

When it comes to the edge, first it’s important to understand the key considerations spanning the edge to cloud continuum. During 2020, ZEDEDA worked closely with the Linux Foundation’s LF Edge community to create a comprehensive taxonomy for edge computing based on inherent technical and logistical trade-offs.

The taxonomy outlined by LF Edge in their white paper goes a long way toward resolving the current market confusion around the “edge,” because most existing taxonomies are biased toward one market lens, for example telco, IT, Operations Technology (OT) or consumer, and have ambiguous breakdowns between categories. Instead, the LF Edge taxonomy breaks the continuum down into top-level tiers and subcategories based on hard technical tradeoffs: whether a piece of hardware has the resources to support abstraction in the form of virtualization or containers, whether it is on a LAN or a WAN relative to the users and processes it serves (compute for time-critical, as opposed to time-sensitive, workloads will always be deployed on a LAN), and whether compute hardware is deployed in a secure data center or is physically accessible.

(Caption: Image courtesy of LF Edge)

A key concept is that environments get more complex as you get closer to the physical world, and for this reason deploying AI at the edge introduces additional challenges. First and foremost is accommodating the heterogeneity of edge hardware in form factor and compute capability: devices range from a constrained sensor conveying temperature changes to the brains of an autonomous vehicle, which is basically a data center on wheels.

Challenges of AI at the Edge

As a general rule, the closer deployments get to the physical world, the more resource-constrained they become. As such, deploying AI models at the User Edge compared to in secure, centralized data centers introduces a number of different complexities. While many of the same principles can be applied across the continuum, inherent technical tradeoffs dictate that tool sets can’t be exactly the same spanning server-class infrastructure at the Service Provider and On-prem Data Center Edges and the increasingly constrained and diverse hardware at the Smart and Constrained Device Edges as described in the LF Edge paper. Deploying edge compute and AI tools outside of physically secure data centers also introduces new requirements such as a Zero Trust security model and Zero Touch deployment capability to accommodate non-IT skill sets.

Stakeholders from IT and OT administrators to developers and data scientists need robust remote orchestration tools, not only to deploy and manage infrastructure and AI models at scale in the field, but also to keep monitoring and assessing the overall health of the system. ZEDEDA’s focus is helping customers orchestrate compute hardware and applications at the distributed edge, which aligns with the Smart Device Edge bleeding into the fringes of the On-prem Data Center Edge in the LF Edge taxonomy. Traditional data center orchestration tools are not well suited to the distributed edge because they are too resource-intensive, don’t comprehend the scale factor, or presuppose a near-constant network connection to the central console, which often isn’t available in remote edge environments.

Deploying AI Models Along the Edge Continuum

The decision on where to train and deploy AI models can be determined by balancing considerations across six vectors: scalability, latency, autonomy, bandwidth, security, and privacy.

In a perfect world we’d just run all AI workloads in the cloud, where compute is centralized and readily scalable; however, the benefits of centralization must be balanced against the remaining factors, which tend to drive decentralization. For example, we’ll depend on edge AI for use cases that are latency-critical and for which autonomy is a must. You would never make a decision to deploy a vehicle’s airbag from the cloud when milliseconds matter, regardless of how fast and reliable your broadband network may be under normal circumstances. As a general rule, latency-critical applications will leverage edge AI close to the process, running at the Smart and Constrained Device Edges as defined by LF Edge. Meanwhile, latency-sensitive applications will often take advantage of higher tiers at the Service Provider Edge and in the cloud because of the scale factor spanning many end users.
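The latency and autonomy reasoning above can be sketched as a simple placement rule. This is only an illustration, not part of the LF Edge taxonomy or any ZEDEDA tooling: the `Workload` fields, the `suggest_tier` function, the assumed 50 ms WAN round trip and the 10x cutoff are all hypothetical values chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    max_latency_ms: float    # hard deadline for a decision (hypothetical field)
    must_run_offline: bool   # autonomy: must keep working without a WAN link


def suggest_tier(w: Workload, wan_round_trip_ms: float = 50.0) -> str:
    """Suggest a tier of the edge-to-cloud continuum for an inference workload.

    The thresholds are illustrative assumptions, not measured values.
    """
    # Latency-critical or autonomous workloads stay on the LAN, close to the process.
    if w.must_run_offline or w.max_latency_ms < wan_round_trip_ms:
        return "user edge (Smart or Constrained Device Edge)"
    # Latency-sensitive workloads can trade some delay for shared scale.
    if w.max_latency_ms < 10 * wan_round_trip_ms:
        return "Service Provider Edge"
    # Everything else can benefit from full centralization.
    return "cloud"


airbag = Workload("airbag trigger", max_latency_ms=5, must_run_offline=True)
print(suggest_tier(airbag))
```

In practice the remaining vectors (bandwidth, security, privacy and scalability) would also feed into the decision; the sketch covers only the two discussed in this paragraph.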

In terms of bandwidth consumption, the deployment location of AI solutions spanning the User and Service Provider Edges will be based on a balance of the cost of bandwidth (e.g., landline vs. cellular vs. satellite come with increasing expense), the capabilities of the devices involved and the benefits of centralization for scalability. For example, use cases involving computer vision or vibration measurements can lead to great bandwidth expense, because both involve continuous streams of high-bandwidth data. For these use cases, the analysis will often be done locally, and only critical events will be sent to backend systems, such as messages along the lines of “the person at the door looks suspicious” or “please send a tech, this machine is about to fail”.
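As a minimal sketch of this “analyze locally, send only critical events” pattern, the following flags vibration readings that deviate sharply from a rolling window of recent values. The function name, window size, threshold and message format are assumptions made for illustration; a real deployment would more likely run a trained model than a simple z-score check.

```python
from collections import deque
from statistics import mean, pstdev
from typing import Iterable, Iterator


def critical_events(samples: Iterable[float],
                    window: int = 50,
                    z_limit: float = 4.0) -> Iterator[dict]:
    """Yield an event only when a reading deviates strongly from recent history.

    All other readings stay on the device, so only the events cross the network.
    """
    history = deque(maxlen=window)  # oldest reading is dropped automatically
    for i, value in enumerate(samples):
        if len(history) == window:
            mu, sigma = mean(history), pstdev(history)
            # Skip perfectly flat windows to avoid dividing by zero.
            if sigma > 0 and abs(value - mu) / sigma > z_limit:
                yield {"index": i, "value": value,
                       "message": "anomalous vibration reading"}
        history.append(value)


# 100 normal readings, one spike, then 20 more normal readings.
readings = [1.0, 1.1] * 50 + [9.0] + [1.0, 1.1] * 10
for event in critical_events(readings):
    print(event)  # only the spike is reported upstream
```

Here 121 readings are processed on the device and a single small message is backhauled, which is the bandwidth trade-off the paragraph describes.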

When it comes to security, edge AI will be used in safety-critical applications where a breach has serious consequences and therefore cloud connectivity is either not allowed or only enabled via a data diode, for example monitoring for process drift in a nuclear power plant. Finally, AI will be increasingly deployed at the edge for privacy-sensitive applications in which Personally Identifiable Information (PII) must be stripped before a subset of data is backhauled to the cloud for further analysis.
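To illustrate the privacy case, here is a minimal sketch of stripping PII on the edge node before a record is backhauled. The field names in `PII_FIELDS` and the e-mail pattern are hypothetical; real PII handling depends on the data schema and applicable regulations and needs far more than a field list and one regular expression.

```python
import re

# Hypothetical field names; a real schema would define its own PII list.
PII_FIELDS = {"name", "email", "phone"}
# Deliberately simple e-mail pattern, for illustration only.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def strip_pii(record: dict) -> dict:
    """Return a copy of the record that is safer to send to the cloud."""
    cleaned = {}
    for key, value in record.items():
        if key in PII_FIELDS:
            continue  # drop known PII fields entirely
        if isinstance(value, str):
            # Redact e-mail addresses embedded in free-text fields.
            value = EMAIL_RE.sub("[redacted]", value)
        cleaned[key] = value
    return cleaned


reading = {"name": "Ada", "email": "ada@example.com",
           "temp_c": 21.5, "note": "contact ada@example.com"}
print(strip_pii(reading))
```

Only the cleaned subset leaves the device; the original record stays local.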

Accelerating the Adoption of AI at the Edge

Edge AI requires a number of different industry players to come together. We need hardware OEMs and silicon providers for processing; cloud scalers to provide tools and datasets; telcos to manage the connectivity piece; software vendors to help productize frameworks and AI models; domain expert system integrators to develop industry-specific models; and security providers to ensure the process is secure.

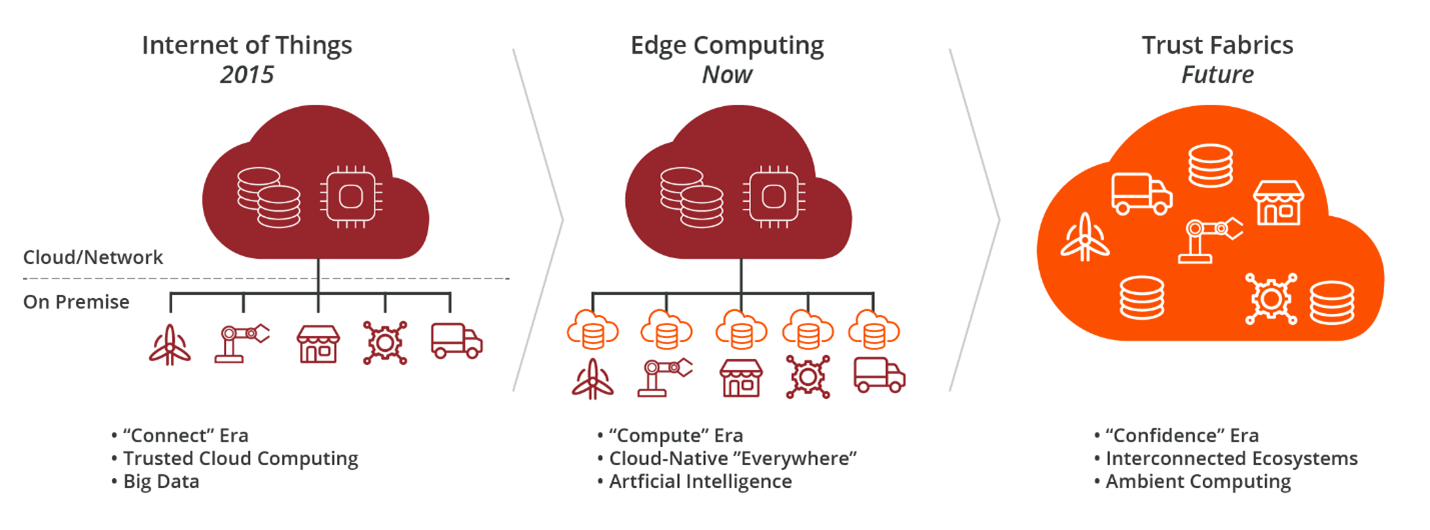

In addition to having the right stakeholders, it’s about building an ecosystem based on common, open frameworks for interoperability, with investment focused on the value add on top. Today there is a plethora of choices for platforms and AI tool sets, which is confusing, but that reflects the state of the market more than necessity. A key point of efforts like LF Edge is to work in the open-source community to build more open, interoperable, consistent and trusted infrastructure and application frameworks so developers and end users can focus on the surrounding value add. Throughout the history of technology, open interoperability has always won out over proprietary strategies when it comes to scale.

(Caption: Evolution in a connected world requires an open edge)

In the long run, the most successful digital transformation efforts will be led by organizations that have the best domain knowledge, algorithms, applications and services, not those that reinvent foundational plumbing. In addition to the great foundational capabilities provided by cloud scalers such as Microsoft, open-source software has become a critical enabler across enterprises of all sizes — facilitating the creation of de-facto standards and minimizing “undifferentiated heavy lifting” through a shared technology investment. It also drives interoperability which is key for realizing maximum business potential in the long term through interconnecting ecosystems… but that’s another blog for the future!

Maximize the Potential for Edge AI at Scale

In addition to having the right ecosystem including domain experts that can pull solutions together, a key factor for edge AI success is having a consistent delivery or orchestration mechanism for both compute and AI tools. The reality is that to date many edge AI solutions have been lab experiments or limited field trials, not yet deployed and tested at scale. But as organizations start to scale their solutions in the field, they quickly realize the challenges.

At ZEDEDA, we consistently see that manual deployment of edge computing using brute-force scripting and command-line interface (CLI) interaction becomes cost-prohibitive for customers at around 50 distributed nodes (although our customers have told us it can become unmanageable with as few as ten!).

In order to scale, enterprises need to build on an orchestration solution that takes into account the unique needs of the distributed edge in terms of diversity, resource constraints and security, and helps admins, developers and data scientists alike keep tabs on their deployments in the field. This includes having visibility into any potential issues that could lead to inaccurate analyses or total failure. Further, it’s important that this foundation is based on an open model to maximize potential in the long run.

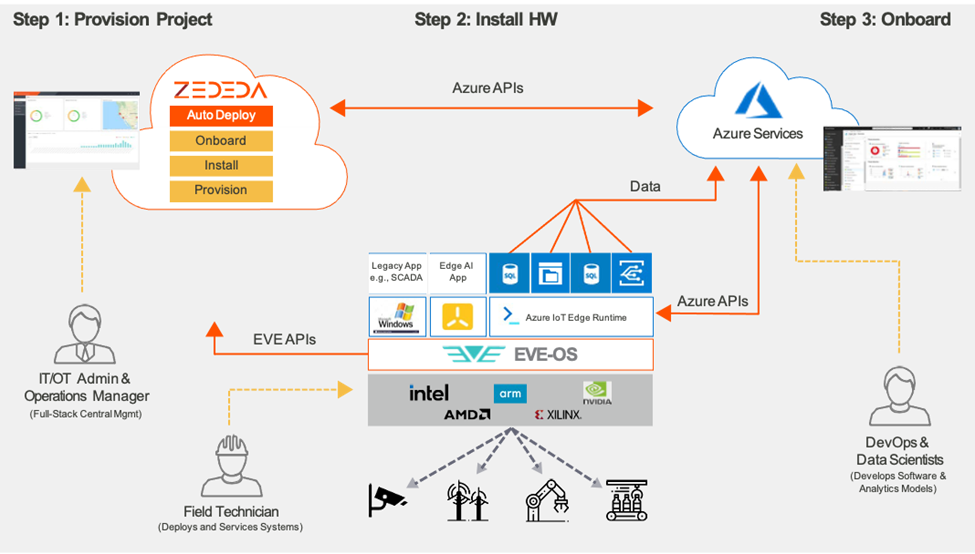

(Caption: ZEDEDA cloud-native orchestration solution for the distributed edge)

The Easy Button for AI at the Edge with ZEDEDA and Microsoft

ZEDEDA has worked with Microsoft to directly integrate its orchestration solution with Azure IoT Hub to address the scaling and security challenges of the complex edge environment. This integration simplifies deployment and full lifecycle management of Azure IoT solutions at the edge with a choice of hardware. It includes single-click bulk deployment of the Azure IoT Edge runtime with Azure IoT DPS integration, Azure IoT Edge modules, and any additional applications (such as AI models) required for a given use case. Developers can quickly and securely deploy all Azure IoT Edge services on large fleets of nodes with a single click and manage the full lifecycle of both the software and hardware.

With ZEDEDA’s orchestration solution, developers can easily deploy containerized AI modules on edge hardware running in the field, even alongside existing applications in virtual machines. By decoupling applications from the underlying infrastructure and supporting existing software investments, ZEDEDA provides customers with a transition path to increasingly software-defined environments powered by new AI innovations.

(Caption: ZEDEDA Edge Orchestration for Azure IoT)

In Closing

As the edge AI space develops, it’s important to avoid becoming locked into a particular tool set, and instead opt to build a future-proofed infrastructure that accommodates a rapidly changing technology landscape and that can scale as you interconnect your business with other ecosystems. Here at ZEDEDA, our mission is to provide enterprises with an optimal solution for deploying workloads and infrastructure at the distributed edge where traditional data center solutions aren’t applicable, and we’re doing it based on an open, vendor-neutral model that provides freedom of choice for hardware, AI framework, apps and clouds. To learn more about ZEDEDA and our offering, visit us at www.zededa.com or check out our listing on the Azure Marketplace.
