This is a follow-up to our blog post Unlock the Power of Telemetry in Semantic Kernel SDK. We are excited to share that we have added logging and metering of prompt, completion, and total tokens for each request made to Azure OpenAI/OpenAI through Semantic Kernel. This feature will help you monitor and optimize your API usage and costs, as well as troubleshoot any issues that may arise.

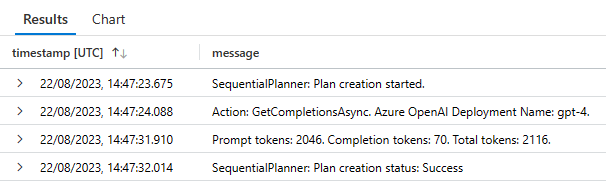

Example of logging in Azure Application Insights

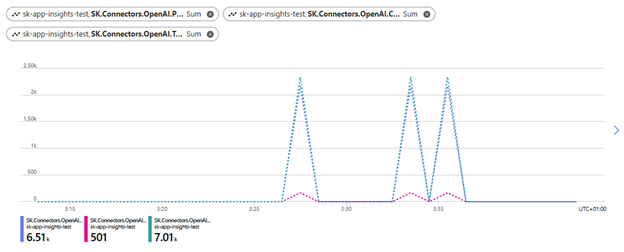

Example of metering in Azure Application Insights

With this update, the Microsoft.SemanticKernel.Connectors.AI.OpenAI package captures the metrics listed below.

- SemanticKernel.Connectors.OpenAI.PromptTokens – number of prompt tokens used.

- SemanticKernel.Connectors.OpenAI.CompletionTokens – number of completion tokens used.

- SemanticKernel.Connectors.OpenAI.TotalTokens – total number of tokens used.
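To make the relationship between the three metrics concrete, here is a minimal sketch in Python. It is not the Semantic Kernel API: `TokenMeter` and its `record` method are hypothetical names, the token counts are made-up sample values, and the sketch assumes that the total for a request is simply prompt tokens plus completion tokens. Only the three metric names are taken from the list above.

```python
from dataclasses import dataclass, field

# Metric names as listed above; everything else here is illustrative.
PROMPT = "SemanticKernel.Connectors.OpenAI.PromptTokens"
COMPLETION = "SemanticKernel.Connectors.OpenAI.CompletionTokens"
TOTAL = "SemanticKernel.Connectors.OpenAI.TotalTokens"


@dataclass
class TokenMeter:
    """Hypothetical in-memory stand-in for a metrics backend."""

    totals: dict = field(
        default_factory=lambda: {PROMPT: 0, COMPLETION: 0, TOTAL: 0}
    )

    def record(self, prompt_tokens: int, completion_tokens: int) -> None:
        # Assumption: total tokens = prompt tokens + completion tokens.
        self.totals[PROMPT] += prompt_tokens
        self.totals[COMPLETION] += completion_tokens
        self.totals[TOTAL] += prompt_tokens + completion_tokens


meter = TokenMeter()
meter.record(prompt_tokens=120, completion_tokens=45)  # sample request 1
meter.record(prompt_tokens=80, completion_tokens=30)   # sample request 2

for name, value in meter.totals.items():
    print(f"{name}: {value}")
```

In a real application the running totals would live in your telemetry backend (for example Azure Application Insights, as in the screenshots above) rather than in a dictionary.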

Benefits of logging and metering with Semantic Kernel

Logging and metering your requests with Semantic Kernel has several key benefits:

- You can easily keep track of your API usage and costs, because usage is billed by the number of tokens processed. You can also compare the token usage across different models and parameters to find the optimal settings for your use case.

- You can troubleshoot any issues or errors that may occur during your requests, as each request is logged with its prompt, completion, and token count. You can also use the logs to analyze the performance and quality of your completions.

- You can leverage the power and flexibility of Semantic Kernel to orchestrate your requests with other AI services or plugins, while still having full visibility and control over your token usage.
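As a rough illustration of the first benefit, the sketch below turns metered token counts into a cost estimate and compares two models. The model names, the per-1,000-token prices, and the `estimate_cost` helper are all hypothetical placeholders, not real rates or part of Semantic Kernel; substitute the current pricing for the models you actually use.

```python
from decimal import Decimal

# Hypothetical prices in USD per 1,000 tokens. These are NOT real rates.
PRICES = {
    "model-a": {"prompt": Decimal("0.0015"), "completion": Decimal("0.002")},
    "model-b": {"prompt": Decimal("0.03"), "completion": Decimal("0.06")},
}


def estimate_cost(model: str, prompt_tokens: int, completion_tokens: int) -> Decimal:
    """Estimate the cost of a workload from its metered token counts."""
    price = PRICES[model]
    per_thousand = (
        price["prompt"] * prompt_tokens
        + price["completion"] * completion_tokens
    )
    return per_thousand / 1000


# Sample token counts, as they might be read from the metrics.
usage = {"prompt_tokens": 200, "completion_tokens": 75}

costs = {model: estimate_cost(model, **usage) for model in PRICES}
cheapest = min(costs, key=costs.get)

for model, cost in costs.items():
    print(f"{model}: ${cost}")
print(f"Cheapest for this workload: {cheapest}")
```

Prompt and completion tokens are priced separately here because many models charge different rates for each, which is one reason the metrics report them individually as well as in total.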

Next Steps

- Try out Semantic Kernel by visiting our GitHub repo. Don’t forget to support our project by starring the repo.

- You can also check out our documentation for more examples and tutorials on how to use Semantic Kernel.

- Join our community to contribute or to ask questions.

We hope you enjoy using Semantic Kernel to create amazing AI apps.
