Large language models such as GPT-4 and ChatGPT have transformed the way people build innovative applications and experiences. In this post I describe how I got started building LLM applications and point to some resources I created along the way. I hope they help your own exploration of this exciting area of software development.

A personal learning journey into LLM applications

In my day job at Microsoft, I focus mostly on AI infrastructure and platforms. I was also curious to learn how to build LLM applications, both to better understand the users of our platforms and because I hope to develop some LLM apps I can use personally and professionally. As I was getting started, it was clear that LangChain was (and still is) a popular library for building these apps. It lowers the barrier for beginners by abstracting much of the complexity behind an easy-to-use interface and by providing out-of-the-box integrations with many tools for building workflow chains. I started to dabble with LangChain and built a few simple programs. But it was only after I completed a (free!) short course on LangChain from deeplearning.ai a few months back that I started to see a bigger picture emerge, past all the noise and hype on social media. I loved that the course covered a lot of fundamental ground at a brisk pace, with just one hour of lectures and a few example notebooks I could work through later at my own pace. Kudos and thanks to Dr. Andrew Ng and Harrison Chase, the founder of LangChain, for creating this wonderful resource that lets developers of any skill level get started gently with this new era of LLM applications.

My next step was to figure out how people build production LLM applications, so I started talking to a few practitioners at Microsoft who were building Copilot and other LLM experiences. It was clear that LLM tooling was still evolving rapidly and that the ecosystem for building production applications end to end was (and still is) nascent. Developers often experiment with multiple tools, in some cases rolling their own or mixing and matching tools to suit their needs, as they work out the right tool for each task. They balance ease of use against the level of control that more complex applications require.

Expanding the toolbox

One tool used by several teams at Microsoft is Semantic Kernel (SK), an open-source framework that augments your LLM app-building experience with memory, planning, and plugins that integrate with external tools. Semantic Kernel has been in the works within Microsoft since before ChatGPT and GPT-4 entered the public consciousness. During its early experiments with taming LLM interactions and outputs to make them more useful, the SK team began to crystallize a point of view on how to build applications in the age of powerful LLMs. They articulated this view as the Schillace Laws of AI and incorporated those principles into the Semantic Kernel SDK to help developers build production-quality applications. SK integrates with other open-source tools as well as several Microsoft services, such as Microsoft Graph for data, Azure Cognitive Search for vector search and storage, and Azure Machine Learning's Prompt Flow.
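To make the idea of "memory" a little more concrete, here is a minimal, library-agnostic sketch of what a semantic memory does conceptually: store text alongside a vector and return the entries closest to a query. It does not use the Semantic Kernel API. The `toy_embed` bag-of-words function and the `ToyMemory` class are hypothetical stand-ins for a real embedding model and a vector store such as Azure Cognitive Search.

```python
import math
from collections import Counter


def toy_embed(text: str) -> Counter:
    """Hypothetical stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(word.strip(".,!?").lower() for word in text.split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class ToyMemory:
    """In-memory list of (text, vector) pairs; a vector database would replace this."""

    def __init__(self) -> None:
        self._records: list[tuple[str, Counter]] = []

    def save(self, text: str) -> None:
        # Embed once at write time so searches only embed the query.
        self._records.append((text, toy_embed(text)))

    def search(self, query: str, limit: int = 1) -> list[str]:
        # Rank stored records by similarity to the query, most similar first.
        q = toy_embed(query)
        ranked = sorted(self._records, key=lambda r: cosine(q, r[1]), reverse=True)
        return [text for text, _ in ranked[:limit]]


memory = ToyMemory()
memory.save("Semantic Kernel supports C#, Java and Python.")
memory.save("LangChain provides integrations to build workflow chains.")
print(memory.search("Which languages does Semantic Kernel support?"))
# → ['Semantic Kernel supports C#, Java and Python.']
```

In a real application the retrieved text would be inserted into the LLM prompt as context. This is the same retrieve-then-generate pattern that the RAG notebooks build on.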

There has been a lot of interest recently in building autonomous agents powered by LLMs. Semantic Kernel supports a feature called Planner, an essential component for building complex agents. Planner uses an LLM and contextual data to automatically create orchestrations across LLMs and external tools that solve for a user's goal statement, while giving you, the developer, fine-grained control over how plans are generated and what they contain. SK also supports multiple languages, including C# and Java in addition to Python, so you can embed LLM functionality and agent orchestration into enterprise applications.
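The plan-then-execute idea behind a planner can be illustrated with a small sketch. This is not Semantic Kernel's Planner API. `fake_llm_plan` is a hypothetical stand-in that returns a hard-coded JSON plan, where a real planner would prompt an LLM with the goal and descriptions of the available functions. The two "plugins" are plain Python functions invented for illustration.

```python
import json
from typing import Callable


# Hypothetical "plugins": plain functions the planner is allowed to call.
def summarize(text: str) -> str:
    """Toy summarizer: keep only the first sentence."""
    return text.split(".")[0].strip() + "."


def to_upper(text: str) -> str:
    """Toy formatter: upper-case the text."""
    return text.upper()


PLUGINS: dict[str, Callable[[str], str]] = {"summarize": summarize, "to_upper": to_upper}


def fake_llm_plan(goal: str, available: list[str]) -> str:
    """Stand-in for an LLM call that turns a goal into an ordered list of steps.

    A real planner would send the goal and the function descriptions in a prompt
    and parse the model's response; here the plan is hard-coded.
    """
    return json.dumps({"goal": goal, "steps": ["summarize", "to_upper"]})


def run_plan(goal: str, user_input: str) -> str:
    plan = json.loads(fake_llm_plan(goal, list(PLUGINS)))
    result = user_input
    for step in plan["steps"]:
        # The developer keeps control: only registered functions may run.
        if step not in PLUGINS:
            raise ValueError(f"Plan uses unknown function: {step}")
        # The output of each step feeds the next one.
        result = PLUGINS[step](result)
    return result


print(run_plan("Summarize this and shout it", "Planners chain functions. They need guardrails."))
# → PLANNERS CHAIN FUNCTIONS.
```

Validating the generated plan against a whitelist of registered functions before running it is one simple way to keep a developer in control of what an LLM-generated plan can do.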

Porting tutorials to Semantic Kernel

Given all this, my next goal was to learn Semantic Kernel. I started by porting the deeplearning.ai LangChain tutorial examples to Semantic Kernel with minimal modifications to the original code. Starting from something familiar makes it easier to understand the similarities and differences in the underlying concepts. All the examples are published as Jupyter notebooks in a GitHub repository.

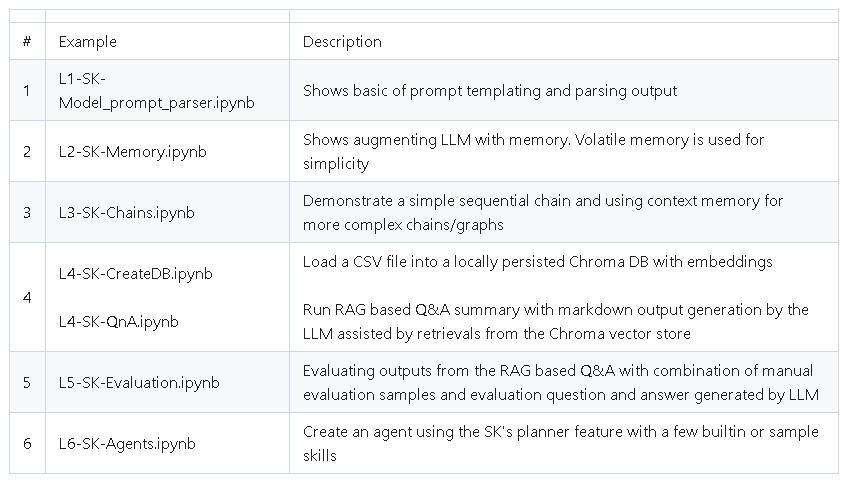

The repository contains six example notebooks that take the user gently from basic prompting to building simple agents and Retrieval Augmented Generation (RAG) applications.

I hope developers, especially those who already know LangChain and want to expand their toolbox, will find these tutorial notebooks a useful quick introduction to Semantic Kernel before they dive deeper into its more advanced features and integrations. Semantic Kernel Python SDK users may also find some of the examples useful as a reference that supplements the samples that ship with Semantic Kernel. I welcome feedback and contributions to the repository, and I would love to hear about your experiences building applications with these LLM tools.

Resources

Semantic Kernel port of the deeplearning.ai LangChain course notebooks

DeepLearning.ai LangChain Course
