Increase Performance by Slowing Down Your PowerShell Script

Summary: Microsoft PFE, Georges Maheu, further optimizes the Windows PowerShell script he presented earlier this week.

Microsoft Scripting Guy, Ed Wilson, is here. Our guest blogger today is Georges Maheu. Georges presented a script three days ago to gather Windows services information in an Excel spreadsheet. Although the script did an adequate job for a small number of servers, it did not scale well for hundreds of servers. On day one, the script took 90 minutes to document 50 computers. On day two, it took less than three minutes. Day three went down to 43 seconds. Today, Georges wants to do even better! Here are quick links to his previous blogs:

Day 1: Beat the Auditors, Be One Step Ahead with PowerShell

Day 2: Speed Up Excel Automation with PowerShell

Day 3: Speed Up Excel Automation with PowerShell Jobs

Note: All of the files from today, in addition to files for the entire week, are in a zip file in the Script Repository. You can read more from Georges on the PFE Blog: OpsVault.

Now, once again, here’s Georges…

There comes a time when there is no point in optimizing a script further. Moving from 90 minutes down to less than one minute was well worth the investment in time! But going from 43 seconds to 30 seconds is not worth the effort for this script.

Today, we tackle typical issues that are encountered when collecting large volumes of information in a distributed environment. Excel can handle 1,000 computers, which is cool, but it is not very practical beyond that.

There are a few options for dealing with large volumes of information: one could store all the information in a database and write nice reports by using queries. Personally, I could not create a decent database to save my life! Therefore, I will go to the next option, which is to use individual files for each computer.

Instead of writing the information to an Excel tab, the script exports it to a separate .csv file for each computer by using the Export-CSV cmdlet.

First, the following lines are replaced:

```powershell
$data = ($services |
    Select-Object $properties |
    ConvertTo-Csv -Delimiter "`t" -NoTypeInformation) -join "`r`n"
[Windows.Clipboard]::setText($data) | Out-Null
$computerSheet.range("a$mainHeaderRow").pasteSpecial(-4104) |
    Out-Null #Const xlPasteAll = -4104
```

With these lines:

```powershell
$services |
    Select-Object $properties |
    Export-CSV "$script:customerDataPath\$($currentJobName).csv" `
        -Encoding ASCII -NoTypeInformation
```

Then, all the code that is related to Excel is removed from the script.
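To see what this change produces without touching any remote computers, here is a minimal, self-contained sketch. It assumes a temporary folder in place of the script's `$customerDataPath`, a hypothetical computer name (SERVER01), and a hypothetical `$properties` list; two hand-built objects stand in for the `Win32_Service` results that the real script receives from its jobs.

```powershell
# Minimal sketch: one CSV file per computer, written with Export-Csv.
# Assumptions: temp folder, computer name, and property list are hypothetical;
# the two objects below stand in for Win32_Service results.
$customerDataPath = Join-Path ([System.IO.Path]::GetTempPath()) 'Services Data'
New-Item -Path $customerDataPath -ItemType Directory -Force | Out-Null

$properties     = 'Name', 'StartMode', 'State', 'StartName'
$currentJobName = 'SERVER01'   # hypothetical: the job is named after the computer

$services = @(
    [pscustomobject]@{ Name = 'Spooler'; StartMode = 'Auto';   State = 'Running'; StartName = 'LocalSystem';               PathName = 'C:\Windows\System32\spoolsv.exe' }
    [pscustomobject]@{ Name = 'W32Time'; StartMode = 'Manual'; State = 'Stopped'; StartName = 'NT AUTHORITY\LocalService'; PathName = 'C:\Windows\system32\svchost.exe' }
)

# Only the selected properties become CSV columns (PathName is dropped)
$services |
    Select-Object $properties |
    Export-Csv "$customerDataPath\$($currentJobName).csv" -Encoding ASCII -NoTypeInformation

# Reading the file back yields one object per service again
$roundTrip = @(Import-Csv "$customerDataPath\$($currentJobName).csv")
$roundTrip | Format-Table
```

Note that `Import-Csv` returns every column as a string, which is all the reporting step later needs.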

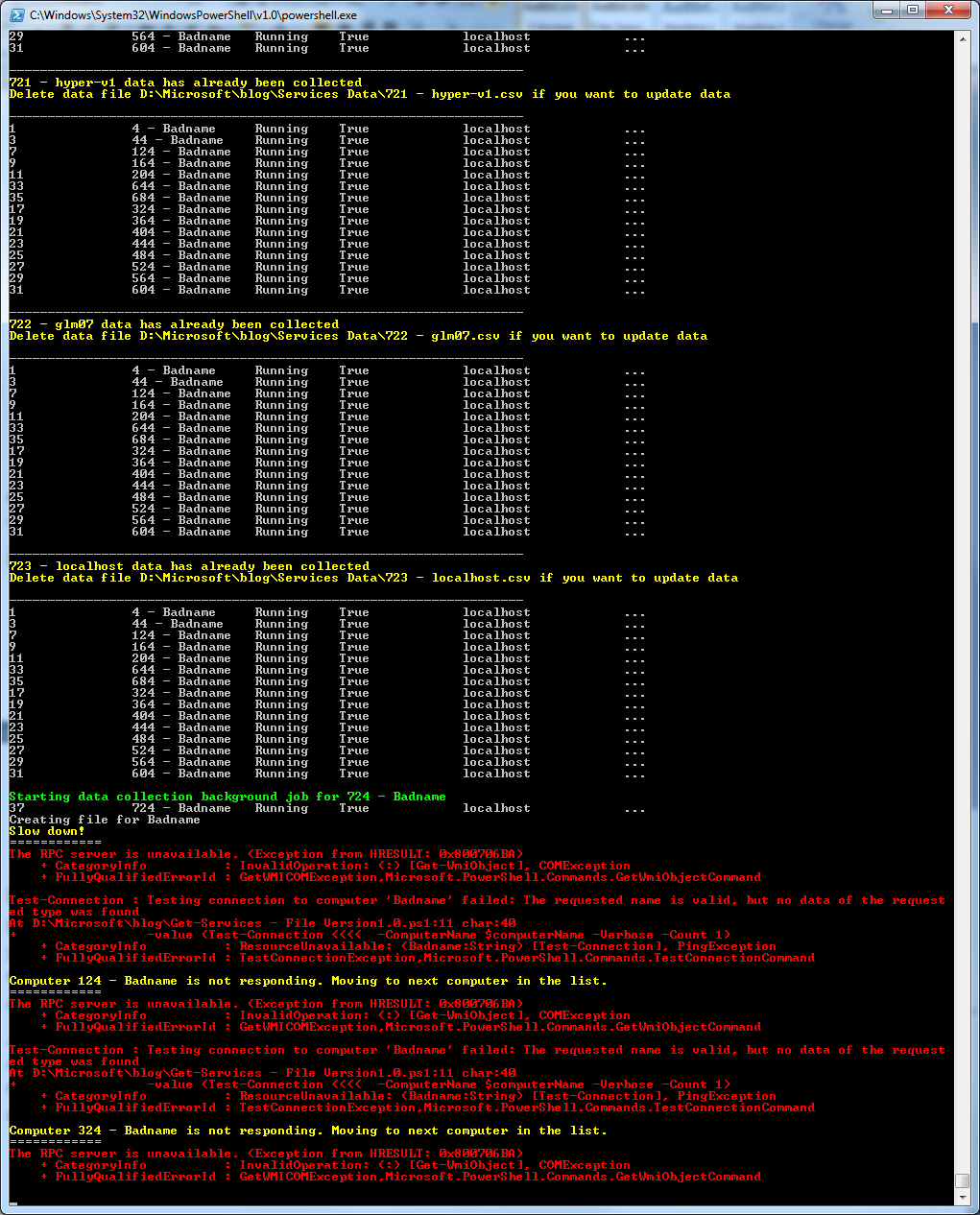

Most of the optimization techniques that I have shown so far can be reused, but there are a few additional challenges. One of them is, “What should I do if a computer does not respond?” With 50 computers, one can afford to run a 43-second script repeatedly until all the data is gathered. That is not so practical when 5,000 or more computers are involved.

One could use the file system to keep track of computers already processed. If a CSV file exists, there is no need to collect the information again. If there is a need to update the information for a specific computer, simply delete that file and run the script again.

```powershell
if (Test-Path "$customerDataPath\$($jobName).csv")
{
    Write-Host "$jobName data has already been collected"
}
else
{
    Start-Job -ScriptBlock {
        param($computerName)
        Get-WmiObject -Class Win32_Service -ComputerName $computerName
    } -Name $jobName -ArgumentList $computerName

    "Creating file for $computerName"
} #if (Test-Path "$customerDataPath\$($jobName).csv")
```
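The same file-as-marker idea can also be applied before any job is started, to work out which computers still need to be processed. The sketch below uses a hypothetical helper named `Get-PendingComputer` and a temporary demo folder; it is an illustration of the technique, not part of Georges's script.

```powershell
# Hypothetical helper: returns only the computers that do not yet have a
# data file, using the CSV files themselves as "already processed" markers.
function Get-PendingComputer {
    param(
        [string[]]$ComputerName,
        [string]$DataPath
    )
    foreach ($name in $ComputerName) {
        if (-not (Test-Path (Join-Path $DataPath "$name.csv"))) {
            $name
        }
    }
}

# Demo with a temporary folder: SERVER02 already has a file, so it is skipped
$dataPath = Join-Path ([System.IO.Path]::GetTempPath()) 'PendingDemo'
New-Item -Path $dataPath -ItemType Directory -Force | Out-Null
Remove-Item (Join-Path $dataPath '*.csv') -ErrorAction SilentlyContinue
Set-Content -Path (Join-Path $dataPath 'SERVER02.csv') -Value 'Name'

$pending = @(Get-PendingComputer -ComputerName 'SERVER01', 'SERVER02', 'SERVER03' -DataPath $dataPath)
$pending
```

Each rerun then works only on the computers that did not respond last time, and deleting a computer's file puts it back on the list.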

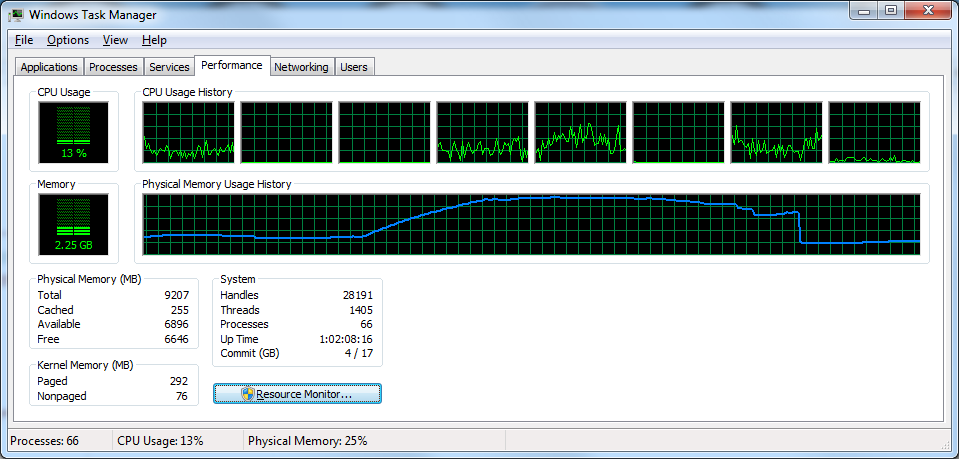

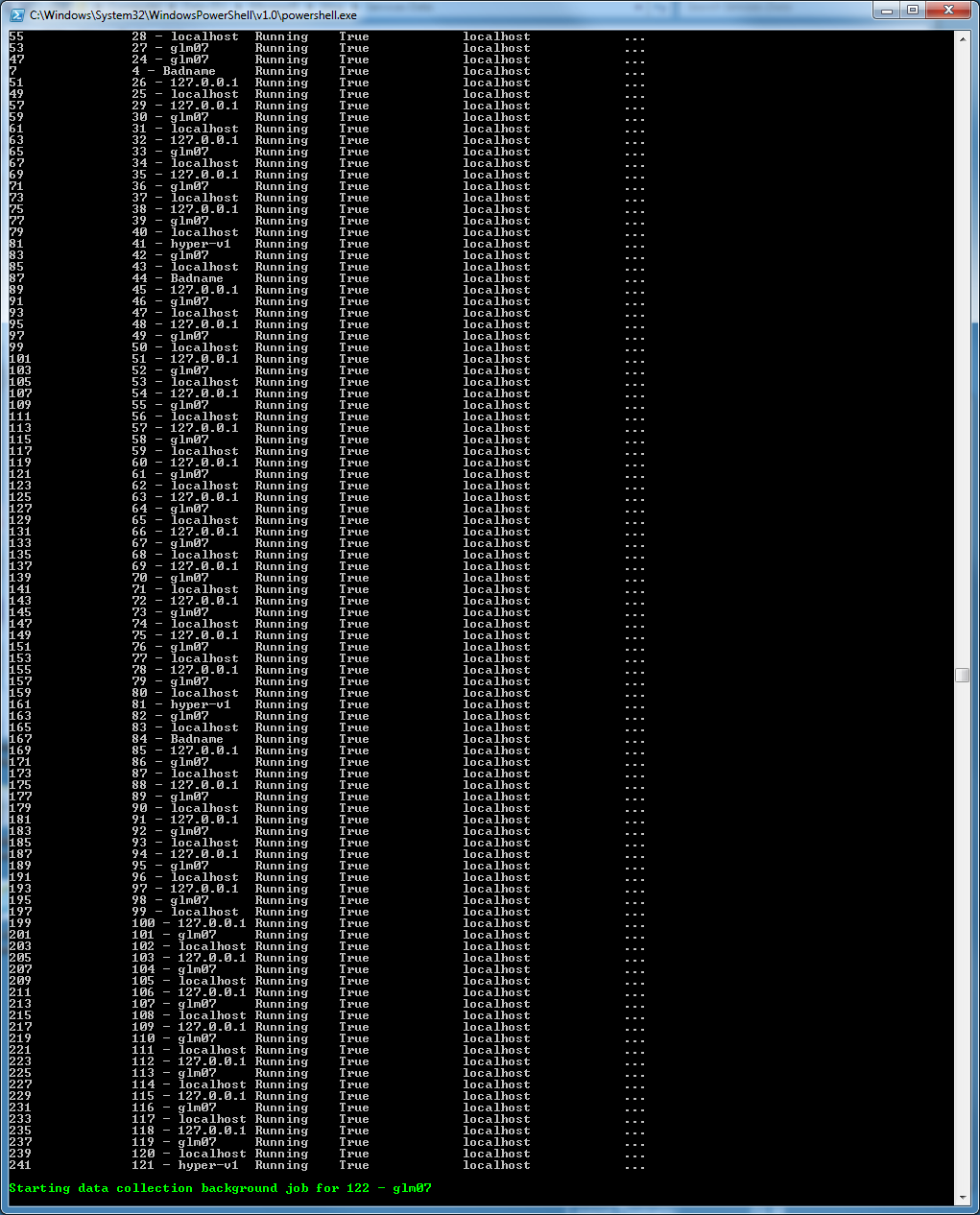

Yesterday, running out of resources was avoided with a crude implementation of the producer–consumer design pattern. This issue needs to be revisited because the script design relied on the time it took the consumer to write the data to Excel, which naturally limited how quickly new jobs were started. Writing to a file is much faster, so the script could run out of resources again.

This happens when too many jobs run at the same time. In fact, the more jobs that run concurrently, the longer each one takes to complete, which can become a vicious circle.

Strangely enough, the performance of the script can be increased by slowing it down! I slow the script down a bit by using the Start-Sleep cmdlet.

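```powershell
# Throttle: if too many jobs are queued, pause briefly before starting another
if (@(Get-Job).Count -gt 15)
{
    Start-Sleep -Milliseconds 250
    Write-Host "Slow down!" -ForegroundColor Yellow
}

# Get-CompletedJobs is a function defined in the full script (not a built-in
# cmdlet); it processes the jobs that have finished
if (@(Get-Job).Count -gt 5) { Get-CompletedJobs }
```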

Slowing down the rate at which new jobs are started allows existing jobs to finish and be processed. This helps keep the job queue within the limits of the computer’s resources. For example, the following 500-computer test run took 8 minutes and 3 seconds after it ran into resource contention.

After the lines were added to slow the script down, the same test run completed in 3 minutes and 39 seconds.

The following screen capture shows these new lines in action after restarting a 5,000-computer test run. It also shows the optimization described earlier, which uses the CSV files as indicators that a computer has already been processed:

This is not an exact science; you will need to experiment with these numbers based on your computer’s resources and the time it takes to get and process the information.
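Because the thresholds need tuning, it can help to make them parameters. The following sketch wraps the same slow-down idea (sleep while too many jobs are queued, and collect finished jobs before starting new ones) in a hypothetical `Invoke-ThrottledJob` function. It tracks its own jobs in a list instead of calling `Get-Job`, and a trivial script block stands in for the WMI query, so treat it as an illustration rather than Georges's implementation.

```powershell
# Hypothetical helper, not part of the original script: starts one background
# job per input item, but sleeps whenever $MaxJobs jobs are already queued and
# harvests finished jobs before starting new ones.
function Invoke-ThrottledJob {
    param(
        [object[]]$InputObject,
        [scriptblock]$ScriptBlock,
        [int]$MaxJobs = 15,
        [int]$SleepMilliseconds = 250
    )
    $jobs    = New-Object System.Collections.ArrayList
    $results = New-Object System.Collections.ArrayList

    foreach ($item in $InputObject) {
        # Slow down: wait until the queue has room again
        while ($jobs.Count -ge $MaxJobs) {
            Start-Sleep -Milliseconds $SleepMilliseconds
            $finished = @($jobs | Where-Object { $_.State -in 'Completed', 'Failed', 'Stopped' })
            foreach ($job in $finished) {
                [void]$results.AddRange(@(Receive-Job -Job $job))
                Remove-Job -Job $job
                $jobs.Remove($job)
            }
        }
        [void]$jobs.Add((Start-Job -ScriptBlock $ScriptBlock -ArgumentList $item))
    }

    # Drain whatever is still queued once every item has been started
    foreach ($job in $jobs) {
        [void]$results.AddRange(@(Receive-Job -Job $job -Wait))
        Remove-Job -Job $job
    }
    $results
}

# Demo with a trivial script block instead of a WMI query
$doubled = @(Invoke-ThrottledJob -InputObject (1..6) -ScriptBlock { param($n) $n * 2 } -MaxJobs 2 -SleepMilliseconds 100)
$doubled
```

To compare settings, wrap a few runs against a sample of your computers in `Measure-Command` and vary `-MaxJobs` and `-SleepMilliseconds`.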

This latest version of the script gets these numbers:

- 100 computers: 1 minute 19 seconds
- 250 computers: 1 minute 56 seconds
- 500 computers: 3 minutes 39 seconds
- 1,000 computers: 7 minutes 5 seconds
- 5,000 computers: 40 minutes 20 seconds
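Dividing each run time by the number of computers shows how the script scales. The following snippet only reworks the figures listed above; once there are 250 or more computers, the cost levels off at under half a second per computer.

```powershell
# Run times reported above for the final version of the collection script
$runs = @(
    [pscustomobject]@{ Computers = 100;  Duration = '00:01:19' }
    [pscustomobject]@{ Computers = 250;  Duration = '00:01:56' }
    [pscustomobject]@{ Computers = 500;  Duration = '00:03:39' }
    [pscustomobject]@{ Computers = 1000; Duration = '00:07:05' }
    [pscustomobject]@{ Computers = 5000; Duration = '00:40:20' }
)

# Divide each total by the number of computers to get a per-computer cost
$perComputer = foreach ($run in $runs) {
    $seconds = [TimeSpan]::Parse($run.Duration).TotalSeconds
    [pscustomobject]@{
        Computers          = $run.Computers
        TotalSeconds       = $seconds
        SecondsPerComputer = [math]::Round($seconds / $run.Computers, 2)
    }
}
$perComputer | Format-Table -AutoSize
```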

Not bad! However, there is one more thing to do to wrap up this project: all of the services that run under nondefault service accounts need to be extracted.

Here is a second script that will process all those CSV files, extract that information, and store it in another CSV file.

```powershell
Clear-Host
$startTime = Get-Date

$scriptPath       = Split-Path -Parent $MyInvocation.MyCommand.Definition
$customerDataPath = "$scriptPath\Services Data"
$reportPath       = "$scriptPath\Services Report"

# Wrap in @() so that .Count also works when only one file is found
$CSVfiles = @(Get-ChildItem $customerDataPath -Filter *.csv)

if ($CSVfiles.Count -ge 1)
{
    Write-Host "There are: $($CSVfiles.Count) data files"
}
else
{
    Write-Host "There are no data files in $customerDataPath." -ForegroundColor Red
    exit
}

New-Item -Path $scriptPath -Name "Services Report" -Force -ItemType Directory | Out-Null #Create report folder

$exceptions = @()

$CSVfiles |
ForEach-Object {
    $services = Import-Csv -Path $_.FullName
    "Processing $_"

    foreach ($service in $services)
    {
        # $service.startName

        ################################################
        # EXCEPTION SECTION
        # To be customized based on your criteria
        ################################################
        if ( $service.startName -notmatch "LocalService" `
        -and $service.startName -notmatch "Local Service" `
        -and $service.startName -notmatch "NetworkService" `
        -and $service.startName -notmatch "Network Service" `
        -and $service.startName -notmatch "LocalSystem" `
        -and $service.startName -notmatch "Local System")
        {
            Write-Host $service.startName -ForegroundColor Yellow
            $exceptions += $service
        } #if ($service.startName
    } #foreach ($service in $services)
} #ForEach-Object

$exceptions | Export-Csv "$reportPath\Non Standard Service Accounts Report.csv" `
    -Encoding ASCII -NoTypeInformation

$endTime = Get-Date
"" #blank line
Write-Host "-------------------------------------------------" -ForegroundColor Green
Write-Host "Script started at: $startTime" -ForegroundColor Green
Write-Host "Script completed at: $endTime" -ForegroundColor Green
Write-Host "Script took $($endTime - $startTime)" -ForegroundColor Green
Write-Host "-------------------------------------------------" -ForegroundColor Green
"" #blank line
```

This script took just under 10 minutes to run. In total, these two optimized scripts processed 5,000 computers in less than an hour. Not bad!
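The six `-notmatch` tests in the exception section can also be written as a single regular expression, because a value that matches none of the six patterns is exactly a value that does not match their alternation. The sketch below assumes hand-built sample rows (including a hypothetical CONTOSO domain account) in place of the imported CSV data.

```powershell
# Sketch of the exception filter as one case-insensitive regular expression.
# 'Local ?Service' matches both "LocalService" and "Local Service", and so on,
# so this is equivalent to the six -notmatch tests in the report script.
$defaultAccountPattern = 'Local ?Service|Network ?Service|Local ?System'

# Hypothetical sample rows standing in for Import-Csv output
$services = @(
    [pscustomobject]@{ Name = 'Spooler';     StartName = 'LocalSystem' }
    [pscustomobject]@{ Name = 'W32Time';     StartName = 'NT AUTHORITY\LocalService' }
    [pscustomobject]@{ Name = 'Dnscache';    StartName = 'NT AUTHORITY\NetworkService' }
    [pscustomobject]@{ Name = 'MSSQLSERVER'; StartName = 'CONTOSO\svc-sql' }
    [pscustomobject]@{ Name = 'BackupAgent'; StartName = '.\backupuser' }
)

# Keep only services that run under a nondefault account
$exceptions = @($services | Where-Object { $_.StartName -notmatch $defaultAccountPattern })
$exceptions | Format-Table Name, StartName
```

Collecting the results through the pipeline also avoids `$exceptions += $service`, which builds a new array on every addition; with thousands of files, piping `Import-Csv` output straight into the filter is a reasonable alternative.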

These scripts can be adapted with minor modifications to collect just about any kind of data. Today’s script targets large environments, while yesterday’s script is better suited to small and mid-size environments. The next time your manager tells you that the auditors are coming, you can sit back and smile. You will be ready.

~ Georges

Thank you, Georges. This has been a great series of blogs. The zip file that you will find in the Script Repository has all the files and scripts from Georges this week.

I invite you to follow me on Twitter and Facebook. If you have any questions, send email to me at scripter@microsoft.com, or post your questions on the Official Scripting Guys Forum. See you tomorrow. Until then, peace.

Ed Wilson, Microsoft Scripting Guy
