In this post, Sr. App Dev Manager Paul King shows us how to use Azure Monitor to query Azure Storage API logs for additional metrics.

A customer recently asked me how they could use Azure Storage to determine whether a blob had been accessed and, if so, how many times. I thought this was a pretty reasonable ask, so I began exploring.

The first place I looked was in Azure Monitor for metrics on the storage account. I can add a storage account to monitor and add the Blob namespace. The only metrics available are transactions and capacity. Since the transaction metric on its own does not distinguish between reads and writes, I thought I could filter it by API name. Unfortunately, GetBlob, the API I was looking for, is not available as a filter value.

I then spent some time looking at the diagnostic settings on the storage account to see what was available. Under diagnostic settings (classic) I noticed that you could enable metrics for Blob properties and enable API metrics. Perfect! I enabled these metrics on my storage account and verified through Storage Explorer that it was working. $logs was available as a folder in my Blob Container, and logs were being written that detailed my activity. Opening one of the log files, I could see entries that contained what I was looking for: the GetBlob API call and the file name requested.

1.0;2019-10-04T13:45:19.0937823Z;GetBlob;Success;200; … "/sa/blob/IMG_0986.JPG";
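To make the shape of an entry like the one above concrete, here is a minimal Python sketch that splits a version 1.0 log line into named fields. It is an illustration, not the code I used: the field names follow my reading of the Storage Analytics log format and should be checked against the documentation, `parse_log_line` is a hypothetical helper, and the sample values after the status code are made up, since the entry above is truncated.

```python
import csv
import html

# Leading field names of the version 1.0 log format, in order (assumed from the
# Storage Analytics log format documentation). A real entry has more fields
# after these thirteen; they are ignored here.
FIELD_NAMES = [
    "version_number", "request_start_time", "operation_type",
    "request_status", "http_status_code", "end_to_end_latency_in_ms",
    "server_latency_in_ms", "authentication_type", "requester_account_name",
    "owner_account_name", "service_type", "request_url",
    "requested_object_key",
]


def parse_log_line(line):
    """Split one semicolon-delimited log entry into a dict of named fields."""
    # The csv module copes with double-quoted fields that contain semicolons.
    values = next(csv.reader([line], delimiter=";", quotechar='"'))
    # Quoted fields may be HTML-encoded in the log, so decode any entities.
    return {name: html.unescape(value) for name, value in zip(FIELD_NAMES, values)}


# Hypothetical entry: everything after the 200 status code is invented.
sample = (
    "1.0;2019-10-04T13:45:19.0937823Z;GetBlob;Success;200;5;4;"
    "authenticated;sa;sa;blob;"
    '"https://sa.blob.core.windows.net/blob/IMG_0986.JPG";'
    '"/sa/blob/IMG_0986.JPG";'
)
record = parse_log_line(sample)
print(record["operation_type"], record["requested_object_key"])
```

The two fields printed at the end are the ones that matter for this scenario: the operation type and the key of the blob that was requested.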

I then investigated using Azure Monitor to query and analyze my logs. I drilled into this and found that storage accounts cannot automatically send their logs to Log Analytics. While I’m told that this functionality is coming, I needed a solution for my customer now.

After some additional research, I found that if you convert the log files to JSON format, you can post them to a Log Analytics Workspace. Since I was using Version 1 of the log file format, I found some code on GitHub here to do exactly that. Once you have the log file in JSON format, you can post it to any Log Analytics Workspace using the HTTP Data Collector API that is documented here. Once your data is posted, you can create a query in the Azure Portal, and save it there, that returns the names of the blobs that have been accessed. I wanted a list of blob names that had been accessed more than twice, so my query looked like this:
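For readers who want to see what posting to the HTTP Data Collector API involves, the following Python sketch builds a signed request without sending it. Treat it as an outline under stated assumptions: the signing scheme and endpoint reflect my understanding of the API documentation, and the workspace ID, key, and the helper names `build_signature` and `build_request` are placeholders for illustration.

```python
import base64
import email.utils
import hashlib
import hmac
import json
import urllib.request


def build_signature(workspace_id, shared_key, date, content_length):
    """Return the SharedKey Authorization header value for a POST to /api/logs."""
    string_to_sign = (
        f"POST\n{content_length}\napplication/json\n"
        f"x-ms-date:{date}\n/api/logs"
    )
    key_bytes = base64.b64decode(shared_key)  # the workspace key is base64 text
    digest = hmac.new(key_bytes, string_to_sign.encode("utf-8"), hashlib.sha256).digest()
    return f"SharedKey {workspace_id}:{base64.b64encode(digest).decode('ascii')}"


def build_request(workspace_id, shared_key, log_type, records):
    """Build (but do not send) the POST request carrying the JSON records."""
    body = json.dumps(records).encode("utf-8")
    date = email.utils.formatdate(usegmt=True)  # RFC 1123 date in GMT
    url = f"https://{workspace_id}.ods.opinsights.azure.com/api/logs?api-version=2016-04-01"
    headers = {
        "Content-Type": "application/json",
        "Authorization": build_signature(workspace_id, shared_key, date, len(body)),
        "Log-Type": log_type,  # the workspace appends _CL to this name
        "x-ms-date": date,
    }
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


# Placeholder credentials: substitute your own workspace ID and key.
demo_workspace_id = "00000000-0000-0000-0000-000000000000"
demo_key = base64.b64encode(b"not-a-real-key").decode("ascii")
demo_records = [
    {"operation_type": "GetBlob", "requested_object_key": "/sa/blob/IMG_0986.JPG"}
]
request = build_request(demo_workspace_id, demo_key, "StorageRecords", demo_records)
# urllib.request.urlopen(request) would send it; omitted so the sketch runs offline.
print(request.full_url)
```

A log type of StorageRecords is what produces the StorageRecords_CL table name, and string fields such as operation_type show up in the workspace with an _s suffix.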

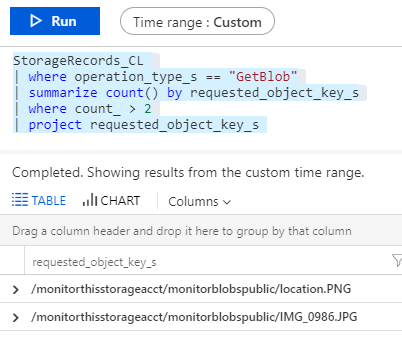

StorageRecords_CL | where operation_type_s == "GetBlob" | summarize count() by requested_object_key_s | where count_ > 2 | project requested_object_key_s

This is a Kusto query. In this case StorageRecords_CL is the name of the log entries I added to my Workspace through the HTTP Data Collector API. The query keeps the records whose operation_type_s field is GetBlob, counts them per blob, and retains only the blobs with a count greater than 2. It then returns only the names of those blobs. When I run this query, I get results that look like this:

Information on the Kusto query language is here with some quick pointers on using it with Azure Log Analytics here.
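If it helps to see the query's logic outside of Kusto, the same filter, count, and threshold steps can be mirrored in a few lines of Python. This is only an illustrative equivalent run against made-up records; `blobs_read_more_than` is a hypothetical helper and the record keys are assumed to match the JSON field names without the _s suffix.

```python
from collections import Counter


def blobs_read_more_than(records, threshold=2):
    """Return the blob keys with more than `threshold` GetBlob operations."""
    # where operation_type_s == "GetBlob" | summarize count() by requested_object_key_s
    counts = Counter(
        r["requested_object_key"] for r in records if r["operation_type"] == "GetBlob"
    )
    # where count_ > threshold | project requested_object_key_s
    return sorted(key for key, count in counts.items() if count > threshold)


# Made-up records: one blob read three times, one read twice, one write.
records = (
    [{"operation_type": "GetBlob", "requested_object_key": "/sa/blob/IMG_0986.JPG"}] * 3
    + [{"operation_type": "GetBlob", "requested_object_key": "/sa/blob/notes.txt"}] * 2
    + [{"operation_type": "PutBlob", "requested_object_key": "/sa/blob/IMG_0986.JPG"}]
)
result = blobs_read_more_than(records)
print(result)  # ['/sa/blob/IMG_0986.JPG']
```

The blob read exactly twice is excluded because the comparison is strictly greater than 2, matching the query.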

All of this could be automated with an Azure Function that posts the logs for you, and an alert could be set up on top of it to email you when you have reached your threshold. Unfortunately, there is no way to include the results of that search in the alert email today. Instead, you could use the webhook actions documented here. A webhook lets you add a JSON payload that defines the search query to run and specifies that the search results should be returned.
