As you may know from my previous posts “The ‘in’-modifier and the readonly structs in C#” and “Performance traps of ref locals and ref returns in C#”, structs are trickier than you might think. Mutability aside, the behavior of readonly and non-readonly structs in “readonly” contexts is very different.

Structs are meant for high-performance scenarios, and to use them efficiently you should know quite a bit about the hidden operations the compiler emits to enforce struct immutability.

Here is a short overview of the caveats you should remember:

- Large structs that are passed or returned by value can cause performance issues on hot execution paths.

- x.Y causes a defensive copy of x if:

  - x is a readonly field,

  - the type of x is a non-readonly struct, and

  - Y is not a field.

The same rules apply when x is an ‘in’-parameter, a ‘ref readonly’ local variable, or the result of a method invocation that returns a value by readonly reference.
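The rules above are easiest to observe with a deliberately mutable struct. The following is a minimal sketch, assuming C# 7.2 or later; Counter and DefensiveCopyDemo are hypothetical names. Increment() is not a field and Counter is not a readonly struct, so calling it through a readonly field or an ‘in’-parameter runs against a hidden copy and the mutation is silently lost.

```csharp
// Hypothetical mutable, non-readonly struct whose only job is to make
// defensive copies observable.
public struct Counter
{
    public int Value;

    // A non-readonly method that mutates 'this'.
    public void Increment() => Value++;
}

public static class DefensiveCopyDemo
{
    // Readonly field of a non-readonly struct type.
    private static readonly Counter s_readonlyField;

    public static int IncrementReadonlyField()
    {
        // s_readonlyField is a readonly field, Counter is a non-readonly struct,
        // and Increment is not a field: the compiler calls Increment on a copy.
        s_readonlyField.Increment();
        return s_readonlyField.Value; // still 0
    }

    public static int IncrementInParameter(in Counter counter)
    {
        // Same rules for an 'in'-parameter: the copy is mutated, not the argument.
        counter.Increment();
        return counter.Value;
    }

    public static int IncrementLocal()
    {
        // A mutable local needs no defensive copy, so the mutation sticks.
        var counter = new Counter();
        counter.Increment();
        return counter.Value; // 1
    }
}
```

Nothing at the call sites hints at the copies, which is what makes code relying on these rules easy to break.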

That is quite a few rules to remember. And, most importantly, code that relies on these rules is very brittle. How many people will notice that changing public readonly int X; to public int X { get; } in a widely used non-readonly struct may have a non-negligible performance impact? And how easy is it to spot that passing a parameter with the ‘in’-modifier instead of by value may actually cause a performance regression? This is definitely possible if a property of the ‘in’-parameter is accessed in a loop, causing a defensive copy on each iteration.
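The field-versus-property trap can be sketched as follows; WithField, WithProperty and LoopSamples are hypothetical names. One caveat: since C# 8, the getter of an auto-implemented property such as public int X { get; } is implicitly a readonly member, so this sketch uses an expression-bodied getter to reproduce the C# 7.x behavior described here.

```csharp
// Hypothetical non-readonly struct exposing a readonly field.
public struct WithField
{
    public readonly long X;
    public WithField(long x) => X = x;
}

// The same data behind a property. The getter is expression-bodied rather than
// auto-implemented, because since C# 8 auto-implemented getters are readonly members.
public struct WithProperty
{
    private readonly long _x;
    public WithProperty(long x) => _x = x;
    public long X => _x; // non-readonly member of a non-readonly struct
}

public static class LoopSamples
{
    public static long SumField(in WithField s, int count)
    {
        long sum = 0;
        for (int i = 0; i < count; i++)
            sum += s.X; // field access: read through the reference, no copy
        return sum;
    }

    public static long SumProperty(in WithProperty s, int count)
    {
        long sum = 0;
        for (int i = 0; i < count; i++)
            sum += s.X; // getter call: the compiler emits a copy of 's' on every iteration
        return sum;
    }
}
```

Both methods return the same result; only the emitted IL differs. For an 8-byte struct the copy is cheap, but the same pattern on a large struct in a hot loop is the regression described above.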

These features are screaming for analyzers. And the scream was heard. Meet ErrorProne.NET: a set of analyzers that tells you when your code could be changed for better design and performance when dealing with structs.

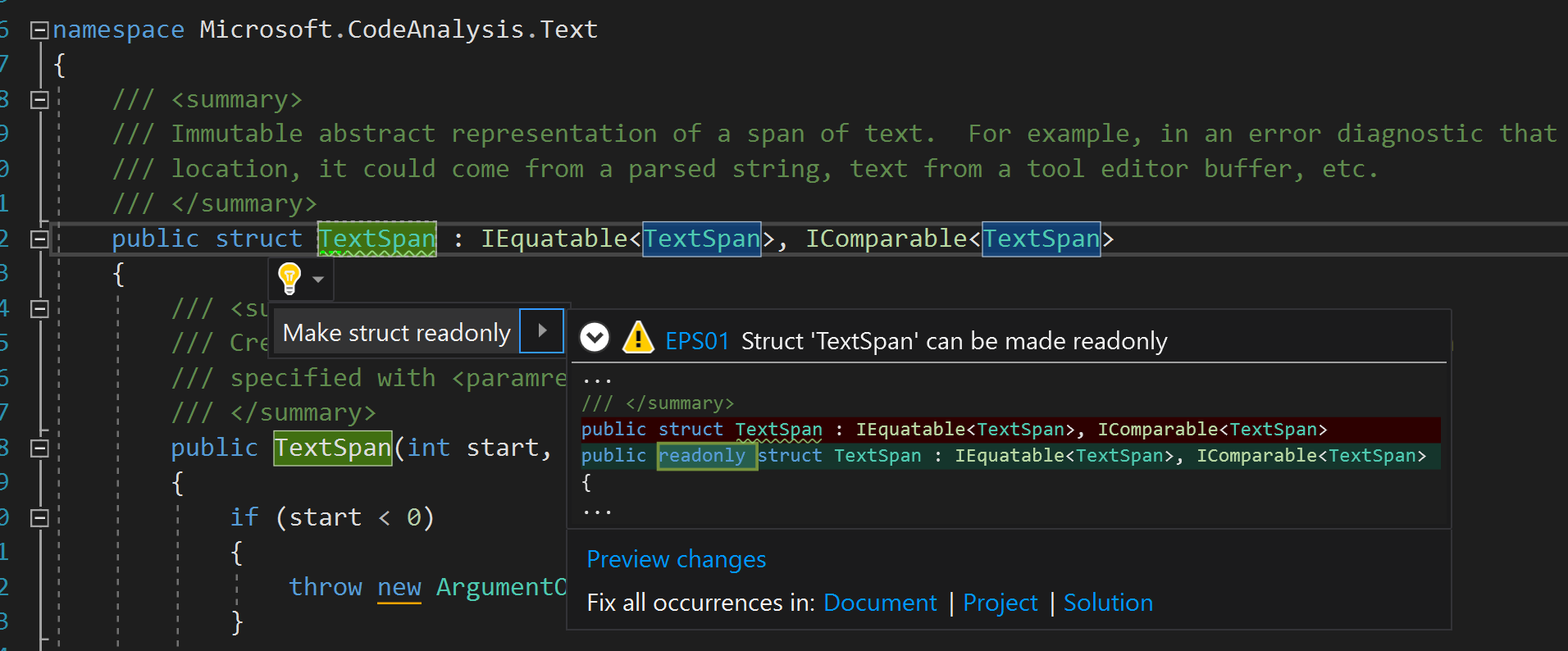

“Make struct ‘X’ readonly” analysis

The best way to avoid subtle bugs and negative performance implications with structs is to make them readonly whenever possible. The readonly modifier on a struct declaration clearly expresses the design intent (emphasizing that the struct is immutable) and helps the compiler avoid defensive copies in many of the contexts mentioned above.

Making a struct readonly is not a breaking change. You can safely run the fixer in batch mode and make all the structs in the entire solution readonly.
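The change itself is a single keyword. A minimal sketch, assuming C# 7.2 or later; Point is a hypothetical type that was already immutable, which is the situation in which adding readonly does not affect its callers.

```csharp
// Hypothetical immutable struct. The 'readonly' modifier makes immutability a
// compiler-checked part of the design: a non-readonly field, an auto-property
// with a setter, or a method assigning to 'this' would now be a compile error.
public readonly struct Point
{
    public Point(double x, double y)
    {
        X = x;
        Y = y;
    }

    public double X { get; }
    public double Y { get; }

    // Every member of a readonly struct is implicitly readonly, so calling it
    // through an 'in'-parameter or a readonly field never needs a defensive copy.
    public double LengthSquared() => X * X + Y * Y;

    // "Mutation" returns a new value instead of changing 'this'.
    public Point WithX(double x) => new Point(x, Y);
}
```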

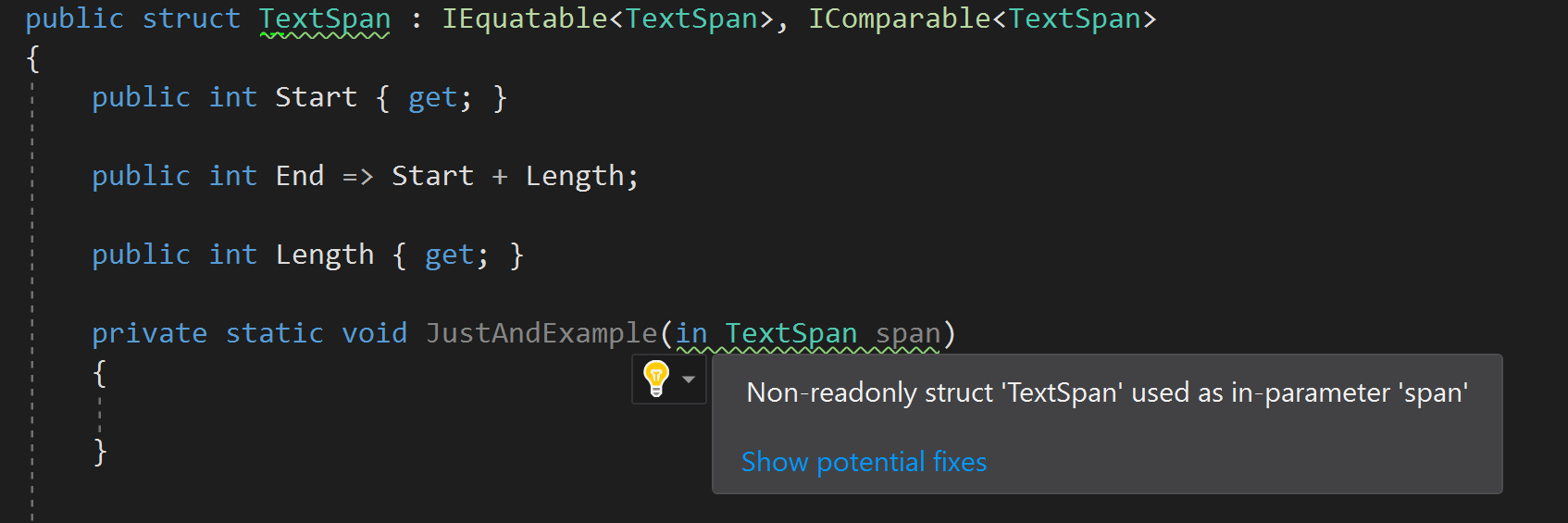

‘Ref readonly’ friendliness

The next step is to detect when new features like the ‘in’-modifier, ‘ref readonly’ locals, etc. are safe to use, meaning that the compiler would not create hidden defensive copies that could harm performance.

You can think of three categories of structs:

- ‘ref-readonly’-friendly structs that will never cause defensive copies,

- ‘ref-readonly’-unfriendly structs that will always cause a defensive copy when used in “readonly” contexts, and

- neutral structs: structs that may or may not cause defensive copies, depending on which member is used in a “readonly” context.

The first category comprises readonly structs and POCO-structs. The compiler never emits a defensive copy for a readonly struct, and POCO-structs are safe to use in “readonly” contexts as well, because field access is considered safe and causes no defensive copies.

The second category consists of non-readonly structs with no exposed fields. In this case, any access to an exposed member in a “readonly” context will cause a defensive copy.

The last category consists of structs with public/internal fields and public/internal properties or methods. In this case, the compiler may or may not emit a defensive copy, depending on the member being used.
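The three categories might look like this. A minimal sketch, assuming C# 7.2 or later; all type names are hypothetical. The “unfriendly” and neutral structs use expression-bodied getters, which are non-readonly members in every C# version (unlike auto-implemented getters, which became implicitly readonly in C# 8).

```csharp
// Category 1a: a readonly struct. No member access ever needs a defensive copy.
public readonly struct FriendlyReadOnly
{
    public FriendlyReadOnly(long a, long b) { A = a; B = b; }
    public long A { get; }
    public long B { get; }
}

// Category 1b: a POCO-struct that exposes only fields. Field access never copies.
public struct FriendlyPoco
{
    public long A;
    public long B;
}

// Category 2: non-readonly struct with no exposed fields. Every exposed member is
// a non-readonly getter or method, so every access from a "readonly" context copies.
public struct Unfriendly
{
    private long _a;
    private long _b;
    public Unfriendly(long a, long b) { _a = a; _b = b; }
    public long A => _a;
    public long Sum() => _a + _b;
}

// Category 3: neutral. Field access is free; property or method access copies.
public struct Neutral
{
    public long A;
    private long _b;
    public Neutral(long a, long b) { A = a; _b = b; }
    public long B => _b;
}

public static class CategoryDemo
{
    public static long Use(in FriendlyReadOnly r, in FriendlyPoco p, in Unfriendly u, in Neutral n)
    {
        long total = r.A + p.A; // no hidden copies
        total += u.A;           // hidden copy of 'u'
        total += n.A;           // no hidden copy: field access
        total += n.B;           // hidden copy of 'n': property access
        return total;
    }
}
```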

This separation makes it possible to warn immediately if an “unfriendly” struct is passed by ‘in’, stored in a ‘ref readonly’ local, etc.

The analyzer does not warn if an “unfriendly” struct is used as a readonly field, because there is no alternative. The ‘in’-modifier and ‘ref readonly’ locals are optimizations designed specifically to avoid redundant copies. If a struct is “unfriendly” to these features, you have other options: pass the argument by value, or store a copy in a local variable. Readonly fields are different in this regard: if you want to make a type immutable, you have to use readonly fields. Remember: code should be clean and elegant first, and only then fast.
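The “store a copy in a local variable” option from the paragraph above can be sketched like this, assuming C# 7.2 or later; ValueWindow and Histogram are hypothetical. The readonly field stays, and the loop works on one explicit copy instead of a hidden copy per property access.

```csharp
// Hypothetical "unfriendly" struct: non-readonly, exposes only properties.
public struct ValueWindow
{
    private readonly int _start;
    private readonly int _end;
    public ValueWindow(int start, int end) { _start = start; _end = end; }
    public int Start => _start;
    public int End => _end;
}

public sealed class Histogram
{
    // Kept readonly: the class is meant to be immutable.
    private readonly ValueWindow _window;

    public Histogram(ValueWindow window) => _window = window;

    public int CountInWindow(int[] values)
    {
        // One explicit copy into a mutable local. Reading _window.Start and
        // _window.End directly inside the loop would copy the field on every access.
        ValueWindow window = _window;
        int count = 0;
        foreach (int v in values)
        {
            if (v >= window.Start && v < window.End)
                count++;
        }
        return count;
    }
}
```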

Hidden copy analysis

The compiler does a lot of work behind the scenes. As we saw in the previous post, it can be quite hard to see when a defensive copy occurs.

The analyzer can detect the following cases:

- Hidden copy of a readonly field.

- Hidden copy of an ‘in’-argument.

- Hidden copy of a ‘ref readonly’ local.

- Hidden copy of a ‘ref readonly’ return value.

- Hidden copy when an extension method that takes ‘this’ by value is called on a struct instance.

```csharp
public struct NonReadOnlyStruct
{
    public readonly long PublicField;
    public long PublicProperty { get; }
    public void PublicMethod() { }

    private static readonly NonReadOnlyStruct _ros;

    public static void Samples(in NonReadOnlyStruct nrs)
    {
        // Ok. Public field access causes no hidden copies
        var x = nrs.PublicField;

        // Ok. No hidden copies.
        x = _ros.PublicField;

        // Hidden copy: Property access on 'in'-parameter
        // (C# 7.x; since C# 8 auto-property getters are implicitly readonly, so no copy)
        x = nrs.PublicProperty;

        // Hidden copy: Method call on readonly field
        _ros.PublicMethod();

        ref readonly var local = ref nrs;

        // Hidden copy: method call on ref readonly local
        local.PublicMethod();

        // Hidden copy: method call on ref readonly return
        Local().PublicMethod();

        ref readonly NonReadOnlyStruct Local() => ref _ros;
    }
}
```
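The last case in the list, an extension method that takes ‘this’ by value, does not appear in the sample above. A minimal sketch, assuming C# 7.2 or later, with a hypothetical 48-byte struct: the by-value extension copies the whole receiver on every call, while the ‘this in’ variant passes a readonly reference instead.

```csharp
// Hypothetical large, non-readonly struct (six doubles = 48 bytes).
public struct Matrix3x2Like
{
    public double M11, M12, M21, M22, M31, M32;
}

public static class MatrixExtensions
{
    // Receiver taken by value: every call copies all 48 bytes.
    public static double DiagonalSumByValue(this Matrix3x2Like m) => m.M11 + m.M22;

    // Receiver taken by readonly reference: no copy at the call site, and the
    // body only reads fields, so no defensive copy is needed inside either.
    public static double DiagonalSumByRef(this in Matrix3x2Like m) => m.M11 + m.M22;
}
```

Both calls look identical at the call site (m.DiagonalSumByValue() vs. m.DiagonalSumByRef()), which is why this copy is easy to miss without an analyzer.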

Please note that the analyzers emit a diagnostic only when the struct size is 16 bytes or more.
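For a quick sense of which structs cross that threshold, the runtime size of a struct can be checked with Unsafe.SizeOf&lt;T&gt;(). A minimal sketch, assuming a modern .NET (5 or later) where System.Runtime.CompilerServices.Unsafe is part of the framework; the types and the StructSize helper are hypothetical, and this is a runtime illustration of the 16-byte threshold, not a description of how ErrorProne.NET itself computes struct sizes.

```csharp
using System.Runtime.CompilerServices;

// Hypothetical structs just below and exactly at the 16-byte threshold.
public struct EightBytes { public long A; }
public struct SixteenBytes { public long A; public long B; }

public static class StructSize
{
    // Hypothetical helper mirroring the threshold stated above.
    public const int DiagnosticThreshold = 16;

    // Unsafe.SizeOf<T>() returns the size of T as laid out by the runtime.
    public static bool IsAtOrAboveThreshold<T>() where T : struct =>
        Unsafe.SizeOf<T>() >= DiagnosticThreshold;
}
```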

Using analyzers on real projects

Passing large structs by value and the defensive copies made by the compiler have a non-negligible performance impact; at least, the benchmarks tell us so. But how do these things affect real-life applications in terms of end-to-end time?

To test the analyzers on real code, I ran them on two projects: the Roslyn project and an internal project I’m currently working on at Microsoft (a monolithic desktop app with strict performance requirements), which I’ll call “Project D” for clarity.

Here are some results:

- Performance-sensitive projects most likely have lots of structs, and most of them could be made readonly. For instance, the analyzer detected about 400 structs that could be readonly in the Roslyn project, and about 300 in Project D.

- Performance-sensitive projects should have a relatively small number of hidden copies. I found only a handful of cases in the Roslyn project, because most of its structs expose public fields instead of public properties, which avoids defensive copies when the structs are stored in readonly fields. The number of hidden copies was higher for Project D, because at least half of its structs have get-only properties instead.

- Passing even fairly large structs with the ‘in’-modifier will most likely have a very small (if any visible) impact on end-to-end time.

I changed all 300 structs in Project D to be readonly and then updated hundreds of usages to pass them with the ‘in’-modifier. Then I measured the end-to-end time in different performance scenarios. The differences were statistically insignificant.

Do my measurements mean that the features are useless? Not really.

Working on a project with high performance requirements (like Roslyn or “Project D”) means that a lot of people have spent a lot of time on different kinds of optimizations. Indeed, in some places in our code structs were passed by ‘ref’, and some fields were declared without the ‘readonly’ modifier to avoid defensive copies. The lack of performance benefits from passing structs by ‘in’ could simply mean that the code is already well optimized and there are no excessive struct copies on hot paths.
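The two pre-‘in’ idioms mentioned here, a non-readonly field and a ‘ref’ parameter, can be sketched as follows. BigStruct and Cache are hypothetical, assuming any C# version with expression-bodied members; the point is the shape of the workaround, not a recommendation.

```csharp
// Hypothetical 40-byte non-readonly struct with a non-readonly method.
public struct BigStruct
{
    private long _a, _b, _c, _d, _e;

    public BigStruct(long a, long b, long c, long d, long e)
    {
        _a = a; _b = b; _c = c; _d = d; _e = e;
    }

    public long Sum() => _a + _b + _c + _d + _e;
}

public sealed class Cache
{
    // Deliberately NOT readonly: on a readonly field, every Sum() call would
    // first copy all 40 bytes into a hidden temporary.
    private BigStruct _data;

    public Cache(BigStruct data) => _data = data;

    public long Total() => _data.Sum();

    // Passing by 'ref' avoids both the by-value copy and the defensive copy,
    // at the cost of allowing the callee to mutate the caller's variable.
    public static long TotalOf(ref BigStruct data) => data.Sum();
}
```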

What should I do with these features?

For me, the readonly modifier for structs is a no-brainer. If a struct is immutable, then the ‘readonly’ modifier is just an explicit enforcement of this design decision, and the lack of defensive copies for such structs is a bonus.

My guideline today is: If you can make a struct readonly, then definitely make it readonly.

Other features are a bit more subtle.

Premature optimization vs. premature pessimization?

Herb Sutter, in his amazing book “C++ Coding Standards: 101 Rules, Guidelines, and Best Practices”, introduces the notion of “premature pessimization”:

“All other things being equal, notably code complexity and readability, certain efficient design patterns and coding idioms should just flow naturally from your fingertips and are no harder to write than the pessimized alternatives. This is not premature optimization; it is avoiding gratuitous pessimization.”

From my perspective, the ‘in’-parameter falls into this bucket. If you know that a struct is relatively large (around 40 bytes or more), you may pass it by ‘in’ by default. The cost of the ‘in’-modifier is relatively small because you don’t have to update the callers, but the benefits could be real.
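Here is what “you don’t have to update the callers” looks like in practice. A minimal sketch, assuming C# 7.2 or later; Order and Pricing are hypothetical. Order is a readonly struct, so using its members through the ‘in’-parameter never causes a defensive copy.

```csharp
// Hypothetical 40-byte readonly struct (five longs).
public readonly struct Order
{
    public Order(long id, long customerId, long quantity, long unitPriceCents, long discountCents)
    {
        Id = id;
        CustomerId = customerId;
        Quantity = quantity;
        UnitPriceCents = unitPriceCents;
        DiscountCents = discountCents;
    }

    public long Id { get; }
    public long CustomerId { get; }
    public long Quantity { get; }
    public long UnitPriceCents { get; }
    public long DiscountCents { get; }

    public long TotalCents => Quantity * UnitPriceCents - DiscountCents;
}

public static class Pricing
{
    // Previously: public static long Total(Order order) => order.TotalCents;
    // Only the signature changes; existing call sites compile as-is.
    public static long Total(in Order order) => order.TotalCents;
}
```

An existing call such as Pricing.Total(order) needs no changes; Pricing.Total(in order) is also accepted and makes the by-reference intent explicit at the call site.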

Ref readonly locals and ref readonly returns, on the other hand, are different. I would say that you should use these features in library code, and use them in application code only when profiling shows that the copy operation is actually a problem. These features require more work to use, and they are harder for the reader to understand.
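A typical library-side use is a collection that hands out readonly references to its storage. A minimal sketch, assuming C# 7.2 or later; Sample and SampleBuffer are hypothetical types.

```csharp
// Hypothetical 32-byte readonly struct (four doubles).
public readonly struct Sample
{
    public Sample(double a, double b, double c, double d) { A = a; B = b; C = c; D = d; }
    public double A { get; }
    public double B { get; }
    public double C { get; }
    public double D { get; }
}

// Hypothetical library type that returns readonly references to its elements.
public sealed class SampleBuffer
{
    private readonly Sample[] _items;

    public SampleBuffer(int capacity) => _items = new Sample[capacity];

    public void Set(int index, in Sample value) => _items[index] = value;

    // 'ref readonly' return: callers can read an element without copying it,
    // but cannot write through the returned reference.
    public ref readonly Sample this[int index] => ref _items[index];
}
```

A caller that wants to avoid the copy has to opt in with ref readonly var s = ref buffer[i];, while Sample s = buffer[i]; still copies. That extra ceremony at each call site is part of why these features are harder to read.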

Conclusion

- Use readonly modifier on structs whenever possible.

- Consider using ‘in’-modifier for large structs.

- Consider using ref readonly locals and ref readonly returns in library code, or when profiling tells you that they are useful.

- Use ErrorProne.NET to detect issues in your code, and share your results ;)

Additional links

- ErrorProne.NET source code on github.

- ErrorProne.NET Structs analyzer on the Visual Studio Marketplace.

- ErrorProne.NET Structs on NuGet.

- “The ‘in’-modifier and the readonly structs in C#”

- “Performance traps of ref locals and ref returns in C#”
