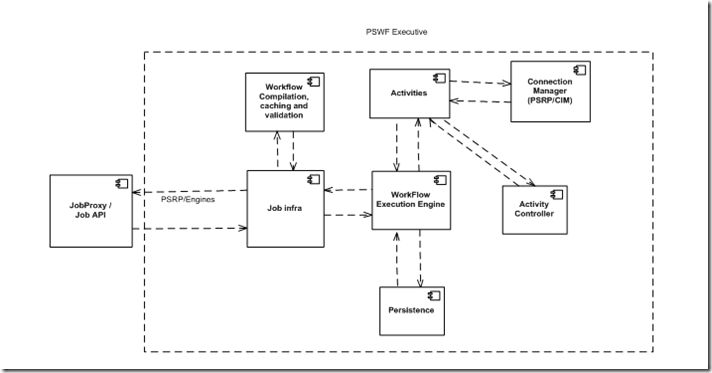

This is the second part of our post on the high-level architecture of Windows PowerShell Workflow. Part 1 provided an overview of the architecture and its components. This post goes into more detail on the various subcomponents and provides some insight into the internals of Windows PowerShell Workflow. The component diagram for the PowerShell Workflow Executive is repeated from Part 1 for reference.

Each of the components in the diagram is discussed in detail below.

1. Workflow Compilation, Caching and Validation

Windows Workflow Foundation works with Activity objects. An executable workflow is simply a collection of activity objects, where each step in the workflow corresponds to an activity in the collection. The activity objects in a workflow can be constructed programmatically or from their eXtensible Application Markup Language (XAML) definition. In Windows PowerShell Workflow, we use the XAML definition of an activity.

1.1 Compilation and Caching

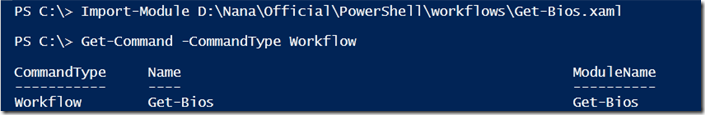

The interaction begins when a user imports a XAML file into a Windows PowerShell session, either a console session or a session in the PowerShell Workflow session configuration. XAML files are treated like modules in PowerShell, so they are imported by using the Import-Module cmdlet.

Here is what happens when a XAML file is imported:

1. The XAML file contents (and any dependencies) are read and compiled into an executable workflow using the ActivityXamlServices class.

2. The Activity object, the XAML definition, and any dependency information are stored in a process-wide cache.

We do this because compiling a XAML definition into an executable workflow is a lengthy operation that we don’t want to repeat.

3. The workflow's parameters are extracted, and a PowerShell function with the same name as the XAML file that contained the workflow definition is synthesized. The function has the same parameters as the workflow, plus all the common workflow parameters that PowerShell provides. This synthesized function references the workflow that was compiled in step 1 and stored in the process-wide cache.

When the user executes the workflow, this PowerShell function is actually executed.

4. When multiple clients connect to the workflow session configuration, all of these connections are directed to the same process (session configurations warrant a blog post of their own). In this case, each session contains its own synthesized function, but all of these functions refer to the same entry in the cache.
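The import steps above can be modeled with a small Python sketch. It is only an illustration of the idea, not the actual .NET implementation: `WorkflowCache`, `Session`, and `compile_xaml` are hypothetical names, and `compile_xaml` merely stands in for the expensive ActivityXamlServices compilation. The sketch also assumes the cache is keyed by workflow name.

```python
import threading


def compile_xaml(xaml_text):
    """Hypothetical stand-in for the expensive XAML-to-Activity compilation."""
    return {"activity": f"compiled<{len(xaml_text)} chars>"}


class WorkflowCache:
    """Hypothetical process-wide cache: one compiled entry per workflow name."""

    def __init__(self):
        self._entries = {}
        self._lock = threading.Lock()  # shared by every session in the process
        self.compile_count = 0

    def get_or_compile(self, name, xaml_text):
        with self._lock:
            entry = self._entries.get(name)
            if entry is None:
                # Compile only on the first import; later imports reuse the entry.
                entry = {"activity": compile_xaml(xaml_text), "xaml": xaml_text}
                self._entries[name] = entry
                self.compile_count += 1
            return entry


class Session:
    """Each session gets its own synthesized function pointing at the shared entry."""

    def __init__(self, cache):
        self.cache = cache
        self.functions = {}

    def import_workflow(self, name, xaml_text):
        entry = self.cache.get_or_compile(name, xaml_text)
        # The "synthesized function" only holds a reference to the cached activity.
        self.functions[name] = lambda **params: (entry["activity"], params)
        return self.functions[name]


cache = WorkflowCache()
s1, s2 = Session(cache), Session(cache)
s1.import_workflow("Get-Bios", "<Activity/>")
s2.import_workflow("Get-Bios", "<Activity/>")
print(cache.compile_count)  # 1
```

Two sessions import the same workflow, but compilation happens once and both synthesized functions return the same activity object.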

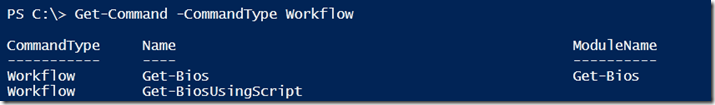

After a XAML file is imported, you can use Get-Command to look at the corresponding command. Note that its command type is "workflow," even though the underlying implementation is a function.

1.1.1 PowerShell Script to Workflow Conversion

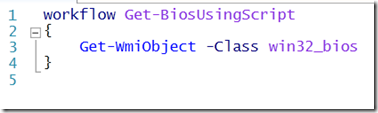

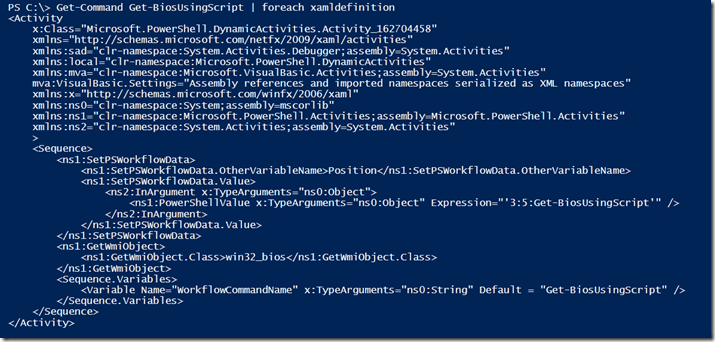

When you author a workflow in PowerShell (using the workflow keyword, as detailed in Part 1), the PowerShell parser converts it into an intermediate representation referred to in developer terms as an Abstract Syntax Tree (AST). The AST is then converted to a XAML document in memory. This XAML document goes through the same compilation and caching steps detailed above. It is possible to examine this XAML definition by using the Get-Command cmdlet. For example, consider the following workflow:

The converted XAML is available in the XamlDefinition property of each workflow.

Note that the Get-WmiObject cmdlet is converted into the GetWmiObject activity. There is also some additional transformation, such as the ‘3:5:Get-BiosUsingScript’ marker, which preserves position information from the script. This information is used in error messages so that they reference the position of a statement in the original PowerShell script rather than in the converted XAML document.
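To illustrate how such a marker could be turned back into a script location, here is a minimal Python sketch. It assumes the marker has the form line:column:workflowName, which is consistent with the example marker but is not documented in this post. `parse_position` and `format_error` are hypothetical helpers.

```python
def parse_position(marker):
    """Split a marker like '3:5:Get-BiosUsingScript' into (line, column, name).

    Assumption: the format is line:column:workflowName.
    """
    line, column, name = marker.split(":", 2)  # maxsplit keeps any ':' in the name
    return int(line), int(column), name


def format_error(marker, message):
    line, column, name = parse_position(marker)
    # Report the location in the original script, not in the generated XAML.
    return f"{name} at line {line}, column {column}: {message}"


print(format_error("3:5:Get-BiosUsingScript", "Access denied"))
# Get-BiosUsingScript at line 3, column 5: Access denied
```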

For both XAML-based and script-based workflows, the synthesized function is available in the ScriptBlock property of the workflow.

1.2 Validation

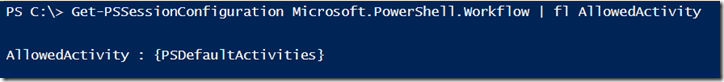

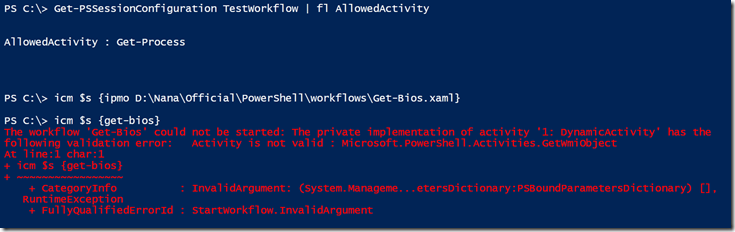

Validation is the process of verifying that all activities in the workflow are allowed. For performance reasons, the workflow is validated when it is executed, rather than at compile time. PowerShell allows you to define workflow sandboxes where you specify which activities can be part of a workflow in a given session configuration. If the workflow includes activities that are not allowed, validation fails and workflow execution will not progress.

PSDefaultActivities indicate that all PowerShell activities are allowed in the session configuration. Here is an example of validation failure.

Since the “TestWorkflow” custom session configuration was defined as a sandbox that allows only the Get-Process activity, the Get-Bios workflow fails because it uses the Get-WmiObject activity.
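The sandbox check can be sketched in Python as follows. This is an illustrative model, not PowerShell's implementation. `validate`, `ValidationError`, and the contents of `PS_DEFAULT_ACTIVITIES` are hypothetical, and the sketch treats PSDefaultActivities as a sentinel that expands to a set of built-in activity names.

```python
# Hypothetical, abbreviated set of activity names covered by PSDefaultActivities.
PS_DEFAULT_ACTIVITIES = {"Get-Process", "Get-WmiObject", "Get-Service"}


class ValidationError(Exception):
    pass


def validate(workflow_name, activities, allowed):
    """Raise ValidationError if any activity is outside the session's sandbox."""
    permitted = set(allowed)
    if "PSDefaultActivities" in permitted:
        # The sentinel expands to every default PowerShell activity.
        permitted |= PS_DEFAULT_ACTIVITIES
    disallowed = [a for a in activities if a not in permitted]
    if disallowed:
        raise ValidationError(
            f"{workflow_name}: activities not allowed: {', '.join(disallowed)}"
        )


# A sandbox that allows only Get-Process, as in the TestWorkflow example.
try:
    validate("Get-Bios", ["Get-WmiObject"], allowed=["Get-Process"])
except ValidationError as err:
    print(err)  # Get-Bios: activities not allowed: Get-WmiObject
```

Because validation runs at invocation time, the same compiled workflow can pass in one session configuration and fail in another.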

2. Workflow Jobs Infrastructure

As mentioned in Part 1, a workflow in PowerShell is always executed as a PowerShell job. The job infrastructure is not specific to PowerShell workflows; it is a general infrastructure provided by PowerShell that different modules can plug into. The PowerShell Workflow job infrastructure consists of the following four main components:

1. The PowerShell workflow job implementation (the PSWorkflowJob object).

2. A job manager that maintains the list of all workflow jobs and queries/filters them.

3. A workflow JobSourceAdapter, which is the interface between the PowerShell job cmdlets and the workflow job manager.

4. A workflow job throttle manager, which ensures that no more than a specified maximum number of jobs are running at any point in time.

Because workflow jobs are built on the common job infrastructure, the standard PowerShell job cmdlets are used to manage them, and these cmdlets interact with the workflow job source adapter.
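The behavior of the throttle manager (component 4) can be sketched as a simple admission queue. This Python model is an assumption-based illustration. `JobThrottleManager` and its methods are hypothetical, and first-in, first-out ordering of waiting jobs is assumed rather than stated in the post.

```python
from collections import deque


class JobThrottleManager:
    """Hypothetical throttle: at most max_running jobs run; the rest wait in FIFO order."""

    def __init__(self, max_running):
        self.max_running = max_running
        self.running = set()
        self.pending = deque()

    def submit(self, job_id):
        if len(self.running) < self.max_running:
            self.running.add(job_id)
        else:
            self.pending.append(job_id)

    def job_finished(self, job_id):
        self.running.discard(job_id)
        # A slot is free, so start the oldest waiting job, if any.
        if self.pending and len(self.running) < self.max_running:
            self.running.add(self.pending.popleft())


throttle = JobThrottleManager(max_running=2)
for job in ["job1", "job2", "job3"]:
    throttle.submit(job)
print(sorted(throttle.running), list(throttle.pending))  # ['job1', 'job2'] ['job3']
throttle.job_finished("job1")
print(sorted(throttle.running))  # ['job2', 'job3']
```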

2.1 Creation of Workflow Jobs

When the user runs the workflow from the command line, the ScriptBlock associated with the synthesized function is executed. Here is what happens:

1. The Activity object for the specified workflow is obtained from the process-wide cache and it is validated as specified in the validation section (1.2).

2. If validation succeeds, a workflow job is created for the specified workflow. When the user specifies multiple computers (by using the PSComputerName parameter), a child workflow job is created under the parent job for each targeted computer. This way, all workflow job instances created by the user in a particular invocation are managed together. (This is where caching comes in handy: because the same definition is used for every child job, compiling it only once saves a lot of time. Caching compiled workflows was one of the first performance improvements we made.)

3. The workflow job is submitted to the job throttle manager, which starts the job when the current throttling policy permits it.

4. If the user invoked the workflow by using the AsJob parameter, the parent job object is written to the pipeline. If the user did not specify the AsJob parameter, the synthesized function mimics synchronous behavior for the workflow.
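The four steps can be condensed into a Python sketch of what the synthesized function does. All names here (`WorkflowJob`, `ImmediateThrottle`, `invoke_workflow`, `accept_all`) are hypothetical. `ImmediateThrottle` is a toy that "runs" jobs instantly, and submitting the parent job as a whole to the throttle is an assumption.

```python
from dataclasses import dataclass, field


@dataclass
class WorkflowJob:
    name: str
    computer: str = "localhost"
    activity: object = None
    children: list = field(default_factory=list)


class ImmediateThrottle:
    """Toy stand-in for the job throttle manager: runs every child job at once."""

    def __init__(self):
        self.results = {}

    def submit(self, parent):
        self.results[id(parent)] = [f"{c.name} ran on {c.computer}" for c in parent.children]

    def wait(self, parent):
        return self.results[id(parent)]


def invoke_workflow(name, cached_activity, validate, throttle, computers=None, as_job=False):
    """Hypothetical model of the synthesized function's steps."""
    validate(cached_activity)                          # 1. validate the cached Activity
    parent = WorkflowJob(name, activity=cached_activity)
    for computer in computers or ["localhost"]:
        # 2. one child job per computer, all sharing the same compiled definition
        parent.children.append(WorkflowJob(name, computer, cached_activity))
    throttle.submit(parent)                            # 3. hand over to the throttle manager
    if as_job:
        return parent                                  # 4a. -AsJob: return the parent job
    return throttle.wait(parent)                       # 4b. otherwise behave synchronously


def accept_all(activity):
    """Validation stub that always succeeds."""


job = invoke_workflow("Get-Bios", "<compiled>", accept_all, ImmediateThrottle(),
                      computers=["srv1", "srv2"], as_job=True)
print([c.computer for c in job.children])  # ['srv1', 'srv2']
```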

3. Activities

Each workflow job instantiates a WorkflowApplication using the compiled workflow definition it retrieved from the process-wide cache. As mentioned in Part 1, WorkflowApplication provides a host for a single workflow instance. Because a workflow is a series of activities, the workflow engine is responsible for coordinating the execution of these activities.

PowerShell workflows can contain different kinds of activities. These can be broadly divided into three categories:

1. Activities supplied by Windows Workflow Foundation, such as the flow control activities (while loops, if statements, etc.)

2. Activities that represent PowerShell commands. This includes all the built-in activities supplied by PowerShell.

3. Special activities, like Pipeline, PowerShellValue, GetPSWorkflowData and SetPSWorkflowData. These are primarily “helper” activities and are mostly used when a workflow authored in the PowerShell language is translated into XAML. We will provide details about these activities in an upcoming blog post about workflow authoring.

PSActivity is the base class for all PowerShell activities. It has a number of subclasses that encapsulate differences in the remoting behavior of some commands. For example, some commands, like the WMI and CIM cmdlets, can handle remoting on their own; the activities wrapping these cmdlets derive from a special subclass that coordinates their execution. Other activities, such as Write-Output, are never executed remotely. Most activities, however, should be executed remotely but are not themselves capable of remote execution. These activities derive from PSRemotingActivity, and any activity derived from PSRemotingActivity gets default remoting support through PowerShell remoting. Because workflows are meant for multi-machine management, it is important that an activity can perform a task on a remote computer.

We wanted to make it really easy for developers to develop activities. Developers can concentrate on writing cmdlets for use in PowerShell, and then use a set of APIs to generate activities from those cmdlets. The generated activities have remoting capabilities and can be used in workflows to target different computers.
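The class hierarchy and the generation idea can be mirrored in Python as a rough analogy. PSActivity and PSRemotingActivity are the names used above. `LocalOnlyActivity`, `SelfRemotingActivity`, and `generate_activity` are hypothetical. The real generation APIs are .NET APIs that this sketch does not reproduce; here, `type()` merely builds a subclass dynamically.

```python
class PSActivity:
    """Base class for all PowerShell activities (illustrative model)."""

    command_name = None

    def execution_target(self, computer=None):
        return "in-process"


class LocalOnlyActivity(PSActivity):
    """Hypothetical: commands such as Write-Output that never run remotely."""


class SelfRemotingActivity(PSActivity):
    """Hypothetical: WMI/CIM-style commands that handle remoting themselves."""

    def execution_target(self, computer=None):
        # The cmdlet contacts the remote machine itself, so it stays in-process.
        return "in-process"


class PSRemotingActivity(PSActivity):
    """Default remoting support through PowerShell remoting."""

    def execution_target(self, computer=None):
        return f"remote:{computer}" if computer else "in-process"


def generate_activity(cmdlet_name, base=PSRemotingActivity):
    """Hypothetical generator: wrap a cmdlet in a remoting-capable activity class."""
    class_name = cmdlet_name.replace("-", "")
    return type(class_name, (base,), {"command_name": cmdlet_name})


GetService = generate_activity("Get-Service")
print(GetService.__name__, GetService().execution_target("srv1"))  # GetService remote:srv1
```

The developer only supplies the cmdlet name; remoting behavior is inherited from the base class.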

3.1 Determining Command Execution Type

As mentioned in Part 1, the command associated with an activity can be run in one of the following ways:

1. In process – command runs within the hosting process

2. Out-of-process – command is run in a separate PowerShell process

3. Remotely – command is run on a remote machine

How the command is run is determined as follows:

1. All CIM and WMI activities always run in-process (these are first-class citizens and have special handling within PowerShell) to provide maximal performance and scalability.

2. If the session configuration allows the activity to be run in-process, the command is executed within the host process.

3. If the session configuration specifies that the activity needs to run out of process, the Activity Controller runs it in a separate process.

4. If the PSComputerName parameter is used, the command is executed remotely against the specified computer.
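These rules can be expressed as a small decision function. The post lists the rules without stating their precedence, so the order used here is an assumption: CIM/WMI first, then PSComputerName, then the out-of-process setting. The function name and its parameters, including `out_of_process_activities` as a stand-in for the session configuration setting, are hypothetical.

```python
def choose_execution_mode(activity, is_cim_or_wmi, out_of_process_activities, computer_name=None):
    """Hypothetical decision procedure for where an activity's command runs.

    Assumed precedence: CIM/WMI, then PSComputerName, then the session
    configuration's out-of-process list, otherwise in-process.
    """
    if is_cim_or_wmi:
        return "in-process"        # CIM/WMI activities always run in the host process
    if computer_name:
        return "remote"            # PSComputerName targets a remote machine
    if activity in out_of_process_activities:
        return "out-of-process"    # run by the Activity Controller
    return "in-process"


print(choose_execution_mode("Get-Process", False, {"Get-Process"}))  # out-of-process
```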

3.2 Activity Execution

1. WMI and CIM activities are special in that they always run in-process and PowerShell directly talks to the underlying infrastructure to execute these commands – either locally or remotely.

2. Every other activity has one thing in common: each command (pertaining to an activity) in a workflow is executed in its own runspace. This is true for cases 2 through 4 in section 3.1.

3. When a command runs in-process, a runspace is obtained from a local cache of runspaces, the command is executed, and the runspace is cleaned up and returned to the cache. (Maintaining a cache of runspaces is a performance-enhancing feature of Windows PowerShell Workflow.)

4. When a command is run on a remote machine, a runspace is obtained from the Connection Manager, the command is executed, and the runspace is returned to the Connection Manager.

5. When a command needs to be run out-of-process, the command is submitted to the Activity Controller. The Activity Controller maintains a pool of PowerShell worker processes in which it runs the command and calls back when it is done.
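The in-process pattern (obtain, execute, clean up, return) can be sketched with a toy runspace cache in Python. `Runspace` and `RunspaceCache` are hypothetical stand-ins, and the "runspace" is just a dictionary of variables. The point is that state is reset before reuse, so each command effectively gets its own runspace even though objects are recycled.

```python
from collections import deque


class Runspace:
    """Toy runspace: just a bag of variables."""

    def __init__(self):
        self.variables = {}

    def invoke(self, command, **variables):
        self.variables.update(variables)
        return command(**self.variables)

    def reset(self):
        self.variables.clear()


class RunspaceCache:
    """Hypothetical local runspace cache: obtain, execute, clean up, return."""

    def __init__(self):
        self._free = deque()
        self.created = 0

    def _acquire(self):
        if self._free:
            return self._free.popleft()
        self.created += 1
        return Runspace()

    def run(self, command, **variables):
        runspace = self._acquire()
        try:
            return runspace.invoke(command, **variables)
        finally:
            runspace.reset()             # clean up so the next command starts fresh
            self._free.append(runspace)  # return it to the cache


cache = RunspaceCache()
print(cache.run(lambda x: x * 2, x=21))  # 42
print(cache.run(lambda **v: len(v)))     # 0 (no state left from the previous command)
print(cache.created)                     # 1 (the same runspace was reused)
```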

If an activity takes a long time to run, the workflow may be passivated, that is, unloaded from memory with all the information associated with it saved. After the activity completes, the workflow is reactivated (its state is loaded back into memory) and execution resumes.

4. Connection Manager

As mentioned in part 1, the Connection Manager is responsible for pooling, throttling and managing all connections from workflows within the process. The Connection Manager maintains a pool of connections indexed first by the computer name and then by the session configuration name.

4.1 Servicing a Connection Request

1. When an activity requests a connection to a specified computer, the Connection Manager checks whether there is an available connection that matches all the required remoting parameters (computer name, authentication, credentials, etc.). If it finds one, it marks the connection as busy and returns it.

2. If there is no match, it checks whether the maximum number of connections allowed to that computer has been reached. If not, it creates a new connection with the specified parameters.

3. If there are open connections, but they do not match the required remoting parameters, and the maximum allowed connections to a computer has been reached, the Connection Manager finds an open connection that is free, closes that connection, and then creates a new one with the required parameters. This ensures that no more than the maximum number of remote connections is created to a particular computer.

4. When there are no available existing connections, and a new connection cannot be created, the request is queued until a connection is freed up.

To maintain a fair servicing policy, Connection Manager ensures that queued requests are serviced before new incoming requests.
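The four rules and the fairness policy can be modeled in a single Python class. This is a sketch under stated assumptions, not the Connection Manager's code. `Connection`, `ConnectionManager`, and the `params` tuple shape are hypothetical, and the waiting queue is assumed to be kept per computer.

```python
from collections import defaultdict, deque
from dataclasses import dataclass


@dataclass
class Connection:
    computer: str
    params: tuple        # e.g. (authentication, credential); hypothetical shape
    busy: bool = True
    open: bool = True


class ConnectionManager:
    """Hypothetical sketch of the four connection-request rules."""

    def __init__(self, max_per_computer):
        self.max_per_computer = max_per_computer
        self.pool = defaultdict(list)     # computer -> [Connection]
        self.queues = defaultdict(deque)  # computer -> waiting (params, callback)

    def request(self, computer, params, callback):
        # Fairness: if requests are already waiting for this computer, queue behind them.
        if self.queues[computer] or not self._try_serve(computer, params, callback):
            self.queues[computer].append((params, callback))

    def release(self, conn):
        conn.busy = False
        waiting = self.queues[conn.computer]
        # Serve queued requests first, in arrival order.
        while waiting and self._try_serve(conn.computer, *waiting[0]):
            waiting.popleft()

    def _try_serve(self, computer, params, callback):
        conns = self.pool[computer]
        # Rule 1: reuse a free connection whose parameters match.
        for c in conns:
            if not c.busy and c.params == params:
                c.busy = True
                callback(c)
                return True
        # Rule 2: below the per-computer limit, open a new connection.
        if len(conns) < self.max_per_computer:
            return self._open(computer, params, callback)
        # Rule 3: at the limit, close a free (mismatched) connection and replace it.
        for c in conns:
            if not c.busy:
                c.open = False
                conns.remove(c)
                return self._open(computer, params, callback)
        # Rule 4: nothing can be served now; the caller queues the request.
        return False

    def _open(self, computer, params, callback):
        conn = Connection(computer, params)
        self.pool[computer].append(conn)
        callback(conn)
        return True


mgr = ConnectionManager(max_per_computer=1)
got = []
mgr.request("srv1", ("Kerberos", "alice"), got.append)
mgr.request("srv1", ("Kerberos", "bob"), got.append)  # limit reached, so it is queued
print(len(got), len(mgr.queues["srv1"]))              # 1 1
mgr.release(got[0])                                   # alice's connection is recycled for bob
print(got[1].params, got[0].open)                      # ('Kerberos', 'bob') False
```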

4.2 Closing Connections Not in Use

A timer in the Connection Manager periodically iterates through all the connections and checks whether each one is busy or free. If a connection is free, it is flagged. If it is already flagged (which means it has been free since the last check), it is closed. An open connection not only consumes resources on the local computer; it also keeps an active but unused process on the remote computer. Closing idle connections ensures that a process does not stay open on the remote computer unless it is necessary.
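The two-pass flag-then-close sweep can be sketched in Python. `PooledConnection` and `sweep` are hypothetical. One detail is an assumption not stated in the post: the flag is cleared when a connection is seen busy, so a connection must be free on two consecutive ticks before it is closed.

```python
from dataclasses import dataclass


@dataclass
class PooledConnection:
    name: str
    busy: bool = False
    flagged: bool = False
    open: bool = True


def sweep(connections):
    """One timer tick: flag free connections and close those already flagged."""
    for conn in connections:
        if not conn.open:
            continue
        if conn.busy:
            conn.flagged = False   # assumption: in use again, so reset the flag
        elif conn.flagged:
            conn.open = False      # free since the last tick, so close it
        else:
            conn.flagged = True    # free now; close on the next tick if still free


conns = [PooledConnection("a"), PooledConnection("b", busy=True)]
sweep(conns)
print([c.open for c in conns])  # [True, True] ("a" is only flagged)
sweep(conns)
print([c.open for c in conns])  # [False, True]
```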

4.3 Disconnect/Reconnect Operations

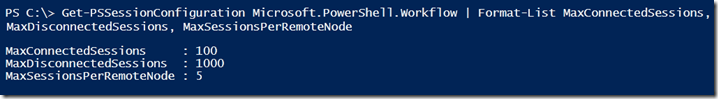

When the number of outbound connections grows, the Connection Manager starts to disconnect connections. It then reconnects to the remote computers a few at a time to obtain the data. This ensures that PowerShell Workflow can connect to a large set of machines, start commands, and then obtain data in a controlled manner. There is also a limit on the total number of disconnected sessions that the Connection Manager will maintain.

MaxSessionsPerRemoteNode is the maximum number of connections that will be made to each remote computer.
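The "a few at a time" reconnection can be approximated in Python with a bounded thread pool. This is an assumption-based analogy: `collect_results` is hypothetical, `fetch` stands in for reconnecting, receiving data, and disconnecting again, and `max_connected` caps how many computers are reconnected concurrently.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor


def collect_results(disconnected_computers, max_connected, fetch):
    """Reconnect to at most max_connected computers at once and gather their data."""
    with ThreadPoolExecutor(max_workers=max_connected) as pool:
        return dict(zip(disconnected_computers, pool.map(fetch, disconnected_computers)))


active = 0
peak = 0
lock = threading.Lock()


def fake_fetch(computer):
    """Pretend to reconnect, receive data and disconnect, tracking concurrency."""
    global active, peak
    with lock:
        active += 1
        peak = max(peak, active)
    time.sleep(0.01)
    with lock:
        active -= 1
    return f"data from {computer}"


results = collect_results([f"srv{i}" for i in range(10)], max_connected=3, fetch=fake_fetch)
print(len(results), peak <= 3)  # 10 True
```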

4.4 Throttling

The Connection Manager throttles all operations (open, close, connect and disconnect) using a single queue. This ensures that no more than a specified maximum number of network operations are in progress at any time. The throttling works similarly to the throttling in PowerShell remoting.
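A single queue shared by all four kinds of network operation can be sketched as follows. `NetworkOperationThrottle` and its methods are hypothetical, and operations are assumed to start in arrival order.

```python
from collections import deque


class NetworkOperationThrottle:
    """Hypothetical single queue for open/close/connect/disconnect operations."""

    def __init__(self, throttle_limit):
        self.throttle_limit = throttle_limit
        self.in_progress = 0
        self.queue = deque()
        self.started = []

    def enqueue(self, kind, computer):
        self.queue.append((kind, computer))
        self._pump()

    def operation_completed(self):
        self.in_progress -= 1
        self._pump()

    def _pump(self):
        # Start queued operations in order until the limit is reached.
        while self.queue and self.in_progress < self.throttle_limit:
            self.started.append(self.queue.popleft())
            self.in_progress += 1


t = NetworkOperationThrottle(throttle_limit=2)
for op in [("open", "srv1"), ("connect", "srv2"), ("disconnect", "srv3")]:
    t.enqueue(*op)
print(t.in_progress, len(t.queue))  # 2 1
t.operation_completed()
print(t.started[-1])                # ('disconnect', 'srv3')
```

Using one queue for every operation type means that a burst of disconnects cannot bypass the limit that applies to opens, for example.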

5. Activity Controller

Whenever an activity is considered unreliable (like a script that was grabbed from a blog post), the workflow author or the administrator might want to run the activity in a separate process. This will ensure that, in case there is a crash, the whole session is not brought down; only the activity fails. The Activity Controller maintains a pool of PowerShell processes and is responsible for executing activities out of process.

5.1 Executing Activities Out of Process

The Activity Controller maintains an unbounded queue of incoming requests. A request typically contains the command to execute and its input, the output buffer, the variables that need to be set, and the modules that need to be made available for the command to execute.

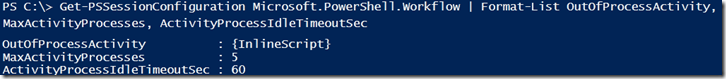

The activities that will be run out of process, the number of processes that the Activity Controller will create, and the amount of time a process can stay idle are determined by the properties of the session configuration.

A servicing thread in the Activity Controller picks requests from the queue, creates a new PowerShell process if required, creates a new (out-of-process) runspace for every command execution, prepares the runspace, and finally executes the command. Once the command completes, the Activity Controller calls back into the activity and workflow execution resumes.

If the process crashes during execution of the command, the activity fails and the workflow handles this failure.
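The isolation property can be demonstrated in Python by running a command in a child interpreter. This is a simplified analogy, not the Activity Controller. The hypothetical `run_out_of_process` starts a fresh process per request instead of keeping a pool of worker processes, but it shows the key point: a crash in the worker turns into a failed result, and the host keeps running.

```python
import subprocess
import sys


def run_out_of_process(script, timeout=30):
    """Run a command in a separate interpreter so a crash cannot take down the host.

    Returns ("Completed", output) or ("Failed", reason).
    """
    try:
        proc = subprocess.run(
            [sys.executable, "-c", script],
            capture_output=True, text=True, timeout=timeout,
        )
    except subprocess.TimeoutExpired:
        return ("Failed", "timed out")
    if proc.returncode != 0:
        # The worker died or errored: only this "activity" fails.
        return ("Failed", f"exit code {proc.returncode}")
    return ("Completed", proc.stdout.strip())


print(run_out_of_process("print(6 * 7)"))            # ('Completed', '42')
print(run_out_of_process("import os; os._exit(3)"))  # ('Failed', 'exit code 3')
print("host process still running")
```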

6. Persistence

Persistence is the operation, performed during workflow execution, that saves information about the workflow to a store. Windows PowerShell Workflow uses a file-based store. The persisted information consists of the workflow definition, workflow parameters, workflow job state, internal state information, input and output, metadata associated with the workflow, and so on.

Every workflow has a -PSPersist common parameter that has a tri-state value:

1. Not specified – persist at the beginning and end of a workflow

2. $true – persist after execution of every activity

3. $false – do not persist at all
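The mapping from the tri-state -PSPersist value to persistence points can be sketched in a few lines of Python, with None standing for "not specified". `persistence_points` is hypothetical, and checkpoints added inside the workflow are deliberately left out of this sketch.

```python
def persistence_points(activities, ps_persist=None):
    """Return where state is saved for a given -PSPersist value.

    None  -> beginning and end of the workflow (parameter not specified)
    True  -> after every activity
    False -> never
    """
    if ps_persist is False:
        return []
    if ps_persist is True:
        return [f"after {a}" for a in activities]
    return ["begin", "end"]


print(persistence_points(["Get-Process", "Stop-Process"], ps_persist=True))
# ['after Get-Process', 'after Stop-Process']
```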

Persistence can also be managed within the workflow itself. If the workflow has a CheckPoint-Workflow or a Suspend activity specified at any point, persistence happens at those points as well.

When a workflow is resumed, it always resumes from the last persisted point.

6.1 Begin Persistence

All the information, including the workflow definition and the input, is persisted when the workflow job is created, because a workflow job may not start running as soon as it is created due to throttling. If the process restarts (for whatever reason) before the workflow starts, the job can simply be resumed, since all the parameters and input are available. This helps when a large number of jobs are being executed, which is typical when managing a large number of machines.
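Begin persistence can be sketched as writing the job's definition and input to a file as soon as the job is created. `FileJobStore`, the one-JSON-file-per-job layout, and the field names are hypothetical; PowerShell Workflow's actual store format is not described here. A second store instance plays the role of the restarted process.

```python
import json
import os
import tempfile


class FileJobStore:
    """Hypothetical file-based store: one JSON file per workflow job."""

    def __init__(self, directory):
        self.directory = directory

    def _path(self, job_id):
        return os.path.join(self.directory, f"{job_id}.json")

    def save(self, job_id, state):
        # Write to a temporary file, then rename, so a crash never leaves a partial file.
        tmp = self._path(job_id) + ".tmp"
        with open(tmp, "w") as f:
            json.dump(state, f)
        os.replace(tmp, self._path(job_id))

    def load(self, job_id):
        with open(self._path(job_id)) as f:
            return json.load(f)


store_dir = tempfile.mkdtemp()
# Begin persistence: save definition, parameters and input when the job is created.
FileJobStore(store_dir).save("job42", {
    "definition": "<Activity .../>",
    "parameters": {"PSComputerName": ["srv1", "srv2"]},
    "state": "NotStarted",
})
# After a process restart, a fresh store instance can recover the queued job.
recovered = FileJobStore(store_dir).load("job42")
print(recovered["state"], recovered["parameters"]["PSComputerName"])  # NotStarted ['srv1', 'srv2']
```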

6.2 End Persistence

End persistence is persistence that happens when the workflow reaches a terminal state (Completed, Stopped or Failed). The idea is that a user will be able to retrieve output and state of the workflow after a process restart.

Even if a user sets -PSPersist to $false, we check whether the output from the workflow and other information can be persisted. If so, they are persisted, because doing so frees up memory. This helps Windows PowerShell Workflow scale to a large number of jobs. (This is one of our scalability improvements; as a result, a very large number of jobs can be executed simultaneously.)
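The memory-saving effect of end persistence can be sketched as a job that moves its output to disk when it reaches a terminal state. `OffloadingJob` is hypothetical, and unlike the behavior described above, it always offloads rather than first checking whether offloading is possible.

```python
import json
import os
import tempfile


class OffloadingJob:
    """Hypothetical job that moves its output to disk once it reaches a terminal state."""

    TERMINAL = {"Completed", "Stopped", "Failed"}

    def __init__(self, job_id, directory):
        self.path = os.path.join(directory, f"{job_id}-output.json")
        self.output = []        # held in memory while the job runs
        self.state = "Running"

    def write_output(self, item):
        self.output.append(item)

    def set_state(self, state):
        self.state = state
        if state in self.TERMINAL:
            with open(self.path, "w") as f:
                json.dump({"state": state, "output": self.output}, f)
            self.output = None  # drop the in-memory copy to free memory

    def receive(self):
        if self.output is not None:
            return list(self.output)
        with open(self.path) as f:
            return json.load(f)["output"]


job = OffloadingJob("job7", tempfile.mkdtemp())
job.write_output("BIOS version 1.2")
job.set_state("Completed")
print(job.output, job.receive())  # None ['BIOS version 1.2']
```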

6.3 Persistence at Persistence Point

When a workflow contains a CheckPoint-Workflow or a Suspend activity, persistence takes place at that point. This allows the workflow author to specify logical points in the execution sequence from which it makes sense to resume the workflow.
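Resuming from the last persisted point can be sketched in Python. `run_workflow` is hypothetical, steps are plain strings, a dictionary stands in for the persistence store, and `fail_at` simulates a crash before a given step.

```python
def run_workflow(steps, checkpoints, store, fail_at=None):
    """Run steps in order, saving the next step index after each checkpoint step.

    `store` is a dict standing in for the persistence store; `fail_at`
    simulates a crash just before that step index runs.
    """
    start = store.get("next_step", 0)  # resume from the last persisted point
    executed = []
    for i in range(start, len(steps)):
        if i == fail_at:
            raise RuntimeError(f"crashed before {steps[i]}")
        executed.append(steps[i])
        if steps[i] in checkpoints:
            store["next_step"] = i + 1  # persist: resume after this step
    return executed


steps = ["Stop-Service", "Checkpoint-Workflow", "Copy-Item", "Start-Service"]
store = {}
try:
    run_workflow(steps, {"Checkpoint-Workflow"}, store, fail_at=3)
except RuntimeError:
    pass
resumed = run_workflow(steps, {"Checkpoint-Workflow"}, store)
print(resumed)  # ['Copy-Item', 'Start-Service']
```

Copy-Item runs again after the crash because it came after the last checkpoint. A resumed workflow repeats work done since its last persisted point, which is why checkpoints belong at points where resuming makes sense.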

7. Conclusion

This blog post concludes the two-part series on the high-level architecture of Windows PowerShell Workflow.

Narayanan Lakshmanan [MSFT] Software Design Engineer – Windows PowerShell Microsoft Corporation
