Leveraging the Copilot stack with Azure OpenAI and the Teams AI Library

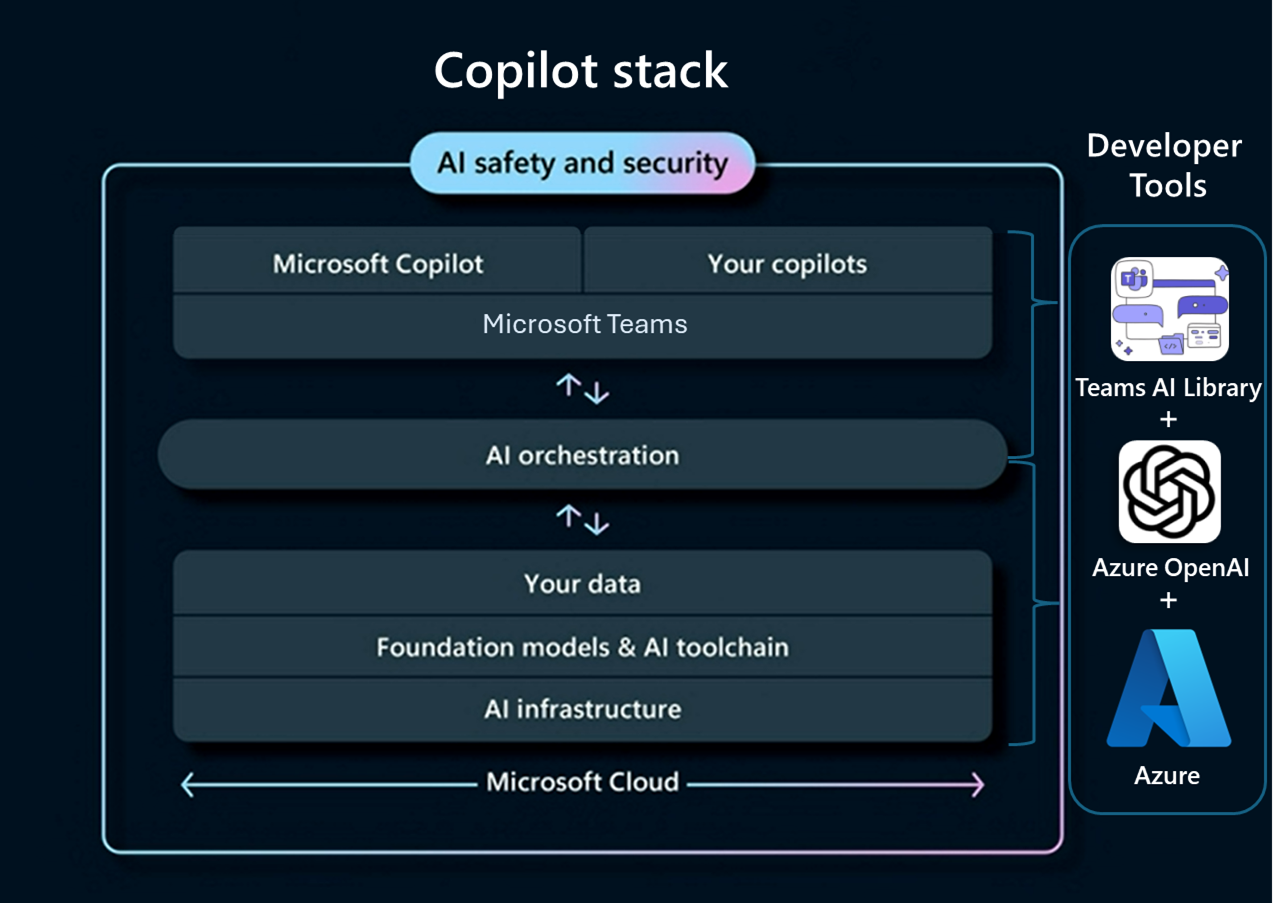

Microsoft presented the Copilot stack as a visual guide designed to clarify and streamline the creation of your custom copilot, which offers intelligent natural language-driven experiences powered by large language models. The Copilot stack empowers you to build more ambitious products by leveraging advanced technology at each layer of the stack. Just as you rely on Azure for your cloud computing infrastructure instead of building your own, using existing foundational models and other technologies to power your generative AI products can save millions of dollars and years of development time. With the right set of pro-developer tools, you can effectively leverage the entire Copilot stack, expediting your custom copilot’s development.

For Teams-centric custom copilots, the Teams Platform can guide you on:

- Benefits of building a custom copilot and bringing it to Teams

- Choosing your copilot development path: Teams AI Library and Azure OpenAI or Copilot Studio

- Leveraging the Copilot stack and tools to build the best Teams-centric custom copilots

- Four steps to build your custom copilot on Teams

Benefits of building a custom copilot and bringing it to Teams

Generative AI has become the center of focus for CEOs such as Microsoft’s Satya Nadella because of its ability to vastly improve users’ productivity and creativity. It can assist your app’s users with a range of complex tasks, from automating workflows to providing real-time intelligence, all while interacting with users in natural language. The impact can be substantial, with the CEO of GitHub, Thomas Dohmke, noting that “46% – almost half the code on average is written by Copilot in those files where it’s enabled. That alone is mind boggling.” Building your app’s own copilot can give users significant productivity enhancements, transforming how they interact with your app.

As conversational models simplify and shift your app’s user interface to its most basic form, natural language, a stronger emphasis is needed on the conversational experience of your app. This is where Teams can help.

As a collaboration platform with 320+ million users, Teams is at the center of work and communication via natural language making it an ideal match with conversational AI apps. With deep integration of your custom copilot into Teams, new collaboration scenarios are possible. Your AI assistant in Teams can have natural conversations with users wherever they collaborate, in chats, channels, and meetings. It can give relevant responses without needing explicit registered commands through advanced reasoning and by remembering context across Teams messages. With access to the group roster, your app can seamlessly coordinate with others across the world. Develop your own copilot and use it on Teams to update members of a global organization in any language on the latest product strategy, autonomously coordinate with each teammate on new project deliverables, and more.

The opportunities for this new wave of AI are vast. Let’s walk through which development path is right for you to start building a powerful and tailored copilot that your users will love.

Choosing your copilot development path: Pro-code vs Low-code

Depending on the goals of your app and your development preferences there are several tools available for building your custom copilot. Microsoft offers two primary development paths:

- Azure OpenAI and Teams AI Library: For pro-code developers focused on building copilots on Teams, Azure OpenAI and the Teams AI Library are a comprehensive set of tools that streamline your copilot’s development and offer unique Teams-based capabilities. This option is ideal for developers who require the flexibility and power of professional coding environments, and who are looking to extend their app model to other Teams elements such as message extensions, tabs, meeting apps, link unfurling, calling bots, and personal apps.

- Copilot Studio: For creators of all skill levels, Copilot Studio offers an end-to-end natural language or graphical development environment for creating your copilots and deploying them across multiple channels. It’s the primary option for less technical users, and pro-code developers can still utilize advanced functionality within the platform and by extending their copilot using Microsoft Bot Framework skills.

Both options will help you create impactful custom copilots for your organization. If Copilot Studio is the right option for you, see here to learn more about Copilot Studio and try it for free.

As this article is focused on helping developers build the best collaborative copilots on Teams, let’s dive into the pro-code path of using Azure OpenAI and the Teams AI Library to build powerful copilots quickly on Teams.

Leveraging the Copilot stack to create Teams-centric custom copilots

The Copilot stack can be broken into 3 tiers: the back end, an AI orchestration tier, and the front end. Each tier of the Copilot stack has layers within it that are recommended in building your own copilot. Azure OpenAI and the Teams AI Library help you build your copilot by properly utilizing every layer of the Copilot stack.

The back end

The back-end tier comprises the AI infrastructure that hosts the large language model (LLM) powering your AI app, along with your data, which can be used to customize your model and make its responses relevant to your users. This part of the Copilot stack is where Azure OpenAI Service and Azure play a major role.

Azure OpenAI Service provides access to OpenAI’s powerful GPT family of language models, all backed by Azure’s leading AI infrastructure. You can connect a base model to your data on Azure and have it supported by Azure’s built-in enterprise-level security and compliance, in addition to dedicated AI features such as responsible AI content filtering. With a few clicks, your models are deployed globally and at scale on Azure’s infrastructure. Azure offers supercomputing performance with Azure’s N-series virtual machines and thousands of NVIDIA’s H100 GPUs to handle the heavy AI computational load with reliability at any scale.

While Teams supports the model platform of your choice, choosing Azure OpenAI and Azure for your Copilot stack’s back end provides a solid foundation capable of supporting your AI’s growth responsibly. This allows you to focus on what truly matters: building the best AI app for your users’ needs.

AI orchestration

Foundational models are full of unrestrained capability. AI orchestration is what manages the multiple different AI components and services within your custom copilot in a coordinated way to accomplish complicated tasks. For instance, instead of just summarizing a client’s meeting, your AI identifies, creates, and completes multiple follow-up tasks from the meeting on command.

For creating a focused custom copilot on Teams, Azure OpenAI and Teams AI Library provide a comprehensive set of orchestration capabilities. Several capabilities are automatically built into your AI app when using the Teams AI Library such as conversational logic and an advanced planning engine to identify a user’s intent and map it to an action you implement.

Other areas such as prompt engineering are simplified to give your AI app instructions on how to interact with users and even provide it with an engaging personality. With augmentation, the Teams AI Library enables your AI to perform multi-step actions independently allowing you to automate complex tasks reliably. Optimizing these areas depends on the needs of your app and experimentation can help figure out what works best for your users on Teams. Azure OpenAI’s Playground quickly tests the results of different prompts, and this guide can help you learn about prompt engineering techniques for chat completion. The Teams AI Library includes several different samples to showcase its capabilities. Try them out and see what works best for you.
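To make the idea of mapping a user's intent to actions you implement more concrete, here is a minimal sketch in plain Python. It does not use the Teams AI Library's API: the `ACTIONS` registry, the `action` decorator, `run_plan`, and the shape of the plan are all hypothetical names chosen for illustration. In a real app the model would produce the plan; here it is hard-coded.

```python
from typing import Callable, Dict, List

# Hypothetical registry mapping action names to handlers.
# This is an illustration, not the Teams AI Library API.
ACTIONS: Dict[str, Callable[..., str]] = {}


def action(name: str):
    """Register a handler function under an action name."""
    def register(func: Callable[..., str]) -> Callable[..., str]:
        ACTIONS[name] = func
        return func
    return register


@action("create_task")
def create_task(title: str) -> str:
    return f"Created task: {title}"


@action("send_summary")
def send_summary(recipient: str) -> str:
    return f"Sent summary to {recipient}"


def run_plan(plan: List[dict]) -> List[str]:
    """Run each step in order; unknown actions are reported, not raised."""
    results = []
    for step in plan:
        handler = ACTIONS.get(step["action"])
        if handler is None:
            results.append(f"Unknown action: {step['action']}")
            continue
        results.append(handler(**step.get("parameters", {})))
    return results


# Hard-coded stand-in for a plan that a model might return.
plan = [
    {"action": "create_task", "parameters": {"title": "Send contract draft"}},
    {"action": "send_summary", "parameters": {"recipient": "client"}},
]
results = run_plan(plan)
```

Keeping the handlers in a registry means the set of things the AI is allowed to do stays explicit and reviewable, which is one reasonable way to think about multi-step actions regardless of the library you use.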

The front end

The front end comprises the user experience (UX) through which the user engages with your AI app conversationally. This can be a chat interface in a bot, or it can be a pane in the Microsoft 365 apps like Microsoft’s own Copilot, which your app can be extended to by building a copilot plugin.

For direct engagement with users through your own AI, the Teams AI Library serves as a Teams-centric interface to large language models and user intent engines, making it easy to integrate your app into Teams with its comprehensive development toolkit. Using the Teams component scaffolding with the prebuilt templates available, you can concentrate on adding your business logic to the scaffold, streamlining the process of adding special Teams-based abilities such as message extensions, Adaptive Cards, and link unfurling. Additional features such as conversational session history enable your AI to remember context across messages and give relevant responses for a dynamic conversation.
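As a rough illustration of what conversational session history involves, the sketch below keeps the most recent messages and prepends a system instruction before each model call. It is written in plain Python and is not the Teams AI Library's implementation; the `ConversationHistory` class, its methods, and the `max_messages` limit are hypothetical names and values chosen for this example.

```python
from collections import deque
from typing import Deque, Dict, List


class ConversationHistory:
    """Keeps the most recent chat messages for one conversation (illustrative only)."""

    def __init__(self, system_prompt: str, max_messages: int = 6) -> None:
        self.system_prompt = system_prompt
        # A bounded deque silently drops the oldest message once it is full.
        self._messages: Deque[Dict[str, str]] = deque(maxlen=max_messages)

    def add(self, role: str, content: str) -> None:
        self._messages.append({"role": role, "content": content})

    def to_messages(self) -> List[Dict[str, str]]:
        """Return the system prompt followed by the retained messages."""
        return [{"role": "system", "content": self.system_prompt}, *self._messages]


history = ConversationHistory("You are a friendly project assistant.", max_messages=2)
history.add("user", "What is due this week?")
history.add("assistant", "The design review is due Friday.")
history.add("user", "Who owns it?")
messages = history.to_messages()
```

With `max_messages=2`, the first user message has already been dropped by the time `messages` is built, which shows the trade-off: a smaller window costs fewer tokens per call but forgets earlier context sooner.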

In minutes, the Teams AI Library transforms your model into a full conversational experience that fits seamlessly into the flow of work on Teams. Group chats, meetings, and channels are enhanced, with your AI easily called upon to perform complex intellectual tasks. The entire Teams user experience is ready for your AI app.

Steps to build your custom copilot for Teams

To get started, let’s walk through the main steps needed to build your copilot and deploy it to Teams, utilizing the entire Copilot stack with Azure OpenAI and the Teams AI Library.

Step 1 – Choose your Model

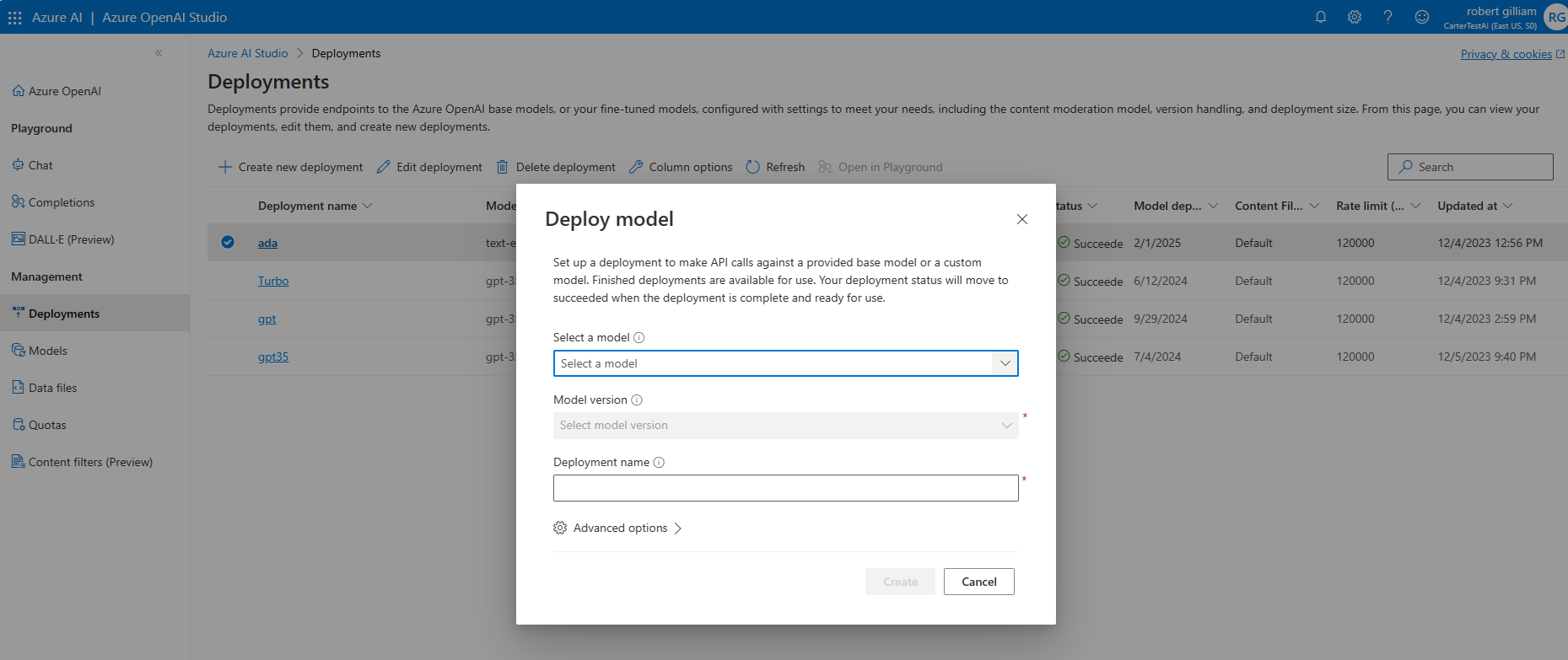

Azure OpenAI Deployments

After creating an Azure account (create one for free) and signing up for access to Azure OpenAI, you can access the Azure OpenAI Service through REST APIs, the Python SDK, or the web-based interface in the Azure OpenAI Studio.

In the Azure OpenAI Studio under Deployments, you can “Create new deployment” and select from the different OpenAI models available with more information about each model here. A great model to start with for creating your app’s own copilot on Teams is gpt-35-turbo-16k, which is a highly capable and cost-effective chat-based model in the GPT family.

Once you have chosen your model, you give it a “Deployment name,” which you will need later when you specify the model in your app’s code. Your model has a content filter by default, but you can choose a customized content filter under advanced options. When your model deployment status is marked as “succeeded”, it is ready for use.

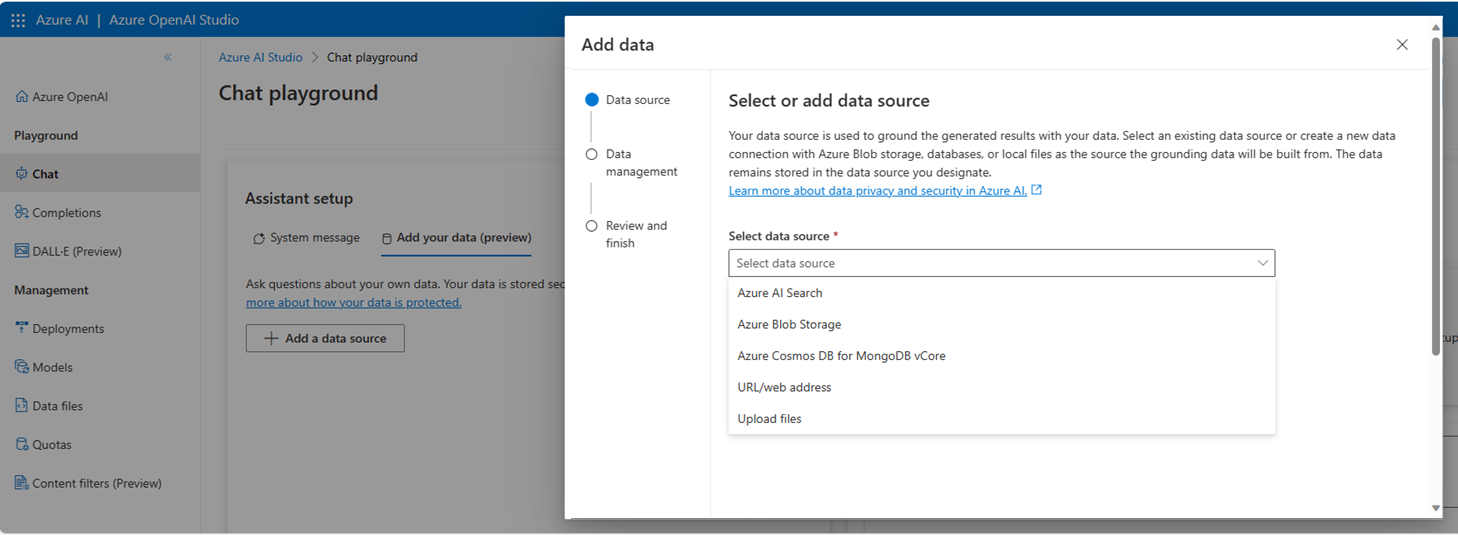

Step 2 – Add your data (optional)

After selecting your underlying foundation model and platform, a powerful way to customize your model and add contextual relevancy is by linking it to your data. Azure OpenAI on your data can connect your data to supported chat models such as GPT-35-Turbo and GPT-4 without needing to re-train or fine-tune the models, and the Teams AI Library allows for several open-source methods to connect your data.

You can access Azure OpenAI on your data using a REST API, multiple programming languages, or Azure OpenAI Studio to create a solution that connects to your data enabling an enhanced chat experience.

Multiple data sources can be connected including:

- Azure Cognitive Search index: Connect your data to an Azure Cognitive Search index, enabling seamless integration with OpenAI models.

- Azure Blob storage container: Connect your data to an Azure Blob storage container and easily access it for analysis and conversation using Azure OpenAI Service.

- Local files: Connect to files in your Azure AI portal, providing flexibility and convenience in connecting your data. We ingest and chunk the data into your Azure Cognitive Search index. File formats such as txt, md, html, Word files, PowerPoint, and PDF can be utilized for analysis and conversation.

- URL/web address

- Open Source Methods: Index and connect your database to your model using open source options such as Vectra.

Please note that Azure OpenAI on your data is currently in preview and additional integration guidance with the Teams AI Library is coming soon.

Refer to the Teams ChefBot sample as an illustration for utilizing Vectra as an open-source method to create a local vector database with your documents and enable your model to retrieve relevant content for its answers.
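The retrieval idea behind a local vector database can be sketched in a few lines of plain Python. This is not Vectra's API and not the ChefBot sample's code: `embed`, `cosine`, and `retrieve` are hypothetical helpers, and `embed` uses simple word counts as a stand-in for the embedding model a real app would call.

```python
import math
import re
from collections import Counter
from typing import List


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[term] * b[term] for term in a if term in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: List[str], top_k: int = 1) -> List[str]:
    """Return the top_k documents most similar to the query."""
    query_vector = embed(query)
    ranked = sorted(
        documents, key=lambda doc: cosine(query_vector, embed(doc)), reverse=True
    )
    return ranked[:top_k]


documents = [
    "Preheat the oven to 220 degrees before baking the pizza.",
    "Whisk the eggs and sugar until the mixture is pale.",
    "Simmer the tomato sauce for twenty minutes.",
]
best = retrieve("How hot should the oven be for pizza?", documents)
```

The retrieved text would then be added to the prompt so the model can ground its answer in your documents. A production setup would replace the word counts with real embeddings and store the vectors in an index rather than recomputing them per query.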

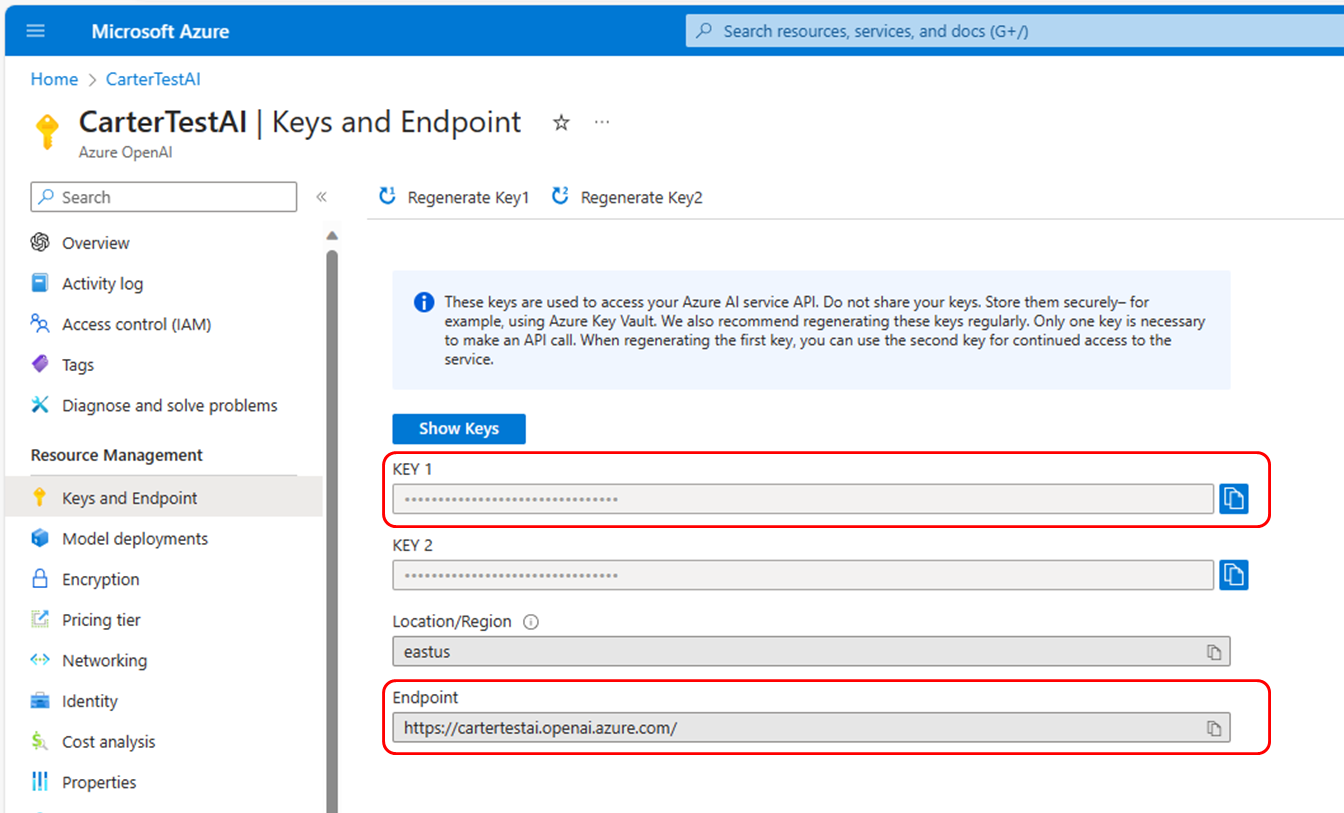

Step 3 – Get your Azure OpenAI Endpoint and Key

When you are ready, go to “Keys and Endpoint” in the Azure OpenAI section of Azure to find your Azure OpenAI Endpoint and Key, which you need to make calls to the service.
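As a sketch of how the endpoint, key, and deployment name fit together, the Python below builds a chat completions request for the Azure OpenAI REST API using only the standard library. The resource name, deployment name, and key shown are placeholders, the helper names `build_chat_request` and `send_chat_request` are hypothetical, and the `API_VERSION` value is an assumption that you should check against the current Azure OpenAI documentation.

```python
import json
import urllib.request
from typing import Dict, List, Tuple

# Assumption: verify the API version against the current Azure OpenAI docs.
API_VERSION = "2024-02-01"


def build_chat_request(
    endpoint: str,
    deployment: str,
    api_key: str,
    messages: List[Dict[str, str]],
    api_version: str = API_VERSION,
) -> Tuple[str, Dict[str, str], bytes]:
    """Build the URL, headers, and JSON body for a chat completions call."""
    url = (
        f"{endpoint.rstrip('/')}/openai/deployments/{deployment}"
        f"/chat/completions?api-version={api_version}"
    )
    headers = {"Content-Type": "application/json", "api-key": api_key}
    body = json.dumps({"messages": messages}).encode("utf-8")
    return url, headers, body


def send_chat_request(url: str, headers: Dict[str, str], body: bytes) -> dict:
    """Send the request and return the parsed JSON response (needs network access)."""
    request = urllib.request.Request(url, data=body, headers=headers, method="POST")
    with urllib.request.urlopen(request) as response:
        return json.load(response)


# Placeholder values; substitute your own endpoint, deployment name, and key.
url, headers, body = build_chat_request(
    endpoint="https://my-resource.openai.azure.com/",
    deployment="my-deployment",
    api_key="fake-key",
    messages=[{"role": "user", "content": "Hi"}],
)
```

Note that the URL contains the deployment name you chose in Step 1, not the underlying model name. In practice, read the key from an environment variable or a secret store rather than hard-coding it.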

Step 4 – Bring your AI to Teams and the Microsoft 365 Ecosystem

Teams AI Library with Teams Toolkit Extension Building Experience

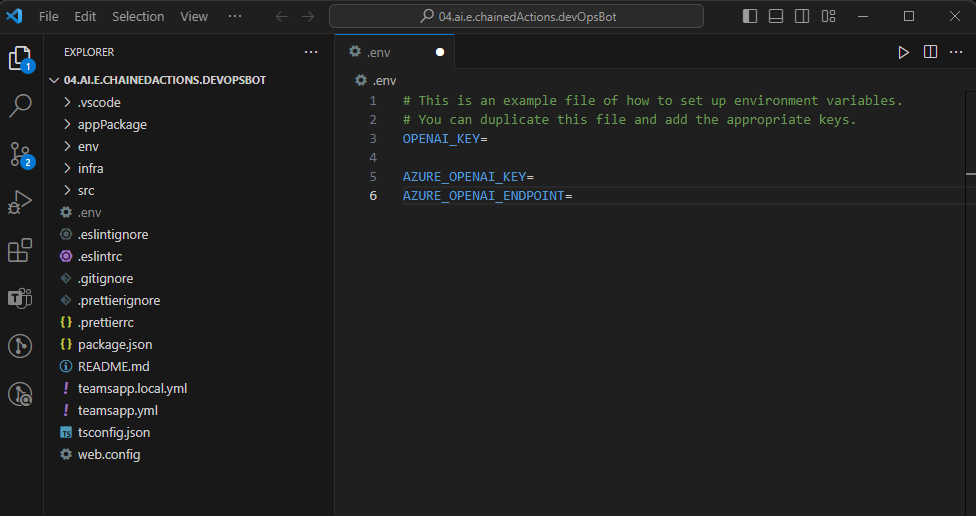

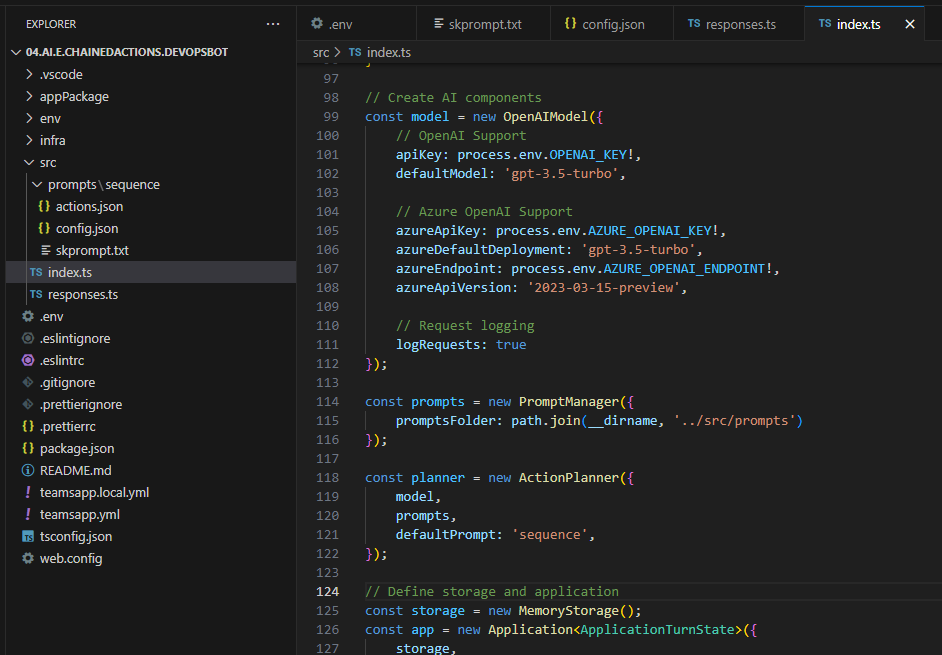

To bring your app to Teams, follow the Build your first app using Teams AI library guide where you can easily create an AI app using the Teams Toolkit Extension for Visual Studio Code or using your preferred development environment. To call your LLM, add in your Azure OpenAI Key and Endpoint here. Alternatively, the Teams AI Library works well with other model platforms such as directly with OpenAI by adding in your OpenAI Key.

You can customize your AI assistant’s responses by adding instructions in the skprompt file giving your app focus and even a personality, if desired.

Then, the model name you chose when deploying it from Azure OpenAI can be updated in the Index and Config files, allowing you to launch your AI app to Teams locally or via Azure.

Congratulations! You’ve created your own copilot on Teams. There are several ways to further customize your copilot. Be sure to explore many of the samples and other resources available to build the best copilot for your users!

Additional resources

Ready to harness the power of the Teams AI library and create your app’s copilot for Teams?

Get started here:

Follow us on X (Twitter) / @Microsoft365Dev and subscribe to our YouTube channel to stay up to date on the latest developer news and announcements.
