As 3D printing becomes an integral part of the manufacturing industries, there are new challenges that must be solved to promote mass adoption. Primarily, how do you securely transfer accredited schematics and verify data integrity once it’s been delivered? In other words, how can you ensure the schematics were not tampered with in the midst of the transfer process? This is of paramount importance in mission critical systems.

MOOG, a multi-billion-dollar company headquartered in Buffalo, New York, sought to develop such a system. MOOG identified a need in the manufacturing industry to verify a part’s authenticity before it is installed in a mission critical system. The very real threat of compromised blueprints causing mechanical failures is a problem the industry faces regularly.

At the time MOOG started this innovative journey, no reference architectures or open-source technologies existed that solved these complex issues in their entirety. Microsoft partnered with MOOG to help solve these real-world problems.

MOOG is traditionally known for manufacturing motion and control systems for the aerospace, defense, industrial, and medical industries, so this project would require research as well as development. To produce a usable proof of concept quickly, MOOG chose to collaborate with Microsoft’s CSE team, which specializes in such novel engagements.

Challenges and Objectives

At the outset, the biggest obstacle for our provenance solution was designing an immutable database to store the order details at every step of the transaction. The solution also needed to provide a mechanism to verify the integrity of digital blueprints on the buyer’s side.
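On the buyer’s side, one simple way to verify integrity is to recompute a digest of the delivered file and compare it with the hash recorded on the chain. The following is a minimal sketch under two assumptions: the digest is SHA-256 (the post does not say which hash function was used), and the name `verify_schematic` is purely illustrative.

```python
import hashlib
import hmac
from pathlib import Path


def file_sha256(path: Path, chunk_size: int = 1 << 16) -> str:
    """Return the hex SHA-256 digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_schematic(path: Path, expected_hash: str) -> bool:
    """Hypothetical check: does the delivered file match the on-chain hash?

    Assumes the expected hash is a hex-encoded SHA-256 digest.
    """
    actual = file_sha256(path)
    # compare_digest compares in constant time, avoiding timing side channels
    return hmac.compare_digest(actual, expected_hash.lower())
```

If a single byte of the schematic changes in transit, the digest changes and the check fails.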

The problem was a prime candidate for a blockchain solution. Once a block is added to a blockchain, it is effectively immutable: each new block records the hash of the block before it, so altering an earlier block would change its hash and break every link that follows. Furthermore, the cryptographic algorithms used in a blockchain can verify the integrity of each block’s data before it is added to the chain.
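The tamper evidence described above comes from chaining hashes. The toy sketch below is illustrative only: it does not use Ethereum’s real block format or hash function (Ethereum hashes RLP-encoded headers with Keccak-256). It simply shows why editing one block invalidates every later link.

```python
import hashlib
import json
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Block:
    index: int
    data: str
    prev_hash: str

    def hash(self) -> str:
        # Hash a canonical serialization that includes the previous block's hash.
        payload = json.dumps(
            {"index": self.index, "data": self.data, "prev_hash": self.prev_hash},
            sort_keys=True,
        )
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def build_chain(records: List[str]) -> List[Block]:
    chain: List[Block] = []
    prev = "0" * 64  # all-zero placeholder parent for the first block
    for i, data in enumerate(records):
        block = Block(i, data, prev)
        chain.append(block)
        prev = block.hash()
    return chain


def is_valid(chain: List[Block]) -> bool:
    """Every block must reference the hash of the block before it."""
    return all(curr.prev_hash == prev.hash() for prev, curr in zip(chain, chain[1:]))
```

Replacing the middle block’s data leaves its own `prev_hash` intact. However, the next block still points at the old hash, so `is_valid` returns `False`.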

However, using blockchain came with a major obstacle: we immediately encountered a scarcity of proven design patterns. We knew blockchain was the best approach for verifying the authenticity of manufacturing blueprints, given its established reputation in the provenance space, but designing an architecture in which MOOG could implement their custom part-ordering logic was a challenge.

We set out to develop a solution that would allow a “seller” to list 3D parts available for one or more “buyers” to order. Once a part has been ordered, a buyer can request that the seller transfer the file to a location the buyer chooses. After printing the part, the buyer logs the details of the print so that the seller has a record of all prints.
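The seller/buyer workflow above is essentially a small state machine. Below is an in-memory Python sketch of it; the real logic lived in Solidity contracts. Several details are assumptions made for illustration: the class names, the state names, and the rule that a buyer cannot log more prints than the quantity ordered.

```python
from dataclasses import dataclass, field
from enum import Enum, auto
from typing import List, Optional, Set


class OrderState(Enum):
    ORDERED = auto()
    TRANSFER_REQUESTED = auto()
    TRANSFERRED = auto()


@dataclass
class Order:
    part_hash: str
    quantity: int
    state: OrderState = OrderState.ORDERED
    destination: Optional[str] = None
    print_log: List[str] = field(default_factory=list)

    def request_transfer(self, destination: str) -> None:
        # The buyer chooses where the schematic should be delivered.
        if self.state is not OrderState.ORDERED:
            raise ValueError("transfer already requested")
        self.destination = destination
        self.state = OrderState.TRANSFER_REQUESTED

    def mark_transferred(self) -> None:
        # Recorded once the seller has delivered the file.
        if self.state is not OrderState.TRANSFER_REQUESTED:
            raise ValueError("no pending transfer request")
        self.state = OrderState.TRANSFERRED

    def log_print(self, serial_number: str) -> None:
        # The buyer records each print so the seller has a full history.
        if self.state is not OrderState.TRANSFERRED:
            raise ValueError("schematic not yet transferred")
        if len(self.print_log) >= self.quantity:
            raise ValueError("all ordered parts already printed")
        self.print_log.append(serial_number)


@dataclass
class Catalog:
    part_hashes: Set[str] = field(default_factory=set)

    def list_part(self, part_hash: str) -> None:
        # The seller makes a part schematic available to order.
        self.part_hashes.add(part_hash)

    def order(self, part_hash: str, quantity: int) -> Order:
        if part_hash not in self.part_hashes:
            raise ValueError(f"part {part_hash!r} is not listed")
        if quantity < 1:
            raise ValueError("quantity must be positive")
        return Order(part_hash, quantity)
```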

Solution

Since we needed a blockchain to back the system, we chose Ethereum, which, importantly, supports user-defined Smart Contracts. We deployed an Ethereum Proof-of-Authority Consortium[1] on Azure to develop against, since it closely matched the production scenario. On our local machines and for testing, we often used Ganache,[2] a local Ethereum blockchain.

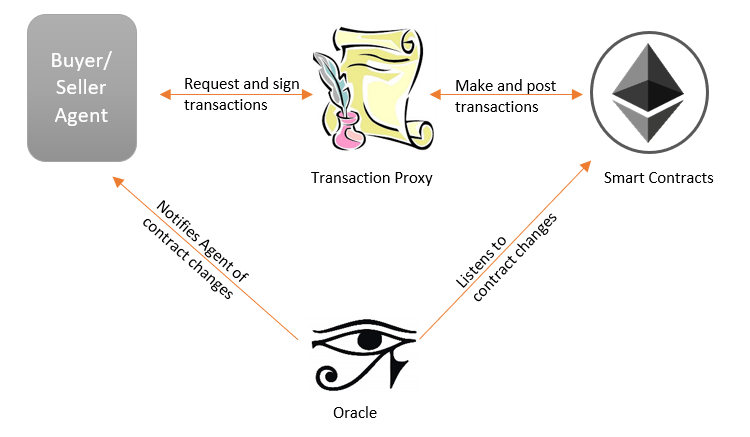

In the end our solution consisted of four key components:

- Ethereum Smart Contracts that acted as the core business logic for interacting with and modifying the state of the chain.

- A Transaction Proxy to form and submit smart contract transactions, abstracting away direct interaction with the chain.

- An Agent, which provides a user-friendly API for users to interact with the solution, as well as additional business logic.

- An Oracle to read the state of the blockchain and notify the Agent of events so that it may react in real-time.

A diagram of how these components interact is illustrated below:

Smart Contracts

We used the blockchain in much the same way a database might be used in a non-blockchain system: information such as “who is authorized to buy a part”, “who has ordered parts”, and “what serial number is associated with what part” is all stored on the blockchain. The mechanism that allows us to do this is Ethereum Smart Contracts,[3] which not only store the data on the blockchain but also provide the core business logic for mutating it. Thus, both the data for “how many parts has user X ordered” and the logic that allows “user X to order a part” live inside a Smart Contract on the blockchain.

Designing the Smart Contracts for the system provided a unique set of challenges. Given the number of restrictions and technical considerations that must be handled when developing Solidity smart contracts, and the fact that the Smart Contracts made up the core business logic of our system, we spent quite a bit of time designing and auditing our smart contracts for security.

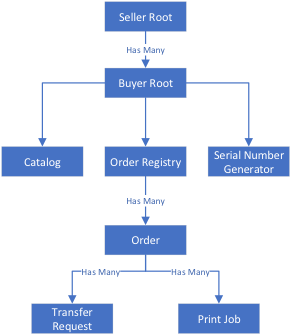

In developing the contracts, we decided to make one Smart Contract per “object” type to reduce the amount of code per contract, to give each contract a specific purpose, and to prevent future updates from requiring a full redeployment. Furthermore, we wanted each smart contract to leverage data stored in other contracts to reduce the amount of data stored per contract. We accomplished this by “linking” the contracts together in a tree-like hierarchy in which each contract is aware of its “parent contract.”
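To illustrate the parent-linking idea, here is a minimal Python model in which each contract keeps only its own data and walks up the tree for anything else. The `LinkedContract` class and its storage keys are hypothetical. In Solidity, each step up would be a call to the parent contract’s address rather than a Python attribute access.

```python
from typing import Any, Dict, Optional


class LinkedContract:
    """Hypothetical model of a contract that stores some data and points at its parent."""

    def __init__(self, parent: Optional["LinkedContract"] = None, **storage: Any) -> None:
        self.parent = parent
        self.storage: Dict[str, Any] = dict(storage)

    def lookup(self, key: str) -> Any:
        """Return `key` from this contract or from the nearest ancestor that stores it."""
        node: Optional[LinkedContract] = self
        while node is not None:
            if key in node.storage:
                return node.storage[key]
            node = node.parent  # in Solidity: a call to the parent contract
        raise KeyError(key)


# Illustrative tree: Buyer Root -> Order Registry -> Order -> Print Job
buyer_root = LinkedContract(buyer="0xBUYER")
order_registry = LinkedContract(parent=buyer_root)
order = LinkedContract(parent=order_registry, part_number="PN-42", quantity=3)
print_job = LinkedContract(parent=order, printer="printer-7")
```

Here the Print Job stores only its printer. It resolves `part_number` from its Order and `buyer` from the Buyer Root.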

We developed eight core contracts with the following purposes:

- Seller Root: Each seller has a root contract that contains a collection of the buyers (as Buyer Root contracts) who are registered to order and print 3D part schematics.

- Buyer Root: Each buyer has a root contract which acts as a central reference point for any contracts related to the system, such as the buyer’s Catalog, Order Registry, and Serial Number Generator.

- Catalog: Stores a list of 3D part schematic hashes available for the buyer to order. Ordering a part from the catalog creates an Order contract, which is registered in the Order Registry.

- Order Registry: Stores a list of all orders (as Order contracts) the buyer has placed, as well as providing other helpful business logic functionality relating to Orders.

- Serial Number Generator: Encapsulates business logic for generating a list of serial numbers to assign to an order.

- Order: Contains details relating to an order, such as the quantity, requests to transfer the schematics, and a print log. It also contains the functionality to initiate a schematic transfer by creating a Transfer Request, and to log a print by creating a Print Job.

- Transfer Request: Stores details and state of a request to transfer a part schematic from the seller to the buyer.

- Print Job: Stores the details and state of an attempted print job of the part schematic.

This approach worked well. It allowed us to unit test each contract independently, stubbing out any dependencies the contract required. It also minimized our storage footprint: common data, such as a part number, is stored once in an Order contract, and dependent contracts such as a Print Job reference upward in the tree to retrieve it when needed.
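The contracts themselves were written and tested in Solidity, so the following is only a Python illustration of the testing idea: a child contract can be tested with a stubbed parent. `PrintJob` and its `part_number` getter are hypothetical names, and the test uses `unittest.mock`.

```python
import unittest
from unittest.mock import Mock


class PrintJob:
    """Hypothetical Python model of a Print Job contract."""

    def __init__(self, order) -> None:
        self.order = order  # the parent Order "contract"
        self.status = "pending"

    def part_number(self) -> str:
        # The part number lives on the parent Order, not on the Print Job.
        return self.order.part_number()

    def complete(self) -> None:
        self.status = "complete"


class PrintJobTest(unittest.TestCase):
    def test_part_number_is_read_from_parent(self) -> None:
        stub_order = Mock()  # stands in for a deployed Order contract
        stub_order.part_number.return_value = "PN-42"
        job = PrintJob(stub_order)
        self.assertEqual(job.part_number(), "PN-42")
        stub_order.part_number.assert_called_once_with()

    def test_complete_updates_status(self) -> None:
        job = PrintJob(Mock())
        job.complete()
        self.assertEqual(job.status, "complete")
```

Running `python -m unittest` on the module executes both tests without deploying any parent contract.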

Transaction Proxy

Smart Contracts, once deployed on a blockchain, can be cumbersome and difficult to interact with. When a Smart Contract is compiled, an ABI (Application Binary Interface) is created. The ABI defines what methods and variables the contract contains, as well as how to interact with them. Since a client needs the ABI to communicate with a contract, we decided to create a single service that would hold a copy of the ABIs used in our solution and broker all interaction with the Smart Contracts.
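Below is an illustration of what an ABI holds, using a hand-written fragment in the standard Solidity JSON ABI format. The `isBuyer` entry is hypothetical. The sketch locates a method and builds its canonical signature. In Ethereum, the first four bytes of the signature’s Keccak-256 hash form the function selector. Python’s `hashlib.sha3_256` is the standardized SHA-3 and differs from Keccak-256, so the sketch stops at the signature.

```python
import json
from typing import Any, Dict, List

SELLER_ABI_JSON = """
[
  {"type": "function", "name": "onboardBuyer",
   "inputs": [{"name": "buyerWallet", "type": "address"}],
   "outputs": [], "stateMutability": "nonpayable"},
  {"type": "function", "name": "isBuyer",
   "inputs": [{"name": "wallet", "type": "address"}],
   "outputs": [{"name": "", "type": "bool"}], "stateMutability": "view"}
]
"""


def find_function(abi: List[Dict[str, Any]], name: str) -> Dict[str, Any]:
    """Return the ABI entry for a function by name (overloads not supported here)."""
    matches = [e for e in abi if e.get("type") == "function" and e.get("name") == name]
    if not matches:
        raise KeyError(f"no function named {name!r}")
    if len(matches) > 1:
        raise ValueError(f"{name!r} is overloaded; disambiguate by input types")
    return matches[0]


def canonical_signature(entry: Dict[str, Any]) -> str:
    """Build e.g. 'onboardBuyer(address)'; handles simple (non-tuple) types only."""
    types = ",".join(param["type"] for param in entry["inputs"])
    return f"{entry['name']}({types})"
```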

We called this service the Transaction Proxy and gave it three responsibilities:

- Form transaction payloads for Smart Contract method calls

- Submit signed transaction payloads

- Read data contained within Smart Contracts
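The three responsibilities listed above map onto two kinds of Ethereum JSON-RPC calls. Reads of `view` or `pure` methods can go through `eth_call`, which executes on the node without changing state. State-changing methods need a signed transaction submitted through `eth_sendRawTransaction`. The post does not describe how the Transaction Proxy made this choice. The sketch below assumes it was driven by the ABI’s `stateMutability` field (or the legacy `constant` flag found in older ABIs).

```python
from enum import Enum
from typing import Any, Dict, List


class Action(Enum):
    READ = "eth_call"                    # executed on the node; no state change
    TRANSACT = "eth_sendRawTransaction"  # submits a signed transaction


def classify(entry: Dict[str, Any]) -> Action:
    """Decide whether an ABI function entry is a read or a transaction."""
    mutability = entry.get("stateMutability")
    if mutability is None:
        # Older ABIs used a boolean "constant" flag instead of stateMutability.
        return Action.READ if entry.get("constant") else Action.TRANSACT
    return Action.READ if mutability in ("view", "pure") else Action.TRANSACT


def rpc_request(action: Action, params: List[Any], request_id: int = 1) -> Dict[str, Any]:
    """Wrap parameters in a JSON-RPC 2.0 envelope for the chosen method."""
    return {"jsonrpc": "2.0", "method": action.value, "params": params, "id": request_id}
```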

This utility service meant that instead of having to update every service with a copy of the Smart Contract ABIs (so that it can communicate with the blockchain), we could store them in only one place (the Transaction Proxy), and funnel all interaction through the Transaction Proxy. Additionally, this service is application-agnostic and can be used with other projects in the future.

The implementation turned out to be pretty straightforward. First, an RPC (Remote Procedure Call) connection to a blockchain node is established, allowing the Transaction Proxy to interact with the blockchain. In our case it was the Ethereum POA (Proof-Of-Authority) Consortium we used for development. Secondly, the Transaction Proxy needs to have access to the Smart Contract ABI files so that it can properly form transaction payloads. For this engagement, we implemented support for local filesystem lookup and Azure Blob Storage lookup. Lastly, we expose the functionality of the Transaction Proxy through a Web API using Azure Functions.
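The ABI lookup step can sit behind a small interface, so that local-filesystem and Azure Blob Storage backends are interchangeable. Below is a sketch of only the local-filesystem side. The `<ContractName>.json` file layout and the `AbiStore`/`LocalAbiStore` names are assumptions. The loader accepts either a bare ABI list or a Truffle-style build artifact, which wraps the ABI in an `"abi"` key. A Blob Storage backend would implement the same `load` method, and we do not show it here.

```python
import json
from pathlib import Path
from typing import Any, Dict, List, Protocol

Abi = List[Dict[str, Any]]


class AbiStore(Protocol):
    def load(self, contract_name: str) -> Abi: ...


class LocalAbiStore:
    """Reads <contract_name>.json from a directory (hypothetical layout) and caches it."""

    def __init__(self, root: Path) -> None:
        self.root = root
        self._cache: Dict[str, Abi] = {}

    def load(self, contract_name: str) -> Abi:
        # contractName arrives from a web request, so reject anything path-like.
        if not contract_name.isidentifier():
            raise ValueError(f"invalid contract name {contract_name!r}")
        if contract_name not in self._cache:
            data = json.loads((self.root / f"{contract_name}.json").read_text(encoding="utf-8"))
            # Truffle artifacts wrap the ABI in an "abi" key; a bare ABI is just a list.
            self._cache[contract_name] = data["abi"] if isinstance(data, dict) else data
        return self._cache[contract_name]
```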

Here’s an example request that creates a transaction payload for calling the onboardBuyer(address buyerWallet) method on the Seller contract:

```http
POST https://transaction-proxy.azurewebsites.net/create

{
  "from": "0x30ab3d9f876005ed7f351b7cd7330b90136deb92",
  "to": "0x0c7079484589afa47f04f625c12f747376a43e00",
  "contractName": "Seller",
  "method": "onboardBuyer",
  "arguments": {
    "buyerWallet": "0xe01fd9bf37ada2eee73fca87a44aa853014e1c8b"
  }
}
```
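A request like this passes arguments by name, but ABI encoding is positional. The proxy therefore has to put the named arguments into the order the ABI declares. The sketch below shows one way to do that, with basic validation of `address` values. `order_arguments` is a hypothetical helper, not the Transaction Proxy’s actual code.

```python
import re
from typing import Any, Dict, List

_ADDRESS = re.compile(r"^0x[0-9a-fA-F]{40}$")


def order_arguments(abi: List[Dict[str, Any]], method: str, named_args: Dict[str, Any]) -> List[Any]:
    """Map a request's named arguments onto the ABI's positional parameter order."""
    entry = next((e for e in abi if e.get("type") == "function" and e.get("name") == method), None)
    if entry is None:
        raise KeyError(f"unknown method {method!r}")
    names = [param["name"] for param in entry["inputs"]]
    missing = set(names) - set(named_args)
    unexpected = set(named_args) - set(names)
    if missing or unexpected:
        raise ValueError(f"missing={sorted(missing)} unexpected={sorted(unexpected)}")
    ordered = []
    for param in entry["inputs"]:
        value = named_args[param["name"]]
        # Reject values that cannot be a 20-byte hex address.
        if param["type"] == "address" and not _ADDRESS.match(str(value)):
            raise ValueError(f"{param['name']} is not a 20-byte hex address")
        ordered.append(value)
    return ordered
```

With the request body above and a Seller ABI declaring `onboardBuyer(address buyerWallet)`, the result is the single-element list containing the buyer’s wallet address.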

This let clients interact with the Smart Contracts in our solution through the Transaction Proxy without needing the ABIs themselves. Callers still must know the names of the contracts they wish to interact with and the names of the methods to call, but that is far easier to manage than distributing entire ABI files.

The Transaction Proxy’s source code can be accessed on GitHub.

Agent

Interacting with the blockchain through the Transaction Proxy requires contextual knowledge of which Smart Contracts are being interacted with. If you didn’t know that a Smart Contract named Buyer existed and had a method named GetCatalog, you’d never know what to put in the "contractName" and "method" fields of a request to the Transaction Proxy. We wanted to hide this kind of interaction from the user, so we created another microservice, called the Agent, for the user to interact with instead.

To accomplish this we had the Agent implement individual endpoints for each relevant Smart Contract method.

For instance, the Agent endpoint GET /buyer/0x123.../orders/ returns a list of all orders; the Agent fulfills it by having the Transaction Proxy call the GetOrders() method on the OrderRegistry contract belonging to the buyer at the 0x123... address.

Likewise, the endpoint POST /parts/cf0194z creates an order for the part whose hash is cf0194z.
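The Agent’s job here is mostly translation: it turns a REST route into the contract name, method, target address, and arguments that the Transaction Proxy expects. A sketch of that mapping for the two endpoints above follows. It makes several assumptions. The `resolve` callback stands in for looking up a buyer’s contract addresses (for example, via the Buyer Root). `OrderPart` is a hypothetical method name, since the post does not name the ordering method.

```python
import re
from dataclasses import dataclass, field
from typing import Callable, Dict, Optional

# Hypothetical resolver: (owner wallet, contract name) -> contract address.
Resolver = Callable[[str, str], str]

ADDRESS = r"0x[0-9a-fA-F]{40}"
ORDERS_ROUTE = re.compile(rf"^/buyer/(?P<buyer>{ADDRESS})/orders/?$")
PART_ROUTE = re.compile(r"^/parts/(?P<part_hash>[0-9A-Za-z]+)/?$")


@dataclass(frozen=True)
class ProxyCall:
    """The fields an Agent would send to the Transaction Proxy."""
    contract_name: str
    method: str
    to: str
    arguments: Dict[str, str] = field(default_factory=dict)


def route(verb: str, path: str, caller: str, resolve: Resolver) -> Optional[ProxyCall]:
    """Translate an Agent endpoint into a Transaction Proxy call, or None if unknown."""
    m = ORDERS_ROUTE.match(path)
    if verb == "GET" and m:
        registry = resolve(m["buyer"], "OrderRegistry")
        return ProxyCall("OrderRegistry", "GetOrders", registry)
    m = PART_ROUTE.match(path)
    if verb == "POST" and m:
        # The order is placed in the calling buyer's own Catalog.
        catalog = resolve(caller, "Catalog")
        return ProxyCall("Catalog", "OrderPart", catalog, {"partHash": m["part_hash"]})
    return None
```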

We also realized that some operations that might take multiple calls to the Transaction Proxy (and therefore the Smart Contracts) could be simplified into a single call to the Agent.

One example is a request for the details of an order. In our solution, an order has a number of details associated with it: the quantity of parts ordered, the hash of the part, how many parts have been printed, and more. Because the data is stored in a Smart Contract, access is also limited to what Smart Contracts allow. One such restriction was that, without the then-experimental ABI encoder v2, Solidity methods could not return structs, so each detail of the order (quantity, hash, etc.) had to be retrieved individually.

We weren’t satisfied with requiring API users to make a separate call for each detail; we wanted to return all of them in a single call. We therefore wrote additional business logic into the GET /order/0x321... endpoint so that the Agent makes multiple calls to the Transaction Proxy, retrieving each detail and then combining them into a single response.
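Below is a sketch of that aggregation step. It makes one read per detail and merges the results into a single response. Both the `read` callback and the getter names are hypothetical: the callback stands in for a Transaction Proxy read, and the post does not list the Order contract’s getters. The reads run concurrently with `asyncio.gather`, which returns results in argument order. The post does not say whether the Agent parallelized its calls.

```python
import asyncio
from typing import Any, Awaitable, Callable, Dict

# Hypothetical: read(contract_address, method_name) -> value, backed by the Transaction Proxy.
ReadFn = Callable[[str, str], Awaitable[Any]]

# Hypothetical getter names on the Order contract, one per detail.
ORDER_GETTERS: Dict[str, str] = {
    "quantity": "getQuantity",
    "partHash": "getPartHash",
    "printed": "getPrintCount",
}


async def get_order_details(order_address: str, read: ReadFn) -> Dict[str, Any]:
    """Fetch each order detail with its own read, then merge them into one response."""
    fields = list(ORDER_GETTERS)
    values = await asyncio.gather(*(read(order_address, ORDER_GETTERS[f]) for f in fields))
    return dict(zip(fields, values))
```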

The Oracle

At this point our solution was almost complete. We had Smart Contracts that allowed us to store and interact with our data on the blockchain, a Transaction Proxy that simplified interacting with Smart Contracts, and an Agent that provided a specialized and user-friendly interface for the Transaction Proxy. The last piece of our solution was to enable the Agent to react to changes to the blockchain instead of only when a user accessed it through a Web API.

In our solution, whenever a request for a 3D part file to be transferred is created on the blockchain, we needed the Agent to automatically see this and react accordingly. To do this, we needed a way to monitor the blockchain transactions and look for events indicating a transfer was created.

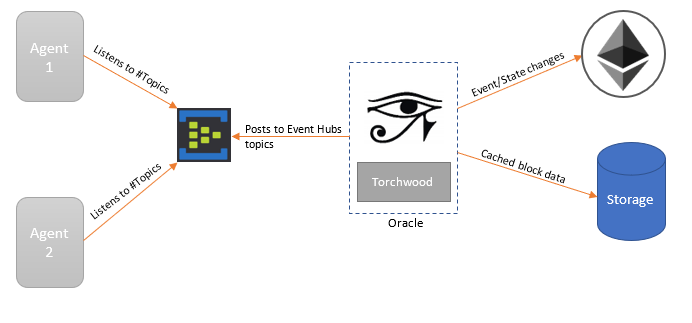

To this end, we created an Oracle. Oracles typically find and verify real-world occurrences and submit this information to a blockchain for Smart Contracts to use; they can also work by listening to events or activities on the chain. For this project we chose a chain watcher approach rather than the usual event listening approach. In other words, we wanted an Oracle that would read every block on the chain and log all changes made to a contract, as opposed to only listening for events emitted by a Smart Contract.
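At its core, the chain watcher approach means scanning every block and keeping the transactions addressed to contracts of interest. The toy sketch below uses simplified in-memory block and transaction types, which are assumptions, not Torchwood’s data model. One caveat: calls that one contract makes to another internally do not appear as top-level transactions. A fuller watcher would also need to inspect traces or logs to catch them.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator, Optional, Set, Tuple


@dataclass(frozen=True)
class Tx:
    hash: str
    to: Optional[str]  # None for contract-creation transactions
    input: str         # hex-encoded call data


@dataclass(frozen=True)
class Block:
    number: int
    transactions: Tuple[Tx, ...]


@dataclass(frozen=True)
class ContractChange:
    block_number: int
    contract: str
    tx_hash: str


def watch(blocks: Iterable[Block], watched: Set[str], start_block: int = 0) -> Iterator[ContractChange]:
    """Scan every block and yield each transaction sent to a watched contract."""
    targets = {address.lower() for address in watched}  # compare addresses case-insensitively
    for block in blocks:
        if block.number < start_block:
            continue  # already processed in an earlier run
        for tx in block.transactions:
            if tx.to is not None and tx.to.lower() in targets:
                yield ContractChange(block.number, tx.to.lower(), tx.hash)
```

Persisting the last processed block number and passing it back as `start_block` lets the watcher resume after a restart without re-reporting changes.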

To implement the Oracle this way, we leveraged the Torchwood project, an open-source Ethereum library for reading the blocks on a chain and logging contract changes. The Torchwood project was started here at Microsoft, and we extended its functionality so that it could also detect and read event triggers on the chain. Torchwood also provided the ability to cache chain data in storage, allowing for further processing.

The next obstacle was deciding how the Oracle would notify the Agent. We wanted to follow an observer design pattern to create a loosely coupled connection. Also, it was vital to ensure multiple agents could listen for the same contract changes. For these reasons, Event Hubs was the ideal choice for our needs. The Oracle would post any changes on Event Hubs topics and the agents could subscribe to any topics they were interested in.
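The observer relationship between the Oracle and the Agents can be illustrated with an in-memory publish/subscribe broker. The sketch below is a stand-in for Event Hubs, not the Azure SDK, and the topic name `TransferRequestCreated` is hypothetical. It shows the property that mattered here: the Oracle publishes once, and every subscribed Agent receives the event without either side knowing about the other.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, Dict, List

Event = Dict[str, Any]
Handler = Callable[[Event], None]


class InMemoryBroker:
    """Minimal publish/subscribe broker standing in for Event Hubs in this sketch."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: Event) -> int:
        """Deliver the event to every subscriber of the topic; return how many received it."""
        handlers = list(self._subscribers.get(topic, ()))
        for handler in handlers:
            handler(event)
        return len(handlers)


broker = InMemoryBroker()
agent_a: List[Event] = []
agent_b: List[Event] = []
broker.subscribe("TransferRequestCreated", agent_a.append)
broker.subscribe("TransferRequestCreated", agent_b.append)
```

In Event Hubs itself, independent readers are typically given separate consumer groups, so each Agent sees the full stream instead of competing for events.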

Summary

MOOG wanted to build a technology solution that proves the provenance and transfer of digital assets securely between parties. Through examination of available technologies, Microsoft and MOOG set out to develop a demo of this solution that leverages Azure and blockchain technology.

Along the way we discovered that many parts of our design pattern are reusable for similar projects built on blockchain. We have open-sourced the Oracle and we’re exploring doing the same with Transaction Notary, hoping that it will help others designing similar solutions.

Additional Reading

[1] Deploying a private Ethereum Proof-of-Authority Consortium on Azure: https://docs.microsoft.com/en-us/azure/blockchain/templates/ethereum-poa-deployment

[2] Ganache, a personal blockchain: https://truffleframework.com/ganache

[3] What are smart contracts? https://blockgeeks.com/guides/smart-contracts/