We’re announcing the general availability of Azure OpenAI’s codex-mini in Azure AI Foundry Models.

codex-mini is a fine-tuned version of the o4-mini model, designed to deliver rapid, instruction-following performance for developers working in CLI workflows. Whether you’re automating shell commands, editing scripts, or refactoring repositories, codex-mini brings speed, precision, and scalability to your terminal.

Why codex-mini?

- ⚡ Optimized for Speed: Delivers fast Q&A and code edits with minimal overhead.

- 🧠 Instruction-Following: Retains Codex-1’s strengths in understanding natural language prompts.

- 🖥️ CLI-Native: Interprets natural language and returns shell commands or code snippets.

- 📏 Long Context: Supports up to 200k-token inputs—ideal for full repo ingestion and refactoring.

- 🔧 Lightweight and Scalable: Designed for cost-efficient deployment with a small capacity footprint.

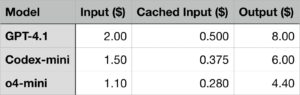

- 💸 Cost-Efficient: Approximately 25% more cost-effective than GPT-4.1 when using a similar mix of input and output tokens.

- 🔗 Codex CLI Compatible: Seamlessly integrates with codex-cli for streamlined developer workflows.

For full pricing details across all Azure OpenAI models, visit the Azure OpenAI pricing page.
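To get a feel for the 200k-token input limit mentioned above, here is a minimal sketch that estimates whether a repository would fit in a single prompt. It assumes a rough heuristic of about four characters per token, which is not the model's actual tokenizer, and the function names, file extensions, and the 20,000-token reserve for instructions are illustrative choices, not part of any Azure tooling.

```python
import os

CONTEXT_LIMIT_TOKENS = 200_000  # input limit quoted in this announcement
CHARS_PER_TOKEN = 4             # assumption: rough heuristic, not the real tokenizer


def estimate_tokens(text: str) -> int:
    """Approximate the token count of a string (ceiling of len / 4)."""
    return -(-len(text) // CHARS_PER_TOKEN)


def estimate_repo_tokens(root: str, extensions=(".py", ".sh", ".md")) -> int:
    """Sum the estimated tokens of all matching files under root."""
    total = 0
    for dirpath, dirnames, filenames in os.walk(root):
        # Skip hidden directories such as .git
        dirnames[:] = [d for d in dirnames if not d.startswith(".")]
        for name in filenames:
            if name.endswith(tuple(extensions)):
                path = os.path.join(dirpath, name)
                with open(path, encoding="utf-8", errors="ignore") as f:
                    total += estimate_tokens(f.read())
    return total


def fits_in_context(token_count: int, reserve: int = 20_000) -> bool:
    """True if the estimate plus a reserve for instructions stays within the limit."""
    return token_count + reserve <= CONTEXT_LIMIT_TOKENS


if __name__ == "__main__":
    tokens = estimate_repo_tokens(".")
    print(f"Estimated tokens: {tokens}, fits: {fits_in_context(tokens)}")
```

Because the estimate is only approximate, treat a result near the limit as a signal to trim or split the input rather than as a guarantee.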

How to use the Azure OpenAI codex-mini model with the OpenAI Codex CLI

For a hands-on walkthrough of deploying and using codex-mini in real-world engineering workflows, check out this detailed guide by Govind Kamtamneni. His blog post covers everything from CLI setup to GitHub Actions integration, all within the secure, enterprise-grade Azure environment.

We’ve been contributing to OpenAI’s Codex CLI in open source. OpenAI has accepted our pull request, and we are awaiting final review.

Learn more and get started at codex-mini – Azure AI Foundry

Get Access

codex-mini is available in East US 2 and Sweden Central with the Global Standard deployment type. To build with codex-mini, use the v1 preview API.
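As a rough illustration of calling the v1 preview API, the sketch below builds a request using only the Python standard library. The URL path (`/openai/v1/responses` with `api-version=preview`), the `api-key` header, the environment variable names, and the deployment name `codex-mini` are assumptions based on the usual Azure OpenAI conventions; check them against the current Azure documentation and your own resource before relying on them.

```python
import json
import os
import urllib.request


def build_responses_request(endpoint: str, api_key: str, deployment: str, prompt: str):
    """Build (but do not send) a POST request for the assumed v1 preview route."""
    # Assumed route: <endpoint>/openai/v1/responses?api-version=preview
    url = endpoint.rstrip("/") + "/openai/v1/responses?api-version=preview"
    body = json.dumps({"model": deployment, "input": prompt}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json", "api-key": api_key},
        method="POST",
    )


# Only send the request when credentials are configured (hypothetical variable names).
if __name__ == "__main__" and os.environ.get("AZURE_OPENAI_ENDPOINT"):
    request = build_responses_request(
        os.environ["AZURE_OPENAI_ENDPOINT"],
        os.environ["AZURE_OPENAI_API_KEY"],
        "codex-mini",  # assumption: your deployment name may differ
        "Write a shell command that lists the five largest files in this directory.",
    )
    with urllib.request.urlopen(request) as response:
        print(json.dumps(json.load(response), indent=2))
```

Keeping request construction separate from sending makes it easy to inspect the URL and payload before any network call is made.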

Key Capabilities

codex-mini supports features such as streaming, function calling, structured outputs, and image input. With these capabilities in mind, developers can leverage codex-mini for a range of fast, scalable code generation tasks in command-line environments.
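To make function calling more concrete, here is a hedged sketch of how a tool might be declared and how a returned call could be dispatched locally. The `list_dir` tool, the helper names, and the handler are hypothetical, and the item shapes (`function_call` with a JSON `arguments` string and a `call_id`, answered by `function_call_output`) are assumed from the OpenAI Responses API format rather than confirmed by this announcement.

```python
import json

# Hypothetical tool, declared in the flat function-tool shape assumed for the Responses API.
LIST_DIR_TOOL = {
    "type": "function",
    "name": "list_dir",
    "description": "List the entries of a directory in the working tree.",
    "parameters": {
        "type": "object",
        "properties": {"path": {"type": "string"}},
        "required": ["path"],
        "additionalProperties": False,
    },
}


def build_payload(deployment: str, prompt: str) -> dict:
    """Request body that offers the tool to the model."""
    return {"model": deployment, "input": prompt, "tools": [LIST_DIR_TOOL]}


def handle_function_call(item: dict, handlers: dict) -> dict:
    """Run the local handler named by a function_call item and wrap its result."""
    arguments = json.loads(item["arguments"])  # arguments arrive as a JSON string
    result = handlers[item["name"]](**arguments)
    return {
        "type": "function_call_output",
        "call_id": item["call_id"],
        "output": json.dumps(result),
    }


if __name__ == "__main__":
    # Simulated model output; a real item would come from the API response.
    simulated = {
        "type": "function_call",
        "name": "list_dir",
        "arguments": '{"path": "."}',
        "call_id": "call_1",
    }
    print(handle_function_call(simulated, {"list_dir": lambda path: ["README.md"]}))
```

In a CLI setting, limiting the handlers to a small, explicit allowlist like this keeps the model from triggering arbitrary commands.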

codex-mini is now available via the Azure OpenAI API and Codex CLI. For developers who need fast, low-latency code generation in terminal environments, this purpose-built model adds a powerful new tool to the AI toolkit.

codex-mini is not just a code generator; it is a precursor of the next era of development. Its integration into the command line suggests a future in which developers orchestrate and build applications directly from the terminal, combining the speed of AI with the flexibility of CLI tools. This model shows that software engineering is evolving toward "agent orchestration," where the key skill is not only writing code but also guiding and optimizing AI models so that they write it efficiently and safely.