Coinciding with the Microsoft Ignite 2019 conference, we are thrilled to announce the GA release of ML.NET 1.4 and updates to Model Builder in Visual Studio, with exciting new machine learning features that let you bring new innovation to your .NET applications.

ML.NET is an open-source and cross-platform machine learning framework for .NET developers. ML.NET also includes Model Builder (an easy-to-use UI tool in Visual Studio) and the ML.NET CLI (command-line interface), which make it easy to build custom machine learning (ML) models using automated machine learning (AutoML).

Using ML.NET, developers can leverage their existing tools and skill sets to develop custom machine learning models and infuse them into their applications for common scenarios like sentiment analysis, price prediction, sales forecasting, customer segmentation, image classification and more!

Following are some of the key highlights in this update:

ML.NET Updates

In ML.NET 1.4 GA we have released many exciting improvements and new features that are described in the following sections.

Image classification based on deep neural network retraining with GPU support (GA release)

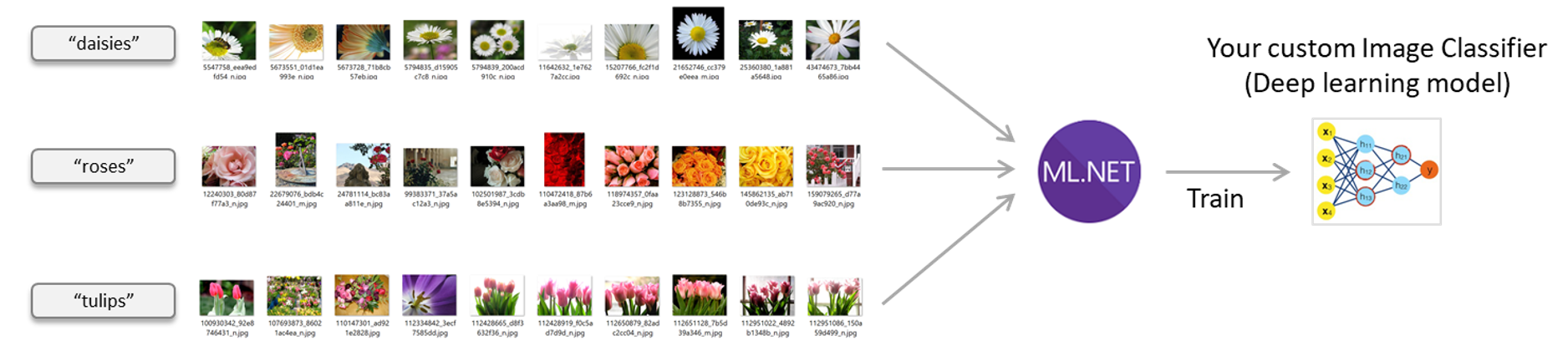

This feature enables native DNN (Deep Neural Network) transfer learning with ML.NET targeting image classification.

For instance, with this feature you can create your own custom image classifier model by natively training a TensorFlow model from the ML.NET API with your own images.

Image classifier scenario – Train your own custom deep learning model with ML.NET

ML.NET uses TensorFlow through the low-level bindings provided by the TensorFlow.NET library. The advantage of ML.NET is that it offers a high-level API that is very simple to use: with just a couple of lines of C# code you can define and train an image classification model. Doing the same directly with the low-level TensorFlow.NET library would take hundreds of lines of code.

The TensorFlow.NET library is an open-source, low-level API library that provides .NET Standard bindings for TensorFlow. It is part of the open-source SciSharp stack of libraries.

The stack diagram below shows how ML.NET implements these new DNN training features.

Image classification is the first main scenario provided by these high-level APIs. The goal for this new API is to enable easy-to-use DNN training for additional scenarios in the future, such as object detection, by providing a powerful yet simple API.

This image classification feature was initially released in v1.4-preview. Now we’re releasing it as GA, and we’ve added the following new capabilities:

Improvements in v1.4 GA for Image Classification

The main new capabilities in this feature added since v1.4-preview are:

- GPU support on Windows and Linux. GPU support is based on NVIDIA CUDA. Check the hardware/software requirements and the GPU requirements installation procedure here. You can also train on CPU if you cannot meet the GPU requirements.

  - SciSharp TensorFlow redistributable supported for CPU or GPU: ML.NET is compatible with SciSharp.TensorFlow.Redist (CPU training), SciSharp.TensorFlow.Redist-Windows-GPU (GPU training on Windows) and SciSharp.TensorFlow.Redist-Linux-GPU (GPU training on Linux).

- Predictions on in-memory images: You can make predictions with in-memory images instead of file paths, which gives you more flexibility in your app. See the sample web app using in-memory images here.

- Training early stopping: Training stops when the optimal accuracy has been reached and is no longer improving with additional training cycles (epochs).

- Learning rate scheduling: The learning rate is an integral and potentially difficult part of deep learning. Learning rate schedulers give users a way to optimize the learning rate by starting with a high initial value that decays over time. A high initial learning rate helps introduce randomness into the system, allowing the loss function to better find the global minimum, while the decayed learning rate helps stabilize the loss over time. We have implemented the Exponential Decay and Polynomial Decay learning rate schedulers.

- Additional supported DNN architectures for the Image Classifier: The list of supported DNN architectures (pre-trained TensorFlow models) used internally as the base for ‘transfer learning’ has grown to the following:

  - Inception V3 (was available in Preview)

  - ResNet V2 101 (was available in Preview)

  - ResNet V2 50 (added in GA)

  - MobileNet V2 (added in GA)

These pre-trained TensorFlow models (DNN architectures) are widely used image recognition models. They were trained on very large image sets such as the ImageNet dataset and are the culmination of many ideas developed by multiple researchers over the years. You can now take advantage of them through our easy-to-use API in .NET.
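The following is a minimal, self-contained C# sketch of the early stopping and learning rate scheduling capabilities listed above. It is not ML.NET's implementation. The names `TrainingSchedules` and `EarlyStopper`, their parameters and their defaults are hypothetical.

The decay formulas are the common TensorFlow-style definitions:
- Exponential decay: `lr = lr0 * rate^(step / decaySteps)`
- Polynomial decay: `lr = (lr0 - lrEnd) * (1 - step / decaySteps)^power + lrEnd`

ML.NET's own schedulers may parameterize these differently.

```csharp
using System;

// Illustrative learning rate schedules (not ML.NET's API)
public static class TrainingSchedules
{
    // Exponential decay: lr = initialRate * decayRate^(step / decaySteps).
    // With staircase = true the exponent is floored, so the rate drops in discrete steps.
    public static double ExponentialDecay(double initialRate, int step, int decaySteps,
                                          double decayRate, bool staircase = false)
    {
        double exponent = (double)step / decaySteps;
        if (staircase)
            exponent = Math.Floor(exponent);
        return initialRate * Math.Pow(decayRate, exponent);
    }

    // Polynomial decay from initialRate down to endRate over decaySteps,
    // after which the rate stays at endRate.
    public static double PolynomialDecay(double initialRate, int step, int decaySteps,
                                         double endRate, double power = 1.0)
    {
        int clampedStep = Math.Min(step, decaySteps);
        double remaining = 1.0 - (double)clampedStep / decaySteps;
        return (initialRate - endRate) * Math.Pow(remaining, power) + endRate;
    }
}

// Patience-based early stopping on a metric that should increase (e.g. validation accuracy):
// stop once the metric has not improved by at least minDelta for `patience` consecutive epochs.
public sealed class EarlyStopper
{
    private readonly double _minDelta;
    private readonly int _patience;
    private double _best = double.NegativeInfinity;
    private int _epochsWithoutImprovement;

    public EarlyStopper(double minDelta, int patience)
    {
        _minDelta = minDelta;
        _patience = patience;
    }

    // Call once per epoch; returns true when training should stop.
    public bool ShouldStop(double metric)
    {
        if (metric > _best + _minDelta)
        {
            _best = metric;
            _epochsWithoutImprovement = 0;
        }
        else
        {
            _epochsWithoutImprovement++;
        }
        return _epochsWithoutImprovement >= _patience;
    }
}
```

In a training loop, you would compute the learning rate for the current step before each batch and call `ShouldStop` once per epoch with the validation accuracy.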

Example code using the new ImageClassification trainer

The following code example shows how easily you can train a new TensorFlow model.

Image classifier high level API code example:

// Define the model's pipeline with ImageClassification defaults (simplest way)
var pipeline = mlContext.MulticlassClassification.Trainers
    .ImageClassification(featureColumnName: "Image",
                         labelColumnName: "LabelAsKey",
                         validationSet: testDataView)
    .Append(mlContext.Transforms.Conversion.MapKeyToValue(outputColumnName: "PredictedLabel",
                                                          inputColumnName: "PredictedLabel"));

// Train the model
ITransformer trainedModel = pipeline.Fit(trainDataView);

The important line in the code above is the one using the ImageClassification trainer. As you can see, it is a high-level API where you only need to provide the following:
- the column that holds the images
- the column with the labels (the column to predict)
- a validation dataset, used to calculate quality metrics during training so the model can tune itself (change internal hyper-parameters) as it trains

There’s another overloaded method for advanced users where you can also specify those optional hyper-parameters such as epochs, batchSize, learningRate and other typical DNN parameters, but most users can get started with the simplified API.

Under the covers, this model training is based on native TensorFlow DNN transfer learning from a default architecture (pre-trained model), ResNet V2 50. You can also select the architecture you want to derive from by configuring the optional hyper-parameters.
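The following sketch illustrates the advanced overload. It assumes ML.NET 1.4 GA with the Microsoft.ML.Vision and SciSharp.TensorFlow.Redist packages, and the same "Image" and "LabelAsKey" columns as the simple example above.

The `ImageClassificationTrainer.Options` properties shown (`Arch`, `Epoch`, `BatchSize`, `LearningRate`, `MetricsCallback`, `ValidationSet`) are the ones used in the ML.NET samples. The wrapper class `AdvancedImageClassification` and the numeric values are hypothetical, not tuned recommendations.

```csharp
using System;
using Microsoft.ML;
using Microsoft.ML.Vision;

public static class AdvancedImageClassification
{
    // trainDataView and validationDataView are assumed to contain an "Image" column
    // (raw image bytes) and a "LabelAsKey" key column, as in the simple example.
    public static ITransformer Train(MLContext mlContext, IDataView trainDataView, IDataView validationDataView)
    {
        var options = new ImageClassificationTrainer.Options
        {
            FeatureColumnName = "Image",
            LabelColumnName = "LabelAsKey",
            ValidationSet = validationDataView,
            // Pre-trained base architecture used for transfer learning
            Arch = ImageClassificationTrainer.Architecture.ResnetV250,
            // Illustrative hyper-parameter values
            Epoch = 50,
            BatchSize = 10,
            LearningRate = 0.01f,
            // Print training/validation metrics as training progresses
            MetricsCallback = metrics => Console.WriteLine(metrics)
        };

        var pipeline = mlContext.MulticlassClassification.Trainers.ImageClassification(options)
            .Append(mlContext.Transforms.Conversion.MapKeyToValue(
                outputColumnName: "PredictedLabel",
                inputColumnName: "PredictedLabel"));

        return pipeline.Fit(trainDataView);
    }
}
```

Swapping `Arch` for `InceptionV3`, `ResnetV2101` or `MobilenetV2` selects one of the other base architectures listed earlier.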

For further learning read the following resources:

- Sample app: end-to-end sample app training a TensorFlow model with custom images, including a web app for predicting with in-memory images coming through HTTP. Supports GPU- or CPU-based training.

- Detailed Blog Post: Training Image Classification/Recognition models based on Deep Learning & Transfer Learning with ML.NET

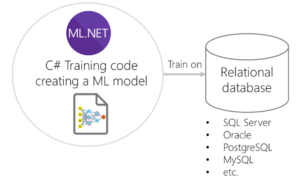

Database Loader (GA Release)

This feature was previously introduced as a preview and is now generally available in v1.4.

The database loader lets you load data from databases into an IDataView, and therefore lets you train models directly against relational databases. The loader supports any relational database provider supported by System.Data in .NET Core or .NET Framework. This means you can use any RDBMS such as SQL Server, Azure SQL Database, Oracle, SQLite, PostgreSQL, MySQL, Progress, etc.

In previous ML.NET releases, you could also train against a relational database by providing data through an IEnumerable collection with the LoadFromEnumerable() API. That data could come from a relational database or any other source. However, with that approach you, as a developer, are responsible for the code that reads from the relational database (using Entity Framework or any other approach). That code needs to be implemented properly so that data is streamed while the ML model trains, as in this previous sample using LoadFromEnumerable().

The new Database Loader makes this much simpler, because ML.NET provides reading from the database and exposing the data through the IDataView out of the box. You just need to specify the following:
- your database connection string
- the SQL statement for the dataset columns
- the data class to use when loading the data

It is that simple!

Here’s example code showing how you can load data directly from a relational database into an IDataView, which you will later use to train your model.

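using System.Data.Common;
using System.Data.SqlClient;
using Microsoft.ML;
using Microsoft.ML.Data;

// Lines of code for loading data from a database into an IDataView for later model training
var mlContext = new MLContext();

string connectionString = @"Data Source=YOUR_SERVER;Initial Catalog=YOUR_DATABASE;Integrated Security=True";
string commandText = "SELECT * FROM SentimentDataset";

// The generic overload defines the loader's columns from the fields of SentimentData
DatabaseLoader loader = mlContext.Data.CreateDatabaseLoader<SentimentData>();

// On .NET Core, DbProviderFactories.GetFactory only finds providers registered with
// RegisterFactory, so the SQL Server provider factory instance is used directly
DbProviderFactory providerFactory = SqlClientFactory.Instance;

DatabaseSource dbSource = new DatabaseSource(providerFactory, connectionString, commandText);
IDataView trainingDataView = loader.Load(dbSource);

// ML.NET model training code using the training IDataView
//...

public class SentimentData
{
    public string FeedbackText;
    public string Label;
}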

It is important to highlight that, just as when training from files, ML.NET supports data streaming when training from a database. The whole database doesn’t need to fit into memory, because ML.NET reads from the database as needed. This means you can handle very large databases (e.g. 50 GB, 100 GB or larger).

Resources for the DatabaseLoader:

- Sample app: For further learning, see this complete sample app using the new DatabaseLoader.

- ‘How to’ doc: For a step-by-step explanation, follow the Load data from a relational database ‘How to’ document.

PredictionEnginePool for scalable deployments released as GA


When deploying an ML model into multithreaded and scalable .NET Core web applications and services (such as ASP.NET Core web apps, Web APIs or Azure Functions), it is recommended to use the PredictionEnginePool instead of creating a PredictionEngine object directly on every request, for performance and scalability reasons.

The PredictionEnginePool comes as part of the Microsoft.Extensions.ML NuGet package which is being released as GA as part of the ML.NET 1.4 release.

For further details on how to deploy a model with the PredictionEnginePool, read the following resources:

- Tutorials:

- Sample App:

For further background information on why the PredictionEnginePool is recommended, read this blog post.
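For reference, here is a minimal sketch of registering and using the pool in an ASP.NET Core app. It assumes the Microsoft.Extensions.ML package. The input/output classes, the model name "SentimentModel" and the file path "MLModels/sentiment_model.zip" are hypothetical placeholders.

The pool helps because a PredictionEngine is not thread-safe. Sharing one engine across concurrent requests is unsafe, and creating a new one per request is costly. The pool lends engines to callers and reuses them.

```csharp
using Microsoft.Extensions.DependencyInjection;
using Microsoft.Extensions.ML;
using Microsoft.ML.Data;

// Hypothetical model input/output classes; member names must match the model's columns
public class SentimentInput
{
    public string SentimentText { get; set; }
}

public class SentimentPrediction
{
    [ColumnName("PredictedLabel")]
    public bool Prediction { get; set; }

    public float Score { get; set; }
}

public static class MlServiceRegistration
{
    // Call from Startup.ConfigureServices: services.AddSentimentModel();
    public static IServiceCollection AddSentimentModel(this IServiceCollection services)
    {
        services.AddPredictionEnginePool<SentimentInput, SentimentPrediction>()
                .FromFile(modelName: "SentimentModel",
                          filePath: "MLModels/sentiment_model.zip",
                          watchForChanges: true); // reload the model when the .zip file changes
        return services;
    }
}

// Any class created by dependency injection (e.g. a controller) can receive the pool
public class SentimentScorer
{
    private readonly PredictionEnginePool<SentimentInput, SentimentPrediction> _pool;

    public SentimentScorer(PredictionEnginePool<SentimentInput, SentimentPrediction> pool)
    {
        _pool = pool;
    }

    public bool IsPositive(string text)
    {
        // The pool lends a PredictionEngine for this call and takes it back afterwards
        SentimentPrediction result = _pool.Predict(modelName: "SentimentModel",
                                                   example: new SentimentInput { SentimentText = text });
        return result.Prediction;
    }
}
```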

Enhanced for .NET Core 3.0 – Released as GA

ML.NET now also builds for .NET Core 3.0 (optional). This feature was previously released as a preview and is now released as GA.

This means ML.NET can take advantage of new features when running in a .NET Core 3.0 application. The first new feature we are using is hardware intrinsics, which allows .NET code to accelerate math operations by using processor-specific instructions.

Of course, you can still run ML.NET on older versions, but when running on .NET Framework, or .NET Core 2.2 and below, ML.NET uses C++ code that is hard-coded to x86-based SSE instructions. SSE instructions allow for four 32-bit floating-point numbers to be processed in a single instruction.

Modern x86-based processors also support AVX instructions, which allow for processing eight 32-bit floating-point numbers in one instruction. ML.NET’s C# hardware intrinsics code supports both AVX and SSE instructions and will use the best one available. This means when training on a modern processor, ML.NET will now train faster because it can do more concurrent floating-point operations than it could with the existing C++ code that only supported SSE instructions.

Another advantage the C# hardware intrinsics code brings is that when neither SSE nor AVX are supported by the processor, for example on an ARM chip, ML.NET will fall back to doing the math operations one number at a time. This means more processor architectures are now supported by the core ML.NET components. (Note: There are still some components that don’t work on ARM processors, for example FastTree, LightGBM, and OnnxTransformer. These components are written in C++ code that is not currently compiled for ARM processors).
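The dispatch pattern described above can be sketched with the .NET Core 3.0 hardware intrinsics APIs: use AVX if available, otherwise SSE, otherwise process one number at a time. This is an illustrative vectorized sum, not ML.NET's actual CpuMath code. The class name `IntrinsicsSum` is hypothetical.

```csharp
using System;
using System.Runtime.InteropServices;
using System.Runtime.Intrinsics;
using System.Runtime.Intrinsics.X86;

public static class IntrinsicsSum
{
    public static float Sum(ReadOnlySpan<float> values)
    {
        float total = 0f;
        int processed = 0;

        if (Avx.IsSupported)
        {
            // View the floats as 256-bit vectors (8 floats each); leftovers are handled below
            ReadOnlySpan<Vector256<float>> blocks = MemoryMarshal.Cast<float, Vector256<float>>(values);
            Vector256<float> acc = Vector256<float>.Zero;
            foreach (Vector256<float> block in blocks)
                acc = Avx.Add(acc, block); // one instruction adds 8 floats
            for (int lane = 0; lane < Vector256<float>.Count; lane++)
                total += acc.GetElement(lane);
            processed = blocks.Length * Vector256<float>.Count;
        }
        else if (Sse.IsSupported)
        {
            // Same idea with 128-bit vectors (4 floats each)
            ReadOnlySpan<Vector128<float>> blocks = MemoryMarshal.Cast<float, Vector128<float>>(values);
            Vector128<float> acc = Vector128<float>.Zero;
            foreach (Vector128<float> block in blocks)
                acc = Sse.Add(acc, block); // one instruction adds 4 floats
            for (int lane = 0; lane < Vector128<float>.Count; lane++)
                total += acc.GetElement(lane);
            processed = blocks.Length * Vector128<float>.Count;
        }

        // Remaining elements, or all of them when neither AVX nor SSE is available (e.g. on ARM)
        for (int i = processed; i < values.Length; i++)
            total += values[i];

        return total;
    }
}
```

On an ARM processor both `IsSupported` checks return false, so the method falls back to the scalar loop. This mirrors the fallback behavior described above.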

For more information on how ML.NET uses the new hardware intrinsics APIs in .NET Core 3.0, please check out Brian Lui’s blog post Using .NET Hardware Intrinsics API to accelerate machine learning scenarios.

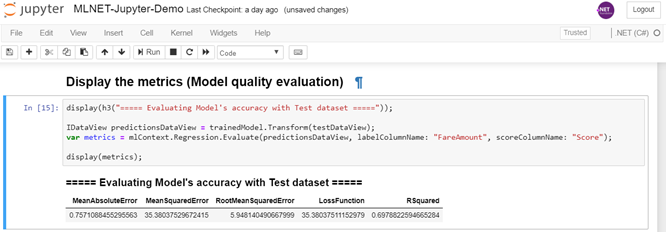

Use ML.NET in Jupyter notebooks

Coinciding with Microsoft Ignite 2019, Microsoft is also announcing .NET support for Jupyter notebooks. You can now run .NET code (C# / F#) in Jupyter notebooks, and therefore ML.NET code as well! Under the covers, this is enabled by the new .NET kernel for Jupyter.

The Jupyter Notebook is an open-source web application that allows you to create and share documents that contain live code, visualizations and narrative text.

In terms of ML.NET this is awesome for many scenarios like exploring and documenting model training experiments, data distribution exploration, data cleaning, plotting data charts, learning scenarios such as ML.NET courses, hands-on-labs and quizzes, etc.

You can start by exploring what kind of data is loaded in an IDataView.

Then you can continue by plotting the data distribution in the Jupyter notebook, following an Exploratory Data Analysis (EDA) approach.

You can also train an ML.NET model and have its training time documented.

Right afterwards, you can see the model’s quality metrics in the notebook and have them documented for later review.

Additional examples are ‘plotting the results of predictions vs. actual data’ and ‘plotting a regression line along with the predictions vs. actual data’, for a better visual analysis.

For additional details, check out this blog post:

- Detailed Blog Post: Using ML.NET on Jupyter notebooks – Blog Post

For a direct “try it out” experience, go to this Jupyter notebook hosted at MyBinder and simply run the ML.NET code:

Live Jupyter Notebook with ML.NET. This will launch a ready-to-use Jupyter environment in the web for trying the experience without needing to install anything.

Updates for Model Builder in Visual Studio

The Model Builder tool for Visual Studio has been updated to use the latest ML.NET GA version (1.4 GA). It also includes exciting new features, such as a visual experience in Visual Studio for local Image Classification model training.

Model Builder updated to latest ML.NET GA version

Model Builder was updated to use the latest GA version of ML.NET (1.4), so the generated C# code also references the ML.NET 1.4 NuGet packages.

Visual and local Image Classification model training in VS

As introduced at the beginning of this blog post, you can locally train an Image Classification model with the ML.NET API. However, when dealing with image files and image folders, the easiest way to do it is with a visual interface like the one provided by Model Builder in Visual Studio, as you can see in the image below:

To train an Image Classifier model with Model Builder, you simply select the folder that holds the images to use for training and evaluation, then start training. The folder must be structured with one sub-folder per image class. When training finishes, you get generated C# code for inference/predictions. You can even get C# code for training, if you want to train from other environments like CI pipelines. It’s that easy!
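Model Builder does this folder scanning for you. If you later train from C# code (for example in a CI pipeline), you need the same convention: each sub-folder name is the label for the images inside it.

The sketch below is a hypothetical helper, not Model Builder’s generated code. The type names and the list of accepted file extensions are assumptions.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Linq;

// Hypothetical row type: one image file and its class label
public sealed class ImageLabelPair
{
    public string ImagePath { get; set; }
    public string Label { get; set; }
}

public static class FolderDataset
{
    // Assumed set of image file extensions to include
    private static readonly string[] ImageExtensions = { ".jpg", ".jpeg", ".png", ".bmp", ".gif" };

    // Each sub-folder of rootFolder is one class; the sub-folder name becomes the label.
    public static IEnumerable<ImageLabelPair> Load(string rootFolder)
    {
        foreach (string classFolder in Directory.GetDirectories(rootFolder).OrderBy(d => d))
        {
            string label = new DirectoryInfo(classFolder).Name;
            foreach (string file in Directory.GetFiles(classFolder).OrderBy(f => f))
            {
                // Skip non-image files such as notes or metadata
                if (ImageExtensions.Contains(Path.GetExtension(file).ToLowerInvariant()))
                    yield return new ImageLabelPair { ImagePath = file, Label = label };
            }
        }
    }
}
```

The resulting sequence can be passed to `mlContext.Data.LoadFromEnumerable(...)` to obtain an IDataView for training.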

- Check further details on Model Builder and Image classification in this blog post: ML.NET Model Builder Updates

Try ML.NET and Model Builder today!

- Get started with ML.NET here.

- Get started with Model Builder here.

- Refer to documentation for tutorials and more resources.

- Learn from sample apps targeting a variety of scenarios.

- Watch free ML.NET videos in the ML.NET YouTube playlist.

We are excited to release these updates for you and we look forward to seeing what you will build with ML.NET. If you have any questions or feedback, you can ask here in the comments of this blog post or in the ML.NET repo on GitHub.

Happy coding!

The ML.NET team.

This blog was authored by Cesar de la Torre with additional contributions from the ML.NET team.

Hello!

How can I use ML.NET on an ARM-based platform (like a Raspberry Pi 4 or a Jetson Nano)?

I want to try developing a program on a Raspberry Pi 4 or a Jetson Nano.

I ran into some problems on the Raspberry Pi 4, such as some DLLs not being found (like CPUNativeMath.dll). Maybe this DLL is not compiled for ARM.

If there is any way to solve this problem, or any sample that runs on an ARM-based platform, please tell me. Thanks

How can I train a neural network to solve a prediction problem with ML.NET?

Hi! Great job! I’m really having fun with the builder, and now with the samples in preview (on GitHub). I’m now wondering how to choose the right GPU. I came across this blog https://www.pugetsystems.com/labs/hpc/TensorFlow-Performance-with-1-4-GPUs----RTX-Titan-2080Ti-2080-2070-GTX-1660Ti-1070-1080Ti-and-Titan-V-1386/ and it led me to two questions:

1) Can we specify FP16 for ResnetV250 in the ImageClassificationTrainer.Options to benefit from the RTX tensor cores?

2) Do we have to do something special to use multiple GPUs, or does SciSharp.TensorFlow.Redist-Windows-GPU detect them automatically?

If I should put these questions somewhere else, I would appreciate it if you could redirect me.

thanks!!

GPU is supported in ML.NET Image Classification API (your C# code), but still not when using Visual Studio Model Builder in local environment (your machine).

In order to see how to use GPU check out this blog post and this sample where I explained the steps:

Blog Post: https://devblogs.microsoft.com/cesardelatorre/training-image-classification-recognition-models-based-on-deep-learning-transfer-learning-with-ml-net/

Sample app: https://github.com/dotnet/machinelearning-samples/tree/master/samples/csharp/getting-started/DeepLearning_ImageClassification_Training

Hi Cesar! sorry for asking you again about these two topics:

1) Can we use multiple GPUs with ML.NET?

2) Can we configure it to use FP16 instead of FP32 (in case that has not already changed) in Image Classification?

I would appreciate it a lot if you could help me to figure it out.

thanks and regards!

Jose

Hi Cesar, thanks for your answer. I was already able to use the GPU with that sample. I’m now trying to figure out whether I can use FP16 in the options, and how to leverage multiple GPUs. About FP16, I’ve found this link https://towardsdatascience.com/rtx-2060-vs-gtx-1080ti-in-deep-learning-gpu-benchmarks-cheapest-rtx-vs-most-expensive-gtx-card-cd47cd9931d2 that shows how to do it with PyTorch + fastai, and I’m wondering how to apply it in my C# project.

regards!!

Hello,

I have a question about YOLOv3.

I studied the ML.NET sample: https://github.com/dotnet/machinelearning-samples/tree/master/samples/csharp/getting-started/DeepLearning_ObjectDetection_Onnx

and modified the pipeline:

[code snippet not preserved]

When I predict data, I don’t know how to specify the three output layer IDs:

[code snippet not preserved]

Please give me some guidance, thank you very much again!

I downloaded the YOLOv3 model from https://github.com/onnx/models

and used Netron to get the output layers.

If you want to get outputs from multiple columns, you can use a PredictionEngine. An example of how to use it is here:

https://github.com/dotnet/machinelearning-samples/tree/master/samples/csharp/getting-started/DeepLearning_ImageClassification_TensorFlow

And specifically, the line where the PredictionEngine is defined is here:

https://github.com/dotnet/machinelearning-samples/blob/master/samples/csharp/getting-started/DeepLearning_ImageClassification_TensorFlow/ImageClassification/ModelScorer/TFModelScorer.cs#L76

In your case, the class for predictions would be defined something like this:

[code snippet not preserved]

(not sure I got the types of outputs correctly, but this is the general idea).

You can then call the Predict API on the PredictionEngine, like this:

https://github.com/dotnet/machinelearning-samples/blob/master/samples/csharp/getting-started/DeepLearning_ImageClassification_TensorFlow/ImageClassification/ModelScorer/TFModelScorer.cs#L97
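Here is a rough sketch of the approach described in this answer, assuming the Microsoft.ML.OnnxTransformer package. You list all three output names in `ApplyOnnxModel`, then read them back through one prediction class that has a `[ColumnName]` field per output.

The input and output names below are hypothetical placeholders; use the names Netron shows for your model. If the model has more inputs, list them all in `inputColumnNames`. The field types are assumptions, as noted above.

```csharp
using Microsoft.ML;
using Microsoft.ML.Data;

// Hypothetical tensor names: replace them with the real ones from Netron
public static class YoloColumns
{
    public const string Input = "INPUT_NAME";
    public const string Boxes = "BOXES_OUTPUT_NAME";
    public const string Scores = "SCORES_OUTPUT_NAME";
    public const string Indices = "INDICES_OUTPUT_NAME";
}

// One field per ONNX output; ColumnName maps each field to its output column
public class YoloPrediction
{
    [ColumnName(YoloColumns.Boxes)]
    public float[] Boxes;

    [ColumnName(YoloColumns.Scores)]
    public float[] Scores;

    [ColumnName(YoloColumns.Indices)]
    public int[] Indices; // assumed integer type
}

public static class YoloPipeline
{
    // Requests all three outputs from the ONNX model in a single transform
    public static IEstimator<ITransformer> Create(MLContext mlContext, string modelPath) =>
        mlContext.Transforms.ApplyOnnxModel(
            outputColumnNames: new[] { YoloColumns.Boxes, YoloColumns.Scores, YoloColumns.Indices },
            inputColumnNames: new[] { YoloColumns.Input },
            modelFile: modelPath);
}
```

After fitting the pipeline, `mlContext.Model.CreatePredictionEngine<TInput, YoloPrediction>(model)` returns all three outputs from a single `Predict` call. Here `TInput` stands for your own input class.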


Thank you very much for your comment and sample 😀

Hi!

Could you please share your yolov3 implementation if you have finished it?

There are still a lot of data manipulation tools missing, which makes this impossible to use for certain datasets. For example, there is no stratification (e.g. k-fold cross-validation with stratification) for unbalanced data. Is there a roadmap with dates for future features?

Hello !

How do I use the Resnet V2 50 model (added in GA)?

Is there a sample? Thanks

By default, if not specifying the DNN architecture, it'll use Resnet V2 50.

If you want to specify a selected DNN architecture, you can do it with the optional hyper-parameters, like in the following code, also available in the mentioned sample app in the link above:

https://github.com/dotnet/machinelearning-samples/blob/master/samples/csharp/getting-started/DeepLearning_ImageClassification_Training/ImageClassification.Train/Program.cs#L66

[code snippet not preserved]

Thanks for your reply.

If I don’t want to use custom data to train a model,

can I use the Inception V3 model?

I read this sample https://github.com/dotnet/machinelearning-samples/tree/master/samples/csharp/getting-started/DeepLearning_ImageClassification_TensorFlow/ImageClassification

and did not find the answer.

Thank you for taking the time to answer

Of course, if you just want to use the pre-trained Inception V3 model directly (for scoring, making predictions on the pre-existing Inception V3 classes) without training with your custom data, you can simply use the TensorFlow model like in this ML.NET sample:

https://github.com/dotnet/machinelearning-samples/tree/master/samples/csharp/getting-started/DeepLearning_ImageClassification_TensorFlow

The following blog post explains it all in further detail. What you want to do is the simplest approach, explained at the beginning: no training, just scoring/inference (making predictions) with an existing pre-trained TensorFlow model (Inception V3, in your case):

https://devblogs.microsoft.com/cesardelatorre/training-image-classification-recognition-models-based-on-deep-learning-transfer-learning-with-ml-net/

You can also do a similar approach with existing pre-trained ONNX models, like we do in...

Thank you very much for your information.

This page is helpful to me.

Thanks 😀