ML.NET is an open-source, cross-platform framework (Windows, Linux, macOS) that makes machine learning accessible to .NET developers.

ML.NET lets you create and use machine learning models for common tasks such as sentiment analysis, issue classification, forecasting, recommendations, fraud detection, image classification and more. You can explore these common tasks in our GitHub repo with ML.NET samples.

Today we’re happy to announce the release of ML.NET 0.8. (ML.NET 0.1 was released at //Build 2018). This release focuses on improved support for recommendation scenarios, model explainability in the form of feature importance, debuggability by previewing your in-memory datasets, and API improvements such as caching, filtering, and more.

This blog post provides details about the following topics in the ML.NET 0.8 release:

- New Recommendation scenarios

- Improved debuggability

- Model explainability

- Additional API improvements

New Recommendation Scenarios (e.g. Frequently Bought Together)

Recommender systems produce a list of recommendations for products in a product catalog, songs, movies, and more. Products like Netflix, Amazon, and Pinterest have popularized recommendation scenarios over the last decade.

ML.NET uses approaches based on Matrix Factorization and Field-Aware Factorization Machines for recommendation, which enable the scenarios listed below. In general, Field-Aware Factorization Machines are a more general case of Matrix Factorization and allow you to pass additional metadata.

With ML.NET 0.8 we have added another Matrix Factorization scenario: recommendations based only on co-purchased products, using One Class Matrix Factorization.

| Recommendation Scenarios | Recommended solution | Link to Sample |
| --- | --- | --- |
| Product Recommendations based upon Product Id, Rating, User Id, and additional metadata like Product Description, User Demographics (age, country etc.) | Field Aware Factorization Machines | Since ML.NET 0.3 (Sample here) |
| Product Recommendations based upon Product Id, Rating and User Id only | Matrix Factorization | Since ML.NET 0.7 (Sample here) |
| Product Recommendations based upon Product Id and Co-Purchased Product IDs | One Class Matrix Factorization | New in ML.NET 0.8 (Sample here) |

Yes! Product recommendations are still possible even if you only have historical order purchasing data for your store.

This is a popular scenario as in many situations you might not have ratings available to you.

With historical purchasing data you can still build recommendations by providing your users a list of “Frequently Bought Together” product items.

The snapshot below is from Amazon.com, where it is recommending a set of products based upon the product selected by the user.

We now support this scenario in ML.NET 0.8, and you can try out this sample which performs product recommendations based upon an Amazon Co-purchasing dataset.

Improved debuggability by previewing the data

In most cases, when you start working with your pipeline and loading your dataset, it is very useful to peek at the data that was loaded into an ML.NET DataView, and even to look at it after some intermediate transformation steps to ensure the data is transformed as expected.

First, you can review the schema of your DataView. All you need to do is hover over the IDataView object, expand it, and look for the Schema property.

If you want to look at the actual data loaded in the DataView, follow the steps shown in the animation below.

The steps are:

- While debugging, open a Watch window.

- Enter the variable name of your DataView object (in this case testDataView) and call the Preview() method on it.

- Now, click the rows you want to inspect. That will show you the actual data loaded in the DataView.

By default, the first 100 values are shown in ColumnView and RowView. You can change that by passing the number of rows you are interested in to the Preview() function as an argument, such as Preview(500).

Model explainability

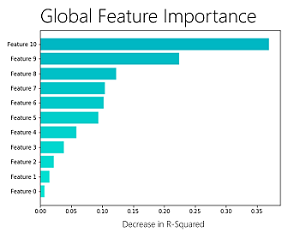

In the ML.NET 0.8 release, we have included APIs for model explainability that we use internally at Microsoft to help machine learning developers better understand the feature importance of models (“Overall Feature Importance”) and create high-capacity models that can be interpreted by others (“Generalized Additive Models”).

Overall feature importance gives a sense of which features are most important for the model overall. When creating Machine Learning models, it is often not enough to simply make predictions and evaluate their accuracy. As illustrated in the previous image, feature importance helps you understand which data features are most valuable to the model for making a good prediction. For instance, when predicting the price of a car, some features, like mileage and make/brand, are more important, while other features, like the car’s color, might have less impact.

The “Overall feature importance” of a model is enabled through a technique named “Permutation Feature Importance” (PFI). PFI measures feature importance by asking the question, “What would the effect on the model be if the values for a feature were set to a random value (permuted across the set of examples)?”.

The advantage of the PFI method is that it is model agnostic — it works with any model that can be evaluated — and it can use any dataset, not just the training set, to compute feature importance.
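To make the idea concrete, here is a minimal, library-free sketch of permutation feature importance for a regression model. It is not ML.NET's implementation: the class and method names (PermutationImportanceSketch, RSquared, Compute) are hypothetical, the model is assumed to be any function from a feature row to a predicted value, and each feature is shuffled only once.

```csharp
using System;
using System.Linq;

// Illustrative sketch only; all names here are hypothetical, not ML.NET APIs.
public static class PermutationImportanceSketch
{
    // R-squared of predictions against labels.
    public static double RSquared(double[] labels, double[] predictions)
    {
        double mean = labels.Average();
        double ssTot = labels.Sum(y => (y - mean) * (y - mean));
        double ssRes = labels.Zip(predictions, (y, p) => (y - p) * (y - p)).Sum();
        return 1.0 - ssRes / ssTot;
    }

    // For each feature, shuffle that column across rows, re-score the model,
    // and report the change in R-squared relative to the unshuffled baseline.
    public static double[] Compute(
        Func<double[], double> predict, double[][] rows, double[] labels, int seed = 0)
    {
        var rng = new Random(seed);
        double baseline = RSquared(labels, rows.Select(predict).ToArray());
        int featureCount = rows[0].Length;
        var deltas = new double[featureCount];

        for (int f = 0; f < featureCount; f++)
        {
            // Fisher-Yates shuffle of the row order, used for this feature only.
            int[] order = Enumerable.Range(0, rows.Length).ToArray();
            for (int i = order.Length - 1; i > 0; i--)
            {
                int j = rng.Next(i + 1);
                (order[i], order[j]) = (order[j], order[i]);
            }

            // Copy every row, replacing feature f with the value from another row.
            double[][] permuted = rows
                .Select((row, i) =>
                {
                    var copy = (double[])row.Clone();
                    copy[f] = rows[order[i]][f];
                    return copy;
                })
                .ToArray();

            double score = RSquared(labels, permuted.Select(predict).ToArray());
            deltas[f] = score - baseline; // more negative means more important
        }
        return deltas;
    }
}
```

A feature the model never reads gets a change of exactly zero, because shuffling it cannot alter any prediction. A feature the model relies on typically gets a negative change. In practice you would likely average over several shuffles to reduce noise.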

You can compute feature importances with PFI using code like the following:

// Compute the feature importance using PFI

var permutationMetrics = mlContext.Regression.PermutationFeatureImportance(model, data);

// Get the feature names from the training set

var featureNames = data.Schema.GetColumns()

.Select(tuple => tuple.column.Name) // Get the column names

.Where(name => name != labelName) // Drop the Label

.ToArray();

// Write out the feature names and their importance to the model's R-squared value

for (int i = 0; i < featureNames.Length; i++)

Console.WriteLine($"{featureNames[i]}\t{permutationMetrics[i].rSquared:G4}");

The console output would look similar to the following:

Console output:

Feature | Model Weight | Change in R-Squared

--------------------------------------------------------

RoomsPerDwelling 50.80 -0.3695

EmploymentDistance -17.79 -0.2238

TeacherRatio -19.83 -0.1228

TaxRate -8.60 -0.1042

NitricOxides -15.95 -0.1025

HighwayDistance 5.37 -0.09345

CrimesPerCapita -15.05 -0.05797

PercentPre40s -4.64 -0.0385

PercentResidental 3.98 -0.02184

CharlesRiver 3.38 -0.01487

PercentNonRetail -1.94 -0.007231

Note that in the current ML.NET v0.8, PFI only works for binary classification and regression models, but we’ll expand to additional ML tasks in upcoming versions.

See the sample in the ML.NET repository for a complete example using PFI to analyze the feature importance of a model.

Generalized Additive Models (GAMs) have very explainable predictions. They are as easy to understand as linear models but are more flexible, can perform better, and can also be visualized or plotted for easier analysis.

An example of how to train a GAM, then inspect and interpret the results, can be found here.

Additional API improvements in ML.NET 0.8

In this release we have also added other enhancements to our APIs, which help with filtering rows in DataViews, caching data, and saving data to the IDataView (IDV) binary format. You can learn about these features here.

Filtering rows in a DataView

Sometimes you might need to filter the data used for training a model. For example, you might need to remove rows where a certain column’s value falls below or above certain boundaries, for instance because those rows are outliers.

This can now be done with additional filters such as the FilterByColumn() API, as in the following code from this sample app at ML.NET samples. Here we keep only payment rows between $1 and $150 because, for this particular scenario, amounts higher than $150 are considered “outliers” (extreme data distorting the model) and amounts lower than $1 might be errors in the data:

IDataView trainingDataView = mlContext.Data.FilterByColumn(baseTrainingDataView, "FareAmount", lowerBound: 1, upperBound: 150);

Thanks to the DataView preview in Visual Studio mentioned above, you can now inspect the filtered data in your DataView.

Additional sample code can be found here.

Caching APIs

Some estimators iterate over the data multiple times. Instead of always reading from file, you can choose to cache the data, which can sometimes speed up training.

A good example is training with an OVA (One Versus All) trainer, which runs multiple iterations against the same data. By eliminating the need to read data from disk multiple times, you can reduce model training time by up to 50%:

var dataProcessPipeline = mlContext.Transforms.Conversion.MapValueToKey("Area", "Label")

.Append(mlContext.Transforms.Text.FeaturizeText("Title", "TitleFeaturized"))

.Append(mlContext.Transforms.Text.FeaturizeText("Description", "DescriptionFeaturized"))

.Append(mlContext.Transforms.Concatenate("Features", "TitleFeaturized", "DescriptionFeaturized"))

//Example Caching the DataView

.AppendCacheCheckpoint(mlContext)

.Append(mlContext.BinaryClassification.Trainers.AveragedPerceptron(DefaultColumnNames.Label,

DefaultColumnNames.Features,

numIterations: 10));

This example code is implemented, and its execution time measured, in this sample app at the ML.NET Samples repo.

An additional test example can be found here.
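The effect of a cache checkpoint can be illustrated without ML.NET. The following is a rough sketch in plain C# in which every name (CachingSketch, ReadRows, Train, Compare) is hypothetical: a lazily read source stands in for the data file, a toy multi-pass loop stands in for the trainer, and materializing the rows into a list stands in for the cache. It only counts how often the source is read and does not measure real training time.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Illustrative sketch only; all names here are hypothetical, not ML.NET APIs.
public static class CachingSketch
{
    // Counts how many times the simulated file is read from the start.
    public static int SourceReads;

    // Stand-in for a lazily read data file: every enumeration is a fresh read.
    public static IEnumerable<double> ReadRows()
    {
        SourceReads++;
        for (int i = 1; i <= 5; i++)
            yield return i;
    }

    // Toy multi-pass "trainer": it walks the whole dataset once per iteration.
    public static double Train(IEnumerable<double> data, int iterations)
    {
        double weight = 0;
        for (int it = 0; it < iterations; it++)
            foreach (double x in data)
                weight += 0.01 * (x - weight);
        return weight;
    }

    // Returns how often the source was read without and with a cache.
    public static (int uncached, int cached) Compare(int iterations)
    {
        SourceReads = 0;
        Train(ReadRows(), iterations);          // re-reads the source every pass
        int uncached = SourceReads;

        SourceReads = 0;
        Train(ReadRows().ToList(), iterations); // read once, then reuse memory
        int cached = SourceReads;

        return (uncached, cached);
    }
}
```

Calling CachingSketch.Compare(10) returns (10, 1): ten reads of the source without the cache and a single read with it. The trade-off is memory, since the cached rows must fit in RAM, which is one reason caching is something you opt into rather than a default.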

Enabled saving and loading data in IDataView (IDV) binary format for improved performance

It is sometimes useful to save data after it has been transformed. For example, you might have featurized all the text into sparse vectors and want to perform repeated experimentation with different trainers without continuously repeating the data transformation.

IDV format is a binary dataview file format provided by ML.NET.

Saving and loading files in IDV format is often significantly faster than using a text format because it is compressed.

In addition, because the schema is already stored in the file, you don’t need to specify the column types as you do when using a regular TextLoader, so the code is simpler as well as faster.

Reading a binary data file can be done using this simple line of code:

mlContext.Data.ReadFromBinary("pathToFile");

Writing a binary data file can be done using this code:

mlContext.Data.SaveAsBinary("pathToFile");

Enabled stateful prediction engine for time series problems such as anomaly detection

ML.NET 0.7 enabled anomaly detection scenarios based on Time Series. However, the prediction engine was stateless, which means that every time you wanted to figure out whether the latest data point was anomalous, you needed to provide historical data as well. This is unnatural.

The prediction engine can now keep state of time series data seen so far, so you can now get predictions by just providing the latest data point. This is enabled by using CreateTimeSeriesPredictionFunction() instead of CreatePredictionFunction().

Example usage can be found here
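To illustrate what "keeping state" means here, the following is a minimal sketch of a stateful detector in plain C#. It does not use ML.NET or its anomaly detection algorithms: the class StatefulSpikeDetector, its parameters, and the simple rule it applies (flag a point that lies more than a threshold number of standard deviations from the mean of a sliding window) are all assumptions chosen for brevity.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Illustrative sketch only; a hypothetical detector, not ML.NET's algorithm.
public sealed class StatefulSpikeDetector
{
    private readonly Queue<double> _window = new Queue<double>();
    private readonly int _windowSize;
    private readonly double _threshold;

    public StatefulSpikeDetector(int windowSize = 10, double threshold = 3.0)
    {
        _windowSize = windowSize;
        _threshold = threshold;
    }

    // Scores one new point against the history kept inside the detector,
    // then adds the point to that history.
    public bool IsAnomaly(double value)
    {
        bool anomaly = false;
        if (_window.Count >= 2)
        {
            double mean = _window.Average();
            double variance = _window.Sum(v => (v - mean) * (v - mean)) / _window.Count;
            double std = Math.Sqrt(variance);
            anomaly = std > 0 && Math.Abs(value - mean) > _threshold * std;
        }

        // Update the state: remember the newest point, forget the oldest.
        _window.Enqueue(value);
        if (_window.Count > _windowSize)
            _window.Dequeue();

        return anomaly;
    }
}
```

The caller passes only the latest data point to IsAnomaly, and the detector supplies the history itself. A stateless version would need the whole window as an argument on every call, which is the inconvenience described above.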

Get started!

If you haven’t already, get started with ML.NET here.

Next, explore some other resources:

- Tutorials and resources at the Microsoft Docs ML.NET Guide

- Code samples at the machinelearning-samples GitHub repo

- Important ML.NET concepts for understanding the new API are introduced here

- “How to” guides that show how to use these APIs for a variety of scenarios can be found here

We would appreciate your feedback: please file issues with any suggestions or enhancements in the ML.NET GitHub repo to help us shape ML.NET and make .NET a great platform of choice for Machine Learning.

Thanks,

The ML.NET Team.

This blog was authored by Cesar de la Torre, Gal Oshri, and Rogan Carr, with additional contributions from the ML.NET team.
