One giant leap for gamers!

It’s been less than 18 months since we announced DirectX 12 at GDC 2014. Since that time, we’ve been working tirelessly with game developers and graphics card vendors to deliver an API that offers more control over graphics hardware than ever before. When we set out to design DirectX 12, game developers gave us a daunting set of requirements:

1) Dramatically reduce CPU overhead while increasing GPU performance

2) Work across the Windows and Xbox One ecosystem

3) Provide support for all of the latest graphics hardware features

Today, we’re excited to announce the fulfillment of these ambitious goals! With the release of Windows 10, DirectX 12 is now available for everyone to use, and the first DirectX 12 content will arrive in the coming weeks. For a personal message from our Vice President of Development, click here.

What will DirectX 12 do for me?

We’re very pleased to see all of the excitement from gamers about DirectX 12! This excitement has led to a steady stream of articles, tweets, and YouTube videos discussing DirectX 12 and what it means to gamers. We’ve seen articles questioning whether DirectX 12 will provide substantial benefits, and we’ve seen articles that promise that with DirectX 12, the 3DFX Voodoo card you have gathering dust in your basement will allow your games to cross the Uncanny Valley.

Let’s set the record straight. We expect that games that use DirectX 12 will:

1) Be able to write to one graphics API for PCs and Xbox One

2) Reduce CPU overhead by up to 50% while scaling across all CPU cores

3) Improve GPU performance by up to 20%

4) Realize more benefits over time as game developers learn how to use the new API more efficiently

To elaborate, DirectX 12 is a paradigm shift for game developers, providing them with a new way to structure graphics workloads. These new techniques can lead to a tremendous increase in expressiveness and optimization opportunities. Typically, when game developers decide to support DirectX 12 in their engine, they will do so in phases. Rather than completely overhauling their engine to take full advantage of every aspect of the API, they will start with their DirectX 11 based engine and then port it over to DirectX 12. We expect such engine developers to achieve up to a 50% reduction in CPU overhead while improving GPU performance by up to 20%. We say “up to” because every game is different – the more of the various DirectX 12 features (see below) a game uses, the more optimization it can expect.

Over time, we expect that games will build DirectX 12’s capabilities into the design of the game itself, which will lead to even more impressive gains. The game “Ashes of the Singularity” is a good example of a game that bakes DirectX 12’s capabilities into the design itself. The result: an RTS game that can show tens of thousands of actors engaged in dozens of battles simultaneously.

Speaking of games, support for DirectX 12 is currently available to the public in an early experimental mode in Unity 5.2 Beta and in Unreal 4.9 Preview, so the many games powered by these engines will soon run on DirectX 12. In addition to games based on these engines, we’re on pace for the fastest adoption of a new DirectX technology that we’ve had this millennium – so stay tuned for lots of game announcements!

What hardware should I buy?

The great news is that, because we’ve designed DirectX 12 to work broadly across a wide variety of hardware, roughly 2 out of 3 gamers will not need to buy any new hardware at all. If you have supported hardware, simply get your free upgrade to Windows 10 and you’re good to go.

However, as a team full of gamers, our professional (and clearly unbiased) opinion is that the upcoming DirectX 12 games are an excellent excuse to upgrade your hardware. Because DirectX 12 makes all supported hardware better, you can rest assured that whether you spend $100 or $1000 on a graphics card, you will benefit from DirectX 12.

But how do you know which card is best for your gaming dollar? How do you make sense of the various selling points that you see from the various graphics hardware vendors? Should you go for a higher “feature level” or should you focus on another advertised feature such as async compute or support for a particular bind model?

Most of these developer-focused features do provide some incremental benefit to users and more information on each of these can be found later in this post. However, generally speaking, the most important thing is to simply get a card that supports DirectX 12. Beyond that, we would recommend focusing on how the different cards actually perform on real games and benchmarks. This gives a much more reliable view of what kind of performance to expect.

DirectX 11 game performance data is widely available today, and we expect DirectX 12 game performance data to be available in the very near future. Combined, this performance data is a great way to inform your purchasing decisions.

Technical Details: (note much of this content is taken from earlier blogs)

CPU Overhead Reduction and Multicore Scaling

Pipeline state objects

Direct3D 11 allows pipeline state manipulation through a large set of orthogonal objects. For example, input assembler state, pixel shader state, rasterizer state, and output merger state are all independently modifiable. This provides a convenient, relatively high-level representation of the graphics pipeline, but it doesn’t map very well to modern hardware. This is primarily because there are often interdependencies between the various states. For example, many GPUs combine pixel shader and output merger state into a single hardware representation, but because the Direct3D 11 API allows these to be set separately, the driver cannot resolve things until it knows the state is finalized, which isn’t until draw time. This delays hardware state setup, which means extra overhead and fewer maximum draw calls per frame.

Direct3D 12 addresses this issue by unifying much of the pipeline state into immutable pipeline state objects (PSOs), which are finalized on creation. This allows hardware and drivers to immediately convert the PSO into whatever hardware native instructions and state are required to execute GPU work. Which PSO is in use can still be changed dynamically, but to do so the hardware only needs to copy the minimal amount of pre-computed state directly to the hardware registers, rather than computing the hardware state on the fly. This means significantly reduced draw call overhead, and many more draw calls per frame.
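The difference between resolving state at draw time and resolving it once at creation can be illustrated with a toy model. The following is a minimal sketch in standard C++, not the Direct3D 12 API: `PipelineDesc`, `PipelineState`, `bakeHardwareState`, and the two draw functions are hypothetical names, and the "hardware state" is assumed to be just a packed integer.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical, simplified pipeline description (not a D3D12 struct).
struct PipelineDesc {
    std::uint8_t pixelShaderId;
    std::uint8_t blendMode;
    std::uint8_t rasterizerMode;
};

// Stand-in for the driver work of resolving interdependent states into
// one hardware-native value. bakeCount records how often it runs.
inline std::uint32_t bakeHardwareState(const PipelineDesc& d, int& bakeCount) {
    ++bakeCount;
    return (std::uint32_t(d.pixelShaderId) << 16) |
           (std::uint32_t(d.blendMode) << 8) |
           std::uint32_t(d.rasterizerMode);
}

// Immutable once constructed: the expensive resolve happens here, once.
class PipelineState {
public:
    PipelineState(const PipelineDesc& desc, int& bakeCount)
        : hardwareState_(bakeHardwareState(desc, bakeCount)) {}
    std::uint32_t hardwareState() const { return hardwareState_; }
private:
    const std::uint32_t hardwareState_;
};

// Drawing with a pre-baked PSO only reads the precomputed value.
inline std::uint32_t drawWithPso(const PipelineState& pso) {
    return pso.hardwareState();
}

// Direct3D 11-style model: states are separately mutable, so the resolve
// cannot happen before draw time and is repeated for every draw.
inline std::uint32_t drawWithLooseState(const PipelineDesc& current,
                                        int& bakeCount) {
    return bakeHardwareState(current, bakeCount);
}
```

In this model, a hundred draws with one `PipelineState` trigger a single bake, while a hundred calls to `drawWithLooseState` trigger a hundred.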

Command lists and bundles

In Direct3D 11, all work submission is done via the immediate context, which represents a single stream of commands that go to the GPU. To achieve multithreaded scaling, games also have deferred contexts available to them, but like the Direct3D 11 pipeline state model, deferred contexts do not map perfectly to hardware, and so relatively little work can be done in them.

Direct3D 12 introduces a new model for work submission based on command lists that contain the entirety of information needed to execute a particular workload on the GPU. Each new command list contains information such as which PSO to use, what texture and buffer resources are needed, and the arguments to all draw calls. Because each command list is self-contained and inherits no state, the driver can pre-compute all necessary GPU commands up-front and in a free-threaded manner. The only serial process necessary is the final submission of command lists to the GPU via the command queue, which is a highly efficient process.
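As a rough illustration of this submission model, the sketch below records several self-contained command lists on separate threads and then submits them in one serial step. It is a toy model in standard C++, not the Direct3D 12 API: `Command`, `CommandList`, `recordInParallel`, and `submit` are hypothetical names chosen for this example.

```cpp
#include <cassert>
#include <cstddef>
#include <thread>
#include <vector>

// Hypothetical toy command; it only mimics the kind of data a list holds.
struct Command {
    int psoId;        // which pipeline state to use
    int resourceId;   // which texture or buffer is needed
    int vertexCount;  // draw-call argument
};

// Self-contained: everything needed to execute is stored in the list.
using CommandList = std::vector<Command>;

// Each worker thread records into its own list. No mutable state is
// shared between lists, so no locks are needed while recording.
inline std::vector<CommandList> recordInParallel(std::size_t listCount,
                                                 int drawsPerList) {
    assert(drawsPerList >= 0);
    std::vector<CommandList> lists(listCount);
    std::vector<std::thread> workers;
    for (std::size_t i = 0; i < listCount; ++i) {
        workers.emplace_back([&lists, i, drawsPerList] {
            for (int d = 0; d < drawsPerList; ++d) {
                lists[i].push_back(Command{static_cast<int>(i), d, 3});
            }
        });
    }
    for (std::thread& w : workers) {
        w.join();
    }
    return lists;
}

// The only serial step: append finished lists, in order, to the queue.
inline std::vector<Command> submit(const std::vector<CommandList>& lists) {
    std::vector<Command> queue;
    for (const CommandList& list : lists) {
        queue.insert(queue.end(), list.begin(), list.end());
    }
    return queue;
}
```

Recording scales with the number of threads because each list is independent; only `submit` has to run on a single thread.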

In addition to command lists, Direct3D 12 also introduces a second level of work pre-computation, bundles. Unlike command lists which are completely self-contained and typically constructed, submitted once, and discarded, bundles provide a form of state inheritance which permits reuse. For example, if a game wants to draw two character models with different textures, one approach is to record a command list with two sets of identical draw calls. But another approach is to “record” one bundle that draws a single character model, then “play back” the bundle twice on the command list using different resources. In the latter case, the driver only has to compute the appropriate instructions once, and creating the command list essentially amounts to two low-cost function calls.
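The record-once, play-back-twice idea from the character model example can be sketched as follows. This is a toy model in standard C++ under simplifying assumptions, not the Direct3D 12 bundle interface: `Bundle`, `DrawCall`, `recordDraw`, and `playBack` are hypothetical names, and the inherited state is reduced to a single texture identifier.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical toy draw call appended to a command list.
struct DrawCall {
    std::string mesh;
    int textureId;  // filled in at playback time
};

class Bundle {
public:
    // "Record": store the draw sequence once, without a bound texture.
    void recordDraw(const std::string& mesh) { meshes_.push_back(mesh); }

    // "Play back": append the recorded draws to a command list, inheriting
    // the texture that the calling command list currently has bound.
    void playBack(std::vector<DrawCall>& commandList, int boundTexture) const {
        assert(!meshes_.empty());  // nothing recorded would be an app bug
        for (const std::string& mesh : meshes_) {
            commandList.push_back(DrawCall{mesh, boundTexture});
        }
    }

private:
    std::vector<std::string> meshes_;
};
```

Drawing the two characters then amounts to calling `playBack` twice on the same bundle with a different texture each time.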

Descriptor heaps and tables

Resource binding in Direct3D 11 is highly abstracted and convenient, but leaves many modern hardware capabilities underutilized. In Direct3D 11, games create “view” objects of resources, then bind those views to several “slots” at various shader stages in the pipeline. Shaders in turn read data from those explicit bind slots which are fixed at draw time. This model means that whenever a game wants to draw using different resources, it must re-bind different views to different slots, and call draw again. This is yet another case of overhead that can be eliminated by fully utilizing modern hardware capabilities.

Direct3D 12 changes the binding model to match modern hardware and significantly improve performance. Instead of requiring standalone resource views and explicit mapping to slots, Direct3D 12 provides a descriptor heap into which games create their various resource views. This provides a mechanism for the GPU to directly write the hardware-native resource description (descriptor) to memory up-front. To declare which resources are to be used by the pipeline for a particular draw call, games specify one or more descriptor tables which represent sub-ranges of the full descriptor heap. As the descriptor heap has already been populated with the appropriate hardware-specific descriptor data, changing descriptor tables is an extremely low-cost operation.
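One way to picture why switching descriptor tables is cheap is to treat the heap as an array and a table as an offset plus a count. The sketch below is a toy model in standard C++ and makes that assumption explicit; `DescriptorHeap`, `DescriptorTable`, and `resolve` are hypothetical names, and a "descriptor" is just an integer here.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// In this toy model a descriptor is just an integer handle.
using Descriptor = int;

// The heap is one contiguous array that is populated up-front.
struct DescriptorHeap {
    std::vector<Descriptor> slots;
};

// A table is only a sub-range of the heap: an offset and a count.
struct DescriptorTable {
    std::size_t offset;
    std::size_t count;
};

// Looking up a resource is an index computation. Nothing is re-created
// or copied when a draw switches to a different table.
inline Descriptor resolve(const DescriptorHeap& heap,
                          const DescriptorTable& table,
                          std::size_t indexInTable) {
    assert(indexInTable < table.count);
    assert(table.offset + table.count <= heap.slots.size());
    return heap.slots[table.offset + indexInTable];
}
```

Two draws that need different resources can share one heap and differ only in the small `DescriptorTable` value they pass.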

In addition to the improved performance offered by descriptor heaps and tables, Direct3D 12 also allows resources to be dynamically indexed in shaders, providing unprecedented flexibility and unlocking new rendering techniques. As an example, modern deferred rendering engines typically encode a material or object identifier of some kind to the intermediate g-buffer. In Direct3D 11, these engines must be careful to avoid using too many materials, as including too many in one g-buffer can significantly slow down the final render pass. With dynamically indexable resources, a scene with a thousand materials can be finalized just as quickly as one with only ten.
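The g-buffer example can be sketched on the CPU to show why the material count stops mattering once resources are indexable. This is a toy model in standard C++, not shader code: `Material` and `resolveGBuffer` are hypothetical names, and a material is reduced to a single integer value.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical material with a single value standing in for its data.
struct Material {
    int albedo;
};

// Final pass: each g-buffer pixel stores a material identifier, which is
// used directly as an index. The per-pixel cost is one lookup, whether
// the table holds ten materials or a thousand.
inline std::vector<int> resolveGBuffer(
        const std::vector<std::size_t>& materialIds,
        const std::vector<Material>& materials) {
    std::vector<int> shaded;
    shaded.reserve(materialIds.size());
    for (std::size_t id : materialIds) {
        assert(id < materials.size());
        shaded.push_back(materials[id].albedo);
    }
    return shaded;
}
```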

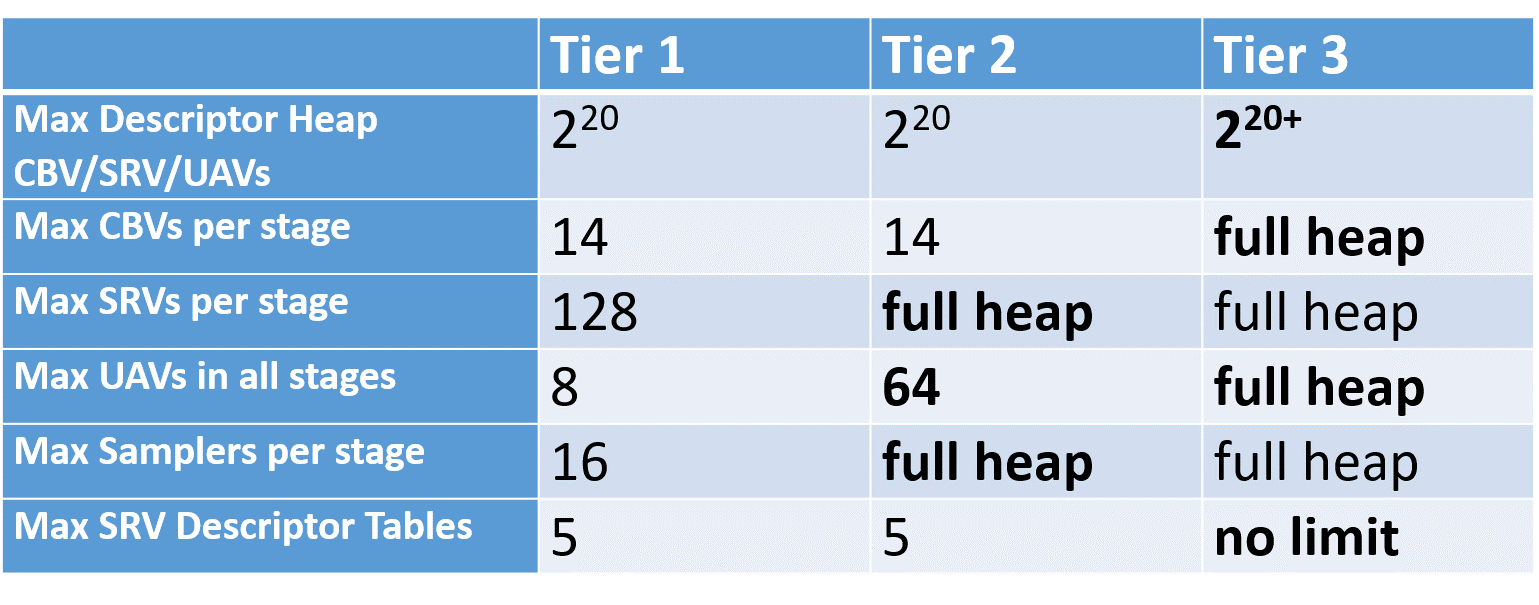

Modern hardware has a variety of different capabilities with respect to the total number of descriptors that can reside in a descriptor heap, as well as the number of specific descriptors that can be referenced simultaneously in a particular draw call. With DirectX 12, developers can take advantage of hardware with more advanced binding capabilities by using our tiered binding system. Developers who take advantage of the higher binding tiers can use more advanced shading algorithms which lead to reduced GPU cost and higher rendering quality.

Increasing GPU Performance

GPU Efficiency

Currently, there are three key areas where GPU improvements can be made that weren’t possible before: explicit resource transitions, parallel GPU execution, and GPU-generated workloads. Let’s take a quick look at all three.

Explicit resource transitions

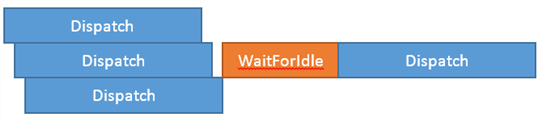

In DirectX 12, the app has the power of identifying when resource state transitions need to happen. For instance, a driver in the past would have to ensure all writes to a UAV are executed in order by inserting ‘Wait for Idle’ commands after each dispatch with resource barriers.

If the app knows that certain dispatches can run out of order, the ‘Wait for Idle’ commands can be removed.

Using the new Resource Barrier API, the app can also specify a ‘begin’ and ‘end’ transition while promising not to use the resource while in transition. Drivers can use this information to eliminate redundant pipeline stalls and cache flushes.
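The ‘begin’ and ‘end’ contract can be sketched as a small state machine. The following is a toy model in standard C++ that only checks the app's promise; it is not the Resource Barrier API. `TrackedResource`, `ResourceState`, and the method names are hypothetical, and the three states are an assumed subset chosen for illustration.

```cpp
#include <cassert>
#include <stdexcept>

// Assumed, simplified set of states for this example.
enum class ResourceState { RenderTarget, ShaderResource, UnorderedAccess };

class TrackedResource {
public:
    explicit TrackedResource(ResourceState initial) : state_(initial) {}

    // Begin half: the app promises not to touch the resource until the
    // matching end half has been issued.
    void beginTransition(ResourceState before, ResourceState after) {
        if (inTransition_ || state_ != before) {
            throw std::logic_error("transition does not match current state");
        }
        pendingState_ = after;
        inTransition_ = true;
    }

    // End half: the new state becomes visible.
    void endTransition() {
        if (!inTransition_) {
            throw std::logic_error("no transition in flight");
        }
        state_ = pendingState_;
        inTransition_ = false;
    }

    // Using the resource mid-transition, or in the wrong state, is an
    // app bug in this model.
    void use(ResourceState required) const {
        if (inTransition_ || state_ != required) {
            throw std::logic_error("resource not in required state");
        }
    }

    ResourceState state() const { return state_; }

private:
    ResourceState state_;
    ResourceState pendingState_ = ResourceState::RenderTarget;
    bool inTransition_ = false;
};
```

Other work can be scheduled between `beginTransition` and `endTransition`, which is the window a driver could use to avoid a stall.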

Parallel GPU execution

Modern hardware can run multiple workloads on multiple ‘engines’. Three types of engines are exposed in DirectX 12: 3D, Compute, and Copy. It is up to the app to manage dependencies between queues.

We are really excited about two notable compute engine scenarios that can take advantage of this GPU parallelism: long running but low priority compute work; and tightly interleaved 3D/Compute work within a frame. An example would be compute-heavy dispatches during shadow map generation.

Another notable use case is texture streaming, where a copy engine can move data around without blocking the main 3D engine, which is especially valuable when going across PCI-E. This feature is often referred to in marketing as “async compute.”
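Because the app manages dependencies between queues, it helps to see how a signal on one queue can unblock a wait on another. The sketch below is a sequential simulation in standard C++, not real GPU parallelism and not the Direct3D 12 API: `Op`, `runQueues`, and the single shared fence counter are hypothetical simplifications.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical queue operation: do work, signal the fence, or wait on it.
struct Op {
    enum Kind { Work, Signal, Wait } kind;
    std::string name;  // used by Work
    int value;         // fence value used by Signal and Wait
};

// Runs the queues round-robin, one op per queue per pass. A Wait blocks
// its own queue until the shared fence has reached the requested value.
inline std::vector<std::string> runQueues(std::vector<std::vector<Op>> queues) {
    std::vector<std::size_t> next(queues.size(), 0);
    std::vector<std::string> executed;
    int fence = 0;
    bool progressed = true;
    while (progressed) {
        progressed = false;
        for (std::size_t q = 0; q < queues.size(); ++q) {
            if (next[q] >= queues[q].size()) continue;  // queue drained
            const Op& op = queues[q][next[q]];
            if (op.kind == Op::Wait && fence < op.value) continue;  // blocked
            if (op.kind == Op::Work) executed.push_back(op.name);
            if (op.kind == Op::Signal) fence = op.value;
            ++next[q];
            progressed = true;
        }
    }
    // Every queue must drain; otherwise a Wait was never signaled.
    for (std::size_t q = 0; q < queues.size(); ++q) {
        assert(next[q] == queues[q].size());
    }
    return executed;
}
```

With a 3D queue that draws shadows, waits on fence value 1, and then draws the scene, and a copy queue that uploads a texture and signals 1, the shadow pass and the upload proceed independently and only the final draw waits for the upload.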

GPU-generated workloads

ExecuteIndirect is a powerful new API for executing GPU-generated Draw/Dispatch workloads that has broad hardware compatibility. Being able to vary things like Vertex/Index buffers, root constants, and inline SRV/UAV/CBV descriptors between invocations enables new scenarios as well as unlocking possible dramatic efficiency improvements.
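The general shape of a GPU-generated workload can be sketched as two steps: one pass writes an argument buffer, and a single call then consumes it. The code below is a CPU-side toy model in standard C++ and does not reproduce the real ExecuteIndirect signature: `DrawArgs`, `Object`, `generateArgs`, and `executeIndirect` are hypothetical, and the culling pass is assumed to run on the GPU in a real engine.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical per-draw argument record that varies between invocations.
struct DrawArgs {
    int vertexBufferId;
    int rootConstant;
    int vertexCount;
};

struct Object {
    int vertexBufferId;
    int vertexCount;
    bool visible;
};

// Stand-in for a GPU culling pass that writes the argument buffer itself,
// so the CPU never has to inspect per-object visibility.
inline std::vector<DrawArgs> generateArgs(const std::vector<Object>& objects) {
    std::vector<DrawArgs> args;
    for (std::size_t i = 0; i < objects.size(); ++i) {
        if (objects[i].visible) {
            args.push_back(DrawArgs{objects[i].vertexBufferId,
                                    static_cast<int>(i),
                                    objects[i].vertexCount});
        }
    }
    return args;
}

// Stand-in for one indirect call: consumes up to maxCommands records and
// returns the total number of vertices drawn.
inline int executeIndirect(const std::vector<DrawArgs>& argBuffer,
                           std::size_t maxCommands) {
    std::size_t n = argBuffer.size() < maxCommands ? argBuffer.size()
                                                   : maxCommands;
    int total = 0;
    for (std::size_t i = 0; i < n; ++i) {
        assert(argBuffer[i].vertexCount >= 0);
        total += argBuffer[i].vertexCount;
    }
    return total;
}
```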

Multiadapter Support

DirectX 12 allows developers to make use of all graphics cards in the system, which opens up a number of exciting possibilities:

1) Developers no longer need to rely on multiple manufacturer-specific code paths to support AFR/SFR for gamers with CrossFire/SLI systems

2) Developers can further optimize for gamers who have CrossFire/SLI systems

Developers can also make use of the integrated graphics adapter, which previously sat idle on gamers’ machines.

Support for new hardware features

DirectX has the notion of “Feature Level” which allows developers to use certain graphics hardware features in a deterministic way across different graphics card vendors. Feature levels were created to reduce complexity for developers. A given “Feature Level” combines a number of different hardware features together, which are then supported (or not supported) by the graphics hardware. If the hardware supports a given “Feature Level” the hardware must support all features in that “Feature Level” and in previous “Feature Levels.” For instance, if hardware supports “Feature Level 11_1”, it must also support “Feature Level 11_0”, “Feature Level 10_1”, and so on. Grouping features together in this way dramatically reduces the number of hardware permutations that developers need to worry about.
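Because each level includes everything in the levels before it, a support check reduces to a single ordered comparison. The sketch below shows that idea in standard C++; the `FeatureLevel` enum and `supports` function are hypothetical stand-ins for this example, not the Direct3D types, and only a few levels are listed.

```cpp
#include <cassert>

// Ordered so that a higher enumerator implies every lower one.
enum class FeatureLevel {
    Level10_1,
    Level11_0,
    Level11_1,
    Level12_0,
    Level12_1
};

// One comparison replaces a per-feature capability check.
inline bool supports(FeatureLevel hardware, FeatureLevel required) {
    return static_cast<int>(hardware) >= static_cast<int>(required);
}

// Example usage: hardware at 12_0 can run an 11_1 code path, but
// hardware at 11_0 cannot run a 12_0 code path.
inline void featureLevelExample() {
    assert(supports(FeatureLevel::Level12_0, FeatureLevel::Level11_1));
    assert(!supports(FeatureLevel::Level11_0, FeatureLevel::Level12_0));
}
```

Note that the comparison says nothing about speed; it only answers whether a required set of features is present.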

“Feature Level” has been a source of much confusion because we named it in a confusing way (we even considered changing the naming scheme as part of the DirectX 12 API release but decided not to, since changing it at this point would create even more confusion). Despite the numeric suffix of “Feature Level ‘11’” or “Feature Level ‘12’”, these numbers are mostly independent of the API version and are not indicative of game performance. For example, it is entirely possible that a “Feature Level 11” GPU could substantially outperform a “Feature Level 12” GPU when running a DirectX 12 game.

With that being said, Windows 10 includes two new “Feature Levels” which are supported on both the DirectX 11 and DirectX 12 APIs (as mentioned earlier, “Feature Level” is mostly independent of the API version).

- Feature Level 12.0
  - Resource Binding Tier 2
  - Tiled Resources Tier 2: Texture3D
  - Typed UAV Tier 1
- Feature Level 12.1
  - Conservative Rasterization Tier 1
  - ROVs

More information on the new rendering features can be found here.

You passed the endurance test!

If you’ve made it this far, you should check out our instructional videos here. Ready to start writing some code? Check out our samples here to help you get started. Oh, and to butcher Churchill – this is the end of the beginning, not the end or the beginning of the end. There is much, much more to come. Stay tuned!
