It is time for DirectX to evolve once again.

From the team that has brought PC and Console gamers the latest in graphics innovation for nearly 25 years, we are beyond pleased to bring gamers DirectX 12 Ultimate, the culmination of the best graphics technology we’ve ever introduced in an unprecedented alignment between PC and Xbox Series X.

When gamers purchase PC graphics hardware with the DX12 Ultimate logo or an Xbox Series X, they can do so with the confidence that their hardware is guaranteed to support ALL next generation graphics hardware features, including DirectX Raytracing, Variable Rate Shading, Mesh Shaders and Sampler Feedback. This mark of quality ensures stellar “future-proof” feature support for next generation games!

Ultimate Graphics Innovation

Microsoft’s Game Stack exists to bring developers the tools they need to create bold, immersive game experiences, and DX12 Ultimate is the ideal tool to amplify gaming graphics. DX12 Ultimate is the result of continual investment in the DirectX 12 platform made over the last five years to ensure that Xbox and Windows 10 remain at the very pinnacle of graphics technology. To further empower game developers to create games with stunning visuals, we enhanced features that are already beginning to transform gaming such as DirectX Raytracing and Variable Rate Shading, and have added new major features such as Mesh Shaders and Sampler Feedback.

Together, these features represent many years of innovation from Microsoft and our partners in the hardware industry. DX12 Ultimate brings them all together in one common bundle, providing developers with a single key to unlock next generation graphics on PC and Xbox Series X.

Of course, even the most powerful features are of limited use without the tools necessary to fully exploit them, so we are pleased to announce that our industry-leading PIX graphics optimization tool and our open-source HLSL compiler will provide game developers with the ability to squeeze every last drop of performance out of an entire ecosystem of DX12 Ultimate hardware.

An Additive Benefit

DirectX 12 Ultimate is fundamentally an additive initiative that provides gamers with assurance that their hardware meets the highest bar for feature support in next-generation games.

It is very important to note that DX12 Ultimate will not impact game compatibility with existing hardware which does not support the entire breadth of DX12 Ultimate features. In fact, next-generation games which use DX12 Ultimate features will continue to run on non-DX12 Ultimate hardware. Though such hardware won’t provide the visual benefits of the new features, it can still provide a very compelling gaming experience on next generation games, depending on the specifics of the hardware.

The PC ecosystem encompasses a broad variety of hardware and DX12 Ultimate makes that ecosystem strictly better with no adverse effect on hardware which does not support DX12 Ultimate!
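As a rough illustration of what "additive" can mean in engine code, the sketch below gates each rendering technique on a per-feature capability flag, so a GPU that lacks a feature falls back to an older path instead of failing. The `GpuCaps` struct, the function names, and the fallback labels are all hypothetical; in a real Direct3D 12 renderer the flags would be filled in from `ID3D12Device::CheckFeatureSupport` at start-up.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical capability flags (not a real D3D12 type). A real renderer
// would populate these from ID3D12Device::CheckFeatureSupport.
struct GpuCaps {
    bool raytracing = false;
    bool variableRateShading = false;
    bool meshShaders = false;
    bool samplerFeedback = false;
};

// True only when all four DX12 Ultimate features are present.
bool supportsDx12Ultimate(const GpuCaps& caps) {
    return caps.raytracing && caps.variableRateShading &&
           caps.meshShaders && caps.samplerFeedback;
}

// Choose one render path per feature. Missing features select a fallback
// path (the labels are illustrative), so the game still runs.
std::vector<std::string> chooseRenderPaths(const GpuCaps& caps) {
    std::vector<std::string> paths;
    paths.push_back(caps.raytracing ? "raytraced shadows" : "shadow maps");
    paths.push_back(caps.variableRateShading ? "variable rate shading"
                                             : "uniform shading rate");
    paths.push_back(caps.meshShaders ? "mesh shader geometry"
                                     : "vertex shader geometry");
    paths.push_back(caps.samplerFeedback ? "feedback-driven streaming"
                                         : "heuristic streaming");
    assert(paths.size() == 4);  // exactly one path chosen per feature
    return paths;
}
```

Checking features one at a time, rather than only testing for the full bundle, is what lets partially capable hardware keep some of the benefits.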

Amplifying a Virtuous Cycle

As any gamer would attest, there are few higher virtues than the appreciation of a well-crafted and beautiful game. DX12 Ultimate creates unprecedented opportunities for the entire gaming ecosystem, creating a self-reinforcing virtuous cycle that results in better gaming experiences!

The cycle below describes the general graphics improvement process in games that has occurred for many years. As new hardware (or a new console generation) slowly reaches market saturation, the number of addressable sockets with next-generation capable graphics features increases. As the number of sockets with these features increases, the number of game studios willing to adopt the new features likewise increases, until finally, market saturation of hardware occurs, and most game studios adopt the features.

Prior to DX12 Ultimate, there was limited overlap between these two cycles. Even when hardware was similar, the software interfaces were quite dissimilar, discouraging aligned adoption by developers.

By unifying the graphics platform across PC and Xbox Series X, DX12 Ultimate serves as a force multiplier for the entire gaming ecosystem. No longer do the cycles operate independently! Instead, they now combine synergistically: when Xbox Series X releases, there will already be many millions of DX12 Ultimate PC graphics cards in the world with the same feature set, catalyzing a rapid adoption of new features, and when Xbox Series X brings a wave of new console gamers, PC will likewise benefit from this vast surge of new DX12 Ultimate capable hardware!

The result? An adrenaline shot to new feature adoption, groundbreaking graphics in the hands of gamers more quickly than ever before!

For more information about how our hardware partners are supporting DX12 Ultimate, see these articles by AMD and NVIDIA:

- https://community.amd.com/community/gaming/blog/2020/03/19/powering-next-generation-gaming-visuals-with-amd-rdna-2-and-directx-12-ultimate

- https://www.nvidia.com/en-us/geforce/news/geforce-rtx-ready-for-directx-12-ultimate

DirectX 12 Ultimate Deep Dive

Now, join us for a deeper look under the hood of DirectX 12 Ultimate to learn how these features work. Some of this info is a bit technical, so if you have further questions, we invite you to join our Discord and ask!

DirectX Raytracing 1.1

DirectX Raytracing (DXR) brings a new level of graphics realism to video games, previously only achievable in the movie industry. The effects achievable by DXR feel more real, because in a sense they are more real: DXR traces paths of light with true-to-life physics calculations, which is a far more accurate simulation than the heuristics-based calculations used previously.

Titles that use DXR 1.0 have shown an unprecedented level of visual quality since we unveiled it. We built DXR 1.1 in response to developer feedback, giving developers even more tools with which to use DXR.

DXR 1.1 is an incremental addition on top of DXR 1.0, adding three major new capabilities:

- GPU Work Creation now allows Raytracing. This enables shaders on the GPU to invoke raytracing without an intervening round-trip back to the CPU. This ability is useful for adaptive raytracing scenarios like shader-based culling / sorting / classification / refinement. Basically, scenarios that prepare raytracing work on the GPU and then immediately spawn it.

- Streaming engines can more efficiently load new raytracing shaders as needed when the player moves around the world and new objects become visible.

- Inline raytracing is an alternative form of raytracing that gives developers the option to drive more of the raytracing process, as opposed to handing work scheduling entirely to the system (dynamic-shading). It is available in any shader stage, including compute shaders, pixel shaders, etc. Both the dynamic-shading and inline forms of raytracing use the same opaque acceleration structures.

When to use inline raytracing

Inline raytracing can be useful for many reasons:

- Perhaps the developer knows their scenario is simple enough that the overhead of dynamic shader scheduling is not worthwhile, for example a well-constrained way of calculating shadows.

- It could be convenient or efficient to query an acceleration structure from a shader stage that doesn’t support dynamic-shader-based rays, such as a compute shader or pixel shader.

- It might be helpful to combine dynamic-shader-based raytracing with the inline form. Some raytracing shader stages, like intersection shaders and any hit shaders, don’t even support tracing rays via dynamic-shader-based raytracing. But the inline form is available everywhere.

- Another combination is to switch to the inline form for simple recursive rays. This enables the app to declare there is no recursion for the underlying raytracing pipeline, given inline raytracing is handling recursive rays. The simpler dynamic scheduling burden on the system can yield better efficiency.

Scenarios with many complex shaders will run better with dynamic-shader-based raytracing, as opposed to using massive inline raytracing uber-shaders. Meanwhile, scenarios that have a minimal shading complexity and/or very few shaders will run better with inline raytracing.
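To make the "well-constrained shadow" case concrete, here is a CPU-side C++ sketch of the inline style: the caller drives the traversal loop itself and stops at the first occluder, with no hit shaders scheduled by the system. It is only an analogy. The sphere list stands in for an acceleration structure, and `inShadow`, `rayHitsSphere`, and the small epsilon are assumptions of this sketch, not part of DXR. In HLSL, the corresponding construct is a `RayQuery` object whose loop the shader steps through.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

struct Vec3 { float x, y, z; };
static Vec3 sub(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
static float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

struct Sphere { Vec3 center; float radius; };

// Ray/sphere test for the ray origin + t * dir, with dir of unit length.
// Reports a hit only when an intersection lies in (tMin, tMax).
bool rayHitsSphere(Vec3 origin, Vec3 dir, const Sphere& s, float tMin, float tMax) {
    const Vec3 oc = sub(origin, s.center);
    const float b = dot(oc, dir);
    const float c = dot(oc, oc) - s.radius * s.radius;
    const float disc = b * b - c;  // discriminant of t^2 + 2bt + c = 0
    if (disc < 0.0f) return false;
    const float root = std::sqrt(disc);
    const float t0 = -b - root;
    const float t1 = -b + root;
    return (t0 > tMin && t0 < tMax) || (t1 > tMin && t1 < tMax);
}

// Inline-style shadow query: the caller owns the loop and accepts the first
// hit, so no per-object shader is ever scheduled.
bool inShadow(Vec3 point, Vec3 lightPos, const std::vector<Sphere>& occluders) {
    const Vec3 toLight = sub(lightPos, point);
    const float dist = std::sqrt(dot(toLight, toLight));
    assert(dist > 0.0f);  // the light must not coincide with the shaded point
    const Vec3 dir = {toLight.x / dist, toLight.y / dist, toLight.z / dist};
    for (const Sphere& s : occluders) {
        // 1e-3f is an assumed epsilon that avoids self-intersection.
        if (rayHitsSphere(point, dir, s, 1e-3f, dist)) return true;
    }
    return false;
}
```

Because the only question is "is anything in the way?", there is no material shading to dispatch, which is the kind of case where the overhead of dynamic shader scheduling buys nothing.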

If the above all seems quite complicated, well, it is! The high-level takeaway is that both the new inline raytracing and the original dynamic-shader-based raytracing are valuable for different purposes. As of DXR 1.1, developers not only have the choice of either approach, but can even combine them both within a single renderer. Hybrid approaches are aided by the fact that both flavors of DXR raytracing share the same acceleration structure format, and are driven by the same underlying traversal state machine.

Best of all, gamers with DX12 Ultimate hardware can be assured that no matter what kind of Raytracing solution the developer chooses to use, they will have a great experience.

Variable Rate Shading

Variable Rate Shading (VRS) allows developers to selectively vary a game’s shading rate. This lets them ‘dial up’ the GPU power in more important parts of the game for better visuals and ‘dial back’ the GPU power in less important areas of a game for better speed. Variable Rate Shading also has the advantage of being relatively low cost to implement for developers. More details on Variable Rate Shading can be found in our previous blog post.
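One way to picture the "dial up, dial back" decision is a per-tile rate image derived from the previous frame. The C++ sketch below assigns a coarser rate to tiles with little luminance contrast. The `ShadingRate` names, the contrast thresholds, and the tile layout are assumptions made for illustration; they are not the D3D12 enums, and a real renderer would tune its own heuristic.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical rate names: coarser rates shade once per NxN pixel block.
enum class ShadingRate { Full1x1, Coarse2x2, Coarse4x4 };

// Assumed thresholds on luminance contrast (max minus min inside a tile).
ShadingRate pickRate(float contrast) {
    if (contrast > 0.25f) return ShadingRate::Full1x1;    // detailed area
    if (contrast > 0.05f) return ShadingRate::Coarse2x2;  // moderate detail
    return ShadingRate::Coarse4x4;                        // nearly flat area
}

// Builds one shading rate per tileSize x tileSize block of a row-major
// luminance image. Tiles on the right and bottom edges may be partial.
std::vector<ShadingRate> buildRateImage(const std::vector<float>& luma,
                                        int width, int height, int tileSize) {
    assert(width > 0 && height > 0 && tileSize > 0);
    assert(luma.size() == static_cast<std::size_t>(width) * height);
    const int tilesX = (width + tileSize - 1) / tileSize;
    const int tilesY = (height + tileSize - 1) / tileSize;
    std::vector<ShadingRate> rates(static_cast<std::size_t>(tilesX) * tilesY);
    for (int ty = 0; ty < tilesY; ++ty) {
        for (int tx = 0; tx < tilesX; ++tx) {
            const int yEnd = std::min((ty + 1) * tileSize, height);
            const int xEnd = std::min((tx + 1) * tileSize, width);
            float lo = luma[static_cast<std::size_t>(ty * tileSize) * width + tx * tileSize];
            float hi = lo;
            for (int y = ty * tileSize; y < yEnd; ++y) {
                for (int x = tx * tileSize; x < xEnd; ++x) {
                    const float v = luma[static_cast<std::size_t>(y) * width + x];
                    lo = std::min(lo, v);
                    hi = std::max(hi, v);
                }
            }
            rates[static_cast<std::size_t>(ty) * tilesX + tx] = pickRate(hi - lo);
        }
    }
    return rates;
}
```

Flat regions such as sky or fog end up at the coarse rate, while edges and high-frequency texture keep full-rate shading.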

Mesh Shaders

Mesh Shaders give developers more programmability than ever before. By bringing the full power of generalized GPU compute to the geometry pipeline, mesh shaders allow developers to build more detailed and dynamic worlds than ever before.

Prior to mesh shaders, the GPU geometry pipeline hid the parallel nature of GPU hardware execution behind a simplified programming abstraction which only gave developers access to seemingly linear shader functions. For instance, the developer writes a vertex shader function that is called once for each vertex in a model, implying serial execution. However, behind the scenes, the hardware packs adjacent vertices to fill a SIMD wave, then executes 32 or 64 vertex shader functions in parallel on a single shader core. This model has worked extremely well for many years, but it is leaving performance and flexibility on the table by hiding the details of what the hardware is really doing from developers.

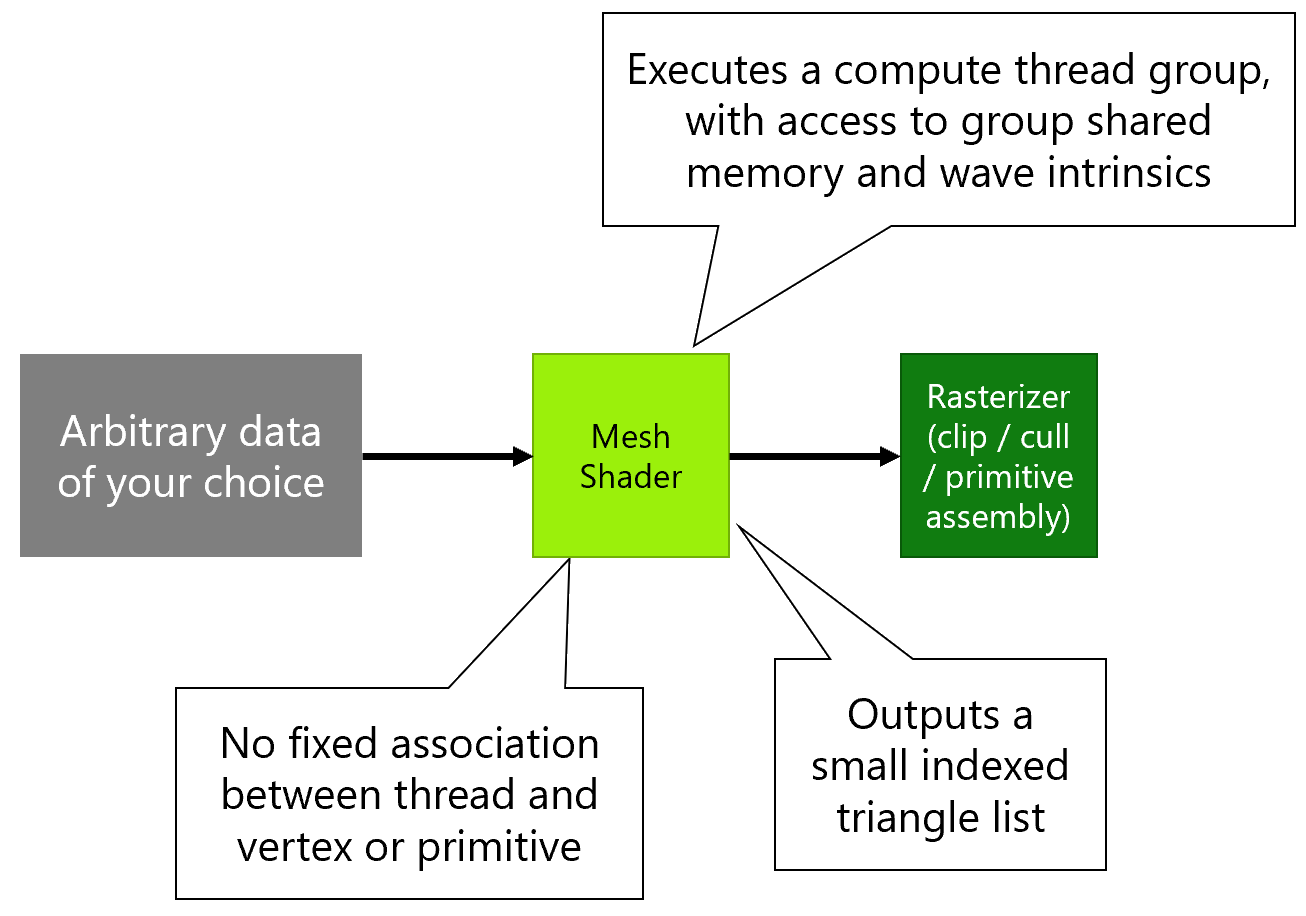

Mesh shaders change this by making geometry processing behave more like compute shaders. Rather than a single function that shades one vertex or one primitive, mesh shaders operate across an entire compute thread group, with access to group shared memory and advanced compute features such as cross-lane wave intrinsics that provide even more fine grained control over actual hardware execution. All these threads work together to shade a small indexed triangle list, called a ‘meshlet’. Typically there will be a phase of the mesh shader where each thread is working on a separate vertex, then another phase where each thread works on a separate primitive – but this model is completely flexible allowing data to be shared across threads, new vertices or primitives created as needed, existing primitives clipped or culled, etc.

Along with this new flexibility of thread allocation comes a flexibility of input data formats. Mesh shader no longer uses the Input Assembler block, which was previously responsible for fetching index and vertex data from memory. Instead, shader code is free to read whatever data is needed from any format it likes. This enables novel new techniques such as index buffer compression, or the use of multiple different index buffers for different channels of vertex data. Such approaches can reduce memory usage and also reduce the memory bandwidth used during rendering, thus boosting performance.
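The meshlet idea and the index compression mentioned above can be sketched together on the CPU. The C++ below greedily splits an ordinary indexed triangle list into meshlets, each with its own short vertex list and 8-bit local indices (one byte per index instead of four). The `Meshlet` layout, the function name, and the greedy in-order strategy are assumptions of this sketch; production meshlet builders also optimize for spatial locality and vertex reuse.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

struct Meshlet {
    std::vector<std::uint32_t> vertices;     // unique global vertex indices
    std::vector<std::uint8_t> localIndices;  // 3 per triangle, into `vertices`
};

// Greedy split of a triangle list into meshlets with at most maxVerts unique
// vertices and maxTris triangles each. maxVerts <= 256 keeps every local
// index representable in one byte.
std::vector<Meshlet> buildMeshlets(const std::vector<std::uint32_t>& indices,
                                   std::size_t maxVerts, std::size_t maxTris) {
    assert(indices.size() % 3 == 0);
    assert(maxVerts >= 3 && maxVerts <= 256 && maxTris >= 1);
    std::vector<Meshlet> meshlets;
    Meshlet current;
    for (std::size_t i = 0; i < indices.size(); i += 3) {
        // Count corners not yet in the meshlet (a degenerate triangle may be
        // over-counted, which only makes the split slightly conservative).
        std::size_t newVerts = 0;
        for (std::size_t k = 0; k < 3; ++k) {
            const std::vector<std::uint32_t>& v = current.vertices;
            if (std::find(v.begin(), v.end(), indices[i + k]) == v.end()) ++newVerts;
        }
        const bool full = current.vertices.size() + newVerts > maxVerts ||
                          current.localIndices.size() / 3 >= maxTris;
        if (full) {
            meshlets.push_back(current);
            current = Meshlet();
        }
        // Append the triangle, reusing vertices already in the meshlet.
        for (std::size_t k = 0; k < 3; ++k) {
            std::vector<std::uint32_t>& v = current.vertices;
            std::vector<std::uint32_t>::iterator it =
                std::find(v.begin(), v.end(), indices[i + k]);
            if (it == v.end()) {
                v.push_back(indices[i + k]);
                it = v.end() - 1;
            }
            current.localIndices.push_back(static_cast<std::uint8_t>(it - v.begin()));
        }
    }
    if (!current.localIndices.empty()) meshlets.push_back(current);
    return meshlets;
}
```

A mesh shader thread group would then fetch `vertices` and `localIndices` for its meshlet directly from buffers, which is possible precisely because the Input Assembler is no longer in the way.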

Although more flexible than the previous geometry pipeline, the mesh shader model is also much simpler:

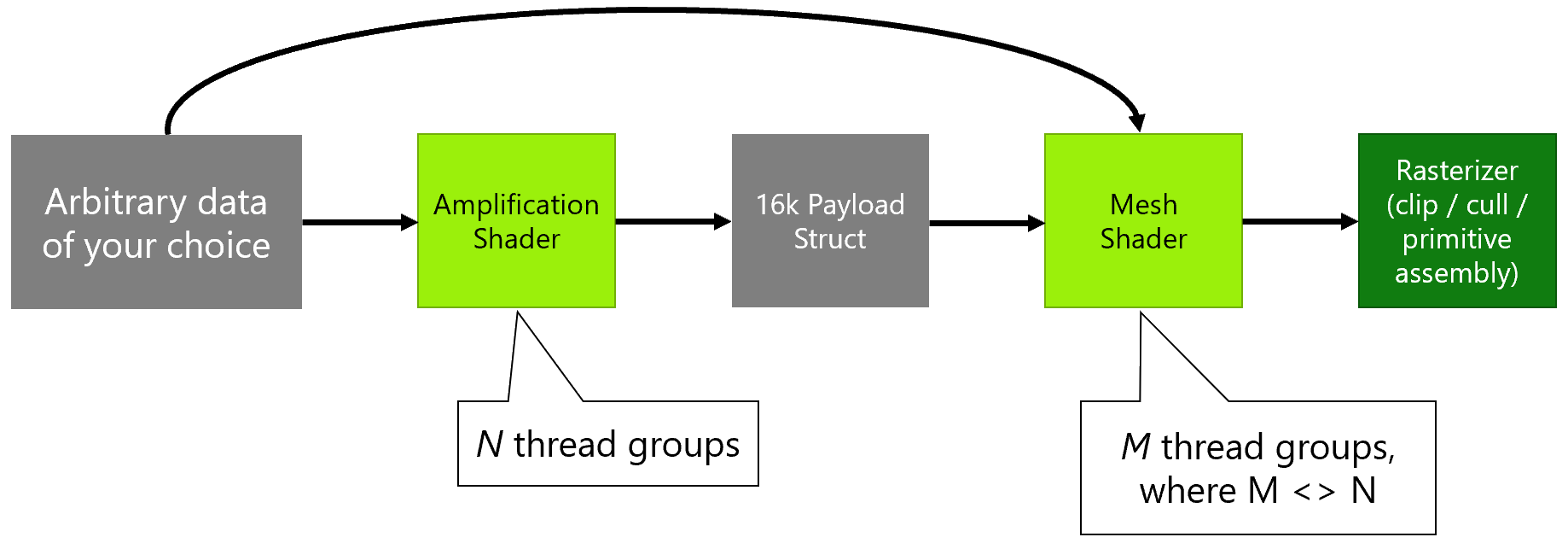

Along with mesh shaders comes an optional new shader stage called the Amplification Shader. This runs before the mesh shader, performs some computations, determines how many mesh shader thread groups are needed, and then launches that many mesh shaders.

Amplification shaders are especially useful for culling, as they can determine which meshlets are visible, testing each set of between 32 and 256 triangles against a geometric bounding volume, normal cone, or more advanced techniques such as portal visibility planes, before deciding whether to launch a mesh shader thread group for that meshlet. Previously, culling was typically performed on a coarser per-mesh level to decide whether to draw an object at all, and also on a finer per-triangle level at the end of the geometry pipeline. This new intermediate level of culling improves performance when drawing models that are only partially occluded. For instance, if part of a character is on screen while just one arm is not, an amplification shader can cull that entire arm after much less computation than it would have taken to shade all the triangles within it.
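The normal cone test can be sketched in a few lines. The C++ below is a CPU-side stand-in for what an amplification shader might compute per meshlet: if every triangle normal lies within a cone that points away from the camera, the whole meshlet is back-facing and no mesh shader group needs to be launched for it. The `MeshletBounds` layout, the function names, and the conservative inequality are assumptions of this sketch, and it further assumes the cone half-angle is at most 90 degrees and that normals point out of front faces.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>
#include <vector>

struct Vec3 { float x, y, z; };
static Vec3 sub(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
static float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Hypothetical per-meshlet culling data, precomputed offline.
struct MeshletBounds {
    Vec3 center;     // bounding sphere center
    float radius;    // bounding sphere radius
    Vec3 coneAxis;   // unit axis of the cone containing all triangle normals
    float coneSin;   // sine of the cone half-angle, in [0, 1]
};

// Conservative test: true only if every point of the bounding sphere sees
// the cone axis at an angle of at most (90 degrees - half-angle), which
// means every triangle in the meshlet faces away from the camera.
bool isBackfacing(const MeshletBounds& m, Vec3 camera) {
    assert(m.coneSin >= 0.0f && m.coneSin <= 1.0f);
    const Vec3 toCenter = sub(m.center, camera);
    const float dist = std::sqrt(dot(toCenter, toCenter));
    return dot(toCenter, m.coneAxis) - m.radius >= m.coneSin * (dist + m.radius);
}

// Amplification-style pass: returns the meshlets that still need a mesh
// shader thread group. The result size is the number of groups to launch.
std::vector<std::uint32_t> selectVisibleMeshlets(const std::vector<MeshletBounds>& meshlets,
                                                 Vec3 camera) {
    std::vector<std::uint32_t> visible;
    for (std::uint32_t i = 0; i < meshlets.size(); ++i) {
        if (!isBackfacing(meshlets[i], camera)) visible.push_back(i);
    }
    return visible;
}
```

A fuller version would add a frustum test against the same bounding sphere; the point here is only that one cheap test per meshlet can replace shading dozens or hundreds of triangles.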

Sampler Feedback

Sampler Feedback enables better visual quality, shorter load time, and less stuttering by providing detailed information to enable developers to only load in textures when needed.

Suppose you are a game developer shading a complicated 3D scene. The camera moves swiftly throughout the scene, causing some objects to be moved into different levels of detail. Since you need to aggressively optimize for memory, you bind resources to cope with the demand for different LODs. Perhaps you use a texture streaming system; perhaps it uses tiled resources to keep those gigantic 4K mip 0s non-resident if you don’t need them. Anyway, you have a shader which samples a mipped texture using A Very Complicated sampling pattern. Pick your favorite one, say anisotropic.

The sampling in this shader has you asking some questions.

What mip level did it ultimately sample? Seems like a very basic question. In a world before Sampler Feedback there’s no easy way to know. You could cobble together a heuristic. You can get to thinking about the sampling pattern, and make some educated guesses. But 1) You don’t have time for that, and 2) there’s no way it’d be 100% reliable.
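For a sense of what such a cobbled-together heuristic looks like, the C++ sketch below estimates a mip level from screen-space UV derivatives using the textbook isotropic formula, log2 of the longest texel-space footprint axis. The function name and parameters are assumptions. It deliberately ignores anisotropic filtering, LOD bias, and hardware-specific rounding, which is exactly why a guess of this kind cannot be fully reliable.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Estimates the mip level an isotropic sampler would choose, given the UV
// derivatives per screen pixel in x and y. This is an approximation, not
// what any particular GPU actually computes.
float estimateMipLevel(float dudx, float dvdx, float dudy, float dvdy,
                       float texWidth, float texHeight, int mipCount) {
    assert(mipCount >= 1);
    assert(texWidth > 0.0f && texHeight > 0.0f);
    // Footprint of one screen pixel measured in mip 0 texels.
    const float lenX = std::hypot(dudx * texWidth, dvdx * texHeight);
    const float lenY = std::hypot(dudy * texWidth, dvdy * texHeight);
    const float rho = std::max(lenX, lenY);
    if (rho <= 1.0f) return 0.0f;  // magnification: most detailed mip
    const float lod = std::log2(rho);
    return std::min(lod, static_cast<float>(mipCount - 1));
}
```

With an anisotropic sampler the hardware may pick a more detailed mip than this estimate and take several samples along the long axis, so a streaming system relying on the estimate could leave needed data non-resident.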

Where exactly in the resource did it sample? More specifically, what you really need to know is: which tiles? Could be in the top left corner, or right in the middle of the texture. Your streaming system would really benefit from this so that you’d know which mips to load up next.

Sampler feedback solves this by allowing a shader to efficiently query what part of a texture would have been needed to satisfy a sampling request, without actually carrying out the sample operation. This information can then be fed back into the game’s asset streaming system, allowing it to make more intelligent, precise decisions about what data to stream in next. In conjunction with the D3D12 tiled resources feature, this allows games to render larger, more detailed textures while using less video memory.
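How the fed-back information might drive a streaming system can be sketched as a comparison of two small per-tile maps: the most detailed mip each tile was asked for, and the most detailed mip currently resident. The C++ below is a hypothetical CPU-side consumer. The `TileLoad` struct, the function name, and the use of 0xFF as a "not requested" sentinel are assumptions of this sketch rather than a description of the D3D12 feedback format.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Assumed sentinel meaning "no sample touched this tile this frame".
const std::uint8_t kNotRequested = 0xFF;

struct TileLoad {
    std::size_t tile;       // index of the tile in the feedback map
    std::uint8_t firstMip;  // most detailed mip to stream in
    std::uint8_t lastMip;   // least detailed mip to stream in (inclusive)
};

// Lower mip numbers are more detailed. A tile needs streaming only when the
// requested mip is more detailed than anything currently resident.
std::vector<TileLoad> planStreaming(const std::vector<std::uint8_t>& requestedMinMip,
                                    const std::vector<std::uint8_t>& residentMinMip) {
    assert(requestedMinMip.size() == residentMinMip.size());
    std::vector<TileLoad> loads;
    for (std::size_t i = 0; i < requestedMinMip.size(); ++i) {
        const std::uint8_t requested = requestedMinMip[i];
        if (requested == kNotRequested) continue;       // tile was never sampled
        if (requested >= residentMinMip[i]) continue;   // already detailed enough
        TileLoad load = {i, requested,
                         static_cast<std::uint8_t>(residentMinMip[i] - 1)};
        loads.push_back(load);
    }
    return loads;
}
```

The same two maps could also be read in the other direction, to find tiles whose resident detail exceeds what was requested and which are therefore candidates for eviction.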

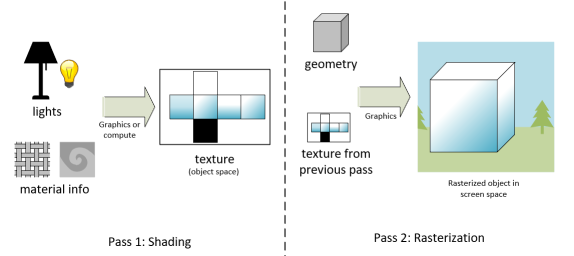

Sampler feedback also enables Texture-space shading (TSS), a rendering technique which de-couples the shading of an object in world space from the rasterization of the shape of that object to the final target.

TSS is a technique that allows game developers to do expensive lighting computations in object space, and write them to a texture— for example, something that looks like a UVW unwrapping of the object. Since nothing is being rasterized the shading can be done using compute, without the graphics pipeline at all. Then, in a separate step, bind the texture and rasterize to screen space, performing a dead simple sample. This approach reduces aliasing and allows computing lighting less often than rasterization. Decoupling these two rates allows the use of more sophisticated lighting techniques at higher framerates.

Setup of a scene using texture-space shading

One obstacle in getting TSS to work well is figuring out what in object space to shade for each object. Everything? That would hardly be efficient. What if only the left-hand side of an object is visible? With the power of sampler feedback, the rasterization step can simply record what texels are being requested and only perform the application’s expensive lighting computation on those.
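The record-then-shade idea can be shown with a toy two-pass loop in C++. The first function plays the role sampler feedback would play, marking which texels the rasterization pass asked for. The second runs the expensive lighting function only on marked texels. The function names, the flat one-dimensional texture, and the `std::function` callback are all assumptions made to keep the sketch small.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <vector>

// Pass 1 (stand-in for sampler feedback): mark every texel that the
// rasterization pass sampled. Repeated requests for a texel collapse to one mark.
void recordRequests(const std::vector<std::size_t>& sampledTexels,
                    std::vector<bool>& requested) {
    for (std::size_t texel : sampledTexels) {
        assert(texel < requested.size());
        requested[texel] = true;
    }
}

// Pass 2: run the expensive lighting function only where it was requested.
// Returns how many texels were actually shaded.
std::size_t shadeRequested(const std::vector<bool>& requested,
                           std::vector<float>& texture,
                           const std::function<float(std::size_t)>& shade) {
    assert(requested.size() == texture.size());
    std::size_t shaded = 0;
    for (std::size_t i = 0; i < requested.size(); ++i) {
        if (!requested[i]) continue;  // never sampled, so skip the lighting cost
        texture[i] = shade(i);
        ++shaded;
    }
    return shaded;
}
```

If only the left-hand side of an object is visible, only the texels on that side get marked, and the lighting cost scales with what is on screen rather than with the size of the texture.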

Now that we’ve scratched the surface…

If you’ve made it through our light reading and are wondering when we’re going to get to the technical stuff, we’ve written detailed blog posts for developers about DirectX Raytracing 1.1, Variable Rate Shading, Mesh Shaders and Sampler Feedback, the four features inside of DX12 Ultimate.

For game developers, we have put together a detailed Getting Started with DirectX 12 Ultimate guide, which contains all of the details you need to get started on supporting DX12 Ultimate today, including how to join the Insiders program, the right build, SDK, drivers, etc. As always, we invite you to our active Discord channel, and we’re eager to help developers and gamers alike! discord.gg/directx
